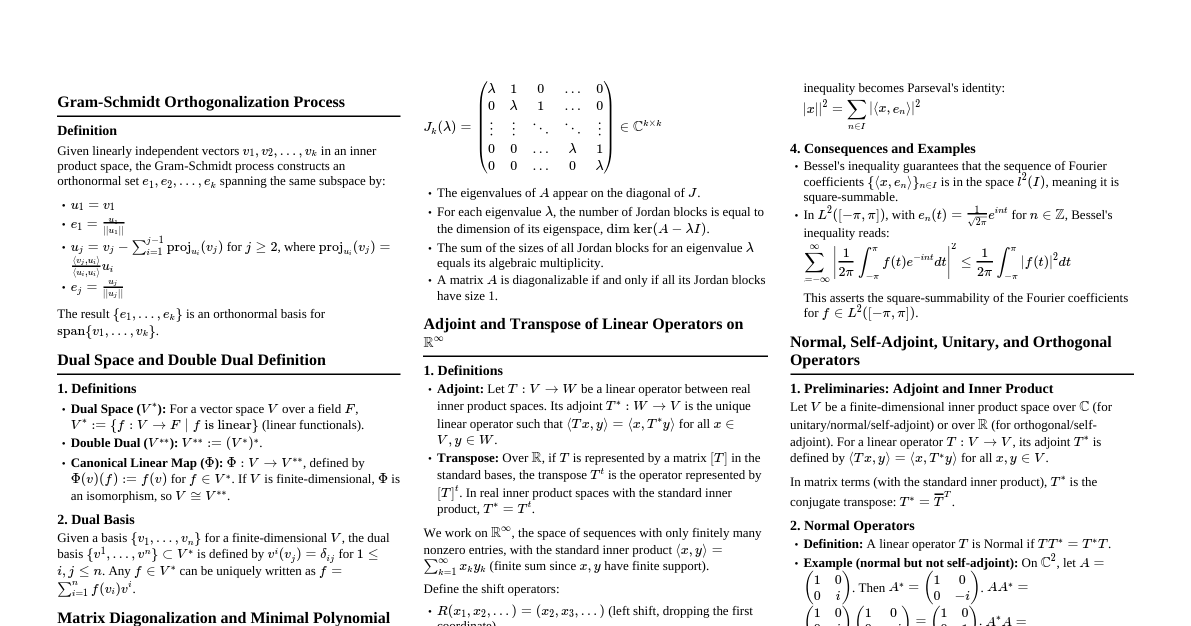

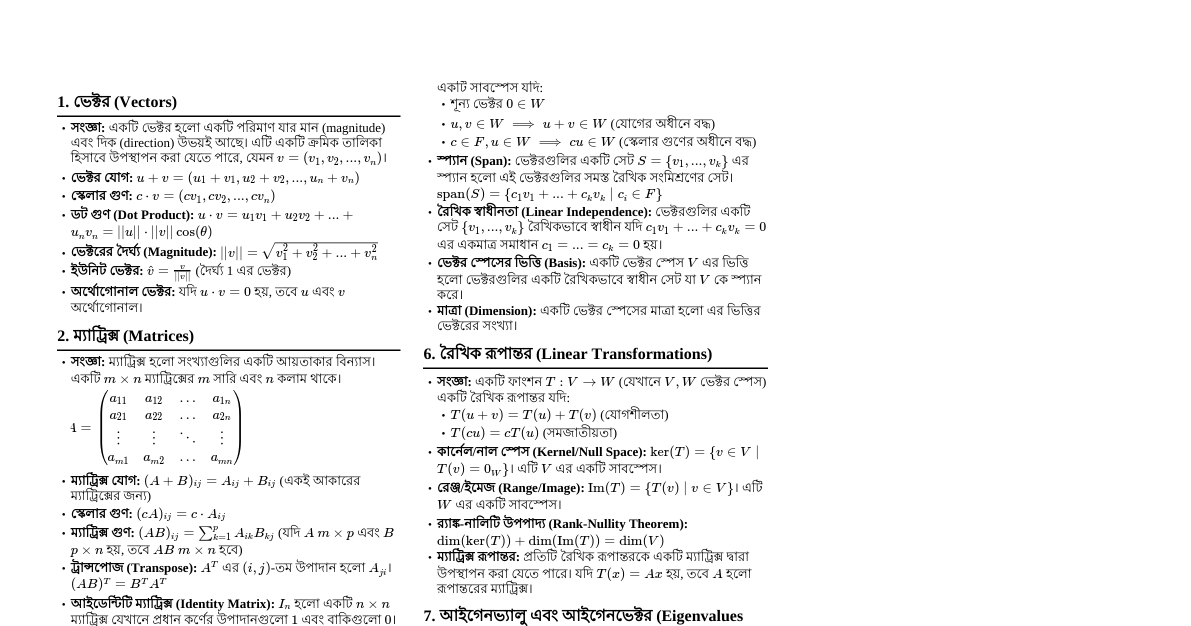

## Inner Product Spaces

### Inner Product

An inner product on a real vector space $V$ is a function that associates each pair of vectors $\mathbf{u}, \mathbf{v} \in V$ with a real number $\langle \mathbf{u}, \mathbf{v} \rangle$, satisfying the following axioms for all $\mathbf{u}, \mathbf{v}, \mathbf{w} \in V$ and scalar $c \in \mathbb{R}$:

- Commutativity: $\langle \mathbf{u}, \mathbf{v} \rangle = \langle \mathbf{v}, \mathbf{u} \rangle$
- Additivity: $\langle \mathbf{u} + \mathbf{v}, \mathbf{w} \rangle = \langle \mathbf{u}, \mathbf{w} \rangle + \langle \mathbf{v}, \mathbf{w} \rangle$
- Homogeneity: $\langle c\mathbf{u}, \mathbf{v} \rangle = c\langle \mathbf{u}, \mathbf{v} \rangle$
- Positive Definiteness: $\langle \mathbf{u}, \mathbf{u} \rangle \ge 0$, and $\langle \mathbf{u}, \mathbf{u} \rangle = 0$ if and only if $\mathbf{u} = \mathbf{0}$

### Inner Product Space

An inner product space is a vector space $V$ on which an inner product is defined.

Example: The Euclidean inner product (dot product) on $\mathbb{R}^n$. If $\mathbf{u} = (u_1, \dots, u_n)$ and $\mathbf{v} = (v_1, \dots, v_n)$, then $\langle \mathbf{u}, \mathbf{v} \rangle = \mathbf{u} \cdot \mathbf{v} = u_1v_1 + u_2v_2 + \dots + u_nv_n$.

### Norm of a Vector

The norm (or length) of a vector $\mathbf{x}$ in an inner product space is defined as $||\mathbf{x}|| = \sqrt{\langle \mathbf{x}, \mathbf{x} \rangle}$. If $\mathbf{x} = (x_1, x_2, \dots, x_n)$ in $\mathbb{R}^n$ with the Euclidean inner product, then $||\mathbf{x}|| = \sqrt{x_1^2 + x_2^2 + \dots + x_n^2}$.

### Gram-Schmidt Orthonormalization Theorem

Let $V$ be an inner product space and $S = \{\mathbf{v}_1, \mathbf{v}_2, \dots, \mathbf{v}_k\}$ be a basis for a subspace $W$ of $V$.
Then there exists an orthonormal basis $B = \{\mathbf{u}_1, \mathbf{u}_2, \dots, \mathbf{u}_k\}$ for $W$ constructed as follows:

- $\mathbf{w}_1 = \mathbf{v}_1$
- $\mathbf{w}_2 = \mathbf{v}_2 - \text{proj}_{\mathbf{w}_1} \mathbf{v}_2 = \mathbf{v}_2 - \frac{\langle \mathbf{v}_2, \mathbf{w}_1 \rangle}{\langle \mathbf{w}_1, \mathbf{w}_1 \rangle} \mathbf{w}_1$
- $\mathbf{w}_3 = \mathbf{v}_3 - \text{proj}_{\mathbf{w}_1} \mathbf{v}_3 - \text{proj}_{\mathbf{w}_2} \mathbf{v}_3 = \mathbf{v}_3 - \frac{\langle \mathbf{v}_3, \mathbf{w}_1 \rangle}{\langle \mathbf{w}_1, \mathbf{w}_1 \rangle} \mathbf{w}_1 - \frac{\langle \mathbf{v}_3, \mathbf{w}_2 \rangle}{\langle \mathbf{w}_2, \mathbf{w}_2 \rangle} \mathbf{w}_2$
- $\vdots$
- $\mathbf{w}_k = \mathbf{v}_k - \sum_{j=1}^{k-1} \frac{\langle \mathbf{v}_k, \mathbf{w}_j \rangle}{\langle \mathbf{w}_j, \mathbf{w}_j \rangle} \mathbf{w}_j$

Then, normalize each vector: $\mathbf{u}_i = \frac{\mathbf{w}_i}{||\mathbf{w}_i||}$ for $i = 1, \dots, k$. The set $B = \{\mathbf{u}_1, \dots, \mathbf{u}_k\}$ is an orthonormal basis for $W$.

## Linear Transformations

### Definition

A function $T: V \to W$ from a vector space $V$ to a vector space $W$ is called a linear transformation if for all $\mathbf{u}, \mathbf{v} \in V$ and any scalar $c$:

- $T(\mathbf{u} + \mathbf{v}) = T(\mathbf{u}) + T(\mathbf{v})$ (Additivity)
- $T(c\mathbf{u}) = cT(\mathbf{u})$ (Homogeneity)

### Range and Kernel (Null Space)

The range of $T$, denoted $R(T)$ or $\text{Im}(T)$, is the set of all vectors in $W$ that are images of vectors in $V$: $R(T) = \{ T(\mathbf{v}) \mid \mathbf{v} \in V \}$. It is a subspace of $W$.

The kernel (or null space) of $T$, denoted $K(T)$ or $\text{Null}(T)$, is the set of all vectors in $V$ that are mapped to the zero vector in $W$: $K(T) = \{ \mathbf{v} \in V \mid T(\mathbf{v}) = \mathbf{0}_W \}$. It is a subspace of $V$.

### Rank and Nullity

The rank of a linear transformation $T$ is the dimension of its range: $\text{rank}(T) = \dim(R(T))$.
The nullity of a linear transformation $T$ is the dimension of its kernel (null space): $\text{nullity}(T) = \dim(K(T))$.

### Rank-Nullity Theorem

If $T: V \to W$ is a linear transformation, where $V$ is a finite-dimensional vector space, then:

$$ \dim(V) = \text{rank}(T) + \text{nullity}(T) $$

In other words, the dimension of the domain is equal to the dimension of the range plus the dimension of the kernel.

## Multivariable Calculus: Extrema

### Chain Rule for Partial Differentiation

If $z = f(x, y)$ is a differentiable function of $x$ and $y$, and $x = g(t)$, $y = h(t)$ are differentiable functions of $t$, then $z$ is a differentiable function of $t$, and:

$$ \frac{dz}{dt} = \frac{\partial z}{\partial x} \frac{dx}{dt} + \frac{\partial z}{\partial y} \frac{dy}{dt} $$

If $z = f(x, y)$ where $x = g(u, v)$ and $y = h(u, v)$, then:

$$ \frac{\partial z}{\partial u} = \frac{\partial z}{\partial x} \frac{\partial x}{\partial u} + \frac{\partial z}{\partial y} \frac{\partial y}{\partial u} $$

$$ \frac{\partial z}{\partial v} = \frac{\partial z}{\partial x} \frac{\partial x}{\partial v} + \frac{\partial z}{\partial y} \frac{\partial y}{\partial v} $$

### Jacobian Determinant

For functions $u(x, y)$ and $v(x, y)$, the Jacobian with respect to $x$ and $y$ is:

$$ J(u, v) = \frac{\partial(u, v)}{\partial(x, y)} = \det \begin{pmatrix} \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} \\ \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} \end{pmatrix} = \frac{\partial u}{\partial x}\frac{\partial v}{\partial y} - \frac{\partial u}{\partial y}\frac{\partial v}{\partial x} $$

For functions $u(x, y, z)$, $v(x, y, z)$, and $w(x, y, z)$, the Jacobian with respect to $x$, $y$, and $z$ is:

$$ J(u, v, w) = \frac{\partial(u, v, w)}{\partial(x, y, z)} = \det \begin{pmatrix} \frac{\partial u}{\partial x} & \frac{\partial u}{\partial y} & \frac{\partial u}{\partial z} \\ \frac{\partial v}{\partial x} & \frac{\partial v}{\partial y} & \frac{\partial v}{\partial z} \\ \frac{\partial w}{\partial x} & \frac{\partial w}{\partial y} & \frac{\partial w}{\partial z} \end{pmatrix} $$

### Extrema of $f(x, y)$

Let $f(x, y)$ have continuous second partial derivatives.

#### Necessary Conditions for Maxima/Minima at $(a, b)$

If $f(x, y)$ has a local maximum or minimum at $(a, b)$, then the first partial derivatives must be zero at that point:

$$ \frac{\partial f}{\partial x}(a, b) = 0 \quad \text{and} \quad \frac{\partial f}{\partial y}(a, b) = 0 $$

Such a point $(a, b)$ is called a stationary point or critical point.

#### Sufficient Conditions for Maxima/Minima at $(a, b)$ (Second Derivative Test)

Let $(a, b)$ be a stationary point, and define the discriminant $D$ as:

$$ D(a, b) = f_{xx}(a, b)f_{yy}(a, b) - [f_{xy}(a, b)]^2 $$

- If $D(a, b) > 0$ and $f_{xx}(a, b) > 0$, then $f$ has a local minimum at $(a, b)$.
- If $D(a, b) > 0$ and $f_{xx}(a, b) < 0$, then $f$ has a local maximum at $(a, b)$.
- If $D(a, b) < 0$, then $f$ has a saddle point at $(a, b)$.
- If $D(a, b) = 0$, the test is inconclusive.

### Stationary Point

A point $(a, b)$ is a stationary point (or critical point) of $f(x, y)$ if $\frac{\partial f}{\partial x}(a, b) = 0$ and $\frac{\partial f}{\partial y}(a, b) = 0$. Stationary points are the candidates for local maxima, local minima, and saddle points.

### Saddle Point

A saddle point is a stationary point $(a, b)$ at which $f(x, y)$ has neither a local maximum nor a local minimum. At a saddle point, the function increases in some directions and decreases in others. This occurs when $D(a, b) < 0$.
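
To make the second derivative test concrete, here is a minimal Python sketch that classifies a stationary point from the values of $f_{xx}$, $f_{yy}$, and $f_{xy}$ there. The helper names `classify_stationary_point` and `second_partials`, and the sample function $f(x, y) = x^3 - 3x + y^2$, are illustrative assumptions rather than part of the original notes.

```python
def classify_stationary_point(fxx, fyy, fxy):
    """Classify a stationary point (a, b) from f_xx, f_yy, f_xy evaluated there."""
    d = fxx * fyy - fxy ** 2  # discriminant D(a, b)
    if d > 0 and fxx > 0:
        return "local minimum"
    if d > 0 and fxx < 0:
        return "local maximum"
    if d < 0:
        return "saddle point"
    return "inconclusive"  # D(a, b) = 0


# Hypothetical example: f(x, y) = x^3 - 3x + y^2.
# f_x = 3x^2 - 3 and f_y = 2y both vanish at (1, 0) and (-1, 0).
def second_partials(x, y):
    """Return (f_xx, f_yy, f_xy) for the example function, computed by hand."""
    return 6 * x, 2, 0


for point in [(1, 0), (-1, 0)]:
    print(point, classify_stationary_point(*second_partials(*point)))
# (1, 0) local minimum
# (-1, 0) saddle point
```

The second partial derivatives are hard-coded to keep the sketch dependency-free. For other functions they would need to be derived by hand or with a symbolic tool.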