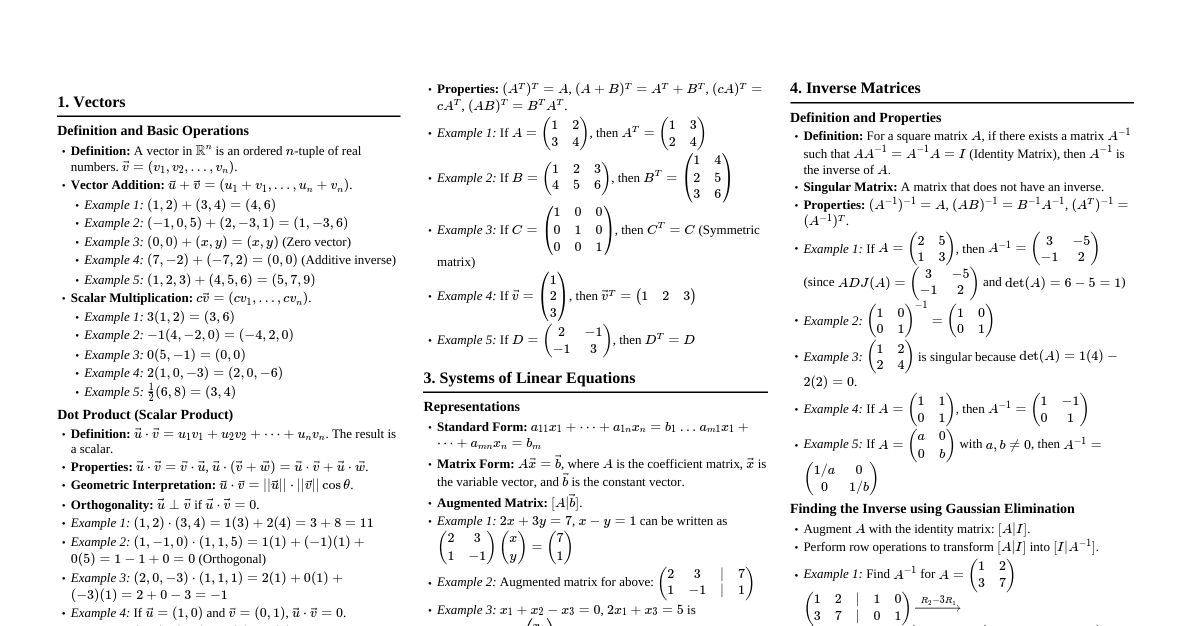

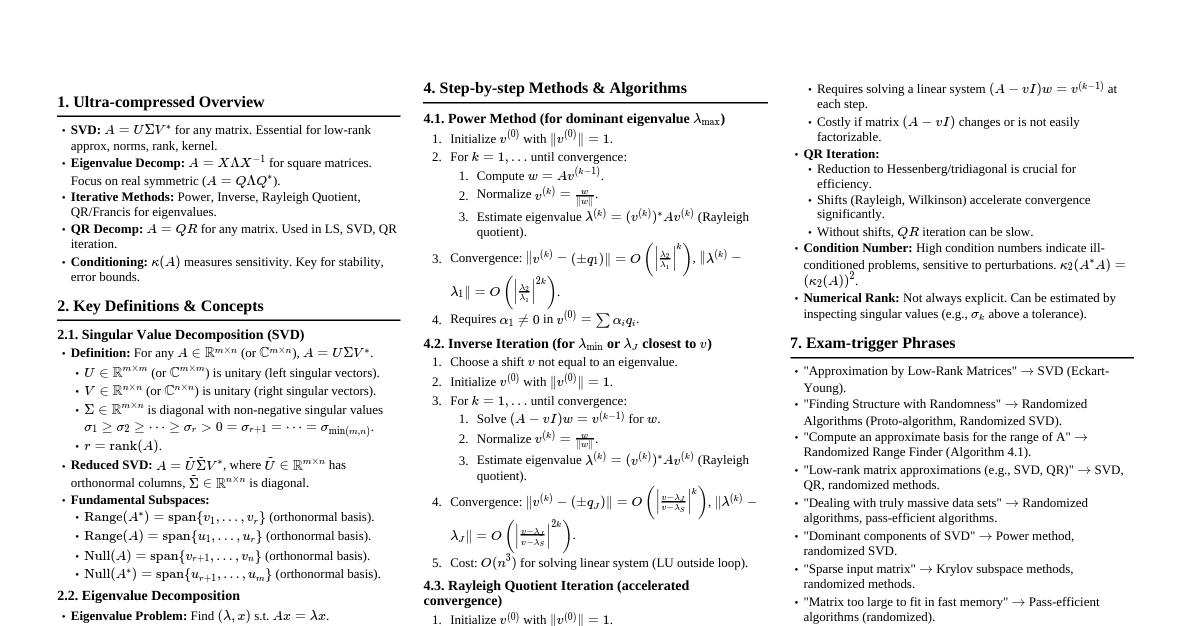

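## Unit I: Calculus

### Theorems & Rules

**Indeterminate forms:** Expressions such as $\frac{0}{0}$, $\frac{\infty}{\infty}$, $0 \cdot \infty$, $\infty - \infty$, $0^0$, $1^\infty$, and $\infty^0$.

**L'Hôpital's rule:** Suppose $\lim_{x \to c} \frac{f(x)}{g(x)}$ has the form $\frac{0}{0}$ or $\frac{\infty}{\infty}$, $f$ and $g$ are differentiable near $c$ with $g'(x) \neq 0$ there (except possibly at $c$), and $\lim_{x \to c} \frac{f'(x)}{g'(x)}$ exists (or is $\pm\infty$). Then $\lim_{x \to c} \frac{f(x)}{g(x)} = \lim_{x \to c} \frac{f'(x)}{g'(x)}$. The other indeterminate forms must first be rewritten as $\frac{0}{0}$ or $\frac{\infty}{\infty}$ (for example, by taking logarithms for $1^\infty$, $0^0$, and $\infty^0$).

**Rolle's theorem:** If $f(x)$ is continuous on $[a, b]$, differentiable on $(a, b)$, and $f(a) = f(b)$, then there exists at least one $c \in (a, b)$ such that $f'(c) = 0$.

**Lagrange's mean value theorem:** If $f(x)$ is continuous on $[a, b]$ and differentiable on $(a, b)$, then there exists at least one $c \in (a, b)$ such that $f'(c) = \frac{f(b) - f(a)}{b - a}$.

**Cauchy's mean value theorem:** If $f(x)$ and $g(x)$ are continuous on $[a, b]$, differentiable on $(a, b)$, and $g'(x) \neq 0$ for all $x \in (a, b)$, then there exists at least one $c \in (a, b)$ such that $\frac{f'(c)}{g'(c)} = \frac{f(b) - f(a)}{g(b) - g(a)}$.

### Series Expansions

**Taylor's theorem:** If $f$ is $n+1$ times differentiable on an interval containing $a$ and $x$, then $f(x) = \sum_{k=0}^{n} \frac{f^{(k)}(a)}{k!}(x-a)^k + R_n(x)$. If $f$ is infinitely differentiable and $R_n(x) \to 0$ as $n \to \infty$, then $f$ equals its Taylor series: $f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(a)}{n!}(x-a)^n = f(a) + f'(a)(x-a) + \frac{f''(a)}{2!}(x-a)^2 + \dots$

**Maclaurin's theorem:** The Taylor series with $a = 0$: $f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(0)}{n!}x^n = f(0) + f'(0)x + \frac{f''(0)}{2!}x^2 + \dots$

**Remainder term:** Denoted $R_n(x)$ or $R_n(x, a)$. In Lagrange form, $R_n(x) = \frac{f^{(n+1)}(\xi)}{(n+1)!}(x-a)^{n+1}$ for some $\xi$ between $a$ and $x$.

### Applications of Differential Calculus

**Maxima and minima:** Find the critical points, where $f'(x) = 0$ (or $f'(x)$ does not exist). Classify them with the first derivative test (sign change of $f'$) or the second derivative test: $f''(c) > 0$ gives a local minimum, $f''(c) < 0$ a local maximum, and $f''(c) = 0$ is inconclusive.

**Curvature:** For a curve $y = f(x)$, $\kappa = \frac{|y''|}{(1+(y')^2)^{3/2}}$; the radius of curvature is $\rho = \frac{1}{\kappa}$.

**Evolutes and involutes:**
- Evolute: the locus of the centers of curvature of a curve.
- Involute: a curve whose evolute is the given curve.

### Applications of Integral Calculus

**Beta function:** $B(x, y) = \int_0^1 t^{x-1}(1-t)^{y-1}\,dt = \frac{\Gamma(x)\Gamma(y)}{\Gamma(x+y)}$ for $x, y > 0$.

**Gamma function:** $\Gamma(z) = \int_0^\infty t^{z-1}e^{-t}\,dt$ for $z > 0$.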
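The Beta-Gamma identity above can be checked numerically. The sketch below is a minimal Python illustration, assuming real arguments $x, y \ge 1$ so that the integrand stays bounded on $[0, 1]$. It approximates the defining integral with a plain midpoint rule (an arbitrary choice of quadrature) and compares the result with the standard library's `math.gamma`. The function names `beta_midpoint` and `beta_via_gamma` are hypothetical.

```python
import math


def beta_midpoint(x: float, y: float, n: int = 100_000) -> float:
    """Approximate B(x, y) = integral_0^1 t^(x-1) (1-t)^(y-1) dt by the midpoint rule.

    Assumes x >= 1 and y >= 1, so the integrand is bounded on [0, 1].
    """
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * h  # midpoint of the i-th subinterval
        total += t ** (x - 1) * (1 - t) ** (y - 1)
    return total * h


def beta_via_gamma(x: float, y: float) -> float:
    """B(x, y) = Gamma(x) * Gamma(y) / Gamma(x + y)."""
    return math.gamma(x) * math.gamma(y) / math.gamma(x + y)


if __name__ == "__main__":
    # B(2, 3) = 1! * 2! / 4! = 1/12
    print(beta_midpoint(2, 3), beta_via_gamma(2, 3), 1 / 12)
```

For $B(2, 3)$ both values should agree with $\frac{1!\,2!}{4!} = \frac{1}{12}$ to many decimal places. For $x < 1$ or $y < 1$ the integrand is unbounded at an endpoint, and a quadrature that handles the singularity would be needed.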

**Gamma function properties:** $\Gamma(z+1) = z\Gamma(z)$, and $\Gamma(n+1) = n!$ for every non-negative integer $n$.

**Surface areas of revolution:** For $y = f(x) \ge 0$ on $[a, b]$ revolved about the x-axis, $S = 2\pi \int_a^b y \sqrt{1 + (\frac{dy}{dx})^2}\,dx$. For revolution about the y-axis (with $a \ge 0$), $S = 2\pi \int_a^b x \sqrt{1 + (\frac{dy}{dx})^2}\,dx$.

**Volumes of solids of revolution:** For the region under $y = f(x) \ge 0$ on $[a, b]$:
- Disk method (about the x-axis): $V = \pi \int_a^b [f(x)]^2\,dx$.
- Shell method (about the y-axis, with $a \ge 0$): $V = 2\pi \int_a^b x f(x)\,dx$.

## Unit II: Matrices & Linear Systems

### Matrix Operations

**Addition and scalar multiplication:** Element-wise; addition requires matrices of the same size.

**Matrix multiplication:** For $A$ of size $m \times n$ and $B$ of size $n \times p$, $(AB)_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj}$.

**Determinant:** $\det(A)$, defined for square matrices. For a $2 \times 2$ matrix $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $\det(A) = ad - bc$.

**Elementary transformations:** Swapping two rows (columns), multiplying a row (column) by a non-zero scalar, and adding a multiple of one row (column) to another.

**Inverse of a matrix:** $A^{-1} = \frac{1}{\det(A)} \text{adj}(A)$. A square matrix $A$ is invertible if and only if $\det(A) \neq 0$.

### Matrix Forms & Rank

**Rank of a matrix:** The maximum number of linearly independent rows (equivalently, columns). It equals the dimension of the column space and of the row space.

**Normal (canonical) form:** The matrix $\begin{pmatrix} I_r & 0 \\ 0 & 0 \end{pmatrix}$ obtained from $A$ by elementary row and column operations, where $r$ is the rank of $A$.

**Echelon form:**
- All non-zero rows are above any zero rows.
- The leading entry (pivot) of each non-zero row is in a column to the right of the leading entry of the row above it.
- All entries in a column below a leading entry are zero.

### Linear Systems of Equations

**Representation:** $Ax = b$.

**Linear independence:** A set of vectors $\{v_1, \dots, v_k\}$ is linearly independent if $c_1v_1 + \dots + c_kv_k = 0$ implies $c_1 = \dots = c_k = 0$.

**Cramer's rule:** For an $n \times n$ system $Ax = b$ with $\det(A) \neq 0$, $x_i = \frac{\det(A_i)}{\det(A)}$, where $A_i$ is the matrix formed by replacing the $i$-th column of $A$ with $b$.

**Gauss elimination:** Reduces the augmented matrix $[A|b]$ to row echelon form; the solution is then found by back substitution.
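Gauss elimination as described above can be sketched in Python. This is a minimal illustration, assuming a square, non-singular system. It uses the standard-library `fractions.Fraction` type (an implementation choice, not part of the method) so that the arithmetic is exact and any non-zero pivot can be used. The function name `gauss_solve` is hypothetical.

```python
from fractions import Fraction


def gauss_solve(A, b):
    """Solve Ax = b for a square, non-singular A by Gauss elimination.

    Forward elimination reduces [A|b] to row echelon form using only
    elementary row operations; back substitution then recovers x.
    """
    n = len(A)
    # Augmented matrix [A|b] with exact rational entries.
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Find a row at or below `col` with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]  # row swap
        # Subtract multiples of the pivot row to zero out entries below it.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the upper-triangular system.
    x = [Fraction(0)] * n
    for i in range(n - 1, -1, -1):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


if __name__ == "__main__":
    A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]
    b = [8, -11, -3]
    print([str(v) for v in gauss_solve(A, b)])  # ['2', '3', '-1']
```

The row swap when the current pivot is zero is one of the elementary transformations listed earlier. With floating-point data one would instead pick the largest available pivot in the column (partial pivoting) to limit rounding error.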

**Gauss-Jordan elimination:** Reduces the augmented matrix $[A|b]$ to reduced row echelon form, from which the solution can be read off directly.

## Unit III: Vector Spaces I

### Fundamentals of Vector Spaces

**Vector space:** A set $V$ with vector addition and scalar multiplication satisfying the 10 vector-space axioms.

**Subspace:** A subset $W$ of a vector space $V$ that is itself a vector space under the operations of $V$. Equivalently, $W$ contains the zero vector and is closed under addition and scalar multiplication.

**Linear span of a set:** The set of all linear combinations of a given set of vectors $\{v_1, \dots, v_k\}$, denoted $\text{span}\{v_1, \dots, v_k\}$.

**Basis:** A linearly independent set of vectors that spans the entire vector space.

**Dimension:** The number of vectors in any basis of the vector space (all bases have the same size).

### Linear Transformations (Maps)

**Definition:** A function $T: V \to W$ is a linear transformation if, for all $u, v \in V$ and every scalar $c$:
- $T(u+v) = T(u) + T(v)$
- $T(cu) = cT(u)$

**Range (image):** $\text{Im}(T) = \{w \in W \mid w = T(v) \text{ for some } v \in V\}$, a subspace of $W$.

**Kernel (null space):** $\text{Ker}(T) = \{v \in V \mid T(v) = 0_W\}$, a subspace of $V$.

**Rank of a linear map:** $\text{rank}(T) = \dim(\text{Im}(T))$.

**Nullity of a linear map:** $\text{nullity}(T) = \dim(\text{Ker}(T))$.

**Rank-nullity theorem:** For a linear transformation $T: V \to W$ with $V$ finite-dimensional, $\dim(V) = \text{rank}(T) + \text{nullity}(T)$.

**Inverse of a linear transformation:** $T: V \to W$ is invertible if there is a map $T^{-1}: W \to V$ with $T^{-1} \circ T = \text{id}_V$ and $T \circ T^{-1} = \text{id}_W$; this $T^{-1}$ is then also linear. $T$ is invertible if and only if $\text{Ker}(T) = \{0\}$ and $\text{Im}(T) = W$.

**Matrix associated with a linear map:** Given ordered bases $\{v_1, \dots, v_n\}$ of $V$ and $\{w_1, \dots, w_m\}$ of $W$, $T$ is represented by the $m \times n$ matrix whose $j$-th column holds the coordinates of $T(v_j)$ with respect to the basis of $W$.

**Composition of linear maps:** If $T_1: U \to V$ and $T_2: V \to W$ are linear maps, then $T_2 \circ T_1: U \to W$ is also linear. Using the same basis of $V$ for both maps, its matrix is the product $[T_2][T_1]$: the matrix of $T_1$, which acts first, appears on the right.
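The composition rule can be checked on a small example. The sketch below assumes the standard bases of $\mathbb{R}^2$ and $\mathbb{R}^3$ and two hypothetical maps $T_1$ and $T_2$ chosen only for illustration. Matrices are plain Python lists of rows, and `mat_mul` implements $(AB)_{ij} = \sum_k A_{ik} B_{kj}$ from Unit II.

```python
def mat_vec(M, v):
    """Apply the map with matrix M (a list of rows) to the coordinate vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def mat_mul(A, B):
    """Matrix product: (AB)_ij = sum_k A_ik * B_kj."""
    cols = list(zip(*B))  # columns of B
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


# Hypothetical example maps, written in the standard bases:
# T1: R^2 -> R^3, T1(x, y) = (x, x + y, 2y)
M1 = [[1, 0],
      [1, 1],
      [0, 2]]
# T2: R^3 -> R^2, T2(a, b, c) = (a - c, b + c)
M2 = [[1, 0, -1],
      [0, 1, 1]]

# Matrix of (T2 after T1): T1 acts first, so its matrix goes on the right.
M21 = mat_mul(M2, M1)

if __name__ == "__main__":
    v = [3, -2]
    print(M21)  # [[1, -2], [1, 3]]
    print(mat_vec(M2, mat_vec(M1, v)), mat_vec(M21, v))  # [7, -3] [7, -3]
```

Applying the two maps one after the other and applying the single product matrix give the same vector; reversing the product order would not even be defined here, since $M_1 M_2$ is $3 \times 3$ and describes $T_1 \circ T_2: \mathbb{R}^3 \to \mathbb{R}^3$ instead.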

## Unit IV: Vector Spaces II

### Eigenvalues & Eigenvectors

**Eigenvalue:** A scalar $\lambda$ such that, for a square matrix $A$, $Av = \lambda v$ for some non-zero vector $v$.

**Eigenvector:** A non-zero vector $v$ satisfying $Av = \lambda v$ for some eigenvalue $\lambda$.

**Characteristic equation:** $\det(A - \lambda I) = 0$; its solutions $\lambda$ are the eigenvalues of $A$.

**Eigenbasis:** A basis consisting entirely of eigenvectors of a linear operator.

### Special Matrices

**Symmetric matrix:** $A^T = A$.

**Skew-symmetric matrix:** $A^T = -A$.

**Orthogonal matrix:** $A^T A = A A^T = I$, so $A^{-1} = A^T$. Its columns (and rows) form an orthonormal set.

### Diagonalization

**Definition:** A matrix $A$ is diagonalizable if it is similar to a diagonal matrix, i.e., $A = PDP^{-1}$ for some invertible matrix $P$ and diagonal matrix $D$.

**Condition:** An $n \times n$ matrix $A$ is diagonalizable if and only if it has $n$ linearly independent eigenvectors. The columns of $P$ are these eigenvectors, and the diagonal entries of $D$ are the corresponding eigenvalues, in the same order.

### Inner Product Spaces

**Inner product:** A function $\langle u, v \rangle$ that maps two vectors to a scalar and satisfies:
- $\langle u, v \rangle = \overline{\langle v, u \rangle}$ (conjugate symmetry)
- $\langle u+v, w \rangle = \langle u, w \rangle + \langle v, w \rangle$ (additivity in the first argument)
- $\langle cu, v \rangle = c\langle u, v \rangle$ (homogeneity in the first argument)
- $\langle v, v \rangle \ge 0$, with $\langle v, v \rangle = 0 \iff v = 0$ (positive definiteness)

**Norm:** $\|v\| = \sqrt{\langle v, v \rangle}$.

**Orthogonal set:** A set of vectors $\{v_1, \dots, v_k\}$ such that $\langle v_i, v_j \rangle = 0$ for $i \neq j$.

**Orthonormal set:** An orthogonal set in which every vector has unit norm, i.e., $\|v_i\| = 1$.

**Orthogonal complement:** For a subspace $W$ of an inner product space $V$, $W^\perp = \{v \in V \mid \langle v, w \rangle = 0 \text{ for all } w \in W\}$.
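For a concrete case of the orthogonal complement, take $\mathbb{R}^3$ with the standard dot product and $W = \text{span}\{w_1, w_2\}$ with $w_1, w_2$ linearly independent. Then $\dim W^\perp = 3 - 2 = 1$, and the cross product $w_1 \times w_2$ is a non-zero vector orthogonal to both, so it spans $W^\perp$. The sketch below relies on this $\mathbb{R}^3$-specific shortcut (it does not carry over to other dimensions); the helper names are hypothetical.

```python
def dot(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))


def cross(u, v):
    """Cross product of two vectors in R^3."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def complement_of_plane(w1, w2):
    """Return a vector spanning W-perp for W = span{w1, w2} in R^3.

    Assumes w1 and w2 are linearly independent; their cross product is then
    non-zero and orthogonal to every vector in W.
    """
    n = cross(w1, w2)
    if all(c == 0 for c in n):
        raise ValueError("w1 and w2 are linearly dependent")
    return n


if __name__ == "__main__":
    w1, w2 = [1, 0, 1], [0, 1, 1]
    n = complement_of_plane(w1, w2)
    print(n, dot(n, w1), dot(n, w2))  # [-1, -1, 1] 0 0
```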

**Gram-Schmidt orthogonalization:** A process that converts a linearly independent set $\{v_1, \dots, v_k\}$ into an orthonormal set $\{u_1, \dots, u_k\}$ with the same span, where $\text{proj}_u v = \frac{\langle v, u \rangle}{\langle u, u \rangle} u$:
- $u_1 = \frac{v_1}{\|v_1\|}$
- $u_2 = \frac{v_2 - \text{proj}_{u_1}v_2}{\|v_2 - \text{proj}_{u_1}v_2\|}$
- In general, $w_k = v_k - \sum_{j=1}^{k-1} \text{proj}_{u_j} v_k$ and $u_k = \frac{w_k}{\|w_k\|}$ for $k = 2, \dots, k$ up to the last vector.
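The Gram-Schmidt process above can be sketched in Python for real vectors, assuming the standard dot product as the inner product (any other inner product would replace `dot`). Because each $u_j$ already has unit norm, $\text{proj}_{u_j} v = \langle v, u_j \rangle u_j$, so each step computes $w_k = v_k - \sum_{j<k} \langle v_k, u_j \rangle u_j$ and then normalizes. The tolerance `tol` used to detect dependent input is an arbitrary, hypothetical choice.

```python
import math


def dot(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))


def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list u_1, ..., u_k spanning the same space as
    the linearly independent input vectors v_1, ..., v_k."""
    basis = []
    for v in vectors:
        # w_k = v_k - sum_j <v_k, u_j> u_j  (remove projections onto earlier u_j)
        w = [float(x) for x in v]
        for u in basis:
            c = dot(v, u)
            w = [wi - c * ui for wi, ui in zip(w, u)]
        norm = math.sqrt(dot(w, w))
        if norm < tol:
            raise ValueError("input vectors are linearly dependent")
        basis.append([wi / norm for wi in w])  # u_k = w_k / ||w_k||
    return basis


if __name__ == "__main__":
    U = gram_schmidt([[1, 1, 0], [1, 0, 1], [0, 1, 1]])
    # Gram matrix <u_i, u_j>: should be (approximately) the identity.
    for ui in U:
        print([round(dot(ui, uj), 12) for uj in U])
```

This is the classical form of the process; in floating point, recomputing each coefficient against the partially reduced $w$ (the "modified" variant) tends to preserve orthogonality better for nearly dependent inputs.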