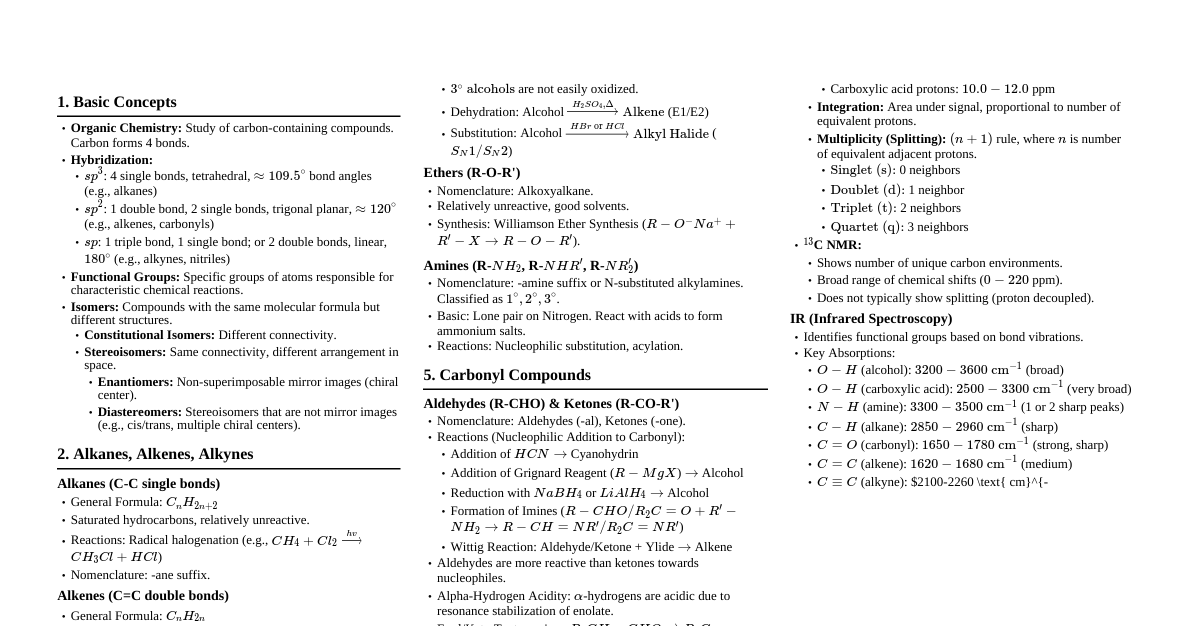

CPU - Basic Operation

- The "brain" of the computer.
- Simple cycle:
  - Fetch an instruction from memory.
  - Decode it to determine its type and operands.
  - Execute the instruction.
  - Repeat.

CPU - Instruction Sets

- Each CPU has a specific set of instructions it can execute.
- x86 processors: Intel processors (8088, 286, 386, Pentium, Core i3/i5/i7).
- ARM processors: a different instruction set.
- An x86 processor cannot run ARM programs, and vice versa.

CPU - Registers

- Super-fast storage inside the CPU for key variables and temporary results.
- General Purpose Registers:
  - Hold variables and temporary results.
  - Load words from memory into registers and store words from registers to memory.
  - Combine operands (e.g., add two words).
- Special Registers (Visible to Programmer):
  - Program Counter (PC): Contains the memory address of the next instruction to fetch. Automatically updated.
  - Stack Pointer (SP): Points to the top of the current stack in memory. Each procedure frame on the stack holds input parameters, local variables, and temporary variables.
  - PSW (Program Status Word): Condition code bits (set by comparison instructions), CPU priority level, mode (user or kernel), and various control bits. User programs can normally read the whole PSW but typically can write only some of its fields. Important for system calls and I/O.

CPU - Modes

- Kernel Mode:
  - The CPU can execute EVERY instruction and use every hardware feature.
  - The operating system runs here and has access to the complete hardware.
- User Mode:
  - Only a subset of the instructions is allowed and only a subset of the features is accessible.
  - All I/O and memory protection instructions are disallowed.
  - The PSW mode bit cannot be set to enter kernel mode.
  - User programs ALWAYS run in user mode.

System Calls & Traps

- System Calls:
  - How user programs obtain OS services.
  - A system call traps into the kernel and invokes the OS.
  - The trap instruction (e.g., syscall on x86-64) switches from user mode to kernel mode.
  - After the OS completes the work, it returns control to the user program.
- Other Traps:
  - Hardware warnings of exceptional situations (e.g., divide by 0, floating-point underflow).
  - The OS gets control and decides what to do.

Architecture vs. Micro-architecture

- Architecture: Everything visible to software. Instructions and registers. What programmers see and use.
- Micro-architecture: The implementation of the architecture. Data and instruction caches, Translation Lookaside Buffers (TLBs), branch predictors, pipelined datapath. Normally not visible to the OS or other software.

Advanced CPU Features

Pipelining

- Modern CPUs process multiple instructions simultaneously.
- Example (Three-Stage Pipeline):
  - Fetch unit gets instruction $n+2$.
  - Decode unit decodes instruction $n+1$.
  - Execute unit executes instruction $n$.
  - All three stages work simultaneously, like an assembly line.
- Challenges:
  - Once an instruction has been fetched into the pipeline, it must be executed, even if the previous instruction was a taken conditional branch.
  - Increases complexity for compiler/OS writers.

Superscalar CPU

- More advanced than pipelining.
- Multiple execution units are present (e.g., integer arithmetic, floating-point, Boolean operations).
- Multiple instructions are fetched, decoded, and placed in a holding buffer.
- Execution units grab instructions from the buffer when available.
- Result: Instructions are often executed out of order. Hardware ensures the results match sequential execution.
- Some complexity is pushed onto the operating system.

Multithreading / Hyperthreading

- What it does:
  - The CPU holds the state of two different threads.
  - It switches between threads on a nanosecond timescale.
  - If one thread waits for memory, the CPU switches to the other.
  - Reduces thread-switching time to nanoseconds.
- Not true parallelism: Only one thread runs at a time, but switching is extremely fast.
- Impact on OS:
  - Each thread appears as a separate CPU to the OS (e.g., 2 physical CPUs with 2 threads each = OS sees 4 CPUs).
  - The OS must schedule carefully to avoid running 2 threads on the same CPU while another CPU is idle.

Multicore Chips

- Modern approach: 4, 8, or more complete processors/cores on one chip.
- Each core is an independent CPU.
- High-end processors: 50+ cores (Intel Xeon, AMD Ryzen).
- Requires a multiprocessor operating system.
- Cache organization choices:
  - Shared L2 cache among all cores (needs a complex cache controller).
  - Separate L2 cache per core (keeping the caches consistent is harder).

GPUs (Graphics Processing Units)

- Literally thousands of tiny cores.
- Excellent for many small parallel computations (e.g., rendering polygons).
- Not good at serial tasks.
- Hard to program.
- Can be useful for some OS tasks (encryption, network traffic processing).
- Most of the OS itself won't run on a GPU.

Memory - Ideal vs. Reality

- Ideal Memory: Extremely fast, abundantly large, dirt cheap.
- Reality: No technology satisfies all three goals simultaneously.
- Solution: A memory hierarchy with multiple layers.

Memory - Hierarchy

| Layer | Access Time | Capacity | Persistent? | Cost per Bit |
| --- | --- | --- | --- | --- |
| Registers | $<1$ nsec | $<1$ KB | No | Highest |
| Cache | 1-8 nsec | 4-8 MB | No | Very High |
| Main Memory | 10-50 nsec | 16-64 GB | No | Medium |
| Persistent Memory | 10s-100s $\mu$sec | - | Yes | Medium-Low |
| Disk/SSD | 10 msec / 10s-100s $\mu$sec | 2-16+ TB | Yes | Lowest |

Pattern: Top layers have higher speed, smaller capacity, and greater cost per bit than lower layers (often by factors of billions!).

Registers (Memory Hierarchy)

- Top layer, internal to the CPU.
- Made of the same material as the CPU, so just as fast. No delay accessing them.
- Capacity:
  - 32-bit CPU: $32 \times 32$ bits (128 bytes, $<1$ KB).
  - 64-bit CPU: $64 \times 64$ bits (512 bytes, $<1$ KB).
- Programs must manage registers themselves (in software).

Cache Memory

- Organization:
  - Main memory is divided into cache lines (typically 64 bytes).
  - The most heavily used cache lines are kept in a high-speed cache.
  - The cache is located inside or very close to the CPU.
- Cache Operation:
  - Cache Hit: The needed line is in the cache. The request is satisfied from the cache with no memory request on the bus. Takes only a few clock cycles.
  - Cache Miss: The line is not in the cache and the request must go to main memory. Substantial time penalty: tens to hundreds of cycles.
  - Cache size is limited by its high cost.
- Cache Levels:
  - L1 Cache: Always inside the CPU. Feeds decoded instructions to the execution engine. Often two L1 caches: one for instructions, one for data. Typically 32 KB each. No delay accessing.
  - L2 Cache: Larger, several megabytes.
    Holds recently used memory words. Access takes several clock cycles of delay.
  - L3 Cache (if present): Even larger. More delay.
- Multicore Cache Design:
  - Option A: Single shared L2 cache for all cores (needs a complex cache controller).
  - Option B: Separate L2 cache per core (keeping the caches consistent is harder).

Caching Concepts

- Why Caching Works:
  - Some pieces of a resource are used much more heavily than others.
  - Keeping the heavily used pieces in fast storage improves performance dramatically.
- OS Uses Caching For:
  - Keeping heavily used files in main memory.
  - Path name to disk address conversions.
  - Web page URL to IP address conversions.
  - Many other uses.
- Key Questions in Any Caching System:
  - When to put a new item into the cache?
  - Which cache line to put the new item in?
  - Which item to remove when a slot is needed?
  - Where to put a newly evicted item in the larger memory?
- Example: CPU Cache Line Selection. With 4096 cache lines of 64 bytes and 32-bit addresses:
  - Bits 6-17 specify the cache line.
  - Bits 0-5 specify the byte within the cache line.
  - The item to remove is whatever currently occupies the line the new data goes into.
  - The place to put an evicted item in memory is uniquely determined by its address.

Nonvolatile Memory

ROM (Read Only Memory)

- Programmed at the factory. Cannot be changed.
- Fast and inexpensive.
- Used for the bootstrap loader on some computers.

EEPROM (Electrically Erasable PROM)

- Nonvolatile like ROM.
- Can be erased and rewritten.
- Writing takes much longer than writing RAM.
- Used like ROM, but bugs can be fixed by rewriting it.
- Bootstrap code may be stored here.

Flash Memory

- Nonvolatile. Can be erased and rewritten.
- Common for bootstrap code (BIOS).
- Used in smartphones and SSDs.
- Faster alternative to hard disks (speed between RAM and disk).
- Limitation: Wears out if erased too many times. Firmware balances erasures across the device (wear leveling) to extend its life.

CMOS

- Volatile (loses data when power is off).
- Holds the current time and date.
- Holds configuration parameters (e.g., which drive to boot from).
- Powered by a small battery even when the computer is unplugged.
- The battery typically lasts several years because CMOS draws very little power.
- When the battery fails, the computer "forgets" how to boot.

Virtual Memory

- Concept:
  - Run programs larger than physical memory.
  - The program is placed on nonvolatile storage (SSD/disk).
  - Main memory is used as a cache for the most heavily executed parts.
- How It Works:
  - The program needs data not currently in memory.
  - The OS frees up some memory (writes unused data to disk).
  - It loads the new data at this location.
  - The physical address for data/code is therefore no longer fixed.
  - Memory addresses are remapped on the fly.

MMU (Memory Management Unit)

- Part of the CPU.
- Converts the address generated by the program to a physical address in RAM.
- Does on-the-fly address translation.
- Performance Impact:
  - Every memory access must be remapped using special data structures (which are also in memory).
  - In multiprogramming, a context switch changes these structures (mappings differ per process).
  - Both address translation and context switches are expensive.

Nonvolatile Storage

Hard Disk Drives (Magnetic Disks)

- Advantages:
  - Two orders of magnitude cheaper than RAM per bit.
  - Often two orders of magnitude larger in capacity.
- Disadvantage: Random access is three orders of magnitude slower (mechanical device).
- Structure:
  - One or more metal platters rotating at 5,400-15,000 RPM.
  - A mechanical arm pivots over the platters.
  - Information is written in concentric circles.
- Key Components:
  - Track: Annular region read by one head at a given arm position.
  - Cylinder: All tracks for a given arm position (across all platters).
  - Sector: Division of a track, typically 512 bytes per sector.
  - Modern disks: outer cylinders have more sectors than inner ones.
- Performance:
  - Seek time: Moving the arm to the next cylinder takes $\sim$1 msec; moving it to a random cylinder takes $\sim$5-10 msec.
  - Rotational delay: Waiting for the sector to rotate under the head takes $\sim$5-10 msec.
  - Transfer rate: 50 MB/sec (low-end) to 160-200 MB/sec (faster drives).

Solid State Drives (SSDs)

- Characteristics:
  - Not actually disks (no platters or moving arms!).
  - Store data in electronic (Flash) memory.
  - Similar to disks: store lots of data, and data is not lost when power is off.
  - From the OS perspective: somewhat disk-like.
- Advantages over HDDs:
  - Much faster.
  - Better at random access (no mechanical arm).
  - Reading: tens of microseconds (vs. milliseconds for an HDD).
- Disadvantages:
  - More expensive per byte than rotating disks.
  - Writes are more complicated (they require erasing a full data block first).
  - Write time: a few hundred microseconds (still better than an HDD).
- Usage:
  - Not as common in data centers for bulk storage (cost).
  - Popular for consumer devices.

Persistent Memory

- Newest member of the storage family.
- Best known: Intel Optane (available since 2016).
- Characteristics:
  - Additional layer between SSDs/hard disks and memory.
  - Fast: Only slightly slower than regular RAM.
  - Persistent: Retains content across power cycles.
- Usage Options:
  - Option 1: As a fast SSD (used to implement a really fast solid-state drive).
  - Option 2: Direct memory bus attachment. Attached directly to the memory bus and used like normal memory. Data structures persist when power goes off.
  - Advantages of Option 2: No special driver needed, byte-granularity access, no need to transfer large blocks.

I/O Devices - Structure

- The operating system must manage many I/O devices beyond disks.
- I/O Device Components:
  - Controller: A chip or set of chips that physically controls the device. Accepts and carries out commands from the OS. Presents a simpler interface to the OS. The complexity is hidden: actual device control is complicated, and controllers often contain small embedded computers.
  - Device Itself: The actual hardware. Simple interface. Standardized (e.g., any SATA controller can handle any SATA disk).
- Example: Hard Disk Controller
  - Accepts a command: "read sector 11,206 from disk 2".
  - Converts the linear sector number to cylinder, sector, and head.
  - Accounts for outer cylinders having more sectors and for remapped bad sectors.
  - Determines the current cylinder position.
  - Moves the arm the required number of cylinders.
  - Waits for the proper sector to rotate under the head.
  - Reads and stores the bits coming from the drive.
  - Removes the preamble and computes the checksum.
  - Assembles the bits into words and stores them in memory.

Device Drivers

- Purpose:
  - Software that talks to the controller.
  - Gives commands and accepts responses.
  - Each controller type needs different software.
- Requirements:
  - Each controller manufacturer supplies a driver for each supported OS.
  - Example: A scanner may come with drivers for macOS, Windows 11, and Linux.
- Installation Methods:
  - Method 1: Relink Kernel. Relink the kernel with the new driver, then reboot the system. Used by many older UNIX systems.
  - Method 2: OS File Entry. Make an entry in an OS file indicating that the driver is needed, then reboot the system. At boot, the OS finds and loads the needed drivers. Used by older Windows versions.
  - Method 3: Dynamic Loading. The OS accepts new drivers while running and installs them on the fly without a reboot. Becoming much more common. Required for hot-pluggable devices (USB, Thunderbolt).
- Driver Location:
  - To be used, a driver must be put into the operating system so that it can run in kernel mode.
  - Most drivers run below the kernel boundary.
  - Some modern systems (Linux, Windows) offer some support for running drivers outside the kernel.
  - Very few systems (like MINIX 3) run ALL drivers in user space.

Device Registers and I/O Port Space

- Device Registers:
  - Small number of registers per controller.
  - Used to communicate with the controller.
- Example: Minimal Disk Controller Registers:
  - Disk address.
  - Memory address.
  - Sector count.
  - Direction (read or write).
- Driver Operation:
  - Gets a command from the OS.
  - Translates it into the appropriate values.
  - Writes the values into the device registers.
  - Activates the controller.
- I/O Port Space: The collection of all device registers.

Two Approaches to Accessing Registers:

- Approach 1: Memory-Mapped I/O
  - Device registers are mapped into the OS address space.
  - They are read and written like ordinary memory words.
  - No special I/O instructions are needed.
  - User programs are kept away by not putting those addresses within their reach.
- Approach 2: Special I/O Port Space
  - Device registers sit in a separate I/O port space.
  - Each register has a port address.
  - Special IN and OUT instructions (kernel mode only).
  - Drivers use these to read and write registers.
  - Uses no address space but requires special instructions.

Three Methods of I/O

Method 1: Busy Waiting (Programmed I/O)

- Process:
  - User program issues a system call.
  - Kernel translates it into a procedure call to the driver.
  - Driver starts I/O.
  - Driver sits in a tight loop, continuously polling the device.
  - When I/O completes, the driver puts the data where needed and returns.
  - OS returns control to the caller.
- Disadvantage: Ties up the CPU polling the device until it is finished.

Method 2: Interrupt-Driven I/O

- Process:
  - Driver starts the device.
  - Driver asks the device for an interrupt when finished.
  - Driver returns immediately.
  - OS blocks the caller if needed and looks for other work.
  - When the transfer completes, the controller generates an interrupt.
  - The interrupt signals completion.
- Advantage: The CPU can do other work while I/O happens.
- Interrupt Processing (Detailed):
  - Step 1: Interrupt Signaling. The controller finishes the transfer and signals the interrupt controller chip using bus lines. The interrupt controller checks whether it is ready (it may be handling a higher-priority interrupt). If ready, it asserts a pin on the CPU chip.
  - Step 2: CPU Response. The interrupt controller puts the device number on the bus and the CPU reads it. The CPU decides to take the interrupt, pushes the program counter and PSW onto the stack, and switches to kernel mode.
  - Step 3: Finding Handler. The device number is used as an index into the interrupt vector table. The table contains the addresses of the interrupt handlers. Each handler is part of the driver for that device.
  - Step 4: Handler Execution. The interrupt handler starts. It saves the stacked program counter, PSW, and other registers (in the process table), queries the device for status, and performs the necessary processing.
  - Step 5: Return. The handler restores the context and returns to the previously running user program, which resumes at the first unexecuted instruction.

Method 3: DMA (Direct Memory Access)

- Concept:
  - A special DMA chip controls the flow of bits between memory and the controller.
  - No constant CPU intervention is needed.
- Process:
  - The CPU sets up the DMA chip: how many bytes to transfer, device address, memory address, direction.
  - The DMA chip takes over.
  - When the DMA chip is done, it causes an interrupt.
  - The interrupt is handled as described above.
- Advantage: Minimal CPU involvement in the data transfer.

Buses - Evolution

- Simple Organization (Fig 1-6):
  - Used on minicomputers for years and on the original IBM PC.
  - Single bus connecting CPU, memory, and I/O devices.
  - Problem: As processors and memories got faster, the single bus couldn't handle the traffic and became a bottleneck.
  - Solution: Add additional buses for faster I/O devices and for CPU-to-memory traffic.

Modern Large x86 System (Fig 1-12)

- Multiple Buses Present: Cache bus. Memory bus (DDR). PCIe. PCI (legacy). USB. SATA. DMI.
- Each has a different transfer rate and function.
- The OS must be aware of all of them for configuration and management.

PCIe (Peripheral Component Interconnect Express)

- Background:
  - Invented by Intel as the successor to the PCI bus (which replaced the original ISA bus).
  - Much faster than its predecessors: tens of gigabits per second.
- Key Innovation: Point-to-Point Architecture
  - Old buses (ISA, PCI): Parallel and shared (multiple devices use the same wires and need an arbiter).
  - PCIe: Dedicated, point-to-point connections; each device has its own connection.
- Serial vs. Parallel:
  - Old PCI: Parallel bus architecture (a 32-bit number is sent over 32 parallel wires, and all bits must arrive at the same time).
  - PCIe: Serial bus architecture (all bits are sent through a single connection, called a lane; much simpler, no timing synchronization needed, like a network packet).
- Parallelism in PCIe: Multiple lanes work in parallel (e.g., 32 lanes carry 32 messages in parallel).
- Performance:
  - 16 lanes of PCIe 4.0: 256 gigabits/second.
  - PCIe 5.0: Double the speed.
  - PCIe 6.0: Double again.
  - The standard is upgraded every 3-5 years.
- Legacy Support: Older PCI devices connect to a separate hub processor.

System Bus Architecture

- CPU Connections:
  - To memory: Fast DDR4 bus.
  - To external graphics: PCIe.
  - To all other devices: Through a hub via the DMI (Direct Media Interface) bus.
- Hub Connections:
  - USB devices: via Universal Serial Bus.
  - Hard disks/DVDs: via SATA bus.
  - Ethernet frames: via PCIe.
  - Older PCI devices: via traditional PCI bus.
- Additional Buses:
  - Each core has a dedicated cache.
  - A larger cache is shared between cores.
  - Each cache introduces yet another bus.

USB (Universal Serial Bus)

- Original Purpose: Attach slow I/O devices (keyboard, mouse).
- Evolution:
  - USB 1.0: 12 Mbps.
  - USB 2.0: 480 Mbps.
  - USB 3.0: 5 Gbps.
  - USB 3.2: 20 Gbps.
  - USB 4: 40 Gbps.
  - Modern USB is anything but "slow"!
- Physical Characteristics:
  - Small connector with 4-11 wires (version dependent).
  - Some wires supply electrical power, some connect to ground.
- Architecture:
  - Centralized bus.
  - A root device polls all I/O devices every 1 msec to check whether they have traffic.
- Key Advantage:
  - Any USB device can be connected to the computer.
  - It functions immediately, with no reboot required.
  - Pre-USB devices required a reboot (very frustrating!).

Booting the Computer

Basic Components

- Motherboard Contains:
  - CPU.
  - Slots for memory chips.
  - Sockets for PCIe (or other) plug-in cards.
  - Small amount of flash memory with the system firmware.
- System Firmware:
  - Program called BIOS (Basic Input Output System).
  - Strictly, the name BIOS applies only to older IBM PC compatible machines.
  - Modern term: system firmware.

Old-Style BIOS Boot Process

- Initial Steps:
  - Power Button Pressed: The motherboard waits for the power supply to stabilize.
  - CPU Starts: Fetches code from a hard-coded physical address (the reset vector). The reset vector is mapped to flash memory, so the CPU executes code from the BIOS.
  - BIOS Initialization: Detects and initializes resources (RAM, Platform Controller Hub, interrupt controllers). Scans the PCI/PCIe buses. Detects and initializes attached devices. If the devices differ from the last boot, configures the new devices. Sets up runtime firmware for critical services (including low-level I/O).
  - Determine Boot Device: The BIOS tries a list of devices stored in CMOS memory. The user can change the list via the BIOS configuration program (at boot). Example: Try a USB drive first, then the hard disk/SSD.
  - Read MBR (Master Boot Record): The first sector of the boot device is read into memory. The MBR contains a program, which examines the partition table (at the end of the boot sector) and determines which partition is active.
  - Load Secondary Boot Loader: Read from the active partition. It loads the operating system from the active partition and starts it.
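
The "Read MBR" step above can be illustrated with a minimal sketch. It assumes the classic MBR layout, which these notes do not spell out: a 512-byte sector, four 16-byte partition entries starting at byte offset 446, a status byte of 0x80 marking the active partition, and the signature bytes 0x55 0xAA at the end. The function names and the synthetic demo sector are hypothetical and exist only for illustration; a real boot program does this in a few hundred bytes of machine code, not Python.

```python
import struct

SECTOR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # four 16-byte entries sit just before the signature
ENTRY_SIZE = 16
BOOT_SIGNATURE = b"\x55\xaa"   # last two bytes of a valid boot sector
ACTIVE_FLAG = 0x80             # status byte value marking the active partition


def find_active_partition(sector: bytes):
    """Return (index, start_lba, sector_count) of the active partition, or None."""
    if len(sector) != SECTOR_SIZE or sector[510:512] != BOOT_SIGNATURE:
        raise ValueError("not a valid MBR boot sector")
    for index in range(4):
        offset = PARTITION_TABLE_OFFSET + index * ENTRY_SIZE
        entry = sector[offset:offset + ENTRY_SIZE]
        status = entry[0]
        # Bytes 8-11: first sector (LBA); bytes 12-15: sector count (little-endian).
        start_lba, sector_count = struct.unpack_from("<II", entry, 8)
        if status == ACTIVE_FLAG and sector_count > 0:
            return index, start_lba, sector_count
    return None


def make_demo_sector() -> bytes:
    """Build a synthetic boot sector with entry 1 marked active (made-up values)."""
    sector = bytearray(SECTOR_SIZE)
    entry = bytearray(ENTRY_SIZE)
    entry[0] = ACTIVE_FLAG
    entry[4] = 0x83  # partition type byte; the value is arbitrary for this demo
    struct.pack_into("<II", entry, 8, 2048, 1_000_000)
    offset = PARTITION_TABLE_OFFSET + 1 * ENTRY_SIZE
    sector[offset:offset + ENTRY_SIZE] = entry
    sector[510:512] = BOOT_SIGNATURE
    return bytes(sector)


if __name__ == "__main__":
    print(find_active_partition(make_demo_sector()))
```

On the synthetic sector, the function reports entry 1 starting at sector 2048. Because each entry stores its start and length as 32-bit sector numbers, with 512-byte sectors a partition entry cannot describe more than $2^{32} \times 512$ bytes = 2 TiB, which is where the 2 TB limit of MBR-based booting comes from.
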
- Operating System Startup:
  - OS Queries BIOS: Gets configuration information.
  - Load Device Drivers: For each device, check whether a driver is available. If not, ask the user to install it (e.g., download it from the Internet). Load all drivers into the kernel.
  - Final Initialization: Initialize OS tables. Create background processes. Start the login program or GUI.
- Limitations: Slow booting, architecture-dependent, limited to smaller SSDs/disks (up to 2 TB). Its one advantage is that it is easy to understand.

UEFI Boot Process

- UEFI (Unified Extensible Firmware Interface) improvements over BIOS:
  - Fast booting.
  - Architecture independent.
  - Supports storage up to 8 ZiB ($8 \times 2^{70}$ bytes).
  - The price: more complex.
- Key Differences:
  - Partition Table: No longer relies on the MBR in the first sector. Uses the GPT (GUID Partition Table) in the second sector, which contains information about partition locations.
  - File System Support: The firmware itself can read specific file systems. It must support at least FAT-12, FAT-16, and FAT-32. A special partition, the EFI System Partition (ESP), replaces the single magic boot sector with a proper file system containing programs, configuration files, etc.
  - Executable Format: The firmware can execute programs in PE (Portable Executable) format. It looks like a mini operating system: it understands partitions, reads file systems, executes programs, and has a shell with commands.
  - Boot Manager: UEFI has a boot manager (boot menu) with multiple entries in a configurable order, so it can try different boot options. The menu and the default are easy to change from the running OS.
- Boot Process:
  - Still picks a bootloader program that loads Linux, Windows, or another OS.
  - But there may be many partitions and OSs to choose from.
  - The boot manager decides based on its configuration.
  - The bootloader continues loading the chosen OS.
- Secure Boot: Advanced feature. Ensures the OS boots as intended by verifying that the correct software is being loaded.

Key Concepts Summary

Hardware-Software Relationship

- The OS is intimately tied to the hardware and must know hardware details.
- It extends the instruction set and manages resources.

CPU Essentials

- Fetch-decode-execute cycle.
- Two modes: user and kernel.
- System calls bridge user mode to kernel mode.
- Modern features: pipelining, superscalar, multicore.

Memory Hierarchy

- Each layer trades speed for capacity and cost.
- Caching is critical for performance.
- Virtual memory allows programs larger than RAM.

Storage Options

- Hard disks: cheap, large, slow (mechanical).
- SSDs: more expensive, fast, electronic.
- Persistent memory: fast, persistent, byte-accessible.

I/O Management

- Controllers present a simple interface.
- Drivers provide OS-controller communication.
- Three methods: busy waiting, interrupts, DMA.

Modern Bus Architecture

- Multiple specialized buses.
- PCIe: point-to-point, serial, fast.
- USB: universal, hot-pluggable.

Booting

- Old BIOS: simple, limited, MBR-based.
- UEFI: complex, powerful, flexible, GPT-based.

Study Tips

- Understand the hierarchy: Registers $\rightarrow$ Cache $\rightarrow$ RAM $\rightarrow$ SSD $\rightarrow$ Disk.
- Know the modes: User (restricted) vs. Kernel (privileged).
- Remember the trade-offs: Speed vs. Size vs. Cost vs. Persistence.
- Visualize the flow: How data moves through the system.
- Connect concepts: How CPU, memory, and I/O work together.

Important Numbers to Remember

- Register access: $<1$ nanosecond.
- Cache access: 1-8 nanoseconds.
- RAM access: 10-50 nanoseconds.
- SSD access: tens to hundreds of microseconds.
- Hard disk access: 5-10 milliseconds.
- Cache line size: 64 bytes.
- Typical L1 cache: 32 KB.
- Typical L2 cache: a few MB.
- Typical RAM: 16-64 GB.
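
To make the cache numbers concrete, here is a minimal sketch of the address split from the cache line selection example in these notes (4096 lines of 64 bytes, 32-bit addresses: bits 0-5 select the byte, bits 6-17 select the line). The function name `split_address` and the term "tag" for the remaining high bits are my own labels, not taken from the notes, and the sketch assumes the simple scheme in that example, where each address maps to exactly one line.

```python
LINE_SIZE = 64      # bytes per cache line (bits 0-5 of the address)
NUM_LINES = 4096    # lines in the cache (bits 6-17 of the address)

OFFSET_BITS = LINE_SIZE.bit_length() - 1   # 6
INDEX_BITS = NUM_LINES.bit_length() - 1    # 12


def split_address(addr: int):
    """Split a 32-bit address into (tag, line index, byte offset)."""
    offset = addr & (LINE_SIZE - 1)                  # bits 0-5
    index = (addr >> OFFSET_BITS) & (NUM_LINES - 1)  # bits 6-17
    tag = addr >> (OFFSET_BITS + INDEX_BITS)         # remaining bits 18-31
    return tag, index, offset


if __name__ == "__main__":
    a = 0x12345678
    b = a + NUM_LINES * LINE_SIZE   # 256 KB further on: same line, different tag
    print(split_address(a))
    print(split_address(b))
```

The two addresses in the demo differ only in their high bits, so they compete for the same cache line: loading one evicts the other, which is the "item to remove = same line as new data goes into" rule from the example.
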