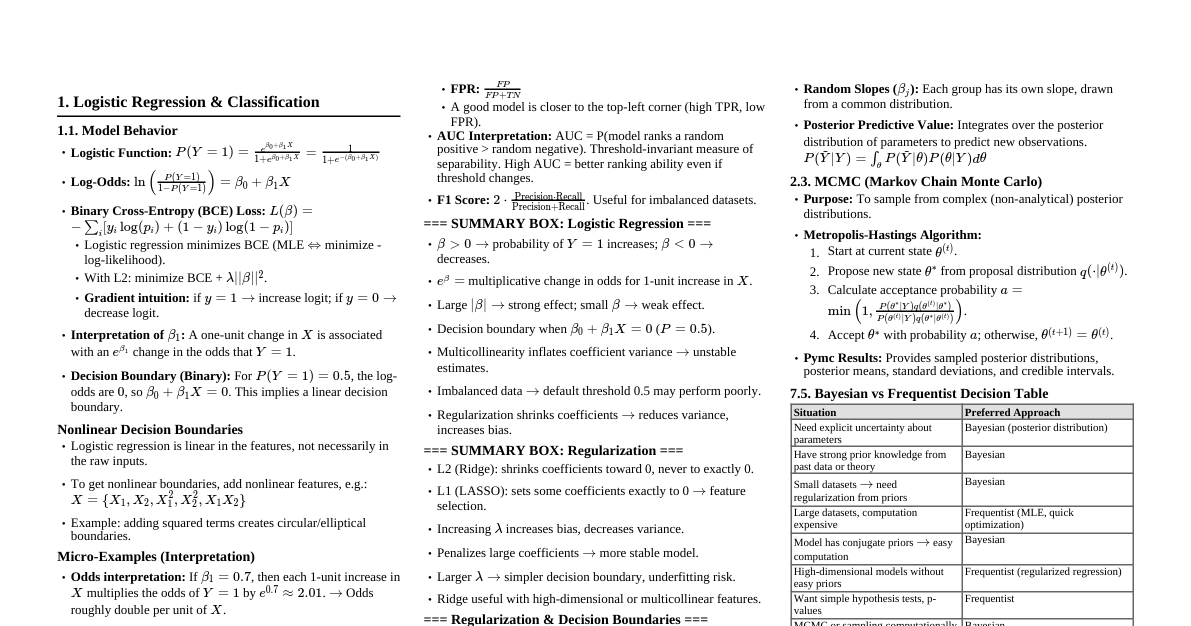

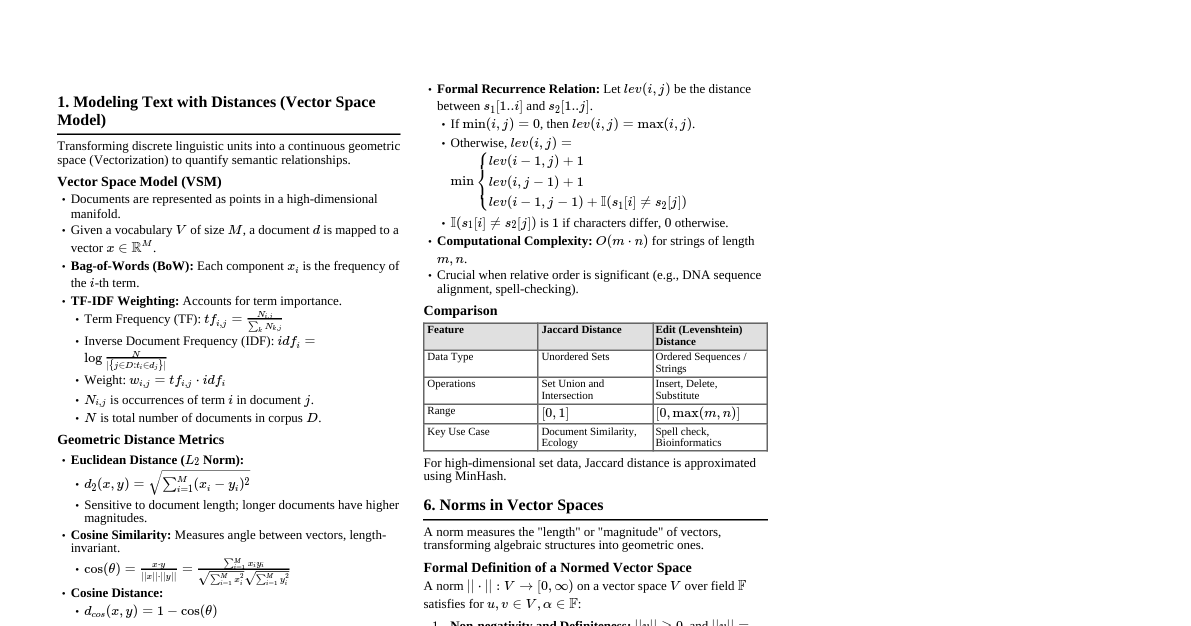

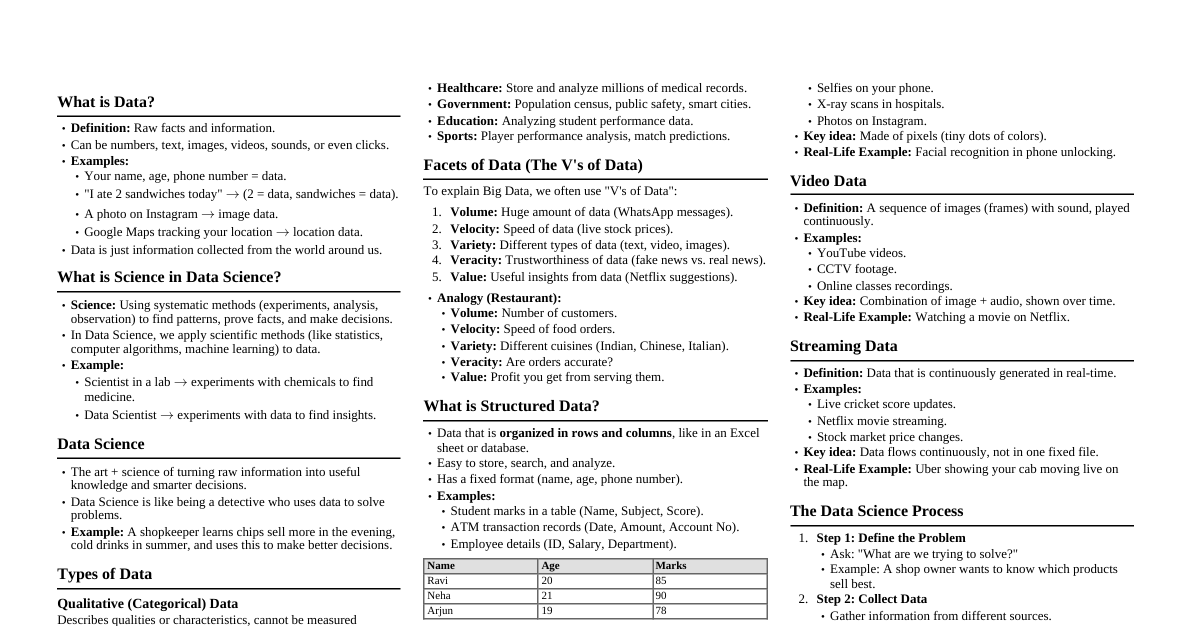

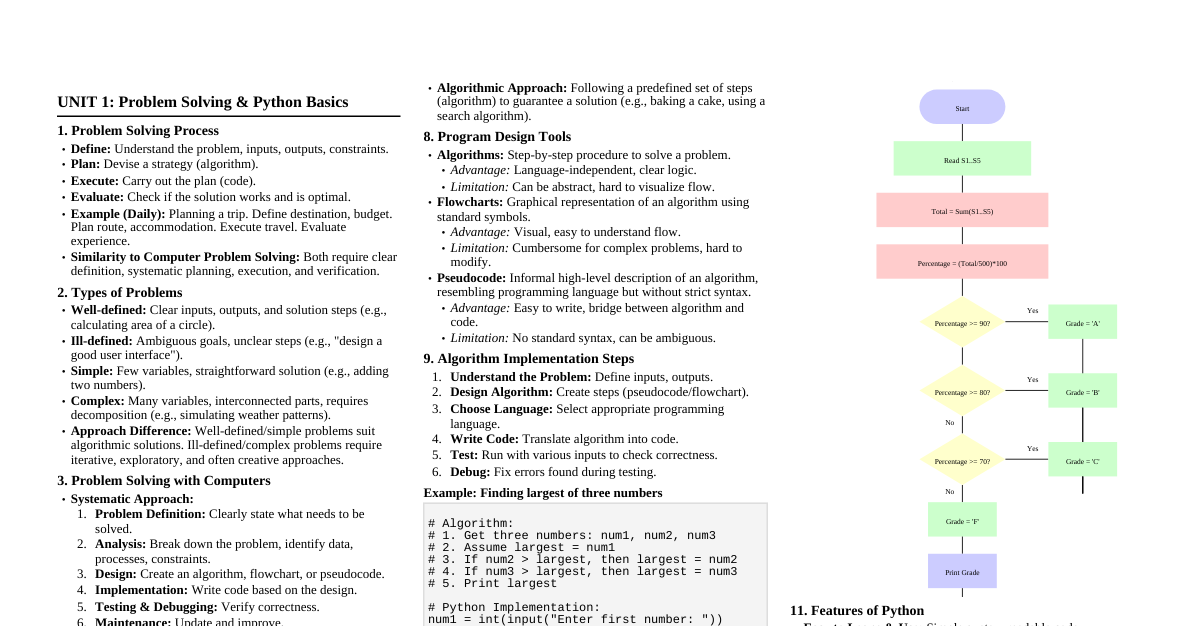

1. Foundations: Math & Statistics

1.1 Linear Algebra

- Vectors & Matrices: Operations, dot product, cross product, inverse, transpose.
- Eigenvalues & Eigenvectors: Understanding principal components.
- Matrix Decomposition: SVD, PCA.
- Applications: Dimensionality reduction, recommender systems.

1.2 Calculus

- Derivatives: Gradients, optimization, backpropagation.
- Integrals: Probability density functions.
- Multivariable Calculus: Partial derivatives, chain rule.

1.3 Probability & Statistics

- Probability Theory: Bayes' Theorem, conditional probability, random variables.
- Distributions: Normal, Binomial, Poisson, Uniform.
- Descriptive Statistics: Mean, median, mode, variance, standard deviation.
- Inferential Statistics: Hypothesis testing (t-test, ANOVA), confidence intervals.
- Regression: Foundations of linear and logistic regression.

2. Programming Fundamentals

2.1 Python for Data Science

- Basics: Variables, data types, control flow (if/else, loops), functions.
- Data Structures: Lists, tuples, dictionaries, sets.
- Object-Oriented Programming (OOP): Classes, objects (basic understanding).
- Virtual Environments: `venv`, `conda`.

2.2 Essential Libraries

- NumPy: Numerical computing, arrays, array operations.
- Pandas: Data manipulation, DataFrames, Series, I/O operations (CSV, Excel).
- Matplotlib & Seaborn: Data visualization (histograms, scatter plots, line plots, heatmaps).
- Scikit-learn: Machine learning algorithms, preprocessing, model evaluation.

2.3 SQL (Structured Query Language)

- Basic Queries: `SELECT`, `FROM`, `WHERE`, `ORDER BY`.
- Joins: `INNER JOIN`, `LEFT JOIN`, `RIGHT JOIN`, `FULL JOIN`.
- Aggregations: `GROUP BY`, `HAVING`, `COUNT`, `SUM`, `AVG`.
- Subqueries & CTEs (Common Table Expressions).

3. Data Collection & Preprocessing

3.1 Data Acquisition

- Databases: SQL, NoSQL (overview).
- APIs: RESTful APIs for data extraction.
- Web Scraping: Libraries like Beautiful Soup, Scrapy.
- File Formats: CSV, JSON, XML, Parquet.

3.2 Data Cleaning & Transformation

- Handling Missing Values: Imputation (mean, median, mode), deletion.
- Outlier Detection & Treatment: IQR, Z-score, removal, capping.
- Data Type Conversion: Correcting types (e.g., string to numeric).
- Feature Scaling: Normalization (Min-Max), Standardization (Z-score).
- Encoding Categorical Variables: One-Hot Encoding, Label Encoding.
- Date & Time Handling.

3.3 Exploratory Data Analysis (EDA)

- Univariate Analysis: Histograms, box plots, descriptive statistics.
- Bivariate Analysis: Scatter plots, correlation matrices, cross-tabulations.
- Multivariate Analysis: Pair plots, 3D plots (if applicable).
- Hypothesis Generation: Based on observed patterns.

4. Machine Learning Fundamentals

4.1 Supervised Learning

- Regression:
  - Linear Regression ($y = \beta_0 + \beta_1 x_1 + \dots + \beta_n x_n$)
  - Polynomial Regression
  - Ridge, Lasso Regression
- Classification:
  - Logistic Regression ($P(Y=1 \mid X) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \dots + \beta_n x_n)}}$)
  - K-Nearest Neighbors (KNN)
  - Support Vector Machines (SVM)
  - Decision Trees, Random Forests, Gradient Boosting (XGBoost, LightGBM)

4.2 Unsupervised Learning

- Clustering:
  - K-Means
  - DBSCAN
  - Hierarchical Clustering
- Dimensionality Reduction:
  - Principal Component Analysis (PCA)
  - t-Distributed Stochastic Neighbor Embedding (t-SNE)

4.3 Model Evaluation & Selection

- Regression Metrics: MSE, RMSE, MAE, $R^2$.
- Classification Metrics: Accuracy, Precision, Recall, F1-Score, ROC-AUC.
- Cross-Validation: K-Fold, Stratified K-Fold.
- Hyperparameter Tuning: Grid Search, Random Search.
- Bias-Variance Trade-off.
- Overfitting & Underfitting.

5. Advanced Topics & Specializations (Optional)

5.1 Deep Learning

- Neural Networks: Perceptrons, activation functions.
- Architectures: CNNs (Computer Vision), RNNs/LSTMs (NLP).
- Frameworks: TensorFlow, Keras, PyTorch.

5.2 Natural Language Processing (NLP)

- Text Preprocessing: Tokenization, stemming, lemmatization.
- Feature Extraction: TF-IDF, Word Embeddings (Word2Vec, GloVe).
- Models: Naive Bayes, LSTMs, Transformers (BERT).

5.3 Big Data Technologies

- Distributed Computing: Hadoop, Spark.
- Data Warehousing & Storage: Data Warehouses, Data Lakes, Data Marts.

5.4 Deployment & MLOps

- Model Deployment: Flask, FastAPI, Docker.
- Monitoring & Maintenance.

6. Tools & Practices

6.1 Version Control

- Git & GitHub: Collaborative development, tracking changes.

6.2 Integrated Development Environments (IDEs)

- Jupyter Notebook/Lab: Interactive data analysis.
- VS Code, PyCharm.

6.3 Communication & Storytelling

- Presentation Skills: Explaining technical concepts to non-technical audiences.
- Visualization Best Practices.
- Dashboarding Tools: Tableau, Power BI (optional).

7. Learning Strategy

- Online Courses: Coursera, edX, fast.ai, Kaggle Learn.
- Books: "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" (Aurélien Géron), "Python for Data Analysis" (Wes McKinney).
- Practice: Kaggle competitions, personal projects.
- Community: Join data science forums and meetups.
- Build a Portfolio: Showcase your projects on GitHub.
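Section 1.2 links derivatives and gradients to optimization, and Section 4.1 gives the linear regression model. As a minimal sketch of how the two meet, batch gradient descent can fit $\beta_0$ and $\beta_1$ by repeatedly stepping against the partial derivatives of the mean squared error, $\partial L/\partial \beta_0 = \frac{2}{n}\sum_i (\hat{y}_i - y_i)$ and $\partial L/\partial \beta_1 = \frac{2}{n}\sum_i (\hat{y}_i - y_i)\,x_i$. The helper name `fit_linear_gd`, the toy data, the learning rate, and the epoch count are all illustrative assumptions:

```python
def fit_linear_gd(xs, ys, lr=0.05, epochs=2000):
    """Fit y = b0 + b1 * x by batch gradient descent on mean squared error."""
    n = len(xs)
    b0, b1 = 0.0, 0.0
    for _ in range(epochs):
        # Residuals (prediction minus target) under the current coefficients.
        errors = [(b0 + b1 * x) - y for x, y in zip(xs, ys)]
        # Partial derivatives of L = (1/n) * sum(error^2).
        grad_b0 = (2.0 / n) * sum(errors)
        grad_b1 = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        # Step against the gradient.
        b0 -= lr * grad_b0
        b1 -= lr * grad_b1
    return b0, b1


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]  # toy data generated from y = 1 + 2x
b0, b1 = fit_linear_gd(xs, ys)
print(f"b0 = {b0:.3f}, b1 = {b1:.3f}")  # b0 = 1.000, b1 = 2.000
```

Libraries such as scikit-learn's `LinearRegression` solve the least-squares problem directly instead; the explicit loop here only makes the role of the gradient visible. A learning rate that is too large makes the updates diverge.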
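For Bayes' Theorem and conditional probability (Section 1.3), a worked example helps. The screening-test rates below (1% prevalence, 95% sensitivity, 90% specificity) are invented for illustration, and `posterior` is a hypothetical helper:

```python
def posterior(prior, sensitivity, specificity):
    """P(condition | positive) by Bayes' theorem.

    P(C | +) = P(+ | C) * P(C) / P(+), with the total probability
    P(+) = P(+ | C) * P(C) + P(+ | not C) * P(not C).
    """
    p_pos_given_c = sensitivity
    p_pos_given_not_c = 1.0 - specificity  # false-positive rate
    p_pos = p_pos_given_c * prior + p_pos_given_not_c * (1.0 - prior)
    return p_pos_given_c * prior / p_pos


# Hypothetical rates, invented for illustration.
p = posterior(prior=0.01, sensitivity=0.95, specificity=0.90)
print(f"P(condition | positive) = {p:.3f}")  # P(condition | positive) = 0.088
```

Under these assumed rates, fewer than 9% of positive results are true positives, because false positives from the much larger healthy group dominate $P(+)$.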
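The SQL items in Section 2.3 can be practiced without a database server by using Python's built-in `sqlite3` module. This sketch uses two made-up tables (`customers`, `orders`) and the SQLite dialect. It shows a CTE wrapping a `LEFT JOIN` with `GROUP BY`, and an `INNER JOIN` filtered with `HAVING`:

```python
import sqlite3

# In-memory database with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 35.0), (3, 2, 10.0);
""")

# CTE + LEFT JOIN + GROUP BY: every customer, including those without orders.
per_customer = conn.execute("""
    WITH totals AS (
        SELECT c.name AS name,
               COUNT(o.id) AS n_orders,               -- NULL order ids are not counted
               COALESCE(SUM(o.amount), 0) AS total    -- SUM of only NULLs is NULL
        FROM customers AS c
        LEFT JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.name
    )
    SELECT name, n_orders, total FROM totals ORDER BY total DESC
""").fetchall()

# INNER JOIN + HAVING: HAVING filters groups after aggregation (WHERE filters rows before it).
big_spenders = conn.execute("""
    SELECT c.name, SUM(o.amount) AS total
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    HAVING SUM(o.amount) > 15
    ORDER BY c.name
""").fetchall()

print(per_customer)  # [('Ana', 2, 55.0), ('Ben', 1, 10.0), ('Cy', 0, 0)]
print(big_spenders)  # [('Ana', 55.0)]
conn.close()
```

The two joins differ in one key way. The `LEFT JOIN` keeps Cy, who has no orders, with a count of 0. The `INNER JOIN` drops unmatched customers before aggregation.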
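The missing-value and outlier steps from Section 3.2 can be sketched on a single numeric column with the standard library alone. The sketch applies median imputation and then caps values at the conventional $Q_1 - 1.5\,\text{IQR}$ and $Q_3 + 1.5\,\text{IQR}$ fences. The helper names and the toy column are hypothetical. Quartile conventions also differ between tools, so other libraries may produce slightly different fences:

```python
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def cap_iqr(values, k=1.5):
    """Clip values to [Q1 - k*IQR, Q3 + k*IQR] (capping rather than removal)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


raw = [12.0, None, 15.0, 14.0, None, 13.0, 95.0, 16.0]  # toy column: two gaps, one extreme value
filled = impute_median(raw)
cleaned = cap_iqr(filled)
print(filled)   # [12.0, 14.5, 15.0, 14.0, 14.5, 13.0, 95.0, 16.0]
print(cleaned)  # [12.0, 14.5, 15.0, 14.0, 14.5, 13.0, 17.5, 16.0]
```

The median is used for the fill because, unlike the mean, it is barely moved by the extreme value 95.0.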
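The feature scaling and encoding items in Section 3.2 reduce to short formulas. Min-max scaling is $x' = (x - \min)/(\max - \min)$, standardization is $z = (x - \mu)/\sigma$, and one-hot encoding creates one 0/1 column per category. The plain-Python sketch below uses hypothetical helper names. In practice, scikit-learn's `MinMaxScaler`, `StandardScaler`, and `OneHotEncoder` cover the same ground:

```python
import statistics


def min_max_scale(xs):
    """Rescale to [0, 1] via (x - min) / (max - min)."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0 for _ in xs]  # constant column: no spread to rescale
    return [(x - lo) / (hi - lo) for x in xs]


def standardize(xs):
    """Z-score: (x - mean) / std, using the population standard deviation."""
    mu = statistics.fmean(xs)
    sigma = statistics.pstdev(xs)
    if sigma == 0:
        return [0.0 for _ in xs]  # constant column: avoid division by zero
    return [(x - mu) / sigma for x in xs]


def one_hot(labels):
    """One 0/1 column per category; categories are sorted for a stable order."""
    categories = sorted(set(labels))
    rows = [[1 if label == c else 0 for c in categories] for label in labels]
    return categories, rows


ages = [20.0, 30.0, 40.0, 50.0]
print(min_max_scale(ages))
print(standardize(ages))
print(one_hot(["red", "green", "red", "blue"]))
# (['blue', 'green', 'red'], [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]])
```

The population standard deviation matches what `StandardScaler` uses. Whatever tool is used, the scaling parameters should be computed on the training data and reused on test data.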
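The logistic regression formula in Section 4.1 maps a linear score $z = \beta_0 + \beta_1 x_1 + \dots + \beta_n x_n$ to a probability. This is a minimal sketch of the prediction step only, assuming coefficients that are already fitted. The values below are invented. The sigmoid is written in a numerically stable form so that very negative scores do not overflow `math.exp`:

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # for z < 0, exp(z) cannot overflow
    return ez / (1.0 + ez)


def predict_proba(beta0, betas, x):
    """P(Y=1 | X=x) = sigmoid(beta0 + sum_i beta_i * x_i)."""
    z = beta0 + sum(b * xi for b, xi in zip(betas, x))
    return sigmoid(z)


# Hypothetical fitted coefficients, for illustration only.
beta0, betas = -1.0, [0.5, 2.0]
p = predict_proba(beta0, betas, [2.0, 0.5])  # z = -1 + 1 + 1 = 1
print(round(p, 4))  # 0.7311
label = 1 if p >= 0.5 else 0  # default 0.5 decision threshold
```

Moving the 0.5 threshold trades precision against recall.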
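K-Means (Section 4.2) alternates two steps until the centroids stop moving. First, each point is assigned to its nearest centroid. Then each centroid moves to the mean of its assigned points. The sketch below implements this loop (Lloyd's algorithm) on toy 2-D points. As a simplification, it seeds the centroids with the first k points. Library implementations such as scikit-learn's `KMeans` default to k-means++ seeding instead:

```python
import math


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm. Simplification: the first k points are the initial centroids."""
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid by Euclidean distance.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: the next assignment step would be identical
        centroids = new_centroids
    return labels, centroids


pts = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids = kmeans(pts, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The result depends on the initial centroids. This is why libraries use smarter seeding and often run several restarts.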
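The regression and classification metrics in Section 4.3 follow directly from residuals and from the confusion-matrix counts (TP, FP, FN, TN). This sketch uses toy predictions and hypothetical helper names. ROC-AUC is left out because it needs predicted scores rather than hard labels:

```python
import math


def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE and R^2 from paired true/predicted values."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": sum(abs(r) for r in residuals) / n,
        "R2": 1.0 - ss_res / ss_tot,  # fraction of variance explained; negative for very poor fits
    }


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "F1": f1}


reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 7.5, 9.0])
clf = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print(reg)
print(clf)
```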
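K-Fold cross-validation (Section 4.3) splits the data into k folds. Each fold is used once as the held-out set while the model trains on the remaining folds. This sketch builds contiguous, unshuffled folds. The first $n \bmod k$ folds get one extra sample, which is the same sizing rule scikit-learn's `KFold` uses. The sketch then scores a hypothetical mean-predictor baseline with MSE:

```python
import statistics


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) for k contiguous folds, without shuffling."""
    # The first n % k folds get one extra sample so all n samples are used.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


# Hypothetical baseline "model": predict the mean of the training targets.
ys = [float(i) for i in range(10)]
scores = []
for train, test in k_fold_indices(len(ys), k=3):
    prediction = statistics.fmean([ys[i] for i in train])
    mse = statistics.fmean([(ys[i] - prediction) ** 2 for i in test])
    scores.append(mse)
print(f"per-fold MSE: {scores}, mean: {statistics.fmean(scores):.3f}")
```

For ordered data like this toy series, unshuffled folds give pessimistic scores. Shuffling, or Stratified K-Fold for imbalanced classes, is the usual remedy.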
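TF-IDF (Section 5.2) weights a term more heavily the more often it appears in a document and less heavily the more documents contain it. The sketch uses one common textbook variant, $\text{tf-idf}(t, d) = \frac{\text{count}(t, d)}{|d|} \cdot \ln\frac{N}{\text{df}(t)}$. By default, scikit-learn's `TfidfVectorizer` uses a smoothed idf and L2 normalization, so its numbers will differ. The regex tokenizer and the helper names are deliberately crude, hypothetical choices:

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and keep runs of letters (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())


def tf_idf(docs):
    """Return one {term: weight} dict per doc, with tf = count/len and idf = ln(N/df)."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for toks in tokenized for term in set(toks))
    weights = []
    for toks in tokenized:
        counts = Counter(toks)
        weights.append({t: (c / len(toks)) * math.log(n_docs / df[t]) for t, c in counts.items()})
    return weights


docs = ["The cat sat.", "The dog sat.", "The cat ran!"]
for w in tf_idf(docs):
    print({t: round(v, 3) for t, v in w.items()})
```

A term that appears in every document, such as "the" here, gets $\ln 1 = 0$ and drops out entirely.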