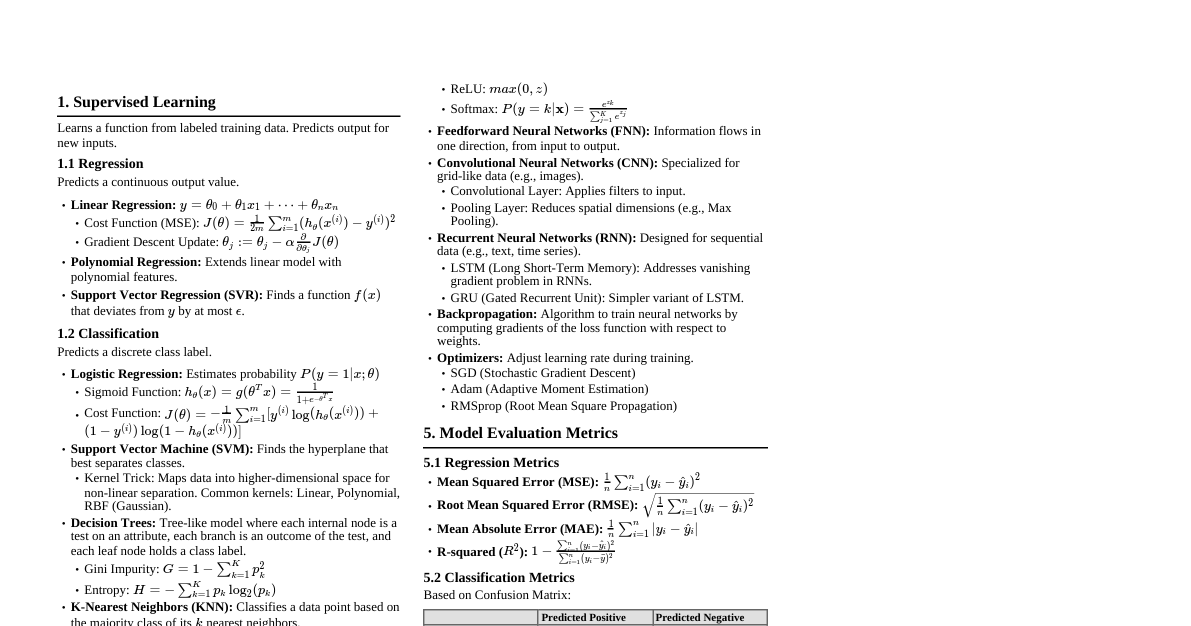

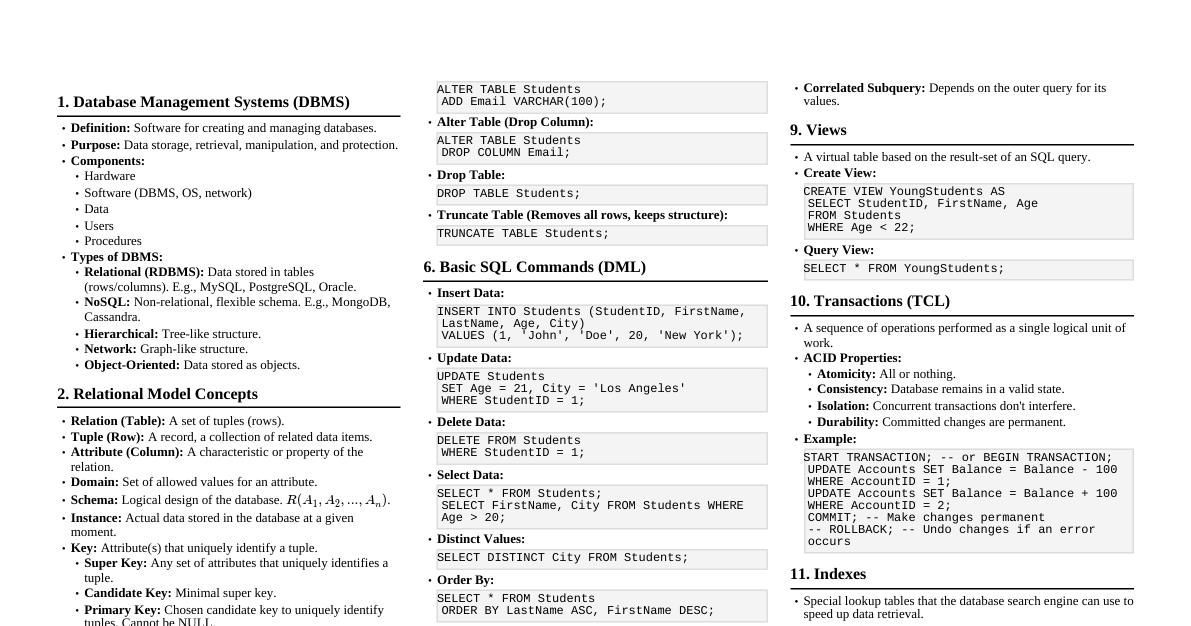

AI Techniques Overview Search Algorithms: Uninformed (Blind): BFS, DFS Informed (Heuristic): A*, Greedy Best-First Knowledge Representation & Reasoning (KRR): Logical, Semantic Nets, Ontologies Machine Learning (ML): Supervised, Unsupervised, Reinforcement Learning Natural Language Processing (NLP): Understanding & generating human language Computer Vision: Interpreting visual inputs (images, videos) Robotics: AI with mechanical systems (SLAM, motion planning) Supervised ML: Classification Algorithms Predicts a categorical output based on labeled data. Logistic Regression: Models probability of binary outcome using sigmoid function. Decision Trees: Tree-like model of decisions, simple to interpret. Random Forest: Ensemble of multiple decision trees, reduces overfitting. Support Vector Machine (SVM): Finds optimal hyperplane maximizing margin between classes. k-Nearest Neighbors (k-NN): Classifies based on majority class of 'k' closest neighbors. Naive Bayes: Based on Bayes' Theorem, assumes feature independence. Applications: Spam detection, medical diagnosis, sentiment analysis. Unsupervised ML: Clustering & Association Finds patterns in unlabeled data. Clustering Algorithms: Groups similar data points. K-Means: Partitions into K pre-defined clusters based on centroids. Hierarchical Clustering: Builds a tree of clusters (dendrogram). DBSCAN: Groups dense regions, identifies outliers. Association Rule Learning: Discovers relations between variables. Apriori Algorithm: Finds frequent itemsets (e.g., Market Basket Analysis). Dimensionality Reduction: Reduces number of features. PCA: Preserves variance in lower dimensions. t-SNE: Visualizes high-dimensional data. Applications: Customer segmentation, fraud detection, feature engineering. Reinforcement Learning (RL) Agent learns to make decisions by interacting with an environment to maximize cumulative reward. Components: Agent, Environment, State ($s$), Action ($a$), Reward ($r$), Policy ($\pi$), Value Function ($V$). Process: Observe state $\rightarrow$ Select action $\rightarrow$ Get reward/new state $\rightarrow$ Update policy. Key Concepts: Exploration vs. Exploitation, Discounted Future Reward. Applications: Game playing (AlphaGo), robotics, autonomous vehicles. Regression Analysis in ML Supervised technique to predict a continuous numerical value. Model: $y = \beta_0 + \beta_1 x + \epsilon$ (Simple Linear Regression). Cost Function: Measures error (e.g., Mean Squared Error, MSE: $\frac{1}{n}\sum_{i=1}^n (y_i - \hat{y}_i)^2$). Optimization: Algorithms like Gradient Descent minimize the cost function to find optimal parameters. Evaluation: R-squared ($R^2$), MAE, MSE, RMSE. Types: Linear, Polynomial, Ridge/Lasso (with regularization). Applications: Price prediction, sales forecasting. Model Evaluation Assessing model performance and generalization. A. Classification Metrics: Confusion Matrix: TP, TN, FP, FN. Accuracy: $(TP+TN) / Total$. Precision: $TP / (TP+FP)$. Recall: $TP / (TP+FN)$. F1-Score: Harmonic mean of Precision & Recall. ROC Curve & AUC: Plots TPR vs FPR. B. Regression Metrics: MAE: $\frac{1}{n}\sum_{i=1}^n |y_i - \hat{y}_i|$. MSE: $\frac{1}{n}\sum_{i=1}^n (y_i - \hat{y}_i)^2$. RMSE: $\sqrt{MSE}$. R-squared ($R^2$): Proportion of variance explained. C. Evaluation Techniques: Train-Test Split: Divide data for training and testing. K-Fold Cross-Validation: Robust estimate by rotating train/test sets. k-Nearest Neighbors (k-NN) Instance-based, lazy learning for classification/regression. How it works: Choose $k$. Calculate distance to all training points. Identify $k$ nearest neighbors. Classify by majority vote among neighbors. Example: Classifying a fruit by finding $k$ nearest known fruits. Advantages: Simple, no training phase, versatile. Disadvantages: Computationally expensive at prediction, sensitive to $k$ and distance, curse of dimensionality, sensitive to noise. Decision Tree Algorithm Tree-like model for classification and regression, splits data based on feature values. How it works: Start at root, select best feature to split. Use Gini Impurity (classification) or Variance Reduction (regression). Recursively partition until stopping criteria. Leaf node is majority class (classification) or average value (regression). Advantages: Easy to understand/interpret, little preprocessing, non-parametric. Disadvantages: Prone to overfitting, unstable, biased with imbalanced data, not optimal for complex relationships. Random Forest & Clustering Types A. Random Forest Algorithm: Ensemble learning method using multiple decision trees. Uses Bagging (Bootstrap Aggregating) and Feature Randomness. Aggregates predictions (majority vote/averaging). Reduces overfitting, handles high dimensionality, provides feature importance. B. Types of Clustering Algorithms: Partitioning: K-Means, K-Medoids. Hierarchical: Agglomerative (bottom-up), Divisive (top-down). Density-Based: DBSCAN, OPTICS (finds arbitrary shapes, handles noise). Distribution-Based: Gaussian Mixture Models (GMM). Grid-Based: STING (for large spatial datasets). K-Means Algorithm Centroid-based, partitioning clustering algorithm. Steps: Initialize $k$ centroids randomly. Assign each data point to nearest centroid. Update centroids as mean of assigned points. Repeat until convergence. Example: Clustering customers by Income & Spending into $k=3$ segments. Key Points: Sensitive to initial centroids, requires $k$, best for spherical clusters. Density-Based Clustering (DBSCAN) Groups dense regions, marks low-density points as outliers. Concepts: $\epsilon$ (eps): Neighborhood radius. MinPts: Min points for dense region. Core Point: $\ge$ MinPts within $\epsilon$. Border Point: Reachable from core, but not core itself. Noise Point: Neither core nor border. Working: Pick unvisited point $\rightarrow$ Find $\epsilon$-neighborhood $\rightarrow$ If core, start cluster and expand $\rightarrow$ If not core, mark noise. Advantages: Finds arbitrary shapes, robust to outliers, no pre-specified $k$. Limitations: Struggles with varying densities, sensitive to $\epsilon$ and MinPts. Conditional Probability Probability of event A given event B occurred: $P(A|B)$. Formula: $P(A|B) = P(A \text{ and } B) / P(B)$, if $P(B) > 0$. Example: Drawing a King given it's a Heart from a deck. $P(\text{Heart}) = 13/52 = 1/4$. $P(\text{King and Heart}) = 1/52$. $P(\text{King}|\text{Heart}) = (1/52) / (13/52) = 1/13$. Naive Bayes Algorithm Probabilistic classifier based on Bayes' Theorem with strong feature independence assumption. How it works: Bayes' Theorem: $P(\text{Class}|\text{Features}) = [P(\text{Features}|\text{Class}) * P(\text{Class})] / P(\text{Features})$. "Naive" Assumption: $P(\text{Features}|\text{Class}) = \prod P(f_i|\text{Class})$. Train: Calculate priors $P(\text{Class})$ and likelihoods $P(f_i|\text{Class})$. Predict: Choose class with highest posterior probability. Types: Gaussian, Multinomial, Bernoulli. Advantages: Fast, simple, scalable, works well with categorical/high-dimensional data. Disadvantages: Naive independence assumption, zero-frequency problem (solved by Laplace smoothing). Applications: Spam filtering, sentiment analysis, document categorization. Working of Neural Networks Computational model inspired by biological neurons, organized in layers. Architecture: Input Layer $\rightarrow$ Hidden Layers $\rightarrow$ Output Layer. Neuron: Input $(x)$, weights $(w)$, bias $(b) \rightarrow$ Weighted sum $z = \sum(w_i x_i) + b \rightarrow$ Activation function $f(z)$ (e.g., ReLU, Sigmoid). Forward Propagation: Data passes through layers to compute output. Loss Function: Compares output to true value (e.g., MSE, Cross-Entropy). Backpropagation: Calculates gradient of loss w.r.t. weights by propagating error backward. Optimization: Updates weights using Gradient Descent: $w_{new} = w_{old} - \eta * (\partial Loss/\partial w)$. Iteration: Repeat until convergence. Neural Network Pros, Cons & Apps Advantages: High accuracy, models complex non-linear relationships. Automatic feature learning. Good generalization. Fault tolerant, versatile. Disadvantages: "Black Box" nature (lack of interpretability). Large data & computational requirements. Prone to overfitting. Long training times. Hyperparameter sensitive. Applications: Computer Vision (image recognition, object detection). Natural Language Processing (translation, chatbots). Speech Recognition, Autonomous Systems, Healthcare, Finance. Neural Networks vs. Deep Learning Aspect Neural Networks (ANN) Deep Learning Definition Broad class of models with $\ge 1$ hidden layer. Subset of ML with many hidden layers ("deep"). Architecture Can be shallow (1-2 hidden layers). Inherently deep (many layers: 10s, 100s, 1000s). Feature Eng. Often requires manual feature engineering. Automatic feature extraction from raw data. Data/Compute Moderate. Extremely high (big data, GPUs/TPUs). Machine Learning vs. Deep Learning Aspect Machine Learning (ML) Deep Learning (DL) Scope Broad field, computers learn from data. Specialized subfield of ML (deep NNs). Data Rep. Relies on feature engineering. Learns hierarchical feature representations. Perf. with Data Performance plateaus. Performance improves with more data/compute. Hardware Can run on standard CPUs. Requires GPUs/TPUs. Interpretability Some models are interpretable. Generally a black box. Support Vector Machine (SVM) Supervised algorithm for classification/regression, finds optimal hyperplane. Working: Finds a hyperplane ($w \cdot x + b = 0$) to separate classes. Maximizes margin (distance between hyperplane and nearest points). Support Vectors: Data points closest to hyperplane, define the margin. Kernel Trick: Maps non-linearly separable data to higher dimensions for linear separation. Advantages: Effective in high-dimensional spaces, memory efficient, versatile (kernels). Disadvantages: Slow with very large datasets, no direct probability estimates, sensitive to kernel/hyperparameters, poor interpretability. Applications: Text classification, image classification, bioinformatics. ANN Example: Handwritten Digit Recognition Classifying 28x28 pixel images of digits (MNIST) using a Feedforward NN. Architecture: Input Layer: 784 neurons (28x28 pixels). Hidden Layer 1: 128 neurons (ReLU activation). Hidden Layer 2: 64 neurons (ReLU activation). Output Layer: 10 neurons (Softmax activation for 0-9 probabilities). Working: Input image $\rightarrow$ Forward propagation through layers $\rightarrow$ Softmax outputs probabilities $\rightarrow$ Highest probability is predicted digit. Training: Backpropagation and optimizer (e.g., Adam) minimize Categorical Cross-Entropy Loss over 60,000 labeled images.