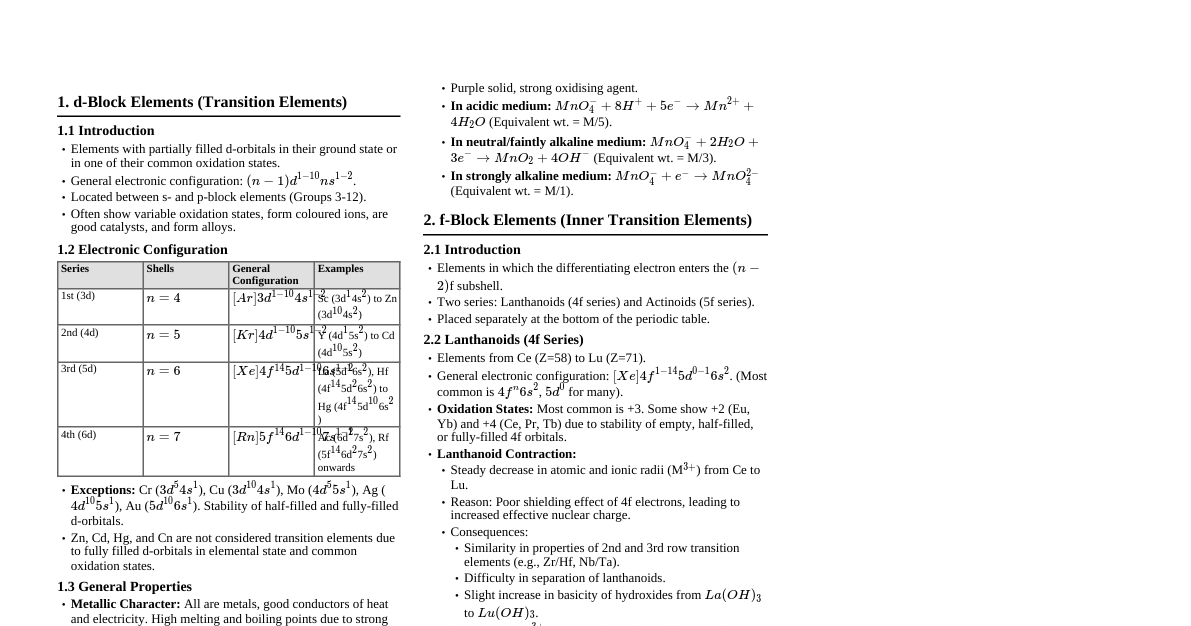

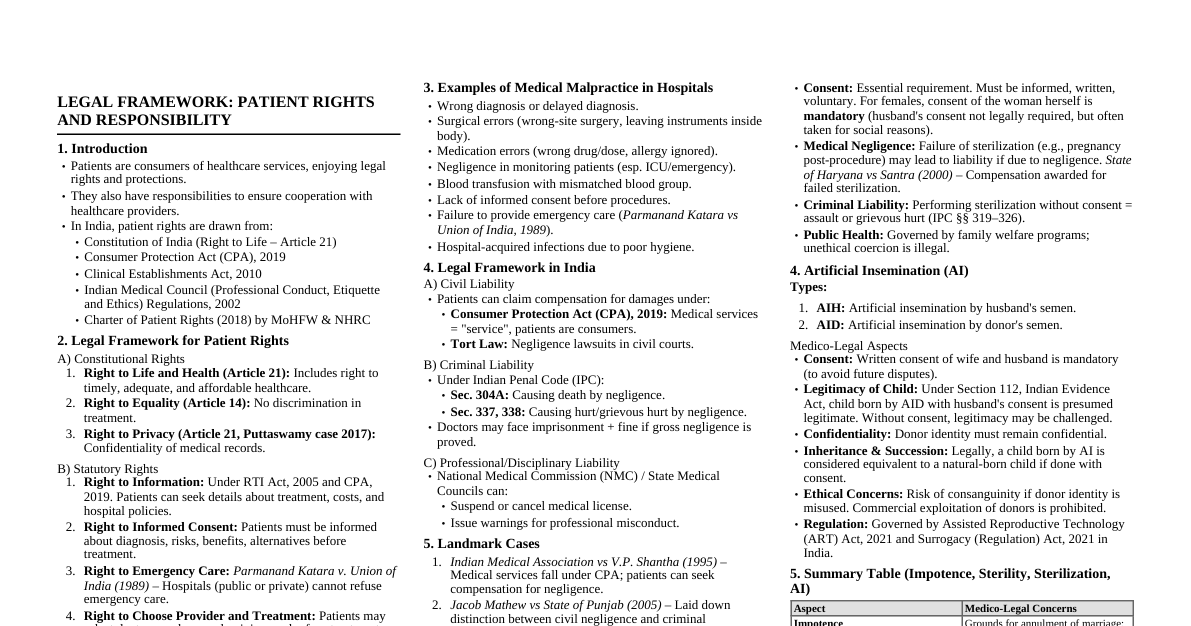

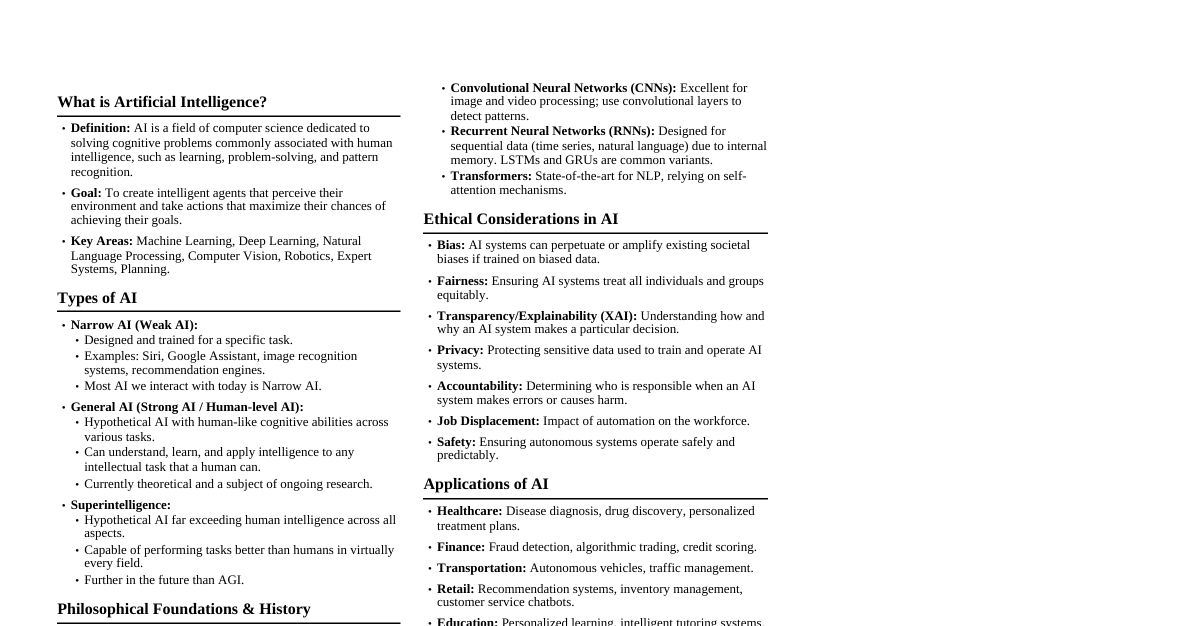

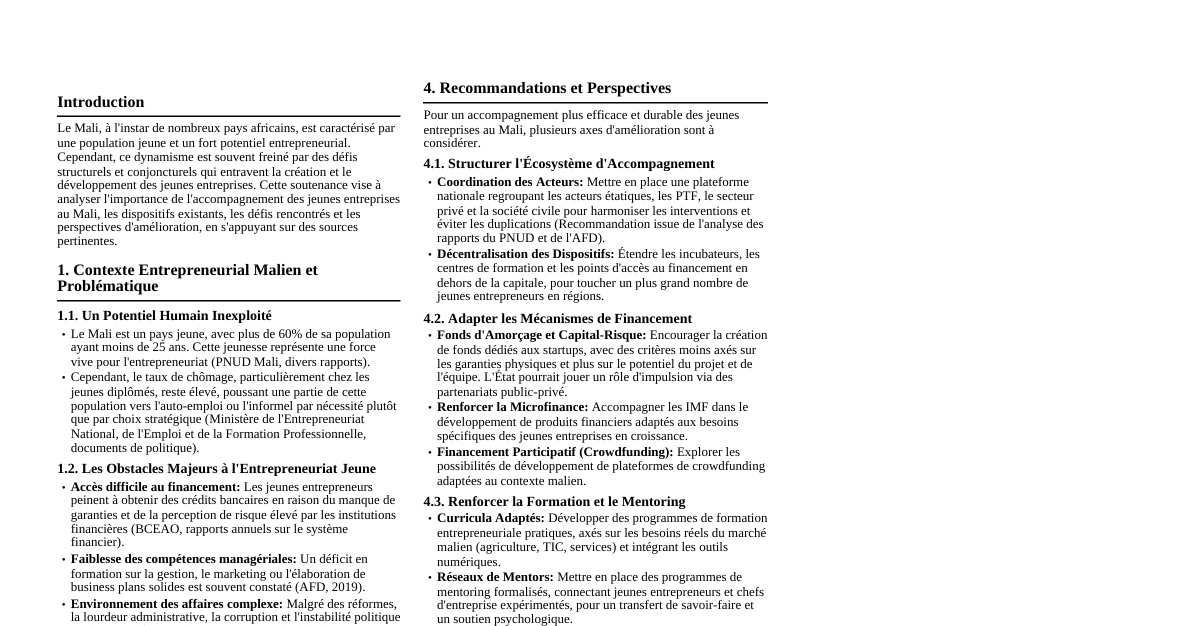

Memory Hierarchy (Chapter 5) 1. Introduction Principle of Locality: Programs access a relatively small portion of their address space at any instant. Temporal Locality: If an item is referenced, it will tend to be referenced again soon. Spatial Locality: If an item is referenced, items whose addresses are close by will tend to be referenced soon. Memory Hierarchy: Multiple levels of memory with different speeds and sizes. Faster memories are smaller and more expensive per bit. Goal: Present the user with large memory capacity (cheapest technology) at the speed of the fastest memory. Block (or Line): Minimum unit of information transferred between levels. Hit Rate: Fraction of memory accesses found in the upper level. Miss Rate: Fraction of memory accesses not found in the upper level ($1 - \text{Hit Rate}$). Hit Time: Time to access a level of the memory hierarchy. Miss Penalty: Time to fetch a block into a level from the lower level, plus transfer time and insertion. 2. Memory Technologies SRAM (Static Random Access Memory): Used for caches (levels closer to processor). Does not need refreshing. Uses 6-8 transistors per bit, faster, more expensive per bit. DRAM (Dynamic Random Access Memory): Used for main memory. Stores charge in a capacitor, requires periodic refreshing. Uses 1 transistor per bit, denser, cheaper per bit, slower. SDRAM (Synchronous DRAM): Uses a clock to synchronize with processor, improving speed. DDR (Double Data Rate) SDRAM: Transfers data on both rising and falling edges of the clock. Flash Memory: Nonvolatile, secondary memory for mobile devices. Writes can wear out bits; wear leveling technique spreads writes. Magnetic Disk: Largest & slowest level in hierarchy (servers). Platters rotate on a spindle, read/write head moves across surfaces. Track: Concentric circles on disk surface. Sector: Segment of a track, smallest amount read/written. Cylinder: All tracks under heads at a given point on all surfaces. Seek: Positioning head over proper track; seek time is time taken. Rotational Latency (Rotational Delay): Time for desired sector to rotate under head. Transfer Time: Time to transfer a block of bits. 3. The Basics of Caches Cache: A small, fast memory that stores copies of data from main memory. Direct-Mapped Cache: Each memory location maps to exactly one cache location. Cache location = (Block address) modulo (Number of blocks in cache). Uses tag to identify if data in cache corresponds to requested word. Valid bit: Indicates if an entry contains valid data. Total Bits in Direct-Mapped Cache: $2^n \times (2^m \times 32 + (32 - n - m - 2) + 1)$ $n$: bits for index (number of blocks $2^n$) $m$: bits for word within block (block size $2^m$ words) $2$: bits for byte part of address (if 4-byte words) $1$: bit for valid field Mapping Address to Multiword Block: Block address = (Byte address) / (Bytes per block). Cache block number = (Block address) modulo (Number of blocks in cache). Larger Blocks: Exploit spatial locality, reduce miss rate, improve hardware efficiency (less tag storage). Miss Penalty: Increases with block size (latency to first word + transfer time for rest). Early Restart / Critical Word First: Resume execution as soon as requested word is returned (can hide some miss penalty). 4. Handling Cache Misses Processor stalls until requested data is fetched from lower memory level. Steps for Instruction Cache Miss: Send original PC value to memory. Instruct main memory to perform read and wait. Write cache entry: data from memory to data portion, upper address bits to tag, set valid bit. Restart instruction execution (refetch, now hits in cache). 5. Handling Writes Write-Through: Writes update both cache and next lower level of memory hierarchy. Ensures consistency. Can be slow (100s of clock cycles per write). Write Buffer: Stores data waiting to be written to memory, allowing processor to continue. Write Allocate: Fetches block into cache on a write miss, then overwrites. Write-Back: Writes update only the cache block. Modified block written to lower level only when replaced. Improves performance by reducing writes to lower levels. More complex to implement. Requires a dirty bit to indicate if block has been modified. Policy on Write Misses: Write Allocate (common): Fetch block, then overwrite. No Write Allocate (alternative): Update memory, not cache. 6. Measuring and Improving Cache Performance CPU Time: $(\text{CPU execution clock cycles} + \text{Memory-stall clock cycles}) \times \text{Clock cycle time}$ Memory-Stall Clock Cycles: $(\text{Read-stall cycles} + \text{Write-stall cycles})$ Read-Stall Cycles: $(\text{Reads} / \text{Program}) \times \text{Read miss rate} \times \text{Read miss penalty}$ Write-Stall Cycles: $(\text{Writes} / \text{Program}) \times \text{Write miss rate} \times \text{Write miss penalty} + \text{Write buffer stalls}$ Average Memory Access Time (AMAT): Time for a hit + Miss rate $\times$ Miss penalty. Multilevel Caching: Adding secondary cache (L2, L3) to reduce miss penalty of primary cache (L1). L1 focuses on minimizing hit time. L2/L3 focus on reducing miss rate (larger, slower). 7. Reducing Cache Misses (Placement) Direct-Mapped: Block can go in exactly one place. Fully Associative: Block can go in any location. Requires parallel search (comparators), high hardware cost. Practical only for small caches. Set-Associative: Fixed number of locations (ways) per set. Block maps to unique set, can be placed in any element of that set. Combines direct-mapped and fully associative. Set = (Block number) modulo (Number of sets in cache). Increases associativity usually decreases miss rate (reduces conflict misses). Disadvantage: Potential increase in hit time. 8. Choosing Which Block to Replace Direct-Mapped: Only one choice, the block in that position. Associative Caches: Least Recently Used (LRU): Replaces block unused for longest time. Costly for high associativity. Random: Randomly selects candidate block. Simpler to build in hardware. 9. Common Framework for Memory Hierarchy (Four Questions) Where can a block be placed? (Mapping) Direct mapped (one place), Set associative (few places), Fully associative (any place). How is a block found? (Indexing/Searching) Indexing (direct mapped), Limited search (set associative), Full search (fully associative), Separate lookup table (page table). Which block is replaced on a miss? (Replacement Policy) LRU or Random. How are writes handled? (Write Policy) Write-through or Write-back. 10. The Three Cs Model of Cache Misses Compulsory Misses (Cold-Start Misses): First access to a block that has never been in cache. Reduce by increasing block size. Capacity Misses: Cache cannot contain all blocks needed during execution. Reduce by enlarging cache size. Conflict Misses (Collision Misses): Multiple blocks compete for the same set in set-associative or direct-mapped caches. Reduce by increasing associativity. 11. Virtual Memory Virtual Memory: Uses main memory as a cache for secondary storage (disk). Motivations: Efficient & safe sharing of memory among programs/VMs. Removes programming burden of small main memory (illusion of large memory). Protection: Mechanisms that ensure processes cannot interfere with each other's data. Virtual Address: Address generated by processor. Physical Address: Address used to access main memory. Address Translation (Address Mapping): Translates virtual addresses to physical addresses. Page: A block in virtual memory. Page Fault: A virtual memory miss (requested page not in main memory). Page Table: Table stored in main memory, indexed by virtual page number, contains physical page number or disk address. Page Offset: Lower portion of virtual address, indicates location within page. Dirty Bit: In page table, indicates if page has been written to since loaded. Swap Space: Disk space reserved for full virtual memory space of a process. Page Fault Handling: Look up page table entry, find location in secondary memory. Choose physical page to replace (write dirty page back to disk if needed). Start read to bring referenced page from secondary memory. TLB (Translation-Lookaside Buffer): A cache for page table entries (recently used address translations). Reduces latency of address translation. TLB hit: Physical page number used to form address. TLB miss: Page table examined; if page in memory, TLB updated; if not, page fault. Virtually Addressed Cache: Accessed with virtual address, uses virtual tags. Physically Addressed Cache: Accessed with physical address, uses physical tags. Aliasing: Two virtual addresses map to the same physical page. Context Switch (Process Switch): Changing processor's internal state to allow different process to use processor. Reference Bit (Use Bit/Access Bit): Set when page is accessed, helps OS approximate LRU. Restartable Instruction: Can resume execution after an exception. Parallel Processors (Chapter 6) 1. Introduction Multiprocessor: Computer system with at least two processors. Multicore Microprocessor: Microprocessor with multiple processors ("cores") on a single chip. Shared Memory Multiprocessor (SMP): Parallel processor with a single physical address space. Task-Level Parallelism (Process-Level Parallelism): Utilizing multiple processors by running independent programs simultaneously. Parallel Processing Program: A single program running on multiple processors simultaneously. Cluster: Set of computers connected over a local area network, functioning as a single large multiprocessor. Strong Scaling: Speed-up measured by keeping problem size fixed. Weak Scaling: Speed-up measured by increasing problem size proportionally to number of processors. Load Balancing: Distributing work evenly among processors to maximize speed-up. 2. Flynn's Taxonomy (Instruction/Data Streams) SISD (Single Instruction, Single Data): Uniprocessor. MIMD (Multiple Instruction, Multiple Data): Multiprocessor (most common today). SIMD (Single Instruction, Multiple Data): Same instruction applied to many data streams (e.g., vector processors, multimedia extensions). Data-Level Parallelism: Performing same operation on independent data. Vector Architecture: Elegant interpretation of SIMD, uses vector registers and pipelined execution units. Vector Lane: One or more vector functional units and a portion of the vector register file. Multimedia Extensions: SIMD additions to instruction sets (e.g., AVX, SSE). MISD (Multiple Instruction, Single Data): No common examples. SPMD (Single Program, Multiple Data): Conventional MIMD programming model, single program runs across all processors. 3. Hardware Multithreading Hardware Multithreading: Increases processor utilization by switching to another thread when one stalls. Thread: Includes program counter, register state, and stack. Process: Includes one or more threads, address space, and OS state. Fine-Grained Multithreading: Switches between threads after every instruction. Hides short & long stalls. Coarse-Grained Multithreading: Switches threads only on expensive stalls (e.g., last-level cache miss). Better for long stalls. Simultaneous Multithreading (SMT): Exploits thread-level parallelism and instruction-level parallelism simultaneously on multiple-issue, dynamically scheduled processors. 4. Shared Memory & NUMA Uniform Memory Access (UMA): Multiprocessor where latency to any word in main memory is about the same for all processors. Nonuniform Memory Access (NUMA): Multiprocessor where some memory accesses are much faster than others (depending on which processor asks for which word). Synchronization: Coordinating behavior of two or more processes. Lock: Synchronization device allowing only one processor access to data at a time. Reduction: Function that processes a data structure and returns a single value (e.g., summing partial sums). OpenMP: API for shared memory multiprocessing in C/C++/Fortran. 5. Graphics Processing Units (GPUs) GPUs: Accelerators that supplement CPUs, designed for parallel processing, especially graphics. Oriented toward bandwidth rather than latency (rely on multithreading to hide memory latency). CUDA Thread: Lowest level of parallelism for NVIDIA GPUs. SIMD Lanes: Parallel functional units within a SIMD processor. Thread Block Scheduler: Assigns blocks of threads to multithreaded SIMD processors. SIMD Thread Scheduler: Within a SIMD processor, schedules when SIMD threads should run. Local Memory: On-chip memory local to each multithreaded SIMD processor, shared by SIMD lanes within a processor. GPU Memory: Off-chip DRAM shared by the whole GPU. 6. Domain-Specific Architectures (DSAs) Specialized architectures designed for a narrow range of tasks (e.g., AI/ML). Principles: Dedicated memories to minimize data movement. Invest resources into more arithmetic units or larger memories. Use easiest form of parallelism matching domain. Reduce data size and type to simplest needed. Use domain-specific programming language. Tensor Processing Unit (TPU): Google's DSA for deep neural networks (DNNs). 7. Clusters & Warehouse-Scale Computers (WSCs) Message Passing: Processors communicate by explicitly sending and receiving messages (separate physical address spaces). Request-Level Parallelism: Many independent efforts can proceed in parallel (e.g., web servers). Warehouse-Scale Computers (WSCs): Massive data centers with thousands of servers. MapReduce: Framework for batch processing in WSCs (Map function processes data, Reduce function aggregates results). Fault Tolerance: Critical for WSCs due to high failure rates. 8. Multiprocessor Network Topologies Network Bandwidth: Peak transfer rate of a network. Bisection Bandwidth: Bandwidth between two equal parts of a multiprocessor (worst-case metric). Ring: Nodes connected in a circular fashion. Fully Connected Network: Every processor has a bidirectional link to every other processor. Multistage Network: Uses multiple switches, messages may travel multiple steps. Crossbar Network: Allows any node to communicate with any other in one pass. 9. Communicating to the Outside World: Cluster Networking PCIe (Peripheral Component Interconnect Express): High-speed link for I/O devices. Memory-Mapped I/O: Portions of address space assigned to I/O devices; reads/writes to these addresses are commands. DMA (Direct Memory Access): Mechanism allowing device controller to transfer data directly to/from memory without involving processor. I/O Interrupt: Indicates to processor that I/O device needs attention (asynchronous). Device Driver: Program that controls an I/O device. Zero-Copy Optimization: DMA engine transfers message directly between user program data space and destination. Polling: Periodically checking status of I/O device instead of interrupts. Stale Data Problem: When cache and memory have different values for same data due to DMA. 10. Benchmarks & Performance Models SPECrate: Throughput metric, runs multiple copies of programs simultaneously. SPLASH: Parallel benchmark suite for shared memory. NAS Parallel Benchmarks: For computational fluid dynamics. PARSEC: Multithreaded programs using Pthreads and OpenMP. MLPerf: Benchmark for machine learning. Arithmetic Intensity: Ratio of floating-point operations to data bytes accessed from main memory. Roofline Model: Graph showing attainable GFLOPs/sec vs. arithmetic intensity, bounded by peak floating-point performance and peak memory bandwidth. 11. Fallacies and Pitfalls Amdahl's Law doesn't apply to parallel computers: False, it reminds that even small sequential parts limit speed-up. Peak performance tracks observed performance: False, actual performance often much lower. Not developing software for novel architecture: Pitfall, software must adapt to hardware. Forgetting byte addressing/block size in cache simulation: Pitfall, leads to incorrect mapping. Less set associativity than cores/threads in shared cache: Pitfall, can cause conflict misses. Using AMAT for out-of-order processors: Pitfall, doesn't account for execution during misses. Extending address space with segments on unsegmented space: Pitfall, awkwardness and performance penalties. Disk failure rates match specifications: False, often much higher in field. OS is best place to schedule disk accesses: False, disk can reorder for better rotational/seek latency. Implementing VMM on non-virtualizable ISA: Pitfall, complex VMM and reduced performance. Hardware attacks can compromise security: Pitfall, e.g., Row Hammer. Can get good vector performance without memory bandwidth: False, memory bandwidth is critical. ISA completely hides physical implementation properties: False, timing channels can leak info (Spectre).