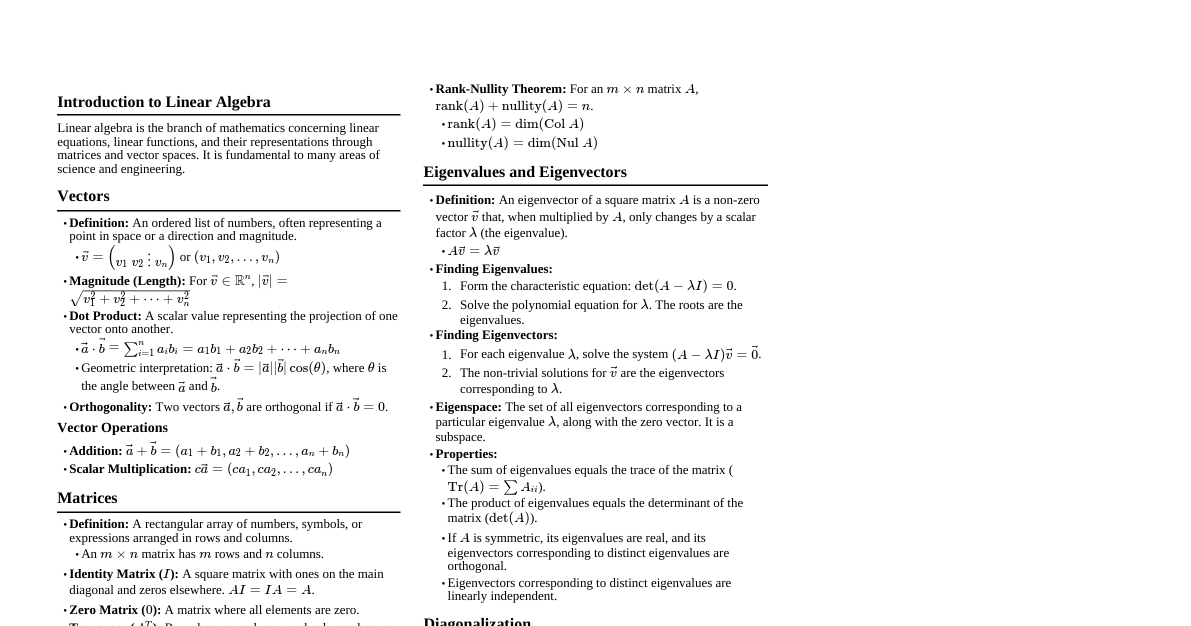

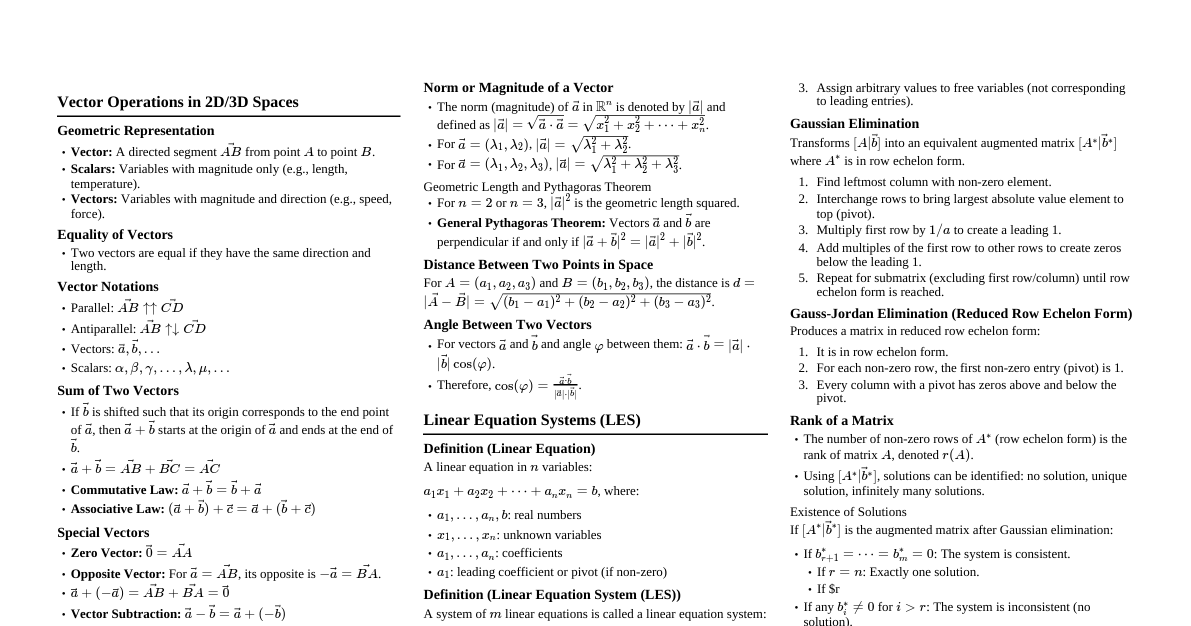

### Vectors

- **Definition:** An ordered list of numbers, e.g., $\vec{v} = \begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}$ or $(v_1, v_2, \dots, v_n)$.
- **Magnitude (L2-norm):** $|\vec{v}| = \sqrt{v_1^2 + v_2^2 + \dots + v_n^2} = \sqrt{\vec{v} \cdot \vec{v}}$
- **Unit Vector:** $\hat{v} = \frac{\vec{v}}{|\vec{v}|}$ (defined for $\vec{v} \neq \vec{0}$).
- **Vector Addition/Subtraction:** Component-wise: $\vec{a} \pm \vec{b} = (a_1 \pm b_1, \dots, a_n \pm b_n)$
- **Scalar Multiplication:** $c\vec{v} = (cv_1, cv_2, \dots, cv_n)$
- **Dot Product:** $\vec{a} \cdot \vec{b} = \sum_{i=1}^n a_i b_i = |\vec{a}||\vec{b}|\cos\theta$, where $\theta$ is the angle between $\vec{a}$ and $\vec{b}$.
  - If $\vec{a} \cdot \vec{b} = 0$, the vectors are orthogonal.
- **Cross Product (3D only):** $\vec{a} \times \vec{b} = (a_2b_3 - a_3b_2, a_3b_1 - a_1b_3, a_1b_2 - a_2b_1)$
  - The result is a vector orthogonal to both $\vec{a}$ and $\vec{b}$.
  - Magnitude: $|\vec{a} \times \vec{b}| = |\vec{a}||\vec{b}|\sin\theta$ (the area of the parallelogram spanned by $\vec{a}$ and $\vec{b}$).

### Matrices

- **Definition:** A rectangular array of numbers. $A \in \mathbb{R}^{m \times n}$ has $m$ rows and $n$ columns.
- **Elements:** $A = [a_{ij}]$, where $a_{ij}$ is the element in row $i$, column $j$.
- **Matrix Addition/Subtraction:** Element-wise (the matrices must have the same dimensions).
- **Scalar Multiplication:** $cA = [ca_{ij}]$
- **Matrix Multiplication:** $C = AB$. If $A \in \mathbb{R}^{m \times n}$ and $B \in \mathbb{R}^{n \times p}$, then $C \in \mathbb{R}^{m \times p}$.
  - $(AB)_{ij} = \sum_{k=1}^n A_{ik}B_{kj}$
  - Not commutative: in general, $AB \neq BA$.
- **Identity Matrix:** $I_n \in \mathbb{R}^{n \times n}$ (diagonal elements are 1, all others 0). For $A \in \mathbb{R}^{m \times n}$, $I_m A = A I_n = A$.
- **Transpose:** $A^T$, with $(A^T)_{ij} = A_{ji}$.
  - $(A^T)^T = A$
  - $(A+B)^T = A^T+B^T$
  - $(AB)^T = B^TA^T$
- **Symmetric Matrix:** $A = A^T$ (so $A$ must be square).
- **Inverse Matrix:** Defined only for square matrices. If $A^{-1}$ exists, $AA^{-1} = A^{-1}A = I$.
- **Determinant (2x2):** $\det \begin{pmatrix} a & b \\ c & d \end{pmatrix} = ad - bc$
- **Inverse (2x2):** $A^{-1} = \frac{1}{\det(A)} \begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$, if $\det(A) \neq 0$.
- A square matrix $A$ is invertible if and only if $\det(A) \neq 0$.
- **Trace:** $\text{tr}(A) = \sum_{i=1}^n a_{ii}$ (the sum of the diagonal elements of a square matrix).

### Determinants

- **Definition:** A scalar value computed from the elements of a square matrix.
- **Properties** (for square matrices $A, B$ of the same size):
  - $\det(I) = 1$
  - $\det(A^T) = \det(A)$
  - $\det(AB) = \det(A)\det(B)$
  - $\det(A^{-1}) = 1/\det(A)$ (when $A$ is invertible)
  - If a matrix has a row or column of zeros, $\det(A) = 0$.
  - If a matrix has two identical rows or columns, $\det(A) = 0$.
  - Swapping two rows (or columns) changes the sign of the determinant.
  - Multiplying a row (or column) by $c$ multiplies the determinant by $c$.
  - Adding a multiple of one row to another does not change the determinant.
- **Cofactor Expansion:** $\det(A) = \sum_{j=1}^n a_{ij}C_{ij}$ along row $i$, or $\det(A) = \sum_{i=1}^n a_{ij}C_{ij}$ along column $j$.
  - $C_{ij} = (-1)^{i+j}M_{ij}$, where the minor $M_{ij}$ is the determinant of the submatrix formed by removing row $i$ and column $j$.

### Systems of Linear Equations

- **Form:** $A\vec{x} = \vec{b}$
- **Augmented Matrix:** $[A|\vec{b}]$
- **Gaussian Elimination:** Use elementary row operations to transform $[A|\vec{b}]$ into row echelon form or reduced row echelon form.
- **Row Echelon Form (REF):**
  1. All nonzero rows are above any rows of all zeros.
  2. The leading entry (pivot) of each nonzero row is in a column to the right of the leading entry of the row above it.
  3. All entries in a column below a leading entry are zeros.
- **Reduced Row Echelon Form (RREF):** REF plus:

  4. Each leading entry is 1.
  5. Each leading 1 is the only nonzero entry in its column.
- **Solutions:**
  - **Unique Solution:** The system is consistent and every column of $A$ contains a pivot, i.e., $\text{rank}(A) = n$ (the number of variables).
  - **No Solution:** The system is inconsistent: row reduction of $[A|\vec{b}]$ produces a row $[0 \; \cdots \; 0 \mid c]$ with $c \neq 0$ (e.g., the equation $0 = 1$).
  - **Infinitely Many Solutions:** The system is consistent but has fewer pivots than $A$ has columns, so free variables exist.

### Vector Spaces & Subspaces

- **Vector Space:** A set $V$ with operations of vector addition and scalar multiplication satisfying 10 axioms (including closure, associativity, commutativity, the existence of a zero vector and of additive inverses, and distributivity).
  - Examples: $\mathbb{R}^n$, the space $P_n$ of polynomials of degree at most $n$, and the space $M_{m \times n}$ of $m \times n$ matrices.
- **Subspace:** A subset $W$ of a vector space $V$ that is itself a vector space under the operations of $V$.
  - **Conditions:**
    1. $W$ contains the zero vector.
    2. $W$ is closed under vector addition (if $\vec{u}, \vec{v} \in W$, then $\vec{u}+\vec{v} \in W$).
    3. $W$ is closed under scalar multiplication (if $\vec{u} \in W$ and $c \in \mathbb{R}$, then $c\vec{u} \in W$).
- **Span:** The set of all linear combinations of a set of vectors $\{\vec{v}_1, \dots, \vec{v}_k\}$, written $\text{span}\{\vec{v}_1, \dots, \vec{v}_k\}$.
- **Linear Independence:** A set of vectors $\{\vec{v}_1, \dots, \vec{v}_k\}$ is linearly independent if $c_1\vec{v}_1 + \dots + c_k\vec{v}_k = \vec{0}$ implies $c_1 = \dots = c_k = 0$.
- **Basis:** A linearly independent set of vectors that spans a vector space $V$.
  - Every basis for $V$ contains the same number of vectors; this number is called the **dimension** of $V$.
- **Dimension:** $\dim(V)$.
  - $\dim(\mathbb{R}^n) = n$.
  - $\dim(P_n) = n+1$ (a basis is $\{1, t, \dots, t^n\}$).
- **Column Space ($Col(A)$):** The span of the columns of $A$. It is a subspace of $\mathbb{R}^m$.
- **Null Space ($Nul(A)$):** The set of all solutions to $A\vec{x} = \vec{0}$. It is a subspace of $\mathbb{R}^n$.
- **Row Space ($Row(A)$):** The span of the rows of $A$. It is a subspace of $\mathbb{R}^n$.
- **Rank-Nullity Theorem:** For $A \in \mathbb{R}^{m \times n}$: $\text{rank}(A) + \dim(Nul(A)) = n$.
  - $\text{rank}(A) = \dim(Col(A)) = \dim(Row(A))$ (the number of pivot columns in the RREF of $A$).
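The elimination procedure and the rank facts above can be checked with a short, plain-Python sketch. It is an illustration under stated assumptions, not a library routine. The names `rref`, `rank_and_nullity`, and `classify` are hypothetical helpers. The sketch uses exact `fractions.Fraction` arithmetic so that "is this entry zero?" tests are exact. Floating-point code would instead need a tolerance and partial pivoting.

```python
from fractions import Fraction


def rref(M):
    """Return (R, pivots): the RREF of M and the indices of its pivot columns.
    Exact Fraction arithmetic, so zero tests need no tolerance."""
    R = [[Fraction(x) for x in row] for row in M]
    m, n = len(R), len(R[0])
    pivots = []
    r = 0                                    # row that receives the next pivot
    for c in range(n):
        if r == m:
            break                            # every row already has a pivot
        # first row at or below r with a nonzero entry in column c
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue                         # column c is not a pivot column
        R[r], R[p] = R[p], R[r]              # row swap
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]      # scale so the leading entry is 1
        for i in range(m):                   # make the pivot the only nonzero in column c
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [x - f * y for x, y in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots


def rank_and_nullity(A):
    """rank(A) = number of pivot columns; dim Nul(A) = n - rank(A) (Rank-Nullity)."""
    _, pivots = rref(A)
    n = len(A[0])
    return len(pivots), n - len(pivots)


def classify(A, b):
    """Classify A x = b as 'none', 'unique', or 'infinite' using the RREF of [A | b]."""
    n = len(A[0])
    _, pivots = rref([row + [bi] for row, bi in zip(A, b)])
    if n in pivots:          # pivot in the augmented column: a row [0 ... 0 | c], c != 0
        return "none"
    return "unique" if len(pivots) == n else "infinite"


A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]
print(rank_and_nullity(A))      # (2, 1): rank 2, so 3 - 2 = 1 free variable
print(classify(A, [1, 2, 1]))   # infinite
print(classify(A, [1, 0, 1]))   # none
```

Here the RREF of `A` is $\begin{pmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \\ 0 & 0 & 0 \end{pmatrix}$. The third variable is free, and $Nul(A)$ is spanned by $(-1, -1, 1)$. This agrees with $\text{rank}(A) + \dim(Nul(A)) = 2 + 1 = 3$.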
### Eigenvalues & Eigenvectors

- **Definition:** For a square matrix $A$, an eigenvector is a vector $\vec{v} \neq \vec{0}$ such that $A\vec{v} = \lambda\vec{v}$ for some scalar $\lambda$, called the eigenvalue.
- **Characteristic Equation:** To find the eigenvalues, solve $\det(A - \lambda I) = 0$.
- **Eigenspace:** For each eigenvalue $\lambda$, the eigenvectors corresponding to $\lambda$, together with the zero vector, form a subspace called the eigenspace $E_\lambda = Nul(A - \lambda I)$.
- **Diagonalization:** A square matrix $A \in \mathbb{R}^{n \times n}$ is diagonalizable if there exist an invertible matrix $P$ and a diagonal matrix $D$ such that $A = PDP^{-1}$.
  - The columns of $P$ are linearly independent eigenvectors of $A$.
  - The diagonal entries of $D$ are the corresponding eigenvalues.
  - $A$ is diagonalizable if and only if it has $n$ linearly independent eigenvectors, i.e., enough eigenvectors to form a basis for $\mathbb{R}^n$. Equivalently, the characteristic polynomial factors completely over $\mathbb{R}$ and, for each eigenvalue, the dimension of its eigenspace equals its algebraic multiplicity.

### Orthogonality

- **Orthogonal Vectors:** $\vec{u} \cdot \vec{v} = 0$.
- **Orthogonal Set:** A set of vectors in which every pair of distinct vectors is orthogonal.
- **Orthogonal Basis:** A basis that is also an orthogonal set.
- **Orthonormal Set:** An orthogonal set in which each vector has unit length.
- **Gram-Schmidt Process:** Converts a basis $\{\vec{x}_1, \dots, \vec{x}_p\}$ for a subspace $W$ into an orthogonal basis $\{\vec{v}_1, \dots, \vec{v}_p\}$ for $W$ (normalize each $\vec{v}_k$ to obtain an orthonormal basis):
  1. $\vec{v}_1 = \vec{x}_1$
  2. $\vec{v}_2 = \vec{x}_2 - \frac{\vec{x}_2 \cdot \vec{v}_1}{\vec{v}_1 \cdot \vec{v}_1}\vec{v}_1$
  3. $\vec{v}_3 = \vec{x}_3 - \frac{\vec{x}_3 \cdot \vec{v}_1}{\vec{v}_1 \cdot \vec{v}_1}\vec{v}_1 - \frac{\vec{x}_3 \cdot \vec{v}_2}{\vec{v}_2 \cdot \vec{v}_2}\vec{v}_2$
  4. In general, $\vec{v}_k = \vec{x}_k - \sum_{j=1}^{k-1} \frac{\vec{x}_k \cdot \vec{v}_j}{\vec{v}_j \cdot \vec{v}_j}\vec{v}_j$ for $k = 2, \dots, p$.
- **Orthogonal Matrix:** A square matrix $U$ such that $U^T U = UU^T = I$. Its columns form an orthonormal basis for $\mathbb{R}^n$.
  - If $U$ is orthogonal, then $U^{-1} = U^T$.
  - For an orthogonal matrix $U$, $|U\vec{x}| = |\vec{x}|$ (it preserves length).
  - $\det(U) = \pm 1$, since $\det(U)^2 = \det(U^T)\det(U) = \det(U^TU) = \det(I) = 1$.

### Least Squares

- **Problem:** Find $\vec{x}$ that minimizes $|A\vec{x} - \vec{b}|^2$. This matters when $A\vec{x} = \vec{b}$ has no exact solution.
- **Normal Equations:** A least-squares solution $\hat{x}$ satisfies $A^T A \hat{x} = A^T \vec{b}$.
- **Solution:** If $A^T A$ is invertible (equivalently, the columns of $A$ are linearly independent), $\hat{x} = (A^T A)^{-1} A^T \vec{b}$.
- **Geometric Interpretation:** $\hat{b} = A\hat{x}$ is the orthogonal projection of $\vec{b}$ onto $Col(A)$, so the residual $\vec{b} - A\hat{x}$ is orthogonal to $Col(A)$.
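The normal equations and their geometric interpretation can be cross-checked with a minimal plain-Python sketch. It assumes the columns of $A$ are linearly independent. `least_squares`, `project_onto_col`, `solve`, `columns`, and `dot` are hypothetical helper names, not a library API. The sketch computes $\hat{x}$ from $A^T A \hat{x} = A^T \vec{b}$. It then rebuilds the projection of $\vec{b}$ independently, by running Gram-Schmidt on the columns of $A$ and summing the projections onto the resulting orthogonal basis. Exact `Fraction` arithmetic keeps the comparison exact.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def columns(A):
    """Columns of A (equivalently, rows of A^T)."""
    return [list(col) for col in zip(*A)]


def solve(M, y):
    """Solve the square system M x = y by Gauss-Jordan elimination (exact Fractions)."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(yi)] for row, yi in zip(M, y)]
    for c in range(n):
        p = next((i for i in range(c, n) if aug[i][c] != 0), None)
        if p is None:
            raise ValueError("matrix is singular")
        aug[c], aug[p] = aug[p], aug[c]
        lead = aug[c][c]
        aug[c] = [v / lead for v in aug[c]]
        for i in range(n):
            if i != c and aug[i][c] != 0:
                f = aug[i][c]
                aug[i] = [a - f * b for a, b in zip(aug[i], aug[c])]
    return [row[-1] for row in aug]


def least_squares(A, b):
    """x_hat from the normal equations (A^T A) x_hat = A^T b.
    Assumes independent columns, so A^T A is invertible."""
    cols = columns(A)
    AtA = [[dot(ci, cj) for cj in cols] for ci in cols]
    Atb = [dot(ci, b) for ci in cols]
    return solve(AtA, Atb)


def project_onto_col(A, b):
    """Orthogonal projection of b onto Col(A) via Gram-Schmidt on A's columns."""
    basis = []
    for x in columns(A):
        v = [Fraction(c) for c in x]
        for u in basis:
            coef = dot(x, u) / dot(u, u)         # (x . v_j) / (v_j . v_j)
            v = [vi - coef * ui for vi, ui in zip(v, u)]
        basis.append(v)                          # nonzero if the columns are independent
    b_hat = [Fraction(0)] * len(b)
    for u in basis:
        coef = dot(b, u) / dot(u, u)
        b_hat = [bh + coef * ui for bh, ui in zip(b_hat, u)]
    return b_hat


# Fit y = c0 + c1 * t to the points (0, 1), (1, 3), (2, 4), (3, 4).
A = [[1, 0], [1, 1], [1, 2], [1, 3]]
b = [1, 3, 4, 4]
x_hat = least_squares(A, b)
A_x_hat = [dot(row, x_hat) for row in A]
print(x_hat)                               # [Fraction(3, 2), Fraction(1, 1)]
print(A_x_hat == project_onto_col(A, b))   # True
```

The fitted line is $y = \tfrac{3}{2} + t$. The residual $\vec{b} - A\hat{x} = (-\tfrac12, \tfrac12, \tfrac12, -\tfrac12)$ is orthogonal to both columns of $A$. Exact fractions sidestep a known floating-point issue: forming $A^T A$ squares the condition number of $A$. Numerical libraries therefore typically solve least-squares problems with a QR or SVD factorization instead.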