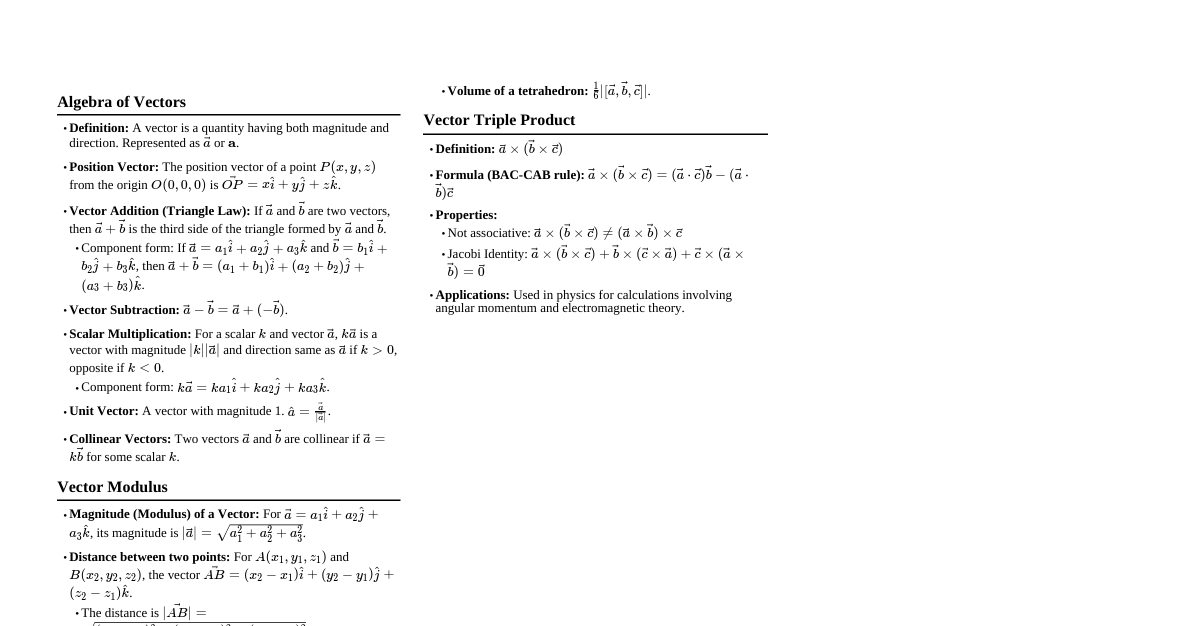

Vector Operations in 2D/3D Spaces Geometric Representation Vector: A directed segment $\vec{AB}$ from point $A$ to point $B$. Scalars: Variables with magnitude only (e.g., length, temperature). Vectors: Variables with magnitude and direction (e.g., speed, force). Equality of Vectors Two vectors are equal if they have the same direction and length. Vector Notations Parallel: $\vec{AB} \uparrow\uparrow \vec{CD}$ Antiparallel: $\vec{AB} \uparrow\downarrow \vec{CD}$ Vectors: $\vec{a}, \vec{b}, \dots$ Scalars: $\alpha, \beta, \gamma, \dots, \lambda, \mu, \dots$ Sum of Two Vectors If $\vec{b}$ is shifted such that its origin corresponds to the end point of $\vec{a}$, then $\vec{a} + \vec{b}$ starts at the origin of $\vec{a}$ and ends at the end of $\vec{b}$. $\vec{a} + \vec{b} = \vec{AB} + \vec{BC} = \vec{AC}$ Commutative Law: $\vec{a} + \vec{b} = \vec{b} + \vec{a}$ Associative Law: $(\vec{a} + \vec{b}) + \vec{c} = \vec{a} + (\vec{b} + \vec{c})$ Special Vectors Zero Vector: $\vec{0} = \vec{AA}$ Opposite Vector: For $\vec{a} = \vec{AB}$, its opposite is $-\vec{a} = \vec{BA}$. $\vec{a} + (-\vec{a}) = \vec{AB} + \vec{BA} = \vec{0}$ Vector Subtraction: $\vec{a} - \vec{b} = \vec{a} + (-\vec{b})$ Multiplication with a Scalar Given vector $\vec{a}$ and scalar $\lambda$: Length: $|\lambda \cdot \vec{a}| = |\lambda| \cdot |\vec{a}|$ If $\lambda > 0$, then $\lambda \cdot \vec{a}$ is in the same direction as $\vec{a}$. If $\lambda If $\lambda = 0$, then $0 \cdot \vec{a} = \vec{0}$. Properties: For vectors $\vec{a}, \vec{b}$ and scalars $\lambda, \mu \in \mathbb{R}$: $(\lambda + \mu) \cdot \vec{a} = \lambda \cdot \vec{a} + \mu \cdot \vec{a}$ $(\lambda \cdot \mu) \cdot \vec{a} = \lambda \cdot (\mu \cdot \vec{a})$ $\lambda \cdot (\vec{a} + \vec{b}) = \lambda \cdot \vec{a} + \lambda \cdot \vec{b}$ Coordinate Representation of Vectors An $n$-dimensional vector is given by $\vec{x} = \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix}$ with $x_1, \dots, x_n \in \mathbb{R}$. Unit vectors in $\mathbb{R}^3$: $\vec{e_1} = \vec{OE_1} = (1,0,0)$, $\vec{e_2} = \vec{OE_2} = (0,1,0)$, $\vec{e_3} = \vec{OE_3} = (0,0,1)$. Any vector $\vec{a} = (a_1, a_2, a_3)$ can be written as $\vec{a} = a_1 \vec{e_1} + a_2 \vec{e_2} + a_3 \vec{e_3}$. Arithmetic Operations with Vectors (Column Notation) Let $\vec{x} = \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix}$ and $\vec{y} = \begin{pmatrix} y_1 \\ \vdots \\ y_n \end{pmatrix}$. Addition/Subtraction: $\vec{x} \pm \vec{y} = \begin{pmatrix} x_1 \pm y_1 \\ \vdots \\ x_n \pm y_n \end{pmatrix}$ Scalar Multiplication: For $\lambda \in \mathbb{R}$, $\lambda \cdot \vec{x} = \begin{pmatrix} \lambda x_1 \\ \vdots \\ \lambda x_n \end{pmatrix}$ Linear Combination For $n$-dimensional vectors $\vec{x_1}, \dots, \vec{x_m}$ and scalars $c_1, \dots, c_m \in \mathbb{R}$, a linear combination is $c_1 \vec{x_1} + \dots + c_m \vec{x_m} = \sum_{i=1}^m c_i \vec{x_i}$. Dot/Inner Product 2D: For $\vec{a} = (a_1, a_2)$ and $\vec{b} = (b_1, b_2)$, $\vec{a} \cdot \vec{b} = a_1 b_1 + a_2 b_2$. 3D: For $\vec{a} = (a_1, a_2, a_3)$ and $\vec{b} = (b_1, b_2, b_3)$, $\vec{a} \cdot \vec{b} = a_1 b_1 + a_2 b_2 + a_3 b_3$. $n$-dimensional: $\vec{a} \cdot \vec{b} = a_1 b_1 + a_2 b_2 + \dots + a_n b_n$. Dot product can only be computed for vectors of the same dimension. Properties: For vectors $\vec{a}, \vec{b}, \vec{c}$ and scalar $\lambda \in \mathbb{R}$: $(\lambda \vec{a}) \cdot \vec{b} = \lambda (\vec{a} \cdot \vec{b})$ $\vec{a} \cdot \vec{b} = \vec{b} \cdot \vec{a}$ (Commutative) $\vec{a} \cdot (\vec{b} + \vec{c}) = \vec{a} \cdot \vec{b} + \vec{a} \cdot \vec{c}$ (Distributive) Orthogonal Vectors: Two vectors $\vec{a}$ and $\vec{b}$ are orthogonal if $\vec{a} \cdot \vec{b} = 0$. Norm or Magnitude of a Vector The norm (magnitude) of $\vec{a}$ in $\mathbb{R}^n$ is denoted by $|\vec{a}|$ and defined as $|\vec{a}| = \sqrt{\vec{a} \cdot \vec{a}} = \sqrt{x_1^2 + x_2^2 + \dots + x_n^2}$. For $\vec{a} = (\lambda_1, \lambda_2)$, $|\vec{a}| = \sqrt{\lambda_1^2 + \lambda_2^2}$. For $\vec{a} = (\lambda_1, \lambda_2, \lambda_3)$, $|\vec{a}| = \sqrt{\lambda_1^2 + \lambda_2^2 + \lambda_3^2}$. Geometric Length and Pythagoras Theorem For $n=2$ or $n=3$, $|\vec{a}|^2$ is the geometric length squared. General Pythagoras Theorem: Vectors $\vec{a}$ and $\vec{b}$ are perpendicular if and only if $|\vec{a} + \vec{b}|^2 = |\vec{a}|^2 + |\vec{b}|^2$. Distance Between Two Points in Space For $A = (a_1, a_2, a_3)$ and $B = (b_1, b_2, b_3)$, the distance is $d = |\vec{A} - \vec{B}| = \sqrt{(b_1 - a_1)^2 + (b_2 - a_2)^2 + (b_3 - a_3)^2}$. Angle Between Two Vectors For vectors $\vec{a}$ and $\vec{b}$ and angle $\varphi$ between them: $\vec{a} \cdot \vec{b} = |\vec{a}| \cdot |\vec{b}| \cos(\varphi)$. Therefore, $\cos(\varphi) = \frac{\vec{a} \cdot \vec{b}}{|\vec{a}| \cdot |\vec{b}|}$. Linear Equation Systems (LES) Definition (Linear Equation) A linear equation in $n$ variables: $a_1 x_1 + a_2 x_2 + \dots + a_n x_n = b$, where: $a_1, \dots, a_n, b$: real numbers $x_1, \dots, x_n$: unknown variables $a_1, \dots, a_n$: coefficients $a_1$: leading coefficient or pivot (if non-zero) Definition (Linear Equation System (LES)) A system of $m$ linear equations is called a linear equation system: $$ \begin{cases} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n = b_1 \\ a_{21}x_1 + a_{22}x_2 + \dots + a_{2n}x_n = b_2 \\ \vdots \\ a_{m1}x_1 + a_{m2}x_2 + \dots + a_{mn}x_n = b_m \end{cases} $$ where $a_{ij}, b_i \in \mathbb{R}$ for $i=1,\dots,m, j=1,\dots,n$. $x_1,\dots,x_n$ are unknowns. Solution Set of a LES A solution is a set of numbers $s_1, \dots, s_n$ that satisfy all equations. The solution set is the set of all possible solutions. Consistency of a LES Consistent: Has at least one solution. Inconsistent: Has no solution. If $b_i = 0$ for all $i$, the LES is homogeneous . Otherwise, it is inhomogeneous . Every system of linear equations has either exactly one solution, infinitely many solutions, or no solution. Equivalent Systems Two systems are equivalent if they have precisely the same solution set. Elementary Row Operations produce equivalent systems: Interchange two equations. Multiply an equation by a non-zero constant. Add a multiple of an equation to another equation. Coefficient Matrix of a LES The matrix $A = \begin{pmatrix} a_{11} & \dots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \dots & a_{mn} \end{pmatrix}$ contains the coefficients. ($m \times n$ matrix). Square Matrix: If $m=n$. Main Diagonal Entries: $a_{11}, a_{22}, \dots, a_{nn}$. Matrix Form of LES $A\vec{x} = \vec{b}$, where $A$ is the coefficient matrix, $\vec{x} = \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix}$ is the vector of unknowns, and $\vec{b} = \begin{pmatrix} b_1 \\ \vdots \\ b_m \end{pmatrix}$ is the right-hand side vector. Augmented Matrix The augmented matrix is $[A|\vec{b}] = \begin{pmatrix} a_{11} & \dots & a_{1n} & b_1 \\ \vdots & \ddots & \vdots & \vdots \\ a_{m1} & \dots & a_{mn} & b_m \end{pmatrix}$. The solution $\vec{x}$ is called the solution vector. Row Echelon Form of a Matrix A matrix is in row echelon form if: All non-zero rows are above any rows of all zeros. Each leading entry (pivot) of a row is in a column to the right of the leading entry of the row above it. All entries below a leading entry in a column are zeros. Back-Substitution for Solving LES Solve for leading variables. Substitute calculated variables into equations above. Assign arbitrary values to free variables (not corresponding to leading entries). Gaussian Elimination Transforms $[A|\vec{b}]$ into an equivalent augmented matrix $[A^*|\vec{b}^*]$ where $A^*$ is in row echelon form. Find leftmost column with non-zero element. Interchange rows to bring largest absolute value element to top (pivot). Multiply first row by $1/a$ to create a leading 1. Add multiples of the first row to other rows to create zeros below the leading 1. Repeat for submatrix (excluding first row/column) until row echelon form is reached. Gauss-Jordan Elimination (Reduced Row Echelon Form) Produces a matrix in reduced row echelon form: It is in row echelon form. For each non-zero row, the first non-zero entry (pivot) is 1. Every column with a pivot has zeros above and below the pivot. Rank of a Matrix The number of non-zero rows of $A^*$ (row echelon form) is the rank of matrix $A$, denoted $r(A)$. Using $[A^*|\vec{b}^*]$, solutions can be identified: no solution, unique solution, infinitely many solutions. Existence of Solutions If $[A^*|\vec{b}^*]$ is the augmented matrix after Gaussian elimination: If $b^*_{r+1} = \dots = b^*_m = 0$: The system is consistent. If $r=n$: Exactly one solution. If $r If any $b^*_i \neq 0$ for $i > r$: The system is inconsistent (no solution). Homogeneous Linear Equation Systems $A\vec{x} = \vec{0}$ (right-hand side is the null vector). Always has the trivial solution $\vec{x} = \vec{0}$. If $r=n$: Only the trivial solution. If $r If $n>m$ (more unknowns than equations), it has infinitely many solutions. Square Linear Equation Systems A LES with $m=n$ (number of equations = number of unknowns). Has a unique solution if and only if $r=n$. Can be solved by Gauss-Jordan elimination to directly obtain the solution vector $\vec{x}$, where $[I|\vec{x}]$ is the reduced augmented matrix. Matrices Definition (Matrix) A rectangular array of $m \times n$ numbers, $A = \begin{pmatrix} a_{11} & \dots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \dots & a_{mn} \end{pmatrix}$. $m$: number of rows. $n$: number of columns. $a_{ij}$: entry in row $i$, column $j$. $M_{m \times n}$: set of all matrices of size $m \times n$. A vector is a matrix with one column (column vector) or one row (row vector). Equality of Matrices Two matrices $A$ and $B$ are equal if they have the same size and $a_{ij} = b_{ij}$ for all $i, j$. Matrix Operations: Addition and Subtraction For $A = (a_{ij})$ and $B = (b_{ij})$ of the same size $m \times n$: $A \pm B = (a_{ij} \pm b_{ij})$ (element-wise operation). Matrix Operations: Multiplication with a Scalar For $A = (a_{ij})$ of size $m \times n$ and scalar $\lambda \in \mathbb{R}$: $\lambda \cdot A = (\lambda a_{ij})$ (element-wise multiplication). Properties (Calculation Rules) For $m \times n$ matrices $A, B, C$ and scalars $\lambda, \mu \in \mathbb{R}$: $A + B = B + A$ (Commutative) $(A + B) + C = A + (B + C)$ (Associative) $\lambda (A + B) = \lambda A + \lambda B$ (Distributive) $(\lambda + \mu) A = \lambda A + \mu A$ (Distributive) Matrix Operations: Matrix Multiplication For $A$ of size $m \times n$ and $B$ of size $n \times p$, their product $C = AB$ is an $m \times p$ matrix with entries $c_{ij} = \sum_{k=1}^n a_{ik} b_{kj}$. $AB$ is defined only if the number of columns of $A$ equals the number of rows of $B$. The product of an $m \times 1$ column vector and a $1 \times m$ row vector is an $m \times m$ matrix. The product of a $1 \times m$ row vector and an $m \times 1$ column vector is a $1 \times 1$ matrix (scalar, dot product). Missing Calculation Rules for Matrix Multiplication Not commutative: $AB \neq BA$ in general. No zero law for products: $AB = 0$ does not imply $A=0$ or $B=0$. No cancellation law: $AC = BC$ and $C \neq 0$ does not imply $A=B$. Matrix Operations: Transposing Matrices The transpose of an $m \times n$ matrix $A$, denoted $A^T$, is an $n \times m$ matrix where the rows of $A$ become the columns of $A^T$ (i.e., $(A^T)_{ij} = A_{ji}$). Properties of Transposes For matrices $A, B$ and scalar $c$: $(A^T)^T = A$ $(A + B)^T = A^T + B^T$ $(cA)^T = cA^T$ $(AB)^T = B^T A^T$ Some Special Matrices Zero Matrix ($0$): All entries are 0. ($a_{ij}=0$ for all $i, j$). Properties of Zero Matrices: $A + 0_{m \times n} = A$ $A + (-A) = 0_{m \times n}$ If $cA = 0_{m \times n}$, then $c=0$ or $A=0_{m \times n}$. Square Matrix: An $n \times n$ matrix. Main diagonal entries are $a_{ii}$. Diagonal Matrix: A square matrix where $d_{ij} = 0$ for $i \neq j$. $D = \begin{pmatrix} d_{11} & \dots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \dots & d_{nn} \end{pmatrix}$. Identity Matrix ($I$): A diagonal matrix with main diagonal entries equal to 1. ($d_{ii}=1$). Denoted $I_{n \times n}$ or $I$. Upper Triangular Matrix: A square matrix where $d_{ij} = 0$ for $i > j$. Lower Triangular Matrix: A square matrix where $d_{ij} = 0$ for $i Symmetric Matrix: A square matrix $A$ where $a_{ij} = a_{ji}$ for all $i, j$. ($A = A^T$). The Inverse of a Matrix Regular Matrix: An $n \times n$ matrix $A$ is regular if $rank(A) = n$. Singular Matrix: An $n \times n$ matrix $A$ is singular if $rank(A) A regular matrix implies $rank(A) = n$, which implies a unique solution for $A\vec{x} = \vec{b}$ and only the trivial solution for $A\vec{x} = \vec{0}$. Invertible Matrix: An $n \times n$ matrix $A$ is invertible if there exists an $n \times n$ matrix $X$ such that $AX = XA = I$. $X$ is called the inverse of $A$, denoted $A^{-1}$. $A$ is regular $\iff A$ is invertible. If $A$ is invertible, $A^{-1}$ is uniquely determined. $A^{-1}$ is regular, and $A A^{-1} = A^{-1} A = I$. Properties of Inverse Matrices For invertible matrices $A, B$ and non-zero scalar $c$: $(A^{-1})^{-1} = A$ $(A^k)^{-1} = (A^{-1})^k$ for positive integer $k$. $(cA)^{-1} = \frac{1}{c} A^{-1}$ $(A^T)^{-1} = (A^{-1})^T$ $(AB)^{-1} = B^{-1}A^{-1}$ Power of a Square Matrix For a square matrix $A \in M_{n \times n}$: $A^0 = I$ $A^k = A \cdot A \dots A$ ($k$ times) for $k \in \mathbb{N}$. $A^r A^s = A^{r+s}$ $(A^r)^s = A^{rs}$ If $D = \begin{pmatrix} d_1 & \dots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \dots & d_n \end{pmatrix}$ is a diagonal matrix, then $D^k = \begin{pmatrix} d_1^k & \dots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \dots & d_n^k \end{pmatrix}$. Computation of the Inverse To find $A^{-1}$: Augment $A$ with the identity matrix: $[A|I]$. Perform Gauss-Jordan elimination on $[A|I]$. The result will be $[I|A^{-1}]$. If $A$ cannot be row reduced to $I$, then $A$ is singular and not invertible. Inverse Matrix for 2x2 Case For $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $A^{-1} = \frac{1}{\det(A)} \begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$, provided $\det(A) = ad-bc \neq 0$. Formula for the Inverse Matrix (using Adjoint) If $A$ is an invertible matrix and $C$ is the cofactor matrix (entries $C_{ij}$ are cofactors of $a_{ij}$), then $A^{-1} = \frac{1}{\det(A)} C^T$. The matrix $C^T$ is called the adjoint matrix of $A$, denoted $\text{adj}(A)$. So $A^{-1} = \frac{1}{\det(A)} \text{adj}(A)$. Matrix-Vector Equation for LES A linear system can be written as $A\vec{x} = \vec{b}$. If $A$ is an invertible $n \times n$ matrix, then the LES has the unique solution $\vec{x} = A^{-1}\vec{b}$. Determinants and Applications Definition (Determinant) A number assigned to each square matrix. Can be used for: Invertibility existence of a matrix. Solution existence of a linear system. Finding the inverse using cofactors. Solving linear systems via Cramer's rule. Measuring dependence of $A^{-1}\vec{b}$ on $\vec{b}$. Measuring how a linear transformation changes area/volume. Determinant of a $2 \times 2$ Matrix For $A = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}$, $\det(A) = a_{11}a_{22} - a_{12}a_{21}$. Determinant of a $3 \times 3$ Matrix For $A = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix}$, $\det(A) = a_{11}a_{22}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{21}a_{32} - a_{13}a_{22}a_{31} - a_{11}a_{23}a_{32} - a_{12}a_{21}a_{33}$. This can be computed using Sarrus' rule or cofactor expansion . Minor and Cofactor Minor ($M_{ij}$): The determinant of the $(n-1) \times (n-1)$ matrix obtained by removing row $i$ and column $j$ from $A$. Cofactor ($C_{ij}$): $C_{ij} = (-1)^{i+j} M_{ij}$. Cofactor Expansion (for $\det(A)$ of an $n \times n$ matrix) Along row $i$: $\det(A) = \sum_{j=1}^n a_{ij} C_{ij} = a_{i1} C_{i1} + a_{i2} C_{i2} + \dots + a_{in} C_{in}$. Along column $j$: $\det(A) = \sum_{i=1}^n a_{ij} C_{ij} = a_{1j} C_{1j} + a_{2j} C_{2j} + \dots + a_{nj} C_{nj}$. Choose row/column with most zeros to simplify calculations. Determinant of Triangular and Diagonal Matrices If $A$ is a triangular matrix (upper or lower) or a diagonal matrix, $\det(A)$ is the product of its main diagonal entries. Basic Properties of Determinants For square matrices $A, B$ and scalar $k$: If $A$ contains a zero row or column, $\det(A) = 0$. $\det(A^T) = \det(A)$. If $A$ is a triangular matrix, $\det(A)$ is the product of its diagonal entries. $\det(I) = 1$. $\det(AB) = \det(A)\det(B)$. $\det(A^{-1}) = \frac{1}{\det(A)}$. In general, $\det(A+B) \neq \det(A) + \det(B)$. Row Operation Rules for Determinants Let $A$ be a square matrix: If a multiple of one row (column) is added to another row (column) to produce $B$, then $\det(B) = \det(A)$. If two rows (columns) of $A$ are interchanged to produce $B$, then $\det(B) = -\det(A)$. If one row (column) of $A$ is multiplied by $k$ to produce $B$, then $\det(B) = k \det(A)$. Zero Determinants If $A$ is a square matrix, then $\det(A) = 0$ if: An entire row (column) consists of zeros. Two rows (columns) are equal. One row (column) is a multiple of another row (column). One row (column) is a linear combination of other rows (columns). Cramer's Rule If $A\vec{x} = \vec{b}$ is a LES with $A$ an invertible square matrix ($\det(A) \neq 0$), then the unique solution is given by $x_k = \frac{\det(A_k)}{\det(A)}$, where $A_k$ is the matrix obtained by replacing the $k$-th column of $A$ with $\vec{b}$. Useful for theoretical calculations, but inefficient for large matrices (except $2 \times 2$ or $3 \times 3$). Gaussian/Gauss-Jordan elimination are preferred. Vector Spaces Introduction Vector spaces generalize the properties of $\mathbb{R}^n$. They are used to tie together concepts like rank, null and column spaces, and linear transformations. Definition (Vector Space) A set $V$ with two operations (vector addition $+$ and scalar multiplication $\cdot$) satisfying the following axioms for all $\vec{u}, \vec{v}, \vec{w} \in V$ and scalars $\lambda, \mu \in \mathbb{R}$: $\vec{u} + \vec{v} \in V$ (Closure under addition) $\vec{u} + \vec{v} = \vec{v} + \vec{u}$ (Commutativity of addition) $(\vec{u} + \vec{v}) + \vec{w} = \vec{u} + (\vec{v} + \vec{w})$ (Associativity of addition) There exists a zero vector $\vec{0} \in V$ such that $\vec{u} + \vec{0} = \vec{u}$. For each $\vec{u} \in V$, there exists an opposite vector $-\vec{u} \in V$ such that $\vec{u} + (-\vec{u}) = \vec{0}$. $\lambda \vec{u} \in V$ (Closure under scalar multiplication) $\lambda (\vec{u} + \vec{v}) = \lambda \vec{u} + \lambda \vec{v}$ (Distributivity over vector addition) $(\lambda + \mu) \vec{u} = \lambda \vec{u} + \mu \vec{u}$ (Distributivity over scalar addition) $\lambda (\mu \vec{u}) = (\lambda \mu) \vec{u}$ (Associativity of scalar multiplication) $1 \cdot \vec{u} = \vec{u}$ (Identity for scalar multiplication) Examples of Vector Spaces $n$-tuple space $\mathbb{R}^n$. Matrix space $M_{m \times n}$. Set of all real polynomials of degree not exceeding $n$, $P_n(x)$. Set of all solutions to a homogeneous linear equation system. Set of all continuous functions on a given domain. Subspaces A subset $W$ of a vector space $V$ is a subspace if $W$ is non-empty and for any $\vec{u}, \vec{v} \in W$ and scalar $\lambda \in \mathbb{R}$: $\vec{u} + \vec{v} \in W$ (Closure under addition) $\lambda \vec{u} \in W$ (Closure under scalar multiplication) Trivial subspaces: $\{\vec{0}\}$ and $V$ itself. The intersection of two subspaces is a subspace. Linear Combinations and Spanning Sets A linear combination of vectors $\vec{v_1}, \dots, \vec{v_k}$ is any expression $c_1 \vec{v_1} + \dots + c_k \vec{v_k}$. The set of all possible linear combinations of vectors in $S = \{\vec{v_1}, \dots, \vec{v_k}\}$ is called the span of $S$ , denoted $\text{span}(S)$. $\text{span}(S)$ is always a subspace. If $V = \text{span}(S)$, then $S$ is a spanning set for $V$. Linear Independence and Linear Dependence A set of vectors $S = \{\vec{v_1}, \dots, \vec{v_k}\}$ is: Linearly Independent: If the equation $c_1 \vec{v_1} + \dots + c_k \vec{v_k} = \vec{0}$ has only the trivial solution ($c_1 = \dots = c_k = 0$). Linearly Dependent: If the equation $c_1 \vec{v_1} + \dots + c_k \vec{v_k} = \vec{0}$ has a non-trivial solution (at least one $c_i \neq 0$). Notes on Linear Dependence The empty set $\emptyset$ is linearly independent. If $\vec{0} \in S$, then $S$ is linearly dependent. If $S_1 \subseteq S_2$: If $S_1$ is linearly dependent, $S_2$ is linearly dependent. If $S_2$ is linearly independent, $S_1$ is linearly independent. Spanning Set Theorem: If $S$ is a spanning set for $H$, and some vector in $S$ is a linear combination of others, then removing that vector still spans $H$. If $H \neq \{\vec{0}\}$, some subset of $S$ is a basis for $H$. Basis and Dimension A set $S = \{\vec{v_1}, \dots, \vec{v_n}\}$ is a basis for $V$ if: $S$ spans $V$. $S$ is linearly independent. Standard Basis for $\mathbb{R}^n$: $\{\vec{e_1}, \dots, \vec{e_n}\}$. Properties of Basis: If $S$ is a basis for $V$, every vector in $V$ can be written as a unique linear combination of vectors in $S$. If $S$ is a basis for $V$ with $|S|=n$, any set containing more than $n$ vectors in $V$ is linearly dependent. All bases for a finite-dimensional vector space have the same number of vectors. Dimension ($\dim(V)$): The number of vectors in a basis for $V$. Finite-Dimensional: A vector space with a finite basis. Infinite-Dimensional: A vector space without a finite basis. $\dim(\{\vec{0}\}) = 0$. If $\dim(V) = n$: If $S$ spans $V$, then $|S| \geq n$. If $S$ is linearly independent, then $|S| \leq n$. If $S$ is a basis, then $|S| = n$. If $W$ is a subspace of $V$, then $\dim(W) \leq n$. Null and Column Spaces Null Space ($\text{Nul}(A)$): The set of all solutions to the homogeneous equation $A\vec{x} = \vec{0}$. $\text{Nul}(A) = \{\vec{x} \in \mathbb{R}^n : A\vec{x} = \vec{0}\}$. $\text{Nul}(A)$ is a subspace of $\mathbb{R}^n$. Finding a Basis for $\text{Nul}(A)$ and $\dim(\text{Nul}(A))$: Find the reduced echelon form of $[A|\vec{0}]$. Write dependent variables in terms of free variables. Write the general solution. Decompose the solution vector into a linear combination where coefficients are free variables. These vectors form a basis for $\text{Nul}(A)$. $\dim(\text{Nul}(A))$ is the number of free variables. Column Space ($\text{Col}(A)$): The set of all linear combinations of the columns of $A$. $\text{Col}(A) = \text{span}\{\vec{a_1}, \dots, \vec{a_n}\}$. $\text{Col}(A)$ is a subspace of $\mathbb{R}^m$. A basis for $\text{Col}(A)$ is formed by the columns of $A$ corresponding to the pivot columns in the row echelon form $A^*$. Row Space Row Space ($\text{Row}(A)$): The subspace spanned by the rows of $A$. $\dim(\text{Col}(A)) = \dim(\text{Row}(A))$. This is the rank of $A$, $r(A)$. Rank-Nullity Theorem: $r(A) + \dim(\text{Nul}(A)) = n$ (number of columns). $r(A^T) + \dim(\text{Nul}(A^T)) = m$ (number of rows). Theorem (Invertible Matrix Characterization) For an $n \times n$ matrix $A$, the following are equivalent: Columns of $A$ form a basis of $\mathbb{R}^n$. $\text{Col}(A) = \mathbb{R}^n$. $\dim(\text{Col}(A)) = n$. $r(A) = n$. $\text{Nul}(A) = \{\vec{0}\}$. $\dim(\text{Nul}(A)) = 0$. Linear Mappings Definition (Linear Mapping/Transformation) A mapping $T: V \to W$ between vector spaces $V$ and $W$ (over $\mathbb{R}$) is linear if for any $\vec{u}, \vec{v} \in V$ and scalar $c \in \mathbb{R}$: $T(\vec{u} + \vec{v}) = T(\vec{u}) + T(\vec{v})$ $T(c\vec{u}) = cT(\vec{u})$ Examples Projection: $T(x,y,z) = (x,y)$. Inner product: $T(x,y,z) = ax+by+cz$. Linear mapping given by a matrix: $T(\vec{v}) = A\vec{v}$. Transformation from $M_{m \times n}$ to $M_{n \times m}$: $T(A) = A^T$. Differentiation: $T(f) = f'$. Properties of Linear Mappings For a linear mapping $T: V \to W$: $T(\vec{0}) = \vec{0}$ $T(-\vec{v}) = -T(\vec{v})$ $T(c_1 \vec{v_1} + \dots + c_k \vec{v_k}) = c_1 T(\vec{v_1}) + \dots + c_k T(\vec{v_k})$ A linear mapping is well-defined by its images of a basis. Kernel and Image of a Linear Mapping Kernel ($\text{ker}(T)$): The set of all vectors $\vec{v} \in V$ such that $T(\vec{v}) = \vec{0}$. $\text{ker}(T) = \{\vec{v} \in V : T(\vec{v}) = \vec{0}\}$. $\text{ker}(T)$ is a subspace of $V$ (also called nullspace). If $T(\vec{x}) = A\vec{x}$, then $\text{ker}(T) = \text{Nul}(A)$. Coordinate Systems and Basis Changes Coordinates of a Vector Relative to a Basis: Given a basis $B = \{\vec{v_1}, \dots, \vec{v_n}\}$ for $V$, any vector $\vec{v} \in V$ can be uniquely written as $\vec{v} = \lambda_1 \vec{v_1} + \dots + \lambda_n \vec{v_n}$. The coordinates of $\vec{v}$ relative to $B$ are $[\vec{v}]_B = \begin{pmatrix} \lambda_1 \\ \vdots \\ \lambda_n \end{pmatrix}$. Change-of-Coordinates Matrix: If $B = \{\vec{b_1}, \dots, \vec{b_n}\}$ is a basis for $\mathbb{R}^n$, the matrix $P_B = [\vec{b_1} \dots \vec{b_n}]$ is the change-of-coordinates matrix from $B$ to the standard basis. Then $\vec{v} = P_B [\vec{v}]_B$. The change-of-coordinates matrix from the standard basis to $B$ is $P_B^{-1}$. So $[\vec{v}]_B = P_B^{-1} \vec{v}$. Change of Basis: If $B$ and $C$ are two bases for $\mathbb{R}^n$, and $P_B$ and $P_C$ are their respective change-of-coordinates matrices to the standard basis, then the change-of-coordinates matrix from $B$ to $C$ is $P_C^{-1} P_B$. So $[\vec{v}]_C = P_C^{-1} P_B [\vec{v}]_B$. Matrix of a Linear Transformation Given linear transformation $T: V \to W$, and bases $B = \{\vec{v_1}, \dots, \vec{v_n}\}$ for $V$ and $C = \{\vec{w_1}, \dots, \vec{w_m}\}$ for $W$. The matrix representation of $T$ associated to bases $B$ and $C$ is $A = [[T(\vec{v_1})]_C \dots [T(\vec{v_n})]_C]$. Then $[T(\vec{x})]_C = A [\vec{x}]_B$. General Algebra - Propositional Logic Statements A statement is an assertion that is either true (T) or false (F). The truth value is T or F. Compound Statements and Connectives Statements formed by combining simpler statements using connectives. Connective Symbol Formal Name not $\neg$ Negation and $\land$ Conjunction or $\lor$ Disjunction if ... then $\implies$ Conditional ... if and only if ... $\iff$ Biconditional "Or" is inclusive in logic. Conditional Connective ($p \implies q$) If $p$ then $q$ $q$ if $p$ $p$ is sufficient for $q$ $q$ is a necessary condition for $p$ $p$ only if $q$ Truth Tables Summarize the truth value of a compound statement based on the truth values of its simple components. $p$ $\neg p$ T F F T $p$ $q$ $p \lor q$ $p \land q$ $p \implies q$ $p \iff q$ T T T T T T T F T F F F F T T F T F F F F F T T $p \lor q$ is T unless both $p$ and $q$ are F. $p \land q$ is F unless both $p$ and $q$ are T. Tautology and Contradiction A statement that is always true is called logically true or a tautology . A statement that is always false is called logically false or a contradiction . Logical Equivalence Statements $r$ and $s$ are logically equivalent if their truth tables are identical. The statements $r$ and $s$ are equivalent if and only if $r \iff s$ is a tautology. Example: $p \implies q$ is logically equivalent to $\neg p \lor q$. Implication We say that $r$ implies $s$ if $s$ is true whenever $r$ is true. If $r$ implies $s$ then we write $r \implies s$. Alternatively, $r$ implies $s$ if and only if the statement $r \implies s$ is a tautology. Order of Precedence Operator Precedence $\neg$ 1 $\land$ 2 $\lor$ 3 $\implies$ 4 $\iff$ 5 $\neg p \land q$ is read as $(\neg p) \land q$. $p \land q \lor r$ is read as $(p \land q) \lor r$. $p \lor q \implies r$ is read as $(p \lor q) \implies r$. $p \implies q \iff r$ is read as $(p \implies q) \iff r$. Propositional Logic Laws Commutative Laws: $p \land q \iff q \land p$; $p \lor q \iff q \lor p$. Associative Laws: $p \land (q \land r) \iff (p \land q) \land r$; $p \lor (q \lor r) \iff (p \lor q) \lor r$. Distributive Laws: $p \land (q \lor r) \iff (p \land q) \lor (p \land r)$; $p \lor (q \land r) \iff (p \lor q) \land (p \lor r)$. Idempotent Laws: $p \land p \iff p$; $p \lor p \iff p$. Absorption Laws: $p \land (p \lor q) \iff p$; $p \lor (p \land q) \iff p$. Complementation Laws: $p \land \neg p \iff F$; $p \lor \neg p \iff T$. Law of Involution (Double Negation): $\neg\neg p \iff p$. De Morgan's Laws: $\neg(p \land q) \iff \neg p \lor \neg q$; $\neg(p \lor q) \iff \neg p \land \neg q$. Identity Elements: $p \lor T \iff T$; $p \lor F \iff p$; $p \land T \iff p$; $p \land F \iff F$. Rules of Inference An argument is valid if the conclusion follows from the truth of the premises. An argument is valid if it is based on a tautology. Addition: $p \implies (p \lor q)$ Simplification: $(p \land q) \implies p$ Modus Ponens: $p, p \implies q \implies q$ Modus Tollens: $\neg q, p \implies q \implies \neg p$ Hypothetical Syllogism: $p \implies q, q \implies r \implies p \implies r$ Disjunctive Syllogism: $p \lor q, \neg p \implies q$ Fallacies Affirming the Conclusion: $[(p \implies q) \land q] \implies p$ (invalid) Denying the Hypothesis: $[(p \implies q) \land \neg p] \implies \neg q$ (invalid) General Algebra: Set Theory Review and Relations Sets A set $L$ is a collection of objects, called elements. Ex: $L = \{a, b, c, d\}$. Notation: $a \in L$ (belongs to), $z \notin L$ (does not belong to). Order and recurrence are not important: $\{red, blue, red\} = \{red, blue\}$. Two sets $A$ and $B$ are equal if they have the same elements. Empty set: $\emptyset = \{\}$. Subset: $A \subseteq B$ if all elements of $A$ are in $B$. Proper subset: $A \subset B$ if $A \subseteq B$ and $A \neq B$. $A = B \iff A \subseteq B \text{ and } B \subseteq A$. $|\text{A}|$ denotes the number of elements in a finite set $A$. Characterization of Elements Infinite sets: $N = \{0, 1, 2, \dots\}$. Set builder notation: $B = \{x | x \text{ has property P}\}$. Ex: $B = \{x | x \in N \text{ and } x \text{ not divisible by } 2\}$. Set Operations Complement: $A^c = \{x | x \in U \text{ and } x \notin A\}$, where $U$ is the universal set. Union: $A \cup B = \{x | x \in A \text{ or } x \in B\}$. Ex: $\{1, 2, 3\} \cup \{3, 4, 5\} = \{1, 2, 3, 4, 5\}$. Intersection: $A \cap B = \{x | x \in A \text{ and } x \in B\}$. Ex: $\{1, 2, 3\} \cap \{3, 4, 5\} = \{3\}$. Difference (Relative Complement): $A - B = A \setminus B = \{x | x \in A \text{ and } x \notin B\}$. Ex: $\{1, 2, 3\} - \{3, 4, 5\} = \{1, 2\}$. Disjoint Sets: $A \cap B = \emptyset$. Cartesian Product $A \times B = \{(a, b) | a \in A \text{ and } b \in B\}$. Ex: $R \times R = R^2$ (real plane). Ex: $A = \{1, 2, 3\}$, $B = \{a, b\}$. $A \times B = \{(1, a), (1, b), (2, a), (2, b), (3, a), (3, b)\}$. $|A \times B| = |A| \cdot |B|$. Computer Representation of Sets (Bit Strings) If $U = \{a_1, \dots, a_n\}$, a subset $A$ is represented by a bit string of length $n$, where the $i$-th bit is 1 if $a_i \in A$, else 0. Intersection ($A \cap B$) corresponds to bitwise AND. Union ($A \cup B$) corresponds to bitwise OR. Relations What is a Relation? A (binary) relation from set $A$ to set $B$ is a subset of $A \times B$. A relation in $A$ is a subset of $A \times A$. Examples: Order relation $\le$ on real numbers: $R = \{(i, j) | i, j \in R \text{ and } i \le j\}$. Student-Grade relation: $R = \{(Minh, 1), (Binh, 2), (Trung, 4)\}$. Congruence relation $\equiv \pmod n$: $R = \{(a, b) \in Z \times Z : a \equiv b \pmod n\}$. "Divide" relation $\mid$: $R = \{(a, b) \in N \times N : a \mid b\}$. Properties of Relations (on set $A$) Reflexive: $(a, a) \in R$ for all $a \in A$. Symmetric: If $(a, b) \in R$, then $(b, a) \in R$. Antisymmetric: If $(a, b) \in R$ and $a \neq b$, then $(b, a) \notin R$. (Equivalently, if $(a, b) \in R$ and $(b, a) \in R$, then $a=b$). Transitive: If $(a, b) \in R$ and $(b, c) \in R$, then $(a, c) \in R$. Equivalence Relations An equivalence relation is a relation that is reflexive, symmetric, and transitive. Such a relation partitions the set $A$ into disjoint subsets called equivalence classes . Relation Representations Directed Graphs (Digraphs): Vertices represent elements, directed edges represent related pairs. Zero-One Matrices: For a relation $R$ from $\{a_1, \dots, a_m\}$ to $\{b_1, \dots, b_n\}$, the matrix $M_R = [a_{ij}]$ has $a_{ij}=1$ if $(a_i, b_j) \in R$, else 0. Operations on Zero-One Matrices For $A=[a_{ij}]$ and $B=[b_{ij}]$ of same size: Meet: $A \land B = [a_{ij} \land b_{ij}]$ (element-wise logical AND). Join: $A \lor B = [a_{ij} \lor b_{ij}]$ (element-wise logical OR). Symmetric Difference: $A \oplus B = [a_{ij} \oplus b_{ij}]$ (element-wise XOR). Complement: $\bar{A} = [\bar{a}_{ij}]$. Boolean Product For $A$ ($m \times n$) and $B$ ($n \times p$), $A \odot B$ is an $m \times p$ matrix with entries $c_{ij} = (a_{i1} \land b_{1j}) \lor (a_{i2} \land b_{2j}) \lor \dots \lor (a_{in} \land b_{nj})$. Boolean Powers For a square matrix $A$, $A^{[r]} = A \odot A \odot \dots \odot A$ ($r$ times). $A^{[0]} = I_n$. Operations on Relations and Boolean Matrices Let $M_R$ and $M_S$ be representing matrices for relations $R$ and $S$ on $A$. $M_{R \cup S} = M_R \lor M_S$ $M_{R \cap S} = M_R \land M_S$ $M_{\bar{R}} = \bar{M}_R$ $M_{R^{-1}} = (M_R)^T$ $M_{S \circ R} = M_R \odot M_S$ (composition) $M_{R^n} = M_R^{[n]}$ Closures of Relations Reflexive closure: $R \cup \Delta$, where $\Delta = \{(a, a) | a \in A\}$. Symmetric closure: $R \cup R^{-1}$. Transitive closure: $R^* = R \cup R^2 \cup R^3 \cup \dots$. For a relation on $n$ elements, $R^* = R \cup R^2 \cup \dots \cup R^n$. Using matrices: $M_{R^*} = M_R \lor M_R^{[2]} \lor \dots \lor M_R^{[n]}$. Warshall's Algorithm (Transitive Closure) Constructs a sequence of matrices $M_0, M_1, \dots, M_n$ recursively: $M_0 = M_R$. $M_k = M_{k-1} \lor (\text{Boolean product of } k\text{-th column and } k\text{-th row of } M_{k-1})$. $M_n$ is the matrix of the transitive closure. Partial Ordering Relations Definition A binary relation $R$ on set $A$ is a partial ordering if $R$ is reflexive, antisymmetric, and transitive. $(A, R)$ is a partially ordered set or poset . Notation: $(A, \preceq)$. Examples: $(\mathbb{R}, \le)$ is a poset. $(\mathbb{N}^*, \mid)$ (divisibility) is a poset. $(2^A, \subseteq)$ (power set with containment) is a poset. Comparable and Linearly Ordered/Totally Ordered Elements $a, b \in A$ are comparable if $a \preceq b$ or $b \preceq a$. A poset $(A, \preceq)$ is linearly ordered (or totally ordered ) if any two arbitrary elements are comparable. Cartesian Product of Posets (Lexicographic Order) Given posets $(A, \preceq_A)$ and $(B, \preceq_B)$, define lexicographic order $\preceq$ on $A \times B$: $(a_1, b_1) \preceq (a_2, b_2)$ if $a_1 \preceq_A a_2$ OR ($a_1 = a_2$ AND $b_1 \preceq_B b_2$). This extends to $n$ posets. The Cartesian product of posets with lexicographic order is a poset. If $A$ and $B$ are linearly ordered, then $A \times B$ is linearly ordered. Hasse Diagram A graphical representation of a finite poset $(S, \preceq)$: Remove all loops $(a, a)$. Remove all edges $(x, y)$ if there is a $z$ such that $x \preceq z \preceq y$. Arrange edges so initial vertex is below terminal vertex. Remove arrows. An element $b \in A$ covers an element $a \in A$ if $b \preceq a$ and there is no $c \in A$ such that $a \preceq c \preceq b$. The Hasse diagram uses edges to represent covering relations. n-ary Relations and Their Applications An n-ary relation on sets $A_1, \dots, A_n$ is a subset of $A_1 \times \dots \times A_n$. Used in databases to represent records. Primary Key: A domain whose value uniquely determines an n-tuple. Composite Key: A set of domains that together uniquely determine n-tuples. Operations on n-ary Relations Selection Operator ($S_C$): Filters records based on a condition $C$. Projection Operator ($P$): Creates a new relation by selecting specific columns (domains). Join Operator ($J$): Combines two relations based on common domains. General Algebra: Number Theory and Cryptography The Integers and Division $a \mid b$ (a divides b) if there exists an integer $m$ such that $b = am$. Properties: If $a \mid b$ and $a \mid c$, then $a \mid (b+c)$. If $a \mid b$, then $a \mid bc$ for all $c$. If $a \mid b$ and $b \mid c$, then $a \mid c$. The Division Algorithm (Euclid's Division Theorem) Given integers $m, n$ ($n>0$), there exist unique integers $q$ (quotient) and $r$ (remainder) such that $m = nq + r$ and $0 \le r $r = m \pmod n$. Properties of modular arithmetic: $(a+b) \pmod n = (a \pmod n + b \pmod n) \pmod n$. $(a \cdot b) \pmod n = (a \pmod n \cdot b \pmod n) \pmod n$. $a^r \pmod n = (a \pmod n)^r \pmod n$. Prime and Composite Numbers A positive integer $p > 1$ is prime if its only positive divisors are 1 and $p$. An integer $> 1$ that is not prime is composite . Fundamental Theorem of Arithmetic: Any integer $> 1$ can be uniquely written as a product of powers of distinct primes. Lemma: If $p$ is prime and $p \mid ab$, then $p \mid a$ or $p \mid b$. If $p \mid a^r$, then $p \mid a$. Greatest Common Divisors (GCD) and Least Common Multiples (LCM) GCD: $gcd(a, b)$ is the largest integer that divides both $a$ and $b$. LCM: $lcm(a, b)$ is the smallest positive integer divisible by both $a$ and $b$. If $a = p_1^{a_1} \dots p_n^{a_n}$ and $b = p_1^{b_1} \dots p_n^{b_n}$: $gcd(a, b) = p_1^{\min(a_1, b_1)} \dots p_n^{\min(a_n, b_n)}$. $lcm(a, b) = p_1^{\max(a_1, b_1)} \dots p_n^{\max(a_n, b_n)}$. Theorem: $ab = gcd(a, b) \cdot lcm(a, b)$. Relatively Prime: $a, b$ are relatively prime if $gcd(a, b) = 1$. Euler's Totient Function $\phi(n)$: Number of positive integers less than or equal to $n$ that are relatively prime to $n$. Euclid's Algorithm for GCD $gcd(a, b) = gcd(b, a \pmod b)$. Repeatedly apply this property until remainder is 0. The last non-zero remainder is the GCD. GCD as a Linear Combination (Bezout's Theorem) If $a, b$ are positive integers, there exist integers $x, y$ such that $gcd(a, b) = ax + by$. Extended Euclidean Algorithm: Finds $x, y$ by working backwards through Euclid's algorithm. Arithmetic Modulo n ($Z_n$) $Z_n = \{0, 1, \dots, n-1\}$. Addition: $i +_n j = (i+j) \pmod n$. Multiplication: $i \cdot_n j = (i \cdot j) \pmod n$. Groups A set $G$ with a binary operation $*$ is a group $(G, *)$ if it's closed, associative, has an identity element $e$, and every element has an inverse. Examples: $(\mathbb{Z}, +)$, $(\mathbb{Z}_n, +_n)$, invertible matrices with matrix multiplication. Multiplicative Group ($Z_n^\times$) An element $a \in Z_n$ is multiplicatively invertible if there exists $b \in Z_n$ such that $a \cdot_n b = 1 \pmod n$. $b$ is $a^{-1}$. $a \in Z_n$ is multiplicatively invertible $\iff gcd(n, a) = 1$. $Z_n^\times$ is the set of all multiplicatively invertible elements in $Z_n$. $(Z_n^\times, \cdot_n)$ forms a group. If $a$ has an inverse $a^{-1}$ in $Z_n$, then $a \cdot_n x = b$ has unique solution $x = a^{-1} \cdot_n b$. Inverse can be found using the Extended Euclidean Algorithm. Euler's Theorem $(Z_n^\times, \cdot_n)$ is a group of order $\phi(n)$. If $gcd(a, n) = 1$, then $a^{\phi(n)} \equiv 1 \pmod n$. Ferma's Little Theorem If $p$ is a prime number, then $a^{p-1} \equiv 1 \pmod p$ for any non-zero $a \in Z_p$. Algorithm for Computing Exponentiation Modulo n (Modular Exponentiation) Procedure ModExp(b, m: positive integers, n = (ak ... a0)2) x := 1 power := b mod m for i := 0 to k if ai = 1 then x := (x * power) mod m power := (power * power) mod m Print(x) Cryptography Basic Definitions Plaintext: Original message. Ciphertext: Encrypted message. Sender (Alice), Receiver (Bob), Adversary. Cryptography Classification Private-key (Symmetric): Sender and receiver share a secret key. Public-key (Asymmetric): Each person has a public key (for encryption) and a private key (for decryption). Kerckhoff's Principle A cryptosystem should be secure even if the attacker knows all details about the system except the secret key. Substitution Cipher Replaces each plaintext letter with a fixed ciphertext letter (permutation on the alphabet). Vulnerable to frequency analysis. Shift or Caesar Cipher Each letter is shifted by a fixed amount. Encryption: $e(m) = (m+k) \pmod{26}$. Decryption: $d(c) = (c-k) \pmod{26}$. Weak due to small key space (26 possibilities) and frequency analysis. Turing's Cipher, Version 1 (Multiplication) Secret key $e$ (large prime). Encryption: $y = x \cdot e$. Decryption: $x = y/e$. Vulnerable if two encrypted messages $y_1, y_2$ are known ($e = gcd(y_1, y_2)$). Turing's Cipher, Version 2 (Multiplication Modulo n) Public $n$, private $e$. Encryption: $e(x) = (x \cdot e) \pmod n$. Requirement: $gcd(n, e)=1$. Decryption: $d(y) = (y \cdot e^{-1}) \pmod n$, where $e^{-1}$ is the multiplicative inverse of $e$ in $Z_n$. Vulnerable if plaintext $x$ and ciphertext $y$ are known ($e = (x^{-1} \cdot y) \pmod n$). RSA Crypto-system (Public Key) Setup: Choose two large prime numbers $p, q$. Compute $n = p \cdot q$ (public modulus). Compute $\phi(n) = (p-1)(q-1)$ (Euler's totient function). Choose integer $e$ such that $1 \le e \le \phi(n)$ and $gcd(e, \phi(n)) = 1$ (public exponent). Compute $d = e^{-1} \pmod{\phi(n)}$ (private key). Public Key: $(n, e)$. Private Key: $d$. Encryption: To encrypt message $x$, compute $y = x^e \pmod n$. Decryption: To decrypt ciphertext $y$, compute $x = y^d \pmod n$. Security: Relies on the difficulty of prime factorization of large $n$ (NP-hard problem), which is needed to find $\phi(n)$ and thus $d$. Correctness based on Euler's Theorem. Check Digits (e.g., ISBN) ISBN-10: $x_1 x_2 \dots x_{10}$. The check digit $x_{10}$ is chosen so that $\sum_{i=1}^{10} i \cdot x_i \pmod{11} = 0$.