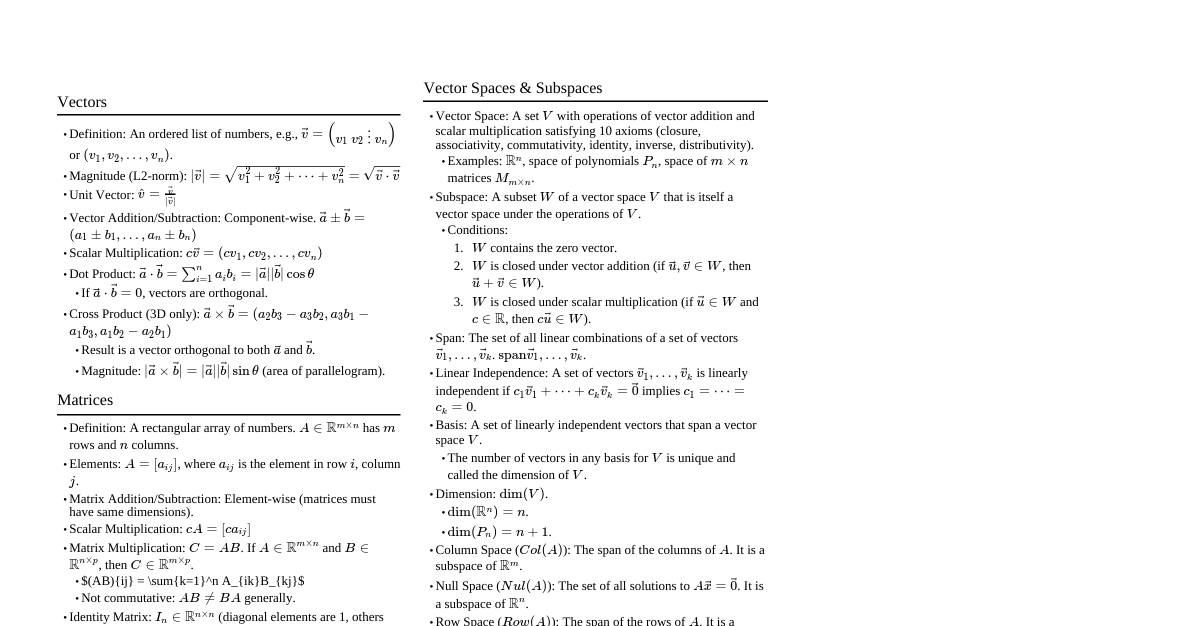

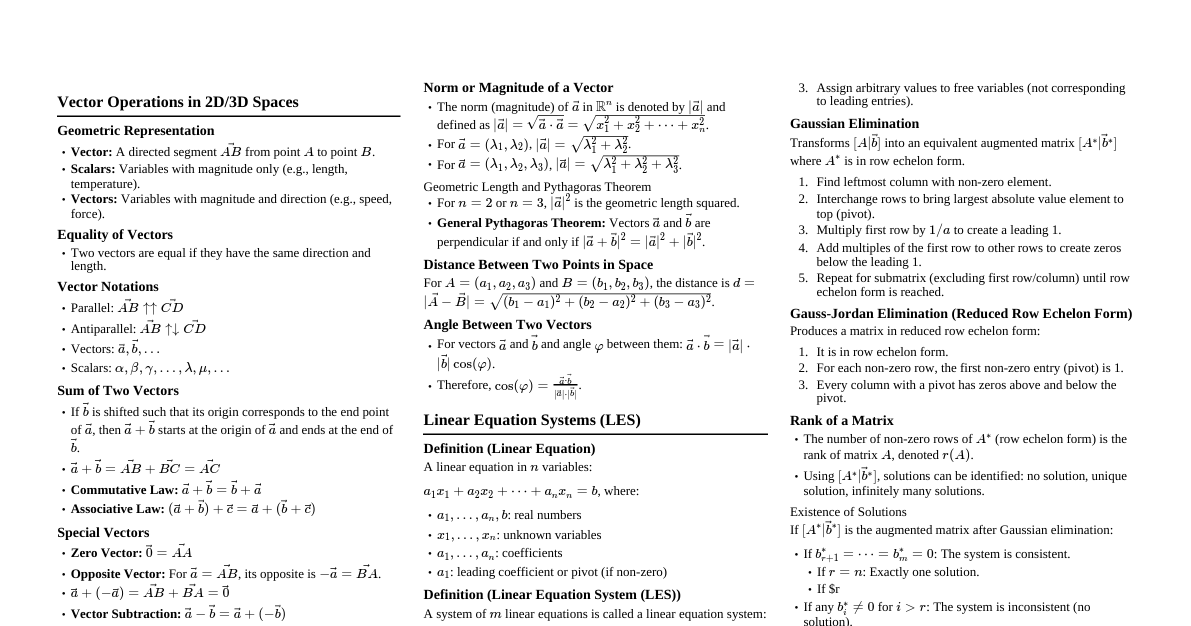

### Introduction to Linear Algebra

Linear algebra is the branch of mathematics concerned with linear equations, linear functions, and their representations through matrices and vector spaces. It is fundamental to many areas of science and engineering.

### Vectors

- **Definition:** An ordered list of numbers, often representing a point in space or a direction and magnitude.
  - $\vec{v} = \begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}$ or $(v_1, v_2, \dots, v_n)$
- **Magnitude (Length):** For $\vec{v} \in \mathbb{R}^n$, $|\vec{v}| = \sqrt{v_1^2 + v_2^2 + \dots + v_n^2}$
- **Dot Product:** A scalar obtained by multiplying corresponding components and summing. It measures how far one vector extends along the direction of the other.
  - $\vec{a} \cdot \vec{b} = \sum_{i=1}^n a_i b_i = a_1b_1 + a_2b_2 + \dots + a_nb_n$
  - Geometric interpretation: $\vec{a} \cdot \vec{b} = |\vec{a}| |\vec{b}| \cos(\theta)$, where $\theta$ is the angle between $\vec{a}$ and $\vec{b}$.
- **Orthogonality:** Two vectors $\vec{a}, \vec{b}$ are orthogonal if $\vec{a} \cdot \vec{b} = 0$.

#### Vector Operations

- **Addition:** $\vec{a} + \vec{b} = (a_1+b_1, a_2+b_2, \dots, a_n+b_n)$
- **Scalar Multiplication:** $c\vec{a} = (ca_1, ca_2, \dots, ca_n)$

### Matrices

- **Definition:** A rectangular array of numbers, symbols, or expressions arranged in rows and columns.
  - An $m \times n$ matrix has $m$ rows and $n$ columns.
- **Identity Matrix ($I$):** A square matrix with ones on the main diagonal and zeros elsewhere. $AI = IA = A$ for any square matrix $A$ of the same size.
- **Zero Matrix ($0$):** A matrix where all elements are zero.
- **Transpose ($A^T$):** Rows become columns and columns become rows. $(A^T)_{ij} = A_{ji}$.
- **Symmetric Matrix:** A square matrix with $A = A^T$.
- **Inverse Matrix ($A^{-1}$):** For a square matrix $A$, if $A^{-1}$ exists, then $AA^{-1} = A^{-1}A = I$.
  - A square matrix is invertible if and only if its determinant is non-zero.

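The vector formulas and the transpose definition above can be checked numerically. The following is a minimal sketch in plain Python, assuming vectors are lists of floats and matrices are lists of rows; the helper names (`dot`, `magnitude`, `angle_between`, `transpose`, `is_symmetric`) are illustrative choices, not part of any library.

```python
import math

def dot(a, b):
    """Dot product: sum of products of corresponding components."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))

def magnitude(v):
    """Euclidean length |v| = sqrt(v . v)."""
    return math.sqrt(dot(v, v))

def angle_between(a, b):
    """Angle in radians from a . b = |a||b|cos(theta); a and b must be non-zero."""
    cos_theta = dot(a, b) / (magnitude(a) * magnitude(b))
    # Clamp to [-1, 1] to guard against floating-point drift.
    return math.acos(max(-1.0, min(1.0, cos_theta)))

def transpose(m):
    """Transpose: entry (i, j) of the result is entry (j, i) of m."""
    return [list(col) for col in zip(*m)]

def is_symmetric(m):
    """A matrix is symmetric when it equals its own transpose."""
    return m == transpose(m)

a = [3.0, 4.0]
b = [-4.0, 3.0]
print(magnitude(a))                       # 5.0
print(dot(a, b))                          # 0.0, so a and b are orthogonal
print(angle_between(a, b))                # pi/2 in radians
print(transpose([[1, 2, 3], [4, 5, 6]]))  # [[1, 4], [2, 5], [3, 6]]
print(is_symmetric([[2, 1], [1, 3]]))     # True
```

For anything beyond small hand checks, a numerical library is usually a better fit than hand-written loops.
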
#### Matrix Operations

- **Addition:** $(A+B)_{ij} = A_{ij} + B_{ij}$ (matrices must have the same dimensions)
- **Scalar Multiplication:** $(cA)_{ij} = cA_{ij}$
- **Matrix Multiplication:** $(AB)_{ij} = \sum_k A_{ik} B_{kj}$ (the number of columns in $A$ must equal the number of rows in $B$)
  - Not commutative: $AB \neq BA$ in general.

#### Determinants

- **Definition:** A scalar value that can be computed from the elements of a square matrix.
- **$2 \times 2$ Matrix:** If $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, then $\det(A) = ad - bc$.
- **$3 \times 3$ Matrix (cofactor expansion along the first row):** If $A = \begin{pmatrix} a & b & c \\ d & e & f \\ g & h & i \end{pmatrix}$, then $\det(A) = a(ei-fh) - b(di-fg) + c(dh-eg)$. Expanding the products gives the six terms of Sarrus' rule.
- **Properties:**
  - $\det(AB) = \det(A)\det(B)$
  - $\det(A^T) = \det(A)$
  - $\det(A^{-1}) = 1/\det(A)$
  - $\det(cA) = c^n \det(A)$ for an $n \times n$ matrix $A$.
  - If $\det(A) = 0$, $A$ is singular (not invertible).

### Systems of Linear Equations

- Represented as $A\vec{x} = \vec{b}$, where $A$ is the coefficient matrix, $\vec{x}$ is the vector of unknowns, and $\vec{b}$ is the constant vector.
- **Solutions** (for a square matrix $A$):
  - **Unique Solution:** If $\det(A) \neq 0$, then $\vec{x} = A^{-1}\vec{b}$.
  - **No Solution:** If $\det(A) = 0$ (the rows of $A$ are linearly dependent) and $\vec{b}$ is not in the column space of $A$.
  - **Infinitely Many Solutions:** If $\det(A) = 0$ and $\vec{b}$ is in the column space of $A$.
- **Gaussian Elimination:** A method to solve systems of linear equations by using elementary row operations to transform the augmented matrix $[A|\vec{b}]$ into row echelon form, then solving by back substitution.

### Vector Spaces

- **Definition:** A set $V$ of vectors equipped with two operations (vector addition and scalar multiplication) that satisfy ten axioms.
- **Subspace:** A subset $W$ of a vector space $V$ that is itself a vector space under the same operations. Equivalently, $W$ must contain the zero vector, be closed under addition, and be closed under scalar multiplication.
- **Span:** The set of all possible linear combinations of a set of vectors $\{\vec{v_1}, \dots, \vec{v_k}\}$.
- **Linear Independence:** A set of vectors $\{\vec{v_1}, \dots, \vec{v_k}\}$ is linearly independent if $c_1\vec{v_1} + \dots + c_k\vec{v_k} = \vec{0}$ implies $c_1 = \dots = c_k = 0$.
- **Basis:** A set of linearly independent vectors that span the entire vector space. The number of vectors in a basis is the dimension of the vector space.
- **Column Space (Col $A$):** The span of the column vectors of $A$.
- **Null Space (Nul $A$):** The set of all solutions to $A\vec{x} = \vec{0}$.
- **Rank-Nullity Theorem:** For an $m \times n$ matrix $A$, $\text{rank}(A) + \text{nullity}(A) = n$.
  - $\text{rank}(A) = \text{dim(Col }A\text{)}$
  - $\text{nullity}(A) = \text{dim(Nul }A\text{)}$

### Eigenvalues and Eigenvectors

- **Definition:** An eigenvector of a square matrix $A$ is a non-zero vector $\vec{v}$ whose product with $A$ is a scalar multiple of itself. The scalar $\lambda$ is the corresponding eigenvalue.
  - $A\vec{v} = \lambda\vec{v}$
- **Finding Eigenvalues:**
  1. Form the characteristic equation: $\det(A - \lambda I) = 0$.
  2. Solve the polynomial equation for $\lambda$. The roots are the eigenvalues.
- **Finding Eigenvectors:**
  1. For each eigenvalue $\lambda$, solve the system $(A - \lambda I)\vec{v} = \vec{0}$.
  2. The non-trivial solutions for $\vec{v}$ are the eigenvectors corresponding to $\lambda$.
- **Eigenspace:** The set of all eigenvectors corresponding to a particular eigenvalue $\lambda$, along with the zero vector. It is a subspace.
- **Properties:**
  - The sum of the eigenvalues, counted with algebraic multiplicity, equals the trace of the matrix ($\text{Tr}(A) = \sum A_{ii}$).
  - The product of the eigenvalues, counted with algebraic multiplicity, equals the determinant of the matrix ($\det(A)$).
  - If $A$ is real and symmetric, its eigenvalues are real, and its eigenvectors corresponding to distinct eigenvalues are orthogonal.
  - Eigenvectors corresponding to distinct eigenvalues are linearly independent.

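The two procedures above (solve the characteristic equation, then solve $(A - \lambda I)\vec{v} = \vec{0}$) can be sketched for the $2 \times 2$ case, where $\det(A - \lambda I) = \lambda^2 - \text{Tr}(A)\lambda + \det(A)$. This is a minimal illustration in plain Python that assumes real eigenvalues; the function name `eigen_2x2` and the example matrix are hypothetical choices made for this sketch.

```python
import math

def eigen_2x2(A):
    """Return [(eigenvalue, eigenvector), ...] for a real 2x2 matrix.

    Sketch only: raises ValueError when the eigenvalues are complex.
    """
    (a, b), (c, d) = A
    # A diagonal matrix has its diagonal entries as eigenvalues and the
    # standard basis vectors as eigenvectors.
    if b == 0 and c == 0:
        return [(a, (1.0, 0.0)), (d, (0.0, 1.0))]
    trace = a + d
    det = a * d - b * c
    # Characteristic equation: lambda^2 - trace * lambda + det = 0.
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    pairs = []
    for lam in ((trace + root) / 2, (trace - root) / 2):
        # Each choice below is a non-trivial solution of (A - lam*I) v = 0.
        v = (b, lam - a) if b != 0 else (lam - d, c)
        pairs.append((lam, v))
    return pairs

A = [[2.0, 1.0], [1.0, 2.0]]
for lam, v in eigen_2x2(A):
    print(lam, v)
# 3.0 (1.0, 1.0)
# 1.0 (1.0, -1.0)
```

For this symmetric example the eigenvalues sum to the trace (4), multiply to the determinant (3), and the two eigenvectors are orthogonal, matching the properties listed above.
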
### Diagonalization

- **Definition:** A square matrix $A$ is diagonalizable if it is similar to a diagonal matrix $D$. That is, $A = PDP^{-1}$ for some invertible matrix $P$ and diagonal matrix $D$.
- **Condition for Diagonalization:** An $n \times n$ matrix $A$ is diagonalizable if and only if it has $n$ linearly independent eigenvectors.
- **Constructing $P$ and $D$:**
  - The columns of $P$ are $n$ linearly independent eigenvectors of $A$.
  - The diagonal entries of $D$ are the corresponding eigenvalues, in the same order as their eigenvectors in $P$.
- **Applications:**
  - Computing powers of a matrix: $A^k = PD^k P^{-1}$, where $D^k$ is obtained by raising each diagonal entry to the power $k$.
  - Solving systems of differential equations.
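
The power formula $A^k = PD^kP^{-1}$ can be checked against repeated multiplication. The sketch below is plain Python for the $2 \times 2$ case. It assumes the example matrix $A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$ with eigenvalues $3$ and $1$ and eigenvectors $(1, 1)$ and $(1, -1)$, which were worked out by hand for this illustration; all function names are hypothetical.

```python
def matmul(X, Y):
    """Matrix product: (XY)_ij = sum_k X_ik * Y_kj."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def inverse_2x2(M):
    """Inverse of a 2x2 matrix; exists only when the determinant is non-zero."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def power_via_diagonalization(P, eigenvalues, k):
    """Compute A^k = P D^k P^{-1}; D^k raises each diagonal entry to the power k."""
    D_k = [[eigenvalues[0] ** k, 0.0], [0.0, eigenvalues[1] ** k]]
    return matmul(matmul(P, D_k), inverse_2x2(P))

def power_by_repeated_multiplication(A, k):
    """Reference computation: multiply A by itself k times."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(k):
        result = matmul(result, A)
    return result

A = [[2.0, 1.0], [1.0, 2.0]]
P = [[1.0, 1.0], [1.0, -1.0]]  # columns are the eigenvectors (1, 1) and (1, -1)
eigenvalues = [3.0, 1.0]       # listed in the same order as the columns of P

print(power_via_diagonalization(P, eigenvalues, 5))  # [[122.0, 121.0], [121.0, 122.0]]
print(power_by_repeated_multiplication(A, 5))        # [[122.0, 121.0], [121.0, 122.0]]
```

Once $P$ and $D$ are known, the cost of the diagonalized form does not grow with the number of matrix multiplications as $k$ increases, which is the main reason the technique is used for large powers.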