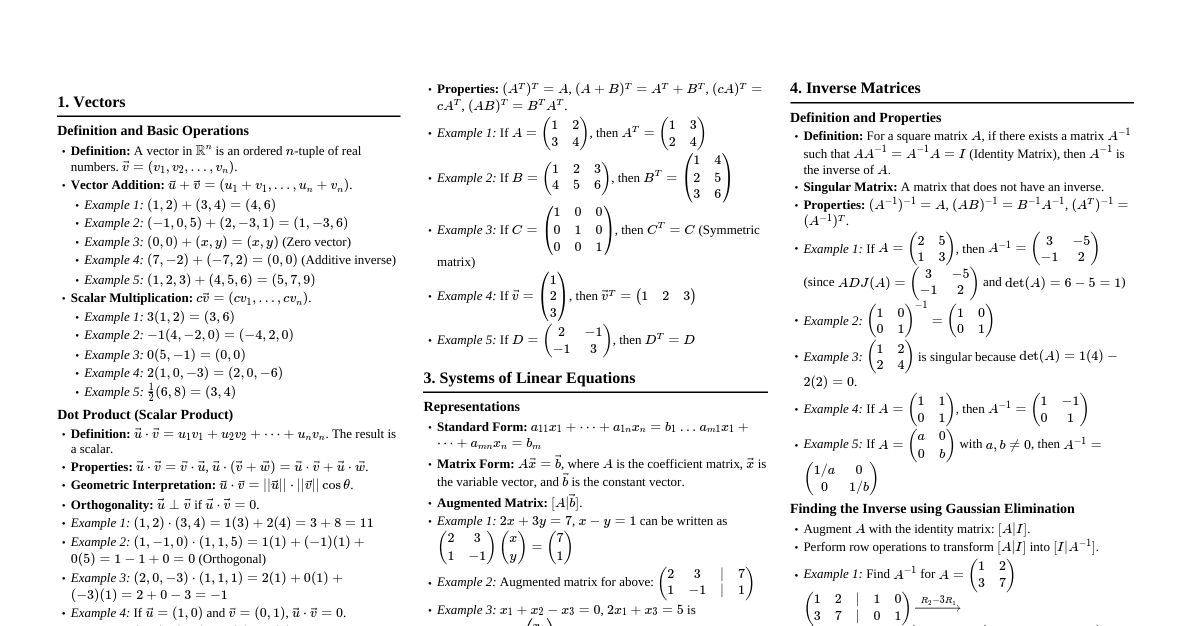

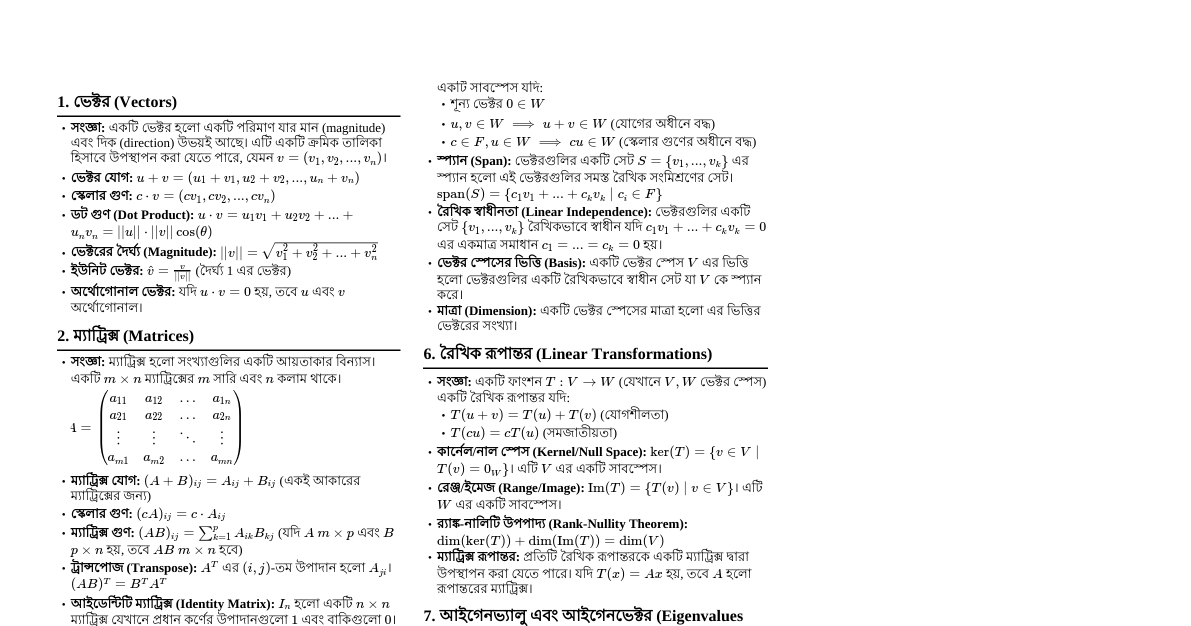

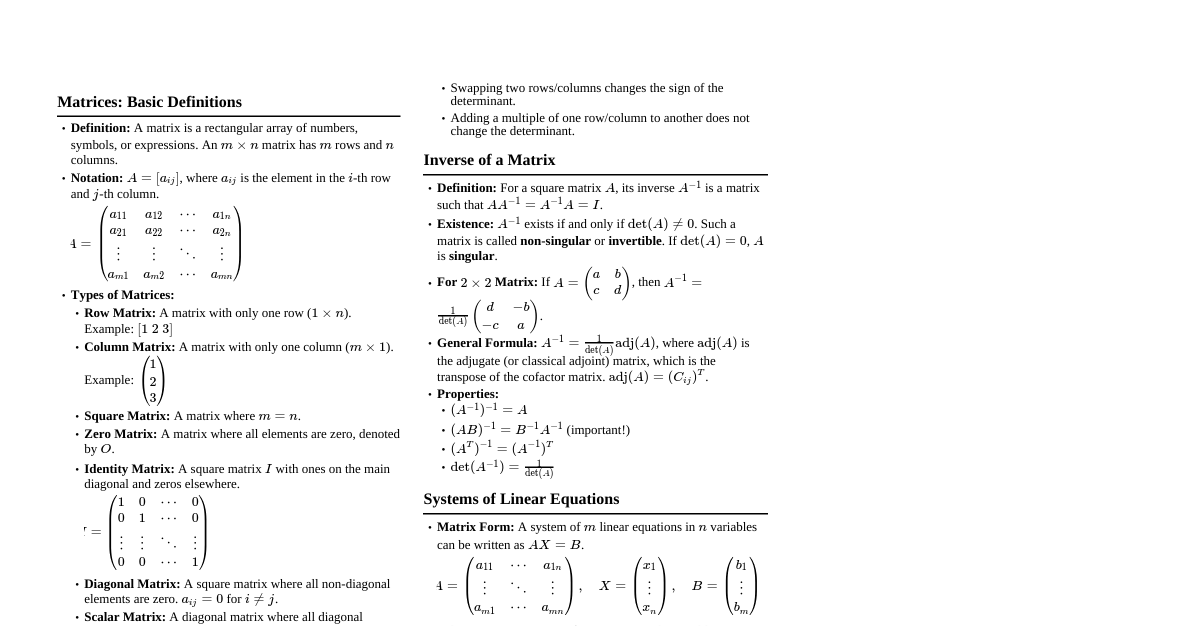

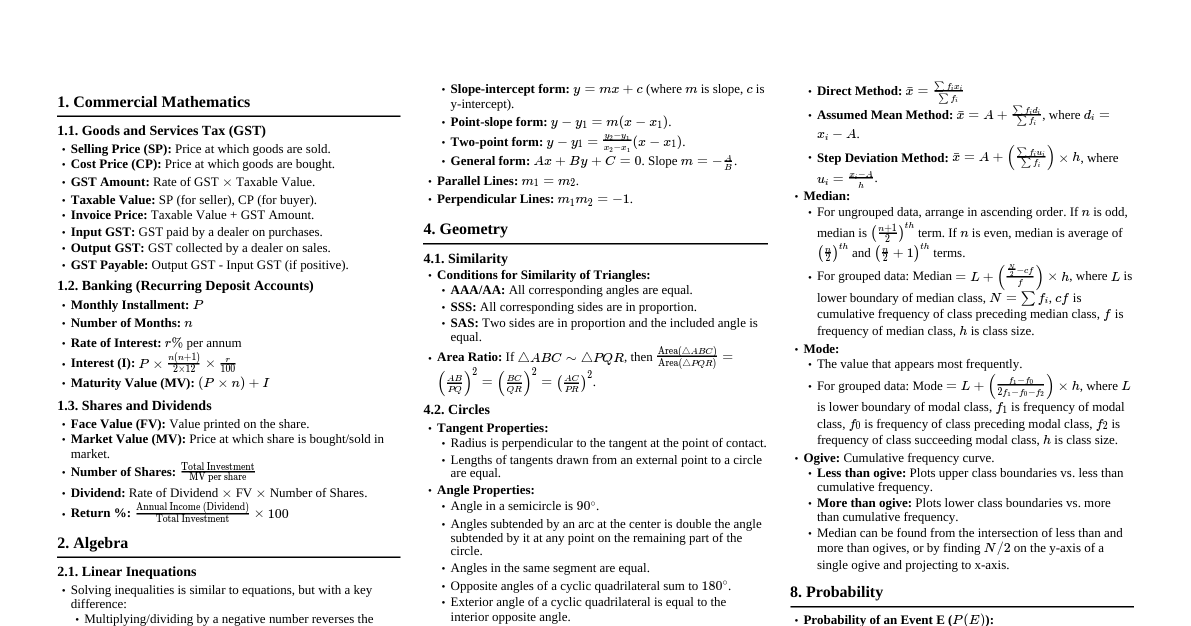

1. Vectors Definition: A vector is an ordered list of numbers. Geometrically, it represents a magnitude and direction. Notation: $\vec{v} = \begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}$ or $\vec{v} = (v_1, v_2, \dots, v_n)$ Vector Addition: If $\vec{u} = (u_1, u_2)$ and $\vec{v} = (v_1, v_2)$, then $\vec{u} + \vec{v} = (u_1+v_1, u_2+v_2)$. Scalar Multiplication: If $c$ is a scalar and $\vec{v} = (v_1, v_2)$, then $c\vec{v} = (cv_1, cv_2)$. Magnitude (L2-norm): For $\vec{v} = (v_1, \dots, v_n)$, $||\vec{v}|| = \sqrt{v_1^2 + \dots + v_n^2}$. Unit Vector: A vector with magnitude 1. $\hat{v} = \frac{\vec{v}}{||\vec{v}||}$. 2. Dot Product Definition: For $\vec{u} = (u_1, \dots, u_n)$ and $\vec{v} = (v_1, \dots, v_n)$, $\vec{u} \cdot \vec{v} = u_1v_1 + u_2v_2 + \dots + u_nv_n = \sum_{i=1}^n u_iv_i$. Geometric Interpretation: $\vec{u} \cdot \vec{v} = ||\vec{u}|| \cdot ||\vec{v}|| \cos(\theta)$, where $\theta$ is the angle between $\vec{u}$ and $\vec{v}$. Orthogonality: If $\vec{u} \cdot \vec{v} = 0$, then $\vec{u}$ and $\vec{v}$ are orthogonal (perpendicular). 3. Matrices Definition: A rectangular array of numbers. An $m \times n$ matrix has $m$ rows and $n$ columns. Notation: $A = [a_{ij}]$, where $a_{ij}$ is the element in row $i$ and column $j$. Matrix Addition: $A+B$ is defined if $A$ and $B$ have the same dimensions. $(A+B)_{ij} = a_{ij} + b_{ij}$. Scalar Multiplication: $(cA)_{ij} = c \cdot a_{ij}$. Matrix Multiplication: If $A$ is $m \times n$ and $B$ is $n \times p$, then $C=AB$ is $m \times p$. $c_{ij} = \sum_{k=1}^n a_{ik}b_{kj}$. (Number of columns in $A$ must equal number of rows in $B$). Identity Matrix ($I$): A square matrix with 1s on the main diagonal and 0s elsewhere. $AI = IA = A$. Transpose ($A^T$): Rows become columns and columns become rows. $(A^T)_{ij} = a_{ji}$. Symmetric Matrix: $A = A^T$. 4. Determinants Definition: A scalar value that can be computed from the elements of a square matrix. Denoted as $\det(A)$ or $|A|$. $2 \times 2$ Matrix: For $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $\det(A) = ad - bc$. $3 \times 3$ Matrix (Sarrus' Rule): For $A = \begin{pmatrix} a & b & c \\ d & e & f \\ g & h & i \end{pmatrix}$, $\det(A) = a(ei-fh) - b(di-fg) + c(dh-eg)$. Properties: $\det(A^T) = \det(A)$ $\det(AB) = \det(A)\det(B)$ $\det(A^{-1}) = 1/\det(A)$ If a row/column is all zeros, $\det(A) = 0$. If two rows/columns are identical, $\det(A) = 0$. If rows/columns are linearly dependent, $\det(A) = 0$. 5. Inverse Matrix Definition: For a square matrix $A$, its inverse $A^{-1}$ satisfies $AA^{-1} = A^{-1}A = I$. Existence: $A^{-1}$ exists if and only if $\det(A) \neq 0$ (A is non-singular or invertible). $2 \times 2$ Inverse: For $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $A^{-1} = \frac{1}{\det(A)} \begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$. General Formula (using Adjoint): $A^{-1} = \frac{1}{\det(A)} \text{adj}(A)$, where $\text{adj}(A)$ is the adjugate matrix (transpose of the cofactor matrix). Solving Linear Systems: If $A\vec{x} = \vec{b}$ and $A^{-1}$ exists, then $\vec{x} = A^{-1}\vec{b}$. 6. Systems of Linear Equations Form: $A\vec{x} = \vec{b}$ $$ \begin{pmatrix} a_{11} & \dots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \dots & a_{mn} \end{pmatrix} \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix} = \begin{pmatrix} b_1 \\ \vdots \\ b_m \end{pmatrix} $$ Augmented Matrix: $[A | \vec{b}]$. Methods: Gaussian Elimination: Use elementary row operations to transform $[A | \vec{b}]$ into row echelon form. Gauss-Jordan Elimination: Further reduce to reduced row echelon form. Cramer's Rule: For $A\vec{x} = \vec{b}$ with $n$ equations and $n$ variables, $x_i = \frac{\det(A_i)}{\det(A)}$, where $A_i$ is $A$ with $i$-th column replaced by $\vec{b}$. (Only if $\det(A) \neq 0$). Number of Solutions: Unique solution: $\det(A) \neq 0$ (for square $A$). No solution (inconsistent). Infinitely many solutions (consistent, but underdetermined). 7. Vector Spaces and Subspaces Vector Space: A set $V$ with operations vector addition and scalar multiplication satisfying certain axioms (closure, associativity, commutativity, identity, inverse, distributivity). Subspace: A subset $W$ of a vector space $V$ that is itself a vector space under the same operations. Conditions: $\vec{0} \in W$ If $\vec{u}, \vec{v} \in W$, then $\vec{u} + \vec{v} \in W$ (closure under addition). If $\vec{u} \in W$ and $c$ is a scalar, then $c\vec{u} \in W$ (closure under scalar multiplication). Span ($\text{span}\{\vec{v}_1, \dots, \vec{v}_k\}$): The set of all linear combinations of the vectors $\vec{v}_1, \dots, \vec{v}_k$. It always forms a subspace. Basis: A set of vectors in a vector space that are linearly independent and span the entire space. Dimension: The number of vectors in any basis for the vector space. 8. Fundamental Subspaces of a Matrix $A$ ($m \times n$) Column Space ($\text{Col}(A)$ or $\text{Im}(A)$): The span of the columns of $A$. It's a subspace of $\mathbb{R}^m$. Dimension: $\text{rank}(A)$. Row Space ($\text{Row}(A)$): The span of the rows of $A$. It's a subspace of $\mathbb{R}^n$. Dimension: $\text{rank}(A)$. Null Space ($\text{Null}(A)$ or $\text{Ker}(A)$): The set of all vectors $\vec{x}$ such that $A\vec{x} = \vec{0}$. It's a subspace of $\mathbb{R}^n$. Dimension: $\text{nullity}(A) = n - \text{rank}(A)$ (Rank-Nullity Theorem). Left Null Space ($\text{Null}(A^T)$): The set of all vectors $\vec{y}$ such that $A^T\vec{y} = \vec{0}$. It's a subspace of $\mathbb{R}^m$. Dimension: $m - \text{rank}(A)$. 9. Eigenvalues and Eigenvectors Definition: For a square matrix $A$, an eigenvector $\vec{v}$ (non-zero) is a vector such that $A\vec{v} = \lambda\vec{v}$ for some scalar $\lambda$. The scalar $\lambda$ is called an eigenvalue. Finding Eigenvalues: Solve the characteristic equation $\det(A - \lambda I) = 0$. Finding Eigenvectors: For each eigenvalue $\lambda$, solve the system $(A - \lambda I)\vec{v} = \vec{0}$. This gives the eigenspace for $\lambda$. Diagonalization: A matrix $A$ is diagonalizable if it is similar to a diagonal matrix $D$, i.e., $A = PDP^{-1}$, where $D$ contains the eigenvalues on its diagonal and the columns of $P$ are the corresponding eigenvectors. $A$ is diagonalizable if and only if it has $n$ linearly independent eigenvectors. 10. Orthogonality Orthogonal Vectors: $\vec{u} \cdot \vec{v} = 0$. Orthogonal Set: A set of vectors where every pair of distinct vectors is orthogonal. Orthonormal Set: An orthogonal set where every vector is a unit vector. Orthogonal Matrix ($Q$): A square matrix whose columns (and rows) form an orthonormal set. $Q^T Q = QQ^T = I$, so $Q^{-1} = Q^T$. Orthogonal Projection: The projection of $\vec{y}$ onto $\vec{u}$ is $\text{proj}_{\vec{u}}\vec{y} = \frac{\vec{y} \cdot \vec{u}}{\vec{u} \cdot \vec{u}}\vec{u}$. Gram-Schmidt Process: Converts a basis for a subspace into an orthogonal or orthonormal basis. 11. Linear Transformations Definition: A function $T: V \to W$ between vector spaces $V$ and $W$ such that: $T(\vec{u} + \vec{v}) = T(\vec{u}) + T(\vec{v})$ for all $\vec{u}, \vec{v} \in V$. $T(c\vec{u}) = cT(\vec{u})$ for all $\vec{u} \in V$ and scalar $c$. Matrix Representation: Any linear transformation $T: \mathbb{R}^n \to \mathbb{R}^m$ can be represented by an $m \times n$ matrix $A$ such that $T(\vec{x}) = A\vec{x}$. The columns of $A$ are $T(\vec{e}_1), T(\vec{e}_2), \dots, T(\vec{e}_n)$, where $\vec{e}_i$ are standard basis vectors. Kernel (Null Space): $\text{Ker}(T) = \{\vec{v} \in V \mid T(\vec{v}) = \vec{0}\}$. Image (Range/Column Space): $\text{Im}(T) = \{T(\vec{v}) \mid \vec{v} \in V\}$.