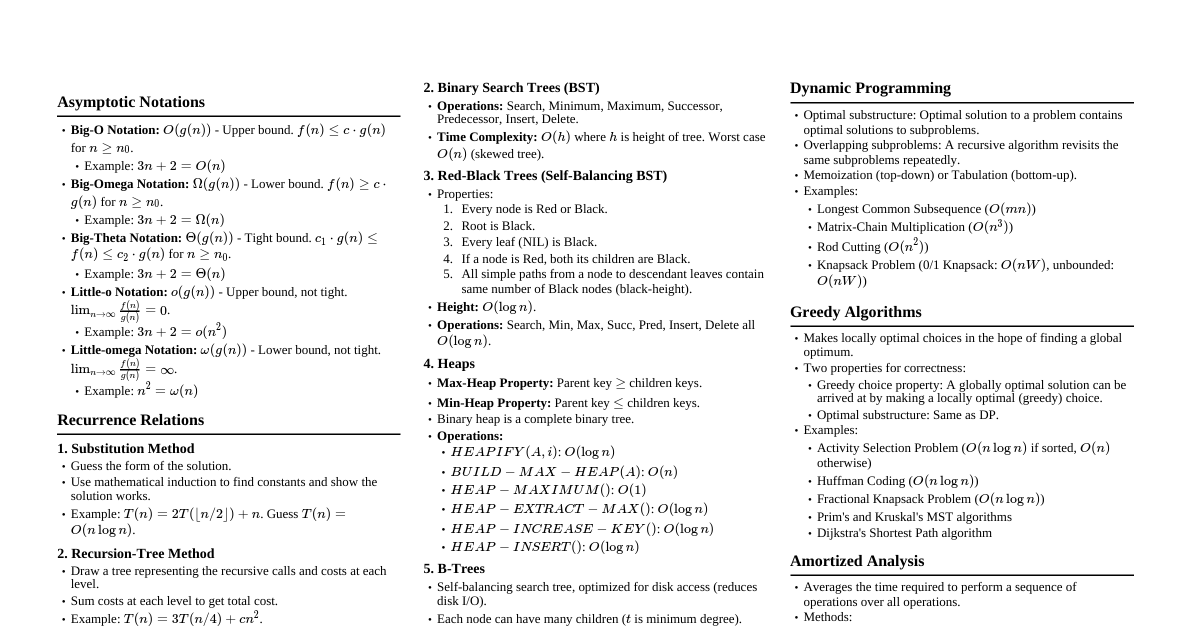

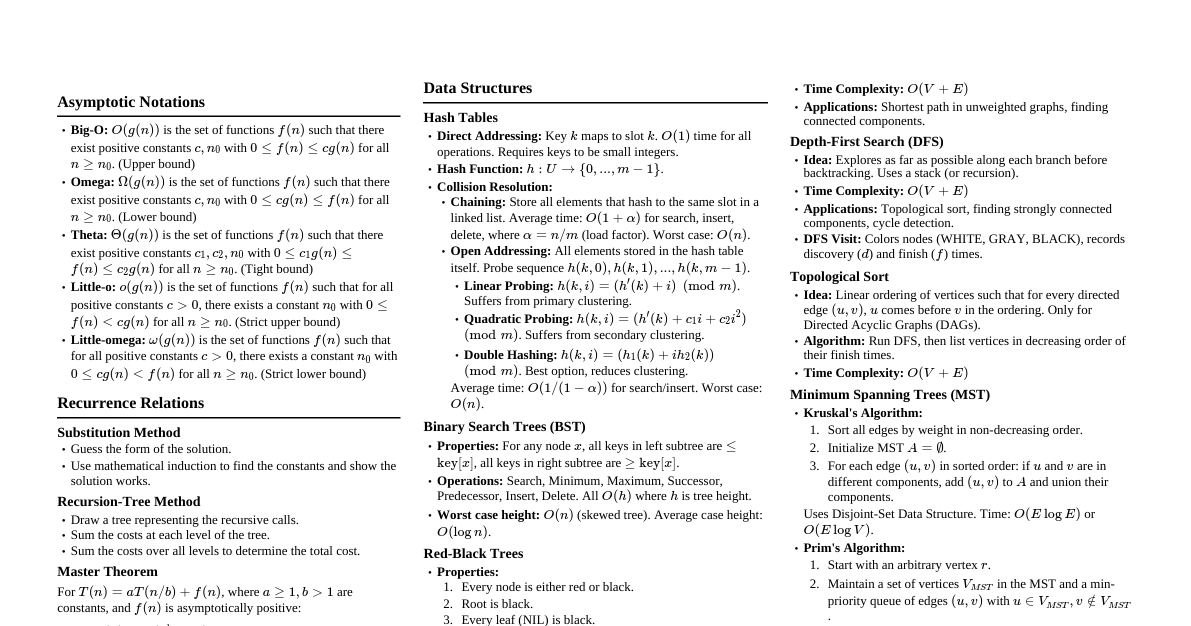

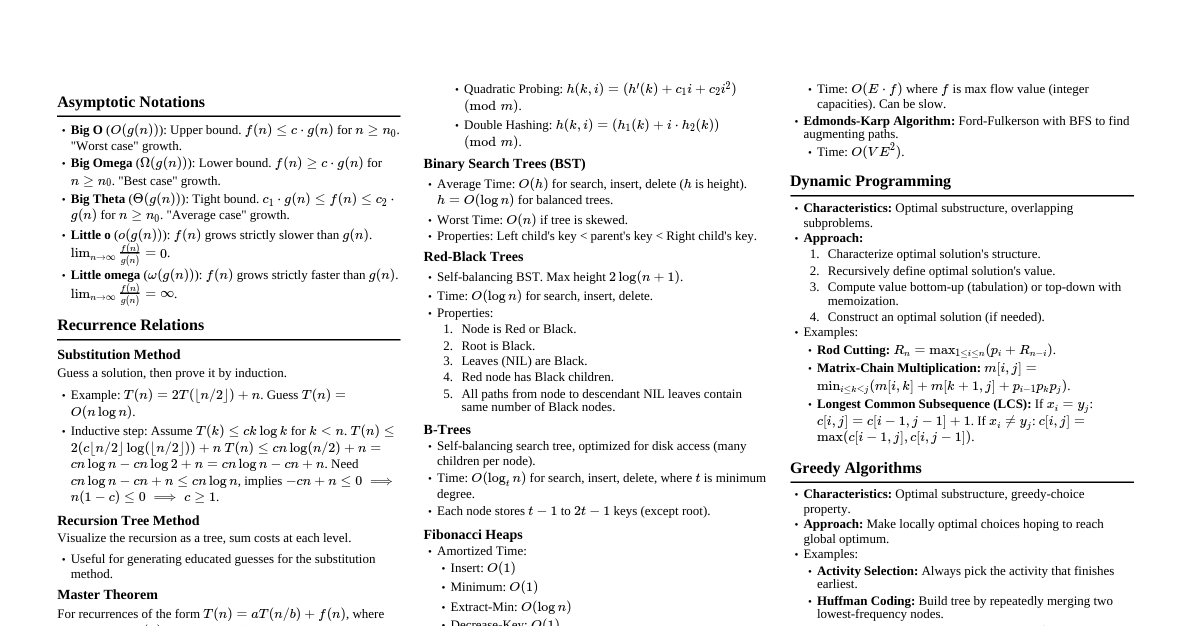

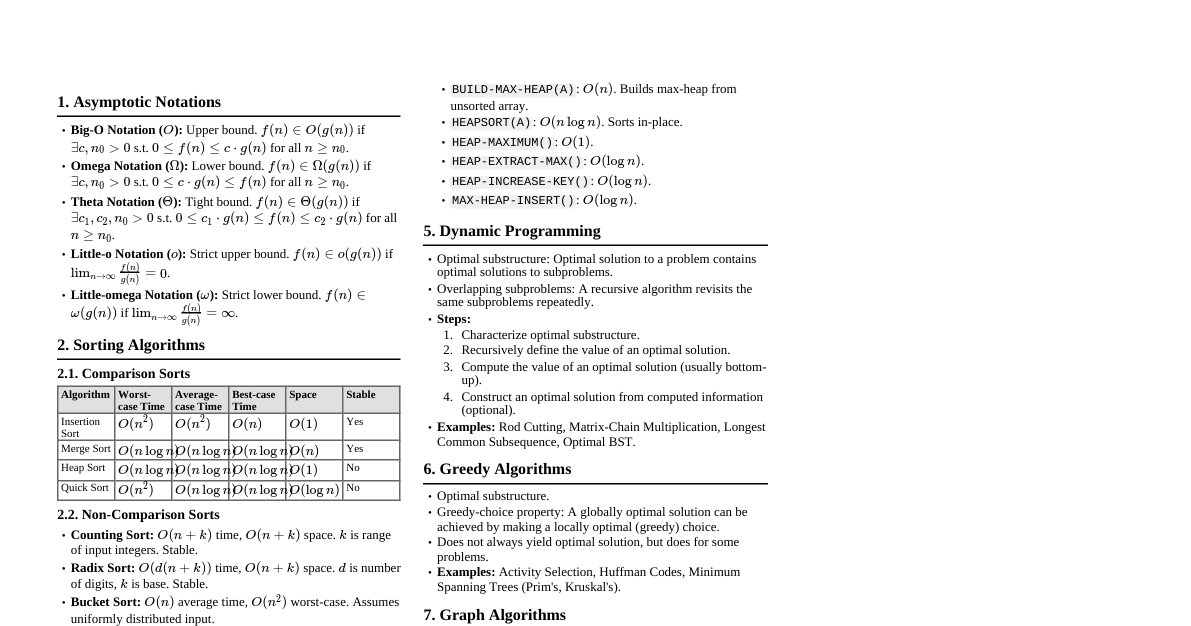

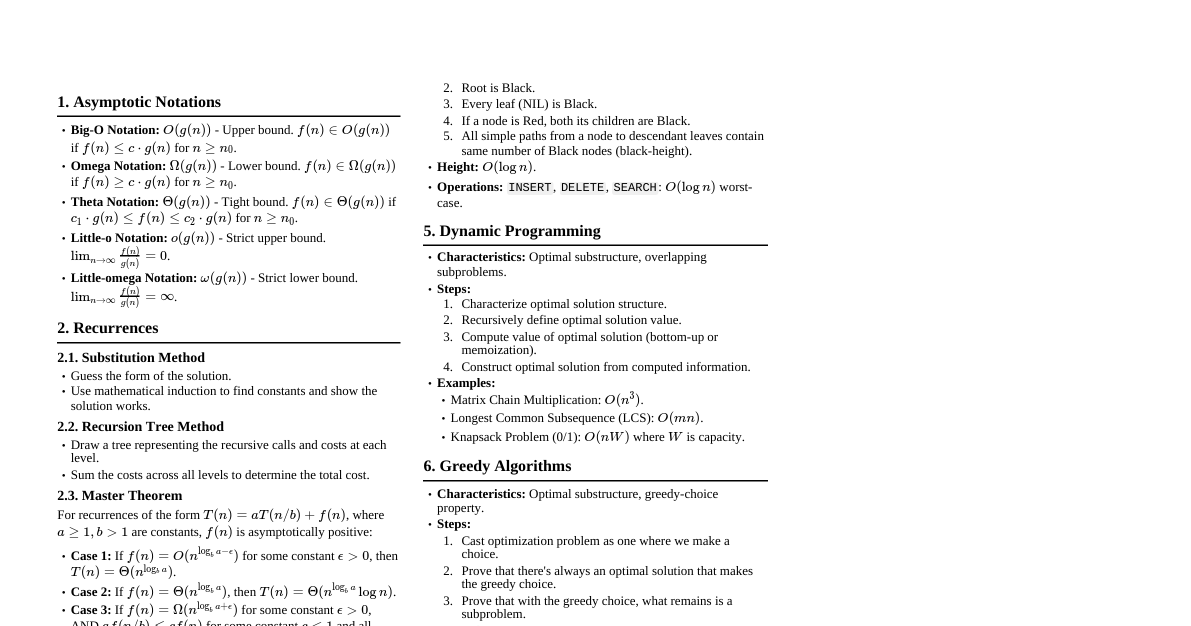

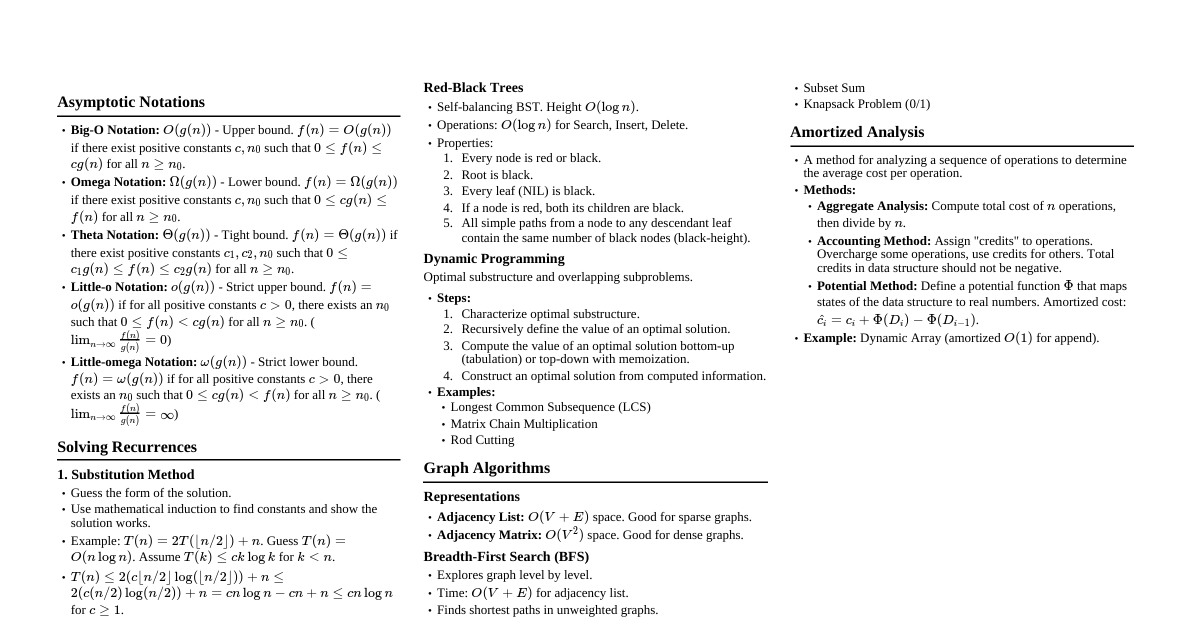

Asymptotic Notations Big-O Notation ($O$): Upper bound. $O(g(n)) = \{f(n) : \exists c, n_0 > 0 \text{ s.t. } 0 \le f(n) \le c g(n) \text{ for all } n \ge n_0\}$. Omega Notation ($\Omega$): Lower bound. $\Omega(g(n)) = \{f(n) : \exists c, n_0 > 0 \text{ s.t. } 0 \le c g(n) \le f(n) \text{ for all } n \ge n_0\}$. Theta Notation ($\Theta$): Tight bound. $\Theta(g(n)) = \{f(n) : \exists c_1, c_2, n_0 > 0 \text{ s.t. } 0 \le c_1 g(n) \le f(n) \le c_2 g(n) \text{ for all } n \ge n_0\}$. Little-o Notation ($o$): Strict upper bound. $f(n) = o(g(n))$ if $\lim_{n \to \infty} \frac{f(n)}{g(n)} = 0$. Little-omega Notation ($\omega$): Strict lower bound. $f(n) = \omega(g(n))$ if $\lim_{n \to \infty} \frac{f(n)}{g(n)} = \infty$. Recurrence Relations Master Theorem For $T(n) = aT(n/b) + f(n)$, where $a \ge 1, b > 1$. Case 1: If $f(n) = O(n^{\log_b a - \epsilon})$ for some $\epsilon > 0$, then $T(n) = \Theta(n^{\log_b a})$. Case 2: If $f(n) = \Theta(n^{\log_b a})$, then $T(n) = \Theta(n^{\log_b a} \log n)$. Case 3: If $f(n) = \Omega(n^{\log_b a + \epsilon})$ for some $\epsilon > 0$, AND $af(n/b) \le c f(n)$ for some $c Sorting Algorithms Algorithm Worst-case Avg-case Best-case Space Stable Notes Insertion Sort $O(n^2)$ $O(n^2)$ $O(n)$ $O(1)$ Yes Good for nearly sorted arrays. Merge Sort $O(n \log n)$ $O(n \log n)$ $O(n \log n)$ $O(n)$ Yes Divide & Conquer. Not in-place. Heap Sort $O(n \log n)$ $O(n \log n)$ $O(n \log n)$ $O(1)$ No In-place. Uses a binary heap. Quick Sort $O(n^2)$ $O(n \log n)$ $O(n \log n)$ $O(\log n)$ No Divide & Conquer. Pivot choice matters. Counting Sort $O(n+k)$ $O(n+k)$ $O(n+k)$ $O(n+k)$ Yes For integers in a range $[0, k]$. Radix Sort $O(d(n+k))$ $O(d(n+k))$ $O(d(n+k))$ $O(n+k)$ Yes Sorts $d$-digit numbers. Stable sub-sort necessary. Data Structures Hash Tables Direct Addressing: Array $T[0 \dots m-1]$. Key $k$ stored at $T[k]$. $O(1)$ search/insert/delete. Collision Resolution: Chaining: Each slot $T[j]$ points to a linked list of elements that hash to $j$. Avg. search/insert/delete: $\Theta(1 + \alpha)$, where $\alpha = n/m$ (load factor). Worst-case: $\Theta(n)$. Open Addressing: All elements stored in the hash table itself. When a collision occurs, probe for another slot. Linear Probing: $h(k, i) = (h'(k) + i) \pmod m$. Can lead to primary clustering. Quadratic Probing: $h(k, i) = (h'(k) + c_1 i + c_2 i^2) \pmod m$. Can lead to secondary clustering. Double Hashing: $h(k, i) = (h_1(k) + i h_2(k)) \pmod m$. Best results generally. Binary Search Trees (BSTs) Left child $\le$ parent $\le$ right child. Operations (Search, Min, Max, Successor, Predecessor, Insert, Delete): $O(h)$ where $h$ is tree height. Worst-case height: $O(n)$ (skewed tree). Average-case height: $O(\log n)$. Red-Black Trees Self-balancing BST. Properties: Every node is either red or black. Root is black. Every leaf (NIL) is black. If a node is red, both its children are black. All simple paths from a node to any descendant leaf contain the same number of black nodes (black-height). Height is $O(\log n)$. Operations (Search, Insert, Delete): $O(\log n)$. B-Trees Self-balancing search tree, optimized for disk access. Each node can have many children. Degree $t \ge 2$: Every node has at most $2t-1$ keys. Every internal node has at most $2t$ children. Every node (except root) has at least $t-1$ keys and $t$ children. Root has at least 1 key (if not a leaf). All leaves have the same depth. Height: $O(\log_t n)$. Operations (Search, Insert, Delete): $O(t \log_t n)$ in terms of CPU time; $O(\log_t n)$ disk access. Fibonacci Heaps Collection of min-heap-ordered trees. Amortized analysis. Operation Amortized Worst-case Make-Heap $O(1)$ $O(1)$ Insert $O(1)$ $O(1)$ Minimum $O(1)$ $O(1)$ Extract-Min $O(\log n)$ $O(n)$ Decrease-Key $O(1)$ $O(n)$ Delete $O(\log n)$ $O(n)$ Union $O(1)$ $O(n)$ Graph Algorithms Representations Adjacency List: Array of lists. $O(V+E)$ space. Good for sparse graphs. Adjacency Matrix: $V \times V$ matrix. $O(V^2)$ space. Good for dense graphs. Breadth-First Search (BFS) Explores layer by layer. Uses a queue. Time: $O(V+E)$. Finds shortest path in unweighted graphs. Depth-First Search (DFS) Explores deeply before backtracking. Uses a stack (or recursion). Time: $O(V+E)$. Useful for topological sort, finding connected components, cycles. Minimum Spanning Trees (MST) A spanning tree with minimum total edge weight. Kruskal's Algorithm: Sort all edges by weight. Iterate through sorted edges, add edge if it doesn't form a cycle (using Union-Find). Time: $O(E \log E)$ or $O(E \log V)$ (dominated by sorting). Prim's Algorithm: Start with arbitrary vertex, grow MST by adding cheapest edge connecting a vertex in MST to one outside. Uses a min-priority queue. Time: $O(E \log V)$ with binary heap, $O(E + V \log V)$ with Fibonacci heap. Shortest Paths Dijkstra's Algorithm: Finds shortest paths from a single source to all other vertices in a graph with non-negative edge weights. Uses a min-priority queue. Time: $O(E \log V)$ with binary heap, $O(E + V \log V)$ with Fibonacci heap. Bellman-Ford Algorithm: Finds shortest paths from a single source to all other vertices, can handle negative edge weights. Detects negative cycles. Time: $O(VE)$. Floyd-Warshall Algorithm: Finds all-pairs shortest paths. Uses dynamic programming. Time: $O(V^3)$. Johnson's Algorithm: All-pairs shortest paths for sparse graphs with negative edge weights. Reweights edges using Bellman-Ford, then runs Dijkstra $V$ times. Time: $O(VE + V^2 \log V)$. Topological Sort Linear ordering of vertices such that for every directed edge $(u, v)$, $u$ comes before $v$. Only for Directed Acyclic Graphs (DAGs). Can be done using DFS or by counting in-degrees. Time: $O(V+E)$. Dynamic Programming Optimal substructure: Optimal solution to a problem contains optimal solutions to subproblems. Overlapping subproblems: A recursive algorithm revisits the same subproblems repeatedly. Techniques: Memoization (Top-down): Store results of expensive function calls and return the cached result when the same inputs occur again. Tabulation (Bottom-up): Solve smaller subproblems first and build up to the solution of larger problems. Examples: Rod Cutting: Given a rod of length $n$ and prices $p_i$ for lengths $i=1 \dots n$, find max revenue. Matrix Chain Multiplication: Find optimal parenthesization to minimize scalar multiplications. Longest Common Subsequence (LCS): Find longest subsequence common to two sequences. Knapsack Problem: (0/1 Knapsack) Given weights and values, maximize value within capacity. Greedy Algorithms Makes locally optimal choices in the hope of finding a global optimum. Properties: Greedy choice property: A globally optimal solution can be reached by making a locally optimal (greedy) choice. Optimal substructure: An optimal solution to the original problem contains optimal solutions to subproblems. Examples: Activity Selection Problem: Maximize compatible activities. Huffman Coding: Optimal prefix codes for data compression. Fractional Knapsack: Can take fractions of items. Amortized Analysis Averages the time required to perform a sequence of operations over all operations. Techniques: Aggregate Analysis: Total cost for $n$ operations is $T(n)$, average is $T(n)/n$. Accounting Method: Assign different costs to operations, some operations "pay" for future expensive operations (credits). Potential Method: Define a potential function $\Phi(D)$ for data structure $D$. Amortized cost $c_i' = c_i + \Phi(D_i) - \Phi(D_{i-1})$. Maximum Flow Flow Network: Directed graph $G=(V,E)$ with capacities $c(u,v)$ and source $s$, sink $t$. Flow $f$: Assignment of values to edges s.t. capacity constraint, skew symmetry, and flow conservation. Ford-Fulkerson Method: Repeatedly find augmenting paths in the residual graph until no more paths exist. Edmonds-Karp Algorithm: Ford-Fulkerson using BFS to find augmenting paths. Time: $O(VE^2)$. Max-Flow Min-Cut Theorem: The value of a maximum flow is equal to the capacity of a minimum cut. String Matching Naive Algorithm: Time $O((n-m+1)m)$. Rabin-Karp Algorithm: Uses hashing to quickly check for matches. Average-case: $O(n+m)$. Worst-case: $O((n-m+1)m)$. Uses rolling hash function. Knuth-Morris-Pratt (KMP) Algorithm: Uses a precomputed prefix function (LPS array) to avoid re-matching characters. Time: $O(n+m)$. Prefix function computation: $O(m)$. Matching: $O(n)$.