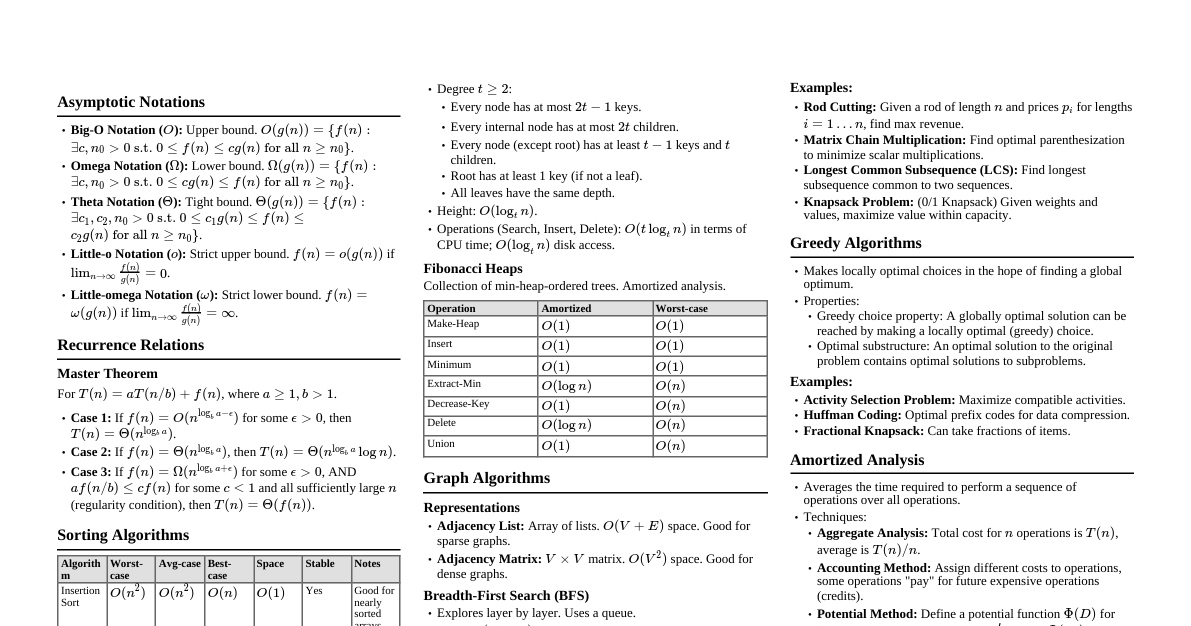

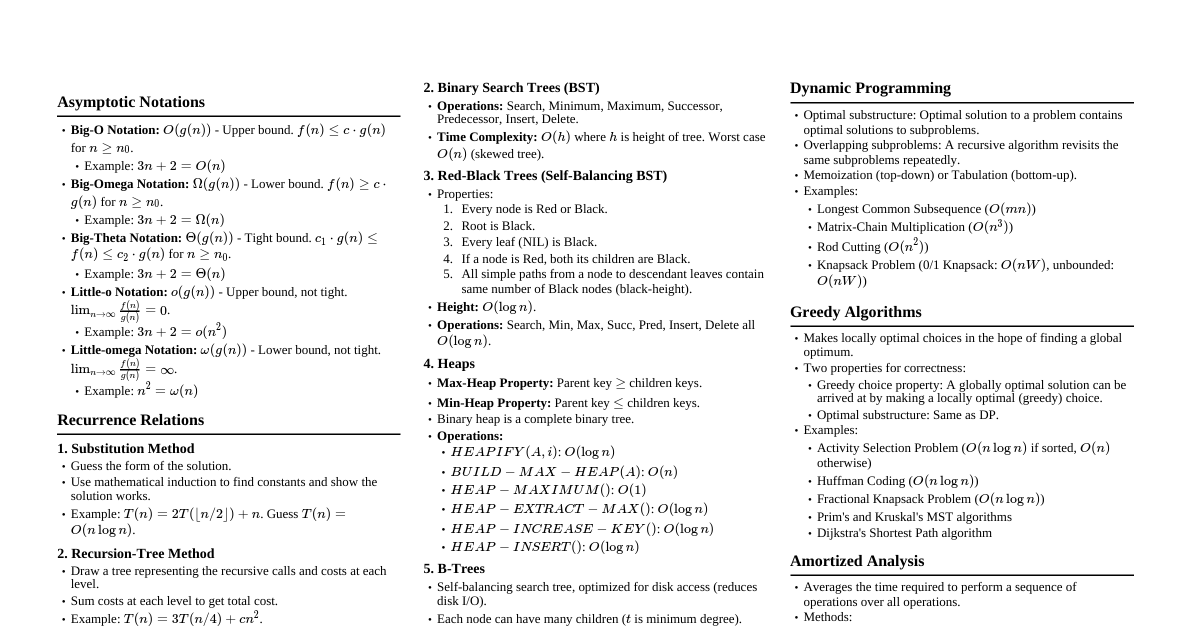

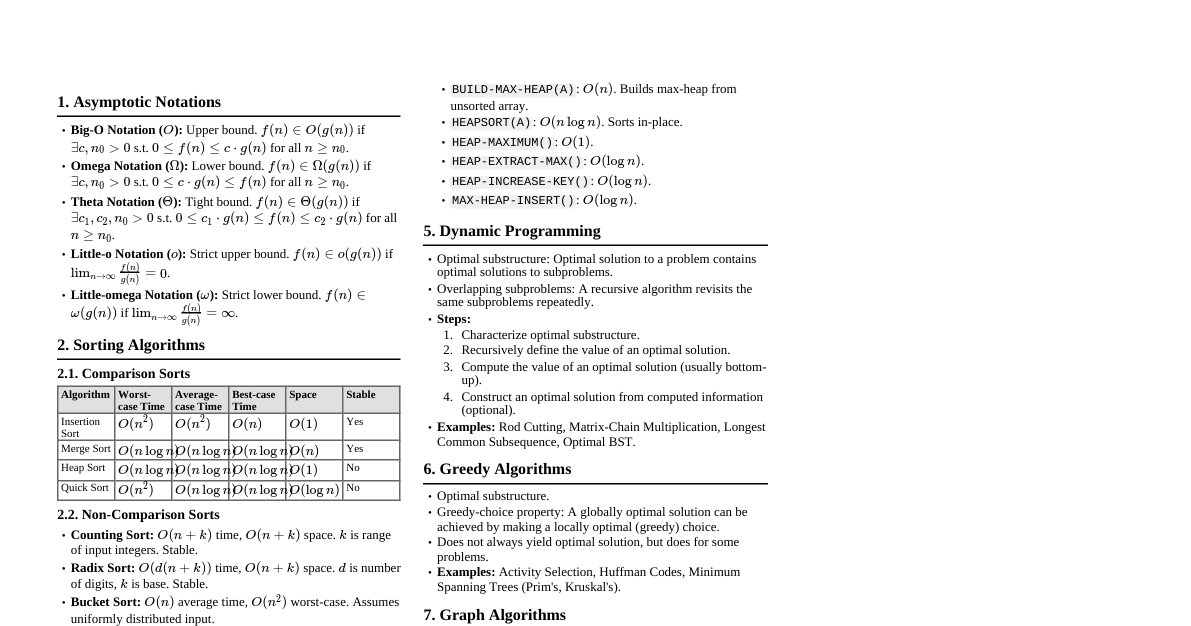

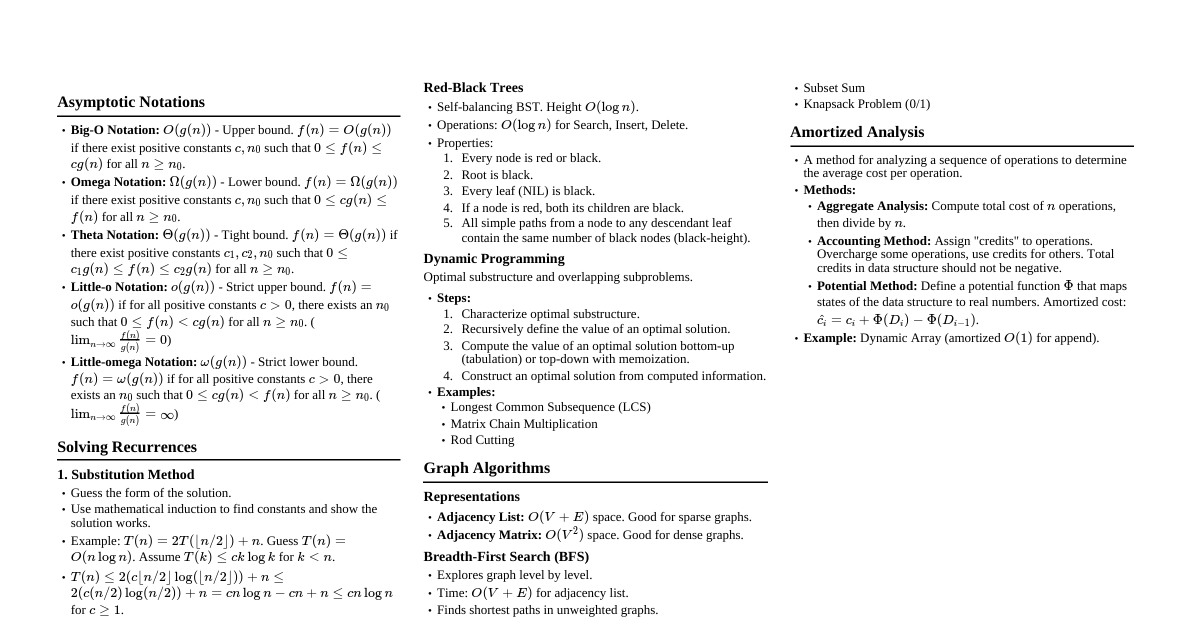

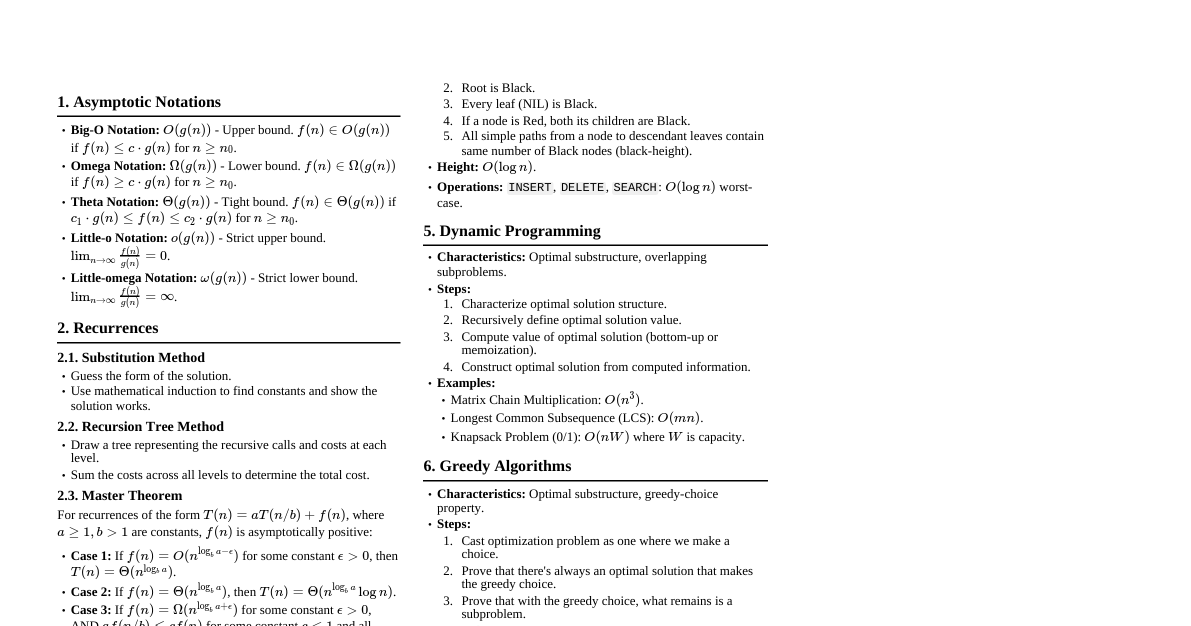

### Asymptotic Notation - **O-notation (Big-O):** $O(g(n))$ is the set of functions $f(n)$ for which there exist positive constants $c$ and $n_0$ such that $0 \le f(n) \le c g(n)$ for all $n \ge n_0$. (Upper bound) - **Ω-notation (Big-Omega):** $\Omega(g(n))$ is the set of functions $f(n)$ for which there exist positive constants $c$ and $n_0$ such that $0 \le c g(n) \le f(n)$ for all $n \ge n_0$. (Lower bound) - **Θ-notation (Theta):** $\Theta(g(n))$ is the set of functions $f(n)$ for which there exist positive constants $c_1, c_2$ and $n_0$ such that $0 \le c_1 g(n) \le f(n) \le c_2 g(n)$ for all $n \ge n_0$. (Tight bound) - **o-notation (Little-o):** $f(n) = o(g(n))$ if for all positive constants $c > 0$, there exists a constant $n_0 > 0$ such that $0 \le f(n) 0$, there exists a constant $n_0 > 0$ such that $0 \le c g(n) ### Solving Recurrence Relations - **Substitution Method:** Guess a solution and prove it by induction. - **Recursion-Tree Method:** Draw a recursion tree to sum up costs at each level. - **Master Method:** For recurrences of the form $T(n) = aT(n/b) + f(n)$, where $a \ge 1, b > 1$ are constants, and $f(n)$ is asymptotically positive. 1. If $f(n) = O(n^{\log_b a - \epsilon})$ for some constant $\epsilon > 0$, then $T(n) = \Theta(n^{\log_b a})$. 2. If $f(n) = \Theta(n^{\log_b a})$, then $T(n) = \Theta(n^{\log_b a} \lg n)$. 3. If $f(n) = \Omega(n^{\log_b a + \epsilon})$ for some constant $\epsilon > 0$, and if $a f(n/b) \le c f(n)$ for some constant $c ### Sorting Algorithms #### Insertion Sort - **Time Complexity:** $O(n^2)$ average and worst-case, $\Omega(n)$ best-case. - **Space Complexity:** $O(1)$ - **Stable:** Yes - **Concept:** Iteratively inserts each element into its correct position in the sorted part of the array. #### Merge Sort - **Time Complexity:** $\Theta(n \lg n)$ in all cases. - **Space Complexity:** $O(n)$ - **Stable:** Yes - **Concept:** Divide and Conquer. Divides array into two halves, recursively sorts them, then merges the two sorted halves. ```python def merge_sort(arr): if len(arr) > 1: mid = len(arr) // 2 L = arr[:mid] R = arr[mid:] merge_sort(L) merge_sort(R) i = j = k = 0 while i ### Data Structures #### Hash Tables - **Concept:** Maps keys to values using a hash function to compute an index into an array of buckets. - **Collision Resolution:** - **Chaining:** Store all elements that hash to the same slot in a linked list. - **Open Addressing:** If a slot is occupied, probe for an alternative slot. - **Linear Probing:** $h(k,i) = (h'(k) + i) \pmod m$ - **Quadratic Probing:** $h(k,i) = (h'(k) + c_1 i + c_2 i^2) \pmod m$ - **Double Hashing:** $h(k,i) = (h_1(k) + i h_2(k)) \pmod m$ - **Load Factor ($\alpha$):** $n/m$ (number of elements / number of slots). - **Average Search/Insert/Delete:** $O(1+\alpha)$ for chaining, $O(1/(1-\alpha))$ for open addressing. #### Binary Search Trees (BST) - **Properties:** Left child ### Graph Algorithms - **Representations:** Adjacency List ($O(V+E)$ space), Adjacency Matrix ($O(V^2)$ space). #### Breadth-First Search (BFS) - **Concept:** Explores graph layer by layer. Finds shortest path in terms of number of edges. - **Time Complexity:** $O(V+E)$. - **Uses:** Shortest path (unweighted), connectivity, bipartite checking. ```python def bfs(graph, start): visited = {start} queue = collections.deque([start]) distances = {node: float('inf') for node in graph} distances[start] = 0 while queue: u = queue.popleft() for v in graph[u]: if v not in visited: visited.add(v) queue.append(v) distances[v] = distances[u] + 1 return distances ``` #### Depth-First Search (DFS) - **Concept:** Explores as far as possible along each branch before backtracking. - **Time Complexity:** $O(V+E)$. - **Uses:** Topological sort, strongly connected components, cycle detection. ```python def dfs(graph, start, visited=None): if visited is None: visited = set() visited.add(start) for neighbor in graph[start]: if neighbor not in visited: dfs(graph, neighbor, visited) return visited ``` #### Topological Sort - **Concept:** Linear ordering of vertices such that for every directed edge $u \to v$, $u$ comes before $v$ in the ordering. Applicable only to Directed Acyclic Graphs (DAGs). - **Algorithm:** Perform DFS. When a node's exploration is complete, prepend it to a list. - **Time Complexity:** $O(V+E)$. #### Minimum Spanning Trees (MST) - **Problem:** Find a subset of edges that connects all vertices in a connected, undirected, edge-weighted graph, with the minimum possible total edge weight. - **Properties:** Cut property, Cycle property. ##### Kruskal's Algorithm - **Concept:** Greedily adds the smallest weight edge that does not form a cycle. Uses Disjoint Set Union. - **Time Complexity:** $O(E \lg V)$ or $O(E \lg E)$ (dominated by sorting edges). ```python def kruskal(graph_edges, num_vertices): mst = [] edges = sorted(graph_edges, key=lambda x: x[2]) # Sort by weight parent = list(range(num_vertices)) # For DSU def find(i): if parent[i] == i: return i parent[i] = find(parent[i]) return parent[i] def union(i, j): root_i = find(i) root_j = find(j) if root_i != root_j: parent[root_i] = root_j return True return False for u, v, weight in edges: if union(u, v): mst.append((u, v, weight)) return mst ``` ##### Prim's Algorithm - **Concept:** Starts from an arbitrary vertex and grows the MST by adding the cheapest edge connecting a vertex in the MST to one not in the MST. Uses a min-priority queue. - **Time Complexity:** $O(E \lg V)$ with binary heap, $O(E + V \lg V)$ with Fibonacci heap. #### Single-Source Shortest Paths - **Notation:** $\delta(s, v)$ is the shortest path weight from source $s$ to vertex $v$. - **Relaxation:** `RELAX(u, v, w)` updates $d[v]$ if a shorter path to $v$ through $u$ is found. ##### Bellman-Ford Algorithm - **Concept:** Works on graphs with negative edge weights (but no negative cycles reachable from source). - **Time Complexity:** $O(VE)$. - **Process:** Initialize distances, then repeatedly relax all edges $V-1$ times. Check for negative cycles in the $V$-th iteration. ##### Dijkstra's Algorithm - **Concept:** Works on graphs with non-negative edge weights. Greedily selects the closest unvisited vertex. Uses a min-priority queue. - **Time Complexity:** $O(E \lg V)$ with binary heap, $O(E + V \lg V)$ with Fibonacci heap. ```python import heapq def dijkstra(graph, start): distances = {node: float('inf') for node in graph} distances[start] = 0 pq = [(0, start)] # (distance, vertex) while pq: dist, u = heapq.heappop(pq) if dist > distances[u]: continue for v, weight in graph[u].items(): # graph[u] is a dict of neighbors and weights if distances[u] + weight ### Dynamic Programming - **Concept:** Solves complex problems by breaking them into simpler subproblems. Stores results of subproblems to avoid recomputation. - **Characteristics:** Optimal substructure, Overlapping subproblems. - **Approaches:** - **Memoization (Top-down):** Recursive solution that stores results in a table. - **Tabulation (Bottom-up):** Iterative solution that fills a table. #### Longest Common Subsequence (LCS) - **Problem:** Find the longest subsequence common to two sequences. - **Recurrence:** $$LCS(i,j) = \begin{cases} 0 & \text{if } i=0 \text{ or } j=0 \\ LCS(i-1, j-1) + 1 & \text{if } x_i = y_j \\ \max(LCS(i-1, j), LCS(i, j-1)) & \text{if } x_i \neq y_j \end{cases}$$ - **Time Complexity:** $O(mn)$ for sequences of length $m$ and $n$. #### Matrix Chain Multiplication - **Problem:** Parenthesize a chain of matrices to minimize the number of scalar multiplications. - **Recurrence:** $$m[i,j] = \min_{i \le k ### Greedy Algorithms - **Concept:** Makes locally optimal choices at each step with the hope of finding a global optimum. - **Characteristics:** Greedy choice property, Optimal substructure. #### Activity Selection Problem - **Problem:** Given a set of activities, each with a start and finish time, select the maximum number of non-overlapping activities. - **Greedy Choice:** Always select the activity that finishes earliest among the compatible activities. - **Time Complexity:** $O(n \lg n)$ (dominated by sorting by finish times). #### Huffman Codes - **Concept:** Optimal prefix codes for data compression. Assigns shorter codes to more frequent characters. - **Algorithm:** Build a binary tree where leaves are characters and internal nodes represent combined frequencies. Greedily combine two lowest frequency nodes. - **Time Complexity:** $O(n \lg n)$ where $n$ is number of characters. ### String Matching #### Naive Algorithm - **Concept:** Checks for pattern $P$ of length $m$ in text $T$ of length $n$ by sliding $P$ one position at a time. - **Time Complexity:** $O((n-m+1)m)$, worst-case $O(nm)$. #### Knuth-Morris-Pratt (KMP) Algorithm - **Concept:** Uses a precomputed prefix function (LPS array) to avoid re-matching characters that are known to match. - **Time Complexity:** $O(n+m)$. - **Preprocessing (LPS array):** $O(m)$. - **Matching:** $O(n)$. ### NP-Completeness - **P (Polynomial time):** Class of decision problems solvable in polynomial time by a deterministic Turing machine. - **NP (Non-deterministic Polynomial time):** Class of decision problems solvable in polynomial time by a non-deterministic Turing machine (or verifiable in polynomial time by a deterministic Turing machine). - **NP-Hard:** A problem $H$ is NP-hard if for every problem $L$ in NP, $L \le_P H$ (L is polynomially reducible to H). - **NP-Complete:** A problem $C$ is NP-complete if $C \in NP$ and $C$ is NP-hard. - **Polynomial-Time Reducibility ($L_1 \le_P L_2$):** If an algorithm for $L_2$ can be used as a subroutine to solve $L_1$ in polynomial time. - **Common NP-Complete Problems:** - **SAT (Satisfiability):** Given a boolean formula, is there an assignment of truth values to variables that makes the formula true? - **3-SAT:** SAT where each clause has exactly 3 literals. - **Clique:** Given a graph $G=(V,E)$ and an integer $k$, does $G$ contain a clique of size at least $k$? - **Vertex Cover:** Given a graph $G=(V,E)$ and an integer $k$, is there a vertex cover of size at most $k$? - **Hamiltonian Cycle:** Given a graph $G=(V,E)$, does it contain a Hamiltonian cycle (visits every vertex exactly once)? - **Traveling Salesperson Problem (TSP):** Given a list of cities and distances between each pair, find the shortest possible route that visits each city exactly once and returns to the origin city. (Decision version is NP-Complete) - **Subset Sum:** Given a set of integers $S$ and an integer $T$, is there a subset of $S$ whose elements sum to $T$? ### Randomized Algorithms - **Concept:** Uses random choices to achieve good performance, either in expectation or with high probability. - **Types:** - **Las Vegas:** Always produces correct output; running time is probabilistic. Example: Randomized Quicksort. - **Monte Carlo:** May produce incorrect output with some probability; running time is deterministic. Example: Primality testing (e.g., Miller-Rabin). #### Randomized Quicksort - **Concept:** Picks a random pivot instead of a fixed one. This makes the worst-case $O(n^2)$ unlikely to occur for any specific input. - **Expected Time Complexity:** $O(n \lg n)$. ### Amortized Analysis - **Concept:** A method for analyzing the time complexity of a sequence of operations, averaging the time over the sequence. - **Methods:** - **Aggregate Analysis:** Total time for $n$ operations is $T(n)$, so average is $T(n)/n$. - **Accounting Method:** Assigns "costs" to operations; some operations are overcharged to pay for future expensive operations. - **Potential Method:** Defines a potential function $\Phi$ on the data structure's state. Amortized cost = actual cost + change in potential. - **Examples:** - **Dynamic Arrays:** Appending an element is $O(1)$ amortized. - **Disjoint-Set Union:** $O(\alpha(n))$ amortized per operation.