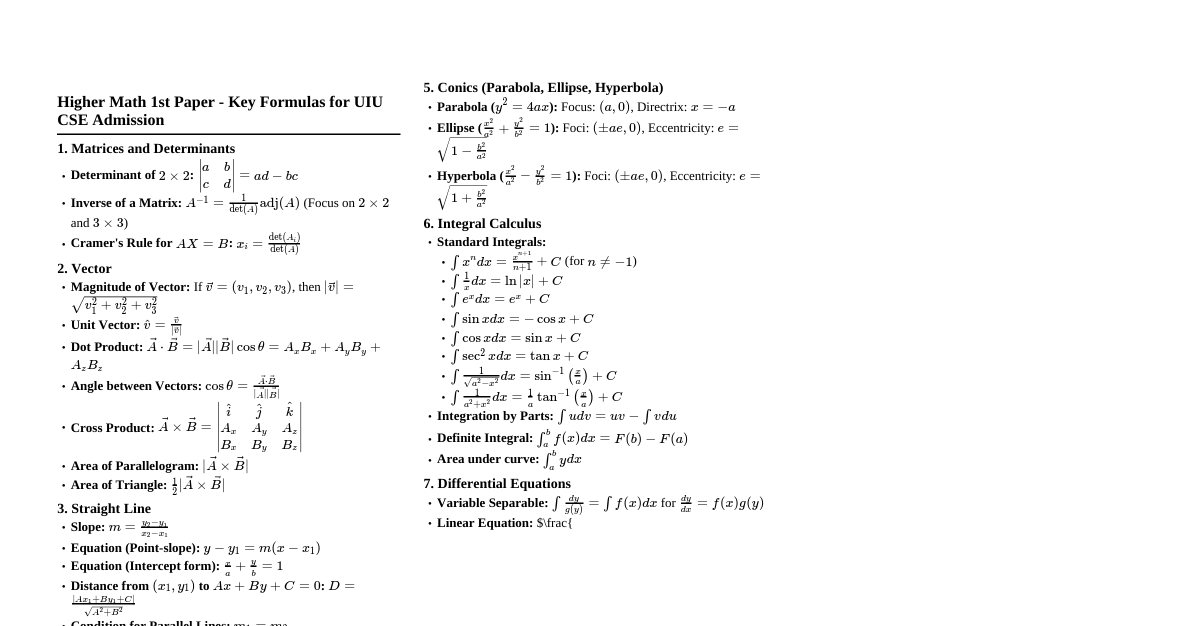

### Matrices

- **Inverse & Rank:**
  - **Inverse:** For $A$, $A^{-1}$ s.t. $AA^{-1} = I$. Use adjoint method or elementary row operations.
  - **Rank:** Maximum number of linearly independent rows/columns. Find by reducing to echelon form.
- **Elementary Operations:**
  - Row/Column Swapping ($R_i \leftrightarrow R_j$)
  - Scalar Multiplication ($R_i \to kR_i$)
  - Row Addition ($R_i \to R_i + kR_j$)
- **System of Linear Equations ($Ax=b$):**
  - **Consistent:** $\text{rank}(A) = \text{rank}([A|b])$
    - Unique solution if $\text{rank}(A) = n$ (number of variables)
    - Infinite solutions if $\text{rank}(A) < n$

### Vector Calculus

- **Vector Function:** $\vec{r}(t) = f(t)\hat{i} + g(t)\hat{j} + h(t)\hat{k}$
  - **Differentiation:** $\frac{d\vec{r}}{dt} = \frac{df}{dt}\hat{i} + \frac{dg}{dt}\hat{j} + \frac{dh}{dt}\hat{k}$
- **Scalar Point Function:** $\phi(x, y, z)$
- **Vector Point Function:** $\vec{F}(x, y, z) = F_1\hat{i} + F_2\hat{j} + F_3\hat{k}$
- **Gradient ($\nabla\phi$):**
  - $\nabla\phi = \frac{\partial\phi}{\partial x}\hat{i} + \frac{\partial\phi}{\partial y}\hat{j} + \frac{\partial\phi}{\partial z}\hat{k}$
  - Points in direction of maximum increase of $\phi$. Normal to level surfaces.
- **Divergence ($\nabla \cdot \vec{F}$):**
  - $\nabla \cdot \vec{F} = \frac{\partial F_1}{\partial x} + \frac{\partial F_2}{\partial y} + \frac{\partial F_3}{\partial z}$
  - Measures outflux per unit volume.
- **Curl ($\nabla \times \vec{F}$):**
  - $\nabla \times \vec{F} = \begin{vmatrix} \hat{i} & \hat{j} & \hat{k} \\ \frac{\partial}{\partial x} & \frac{\partial}{\partial y} & \frac{\partial}{\partial z} \\ F_1 & F_2 & F_3 \end{vmatrix}$
  - Measures rotation/circulation of the vector field.
- **Directional Derivative:**
  - $D_{\hat{u}}\phi = \nabla\phi \cdot \hat{u}$, where $\hat{u}$ is a unit vector.
  - Rate of change of $\phi$ in the direction of $\hat{u}$.

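
The gradient, divergence, curl, and directional derivative formulas above can be checked numerically. The following is a minimal sketch, not a reference implementation: it approximates each partial derivative by a central difference, and the helper names (`partial`, `gradient`, `divergence`, `curl`, `directional_derivative`), the step size `h`, and the sample fields $\phi = x^2y + z$ and $\vec{F} = (xy, yz, zx)$ are all assumptions chosen for illustration.

```python
def partial(f, point, axis, h=1e-5):
    """Central-difference estimate of the partial derivative of f along one axis."""
    forward = list(point)
    backward = list(point)
    forward[axis] += h
    backward[axis] -= h
    return (f(*forward) - f(*backward)) / (2 * h)


def gradient(phi, point):
    """Vector of the three first partial derivatives of the scalar field phi."""
    return [partial(phi, point, axis) for axis in range(3)]


def divergence(F, point):
    """Sum of dF1/dx + dF2/dy + dF3/dz for F given as three component functions."""
    return sum(partial(F[axis], point, axis) for axis in range(3))


def curl(F, point):
    """Components of the determinant formula for the curl, expanded by hand."""
    F1, F2, F3 = F
    return [
        partial(F3, point, 1) - partial(F2, point, 2),
        partial(F1, point, 2) - partial(F3, point, 0),
        partial(F2, point, 0) - partial(F1, point, 1),
    ]


def directional_derivative(phi, point, direction):
    """Gradient dotted with the unit vector along `direction`."""
    norm = sum(c * c for c in direction) ** 0.5
    unit = [c / norm for c in direction]
    return sum(g * u for g, u in zip(gradient(phi, point), unit))


# Hypothetical sample fields, chosen only for illustration.
def phi(x, y, z):
    return x**2 * y + z


F = (
    lambda x, y, z: x * y,  # F1
    lambda x, y, z: y * z,  # F2
    lambda x, y, z: z * x,  # F3
)

p = (1.0, 2.0, 3.0)
print(gradient(phi, p))      # analytic value: (2xy, x^2, 1) = (4, 1, 1)
print(divergence(F, p))      # analytic value: y + z + x = 6
print(curl(F, p))            # analytic value: (-y, -z, -x) = (-2, -3, -1)
print(directional_derivative(phi, p, (1.0, 2.0, 2.0)))  # analytic value: 8/3
```

The printed numbers agree with the analytic values only up to floating-point error, since the derivatives are approximated rather than computed symbolically.
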
### Infinite Series

- **Convergence of Sequence ($a_n$):** $\lim_{n \to \infty} a_n = L$ (finite)
- **Convergence of Series ($\sum a_n$):** A series converges if its sequence of partial sums ($S_N = \sum_{n=1}^N a_n$) converges.
- **Tests for Convergence:**
  - **Comparison Test:** If $0 \le a_n \le b_n$:
    - If $\sum b_n$ converges, then $\sum a_n$ converges.
    - If $\sum a_n$ diverges, then $\sum b_n$ diverges.
  - **Cauchy's Root Test:** For $\sum a_n$, let $L = \lim_{n \to \infty} |a_n|^{1/n}$.
    - If $L < 1$, series converges.
    - If $L > 1$, series diverges.
    - If $L = 1$, test is inconclusive.
  - **D'Alembert's Ratio Test:** For $\sum a_n$, let $L = \lim_{n \to \infty} \left| \frac{a_{n+1}}{a_n} \right|$.
    - If $L < 1$, series converges.
    - If $L > 1$, series diverges.
    - If $L = 1$, test is inconclusive.
  - **Raabe's Test:** If the Ratio Test is inconclusive ($L=1$), let $R = \lim_{n \to \infty} n \left( \left| \frac{a_n}{a_{n+1}} \right| - 1 \right)$.
    - If $R > 1$, series converges.
    - If $R < 1$, series diverges.
  - **Leibnitz's Test (for Alternating Series):** An alternating series $\sum (-1)^{n-1} b_n$ converges if:
    1. $b_n$ is positive.
    2. $b_n$ is decreasing ($b_{n+1} \le b_n$).
    3. $\lim_{n \to \infty} b_n = 0$.
- **Absolute Convergence:** $\sum a_n$ converges absolutely if $\sum |a_n|$ converges. Absolute convergence implies convergence.
- **Conditional Convergence:** $\sum a_n$ converges, but $\sum |a_n|$ diverges.

### First Order Ordinary Differential Equations

- **General Form:** $\frac{dy}{dx} = f(x,y)$ or $M(x,y)dx + N(x,y)dy = 0$
- **Exact Equations:** $M(x,y)dx + N(x,y)dy = 0$ is exact if $\frac{\partial M}{\partial y} = \frac{\partial N}{\partial x}$.
  - Solution: $\int M dx + \int (N - \frac{\partial}{\partial y}\int M dx) dy = C$, where $y$ is held constant in $\int M dx$.
- **Linear Equations:** $\frac{dy}{dx} + P(x)y = Q(x)$
  - Integrating Factor (IF): $e^{\int P(x)dx}$
  - Solution: $y \cdot \text{IF} = \int Q(x) \cdot \text{IF} dx + C$
- **Bernoulli's Equation:** $\frac{dy}{dx} + P(x)y = Q(x)y^n$
  - Substitute $v = y^{1-n}$ to convert to linear form.
- **Euler's Equations (Homogeneous):** $x^n \frac{d^n y}{dx^n} + \dots + a_1 x \frac{dy}{dx} + a_0 y = 0$ (for first order this reduces to $a_1 x \frac{dy}{dx} + a_0 y = 0$, which is separable)
- **Equations Not of First Degree:**
  - **Solvable for $p$ ($p = \frac{dy}{dx}$):** Factorize the equation into factors linear in $p$, each of the form $p = f(x,y)$, and solve each as a separate first-order ODE.
  - **Solvable for $y$:** $y = f(x,p)$. Differentiate w.r.t. $x$ to get an ODE in $x$ and $p$.
  - **Solvable for $x$:** $x = f(y,p)$. Differentiate w.r.t. $y$ to get an ODE in $y$ and $p$.
  - **Clairaut's Equation:** $y = xp + f(p)$
    - General Solution: $y = cx + f(c)$ (by replacing $p$ with $c$)
    - Singular Solution: Obtained by eliminating $p$ from $y = xp + f(p)$ and $x + f'(p) = 0$.

### Ordinary Differential Equations of Higher Orders

- **Linear ODE with Constant Coefficients:** $a_n y^{(n)} + \dots + a_1 y' + a_0 y = F(x)$
  - **Characteristic Equation:** $a_n m^n + \dots + a_1 m + a_0 = 0$. Roots $m_1, m_2, \dots, m_n$.
  - **Complementary Function (C.F.):**
    - Real & Distinct Roots: $c_1e^{m_1x} + c_2e^{m_2x} + \dots$
    - Real & Repeated Roots ($m$ repeated $k$ times): $(c_1 + c_2x + \dots + c_kx^{k-1})e^{mx}$
    - Complex Conjugate Roots ($\alpha \pm i\beta$): $e^{\alpha x}(c_1\cos\beta x + c_2\sin\beta x)$
  - **Particular Integral (P.I.) - D-operator Method:** $P.I. = \frac{1}{f(D)}F(x)$
    - If $F(x) = e^{ax}$: $P.I. = \frac{1}{f(a)}e^{ax}$ (if $f(a) \neq 0$). If $f(a)=0$, multiply by $x$ and differentiate the denominator: $\frac{x}{f'(a)}e^{ax}$ (if $f'(a) \neq 0$).
    - If $F(x) = \sin(ax)$ or $\cos(ax)$: Replace $D^2$ with $-a^2$ in $f(D)$, provided the result is nonzero.
    - If $F(x) = x^k$: Expand $(f(D))^{-1}$ in ascending powers of $D$ by the binomial theorem and apply it to $x^k$.
    - If $F(x) = e^{ax}V(x)$: $P.I. = e^{ax}\frac{1}{f(D+a)}V(x)$.
- **Method of Variation of Parameters:** For $y'' + P(x)y' + Q(x)y = R(x)$
  - Let $y_1, y_2$ be linearly independent solutions of the homogeneous equation.
  - $y_p = u_1 y_1 + u_2 y_2$, where $u_1 = -\int \frac{y_2 R}{W} dx$ and $u_2 = \int \frac{y_1 R}{W} dx$.
  - Wronskian $W = y_1 y_2' - y_2 y_1'$.
- **Cauchy-Euler Equations:** $ax^2y'' + bxy' + cy = F(x)$
  - Substitute $x = e^z \implies z = \ln x$.
  - $xy' = Dy$, $x^2y'' = D(D-1)y$, etc. (where $D = \frac{d}{dz}$)
  - Converts to a linear ODE with constant coefficients in terms of $z$.

### Calculus of Functions of Several Variables

- **Functions of Several Variables:** $f(x,y)$, $f(x,y,z)$.
- **Limit and Continuity:** Defined as in the single-variable case, but the limit must be the same along every path of approach. Two paths giving different values show that the limit does not exist.
- **Partial Derivatives:**
  - $\frac{\partial f}{\partial x}$ (treat $y,z$ as constants)
  - $\frac{\partial f}{\partial y}$ (treat $x,z$ as constants)
  - **Higher Order:** $\frac{\partial^2 f}{\partial x^2}$, $\frac{\partial^2 f}{\partial y^2}$, $\frac{\partial^2 f}{\partial z^2}$, $\frac{\partial^2 f}{\partial x \partial y} = \frac{\partial^2 f}{\partial y \partial x}$ (Clairaut's theorem, if the mixed partial derivatives are continuous).
- **Homogeneous Functions:** A function $f(x,y)$ is homogeneous of degree $n$ if $f(tx,ty) = t^n f(x,y)$.
- **Euler's Theorem for Homogeneous Functions:** If $f(x,y)$ is a homogeneous function of degree $n$, then $x \frac{\partial f}{\partial x} + y \frac{\partial f}{\partial y} = nf$.
  - For three variables: $x \frac{\partial f}{\partial x} + y \frac{\partial f}{\partial y} + z \frac{\partial f}{\partial z} = nf$.

### Multiple Integration

- **Line Integrals:**
  - $\int_C f(x,y,z) ds$ (scalar field, arc length)
  - $\int_C \vec{F} \cdot d\vec{r} = \int_C (F_1 dx + F_2 dy + F_3 dz)$ (vector field, work done)
- **Double Integrals:** $\iint_R f(x,y) dA$
  - Over rectangular or general regions, evaluate as an iterated integral: $\int_a^b \int_{g_1(x)}^{g_2(x)} f(x,y) dy dx$.
  - Change of order of integration.
  - Polar coordinates: $x = r\cos\theta$, $y = r\sin\theta$, $dA = r dr d\theta$.
- **Triple Integrals:** $\iiint_R f(x,y,z) dV$
  - Iterated integrals like $\int \int \int f(x,y,z) dz dy dx$.
  - Cylindrical coordinates: $x = r\cos\theta$, $y = r\sin\theta$, $z = z$, $dV = r dz dr d\theta$.
  - Spherical coordinates: $x = \rho\sin\phi\cos\theta$, $y = \rho\sin\phi\sin\theta$, $z = \rho\cos\phi$, $dV = \rho^2\sin\phi d\rho d\phi d\theta$.
- **Green's Theorem:** Relates a line integral around a simple closed curve $C$ to a double integral over the plane region $D$ bounded by $C$.
  - $\oint_C (P dx + Q dy) = \iint_D \left(\frac{\partial Q}{\partial x} - \frac{\partial P}{\partial y}\right) dA$
- **Stokes' Theorem:** Relates a surface integral of the curl of a vector field over a surface $S$ to a line integral of the vector field over the boundary curve $C$ of $S$.
  - $\oint_C \vec{F} \cdot d\vec{r} = \iint_S (\nabla \times \vec{F}) \cdot d\vec{S}$
- **Gauss's Divergence Theorem:** Relates the flux of a vector field out of a closed surface $S$ to a triple integral of the divergence of the field over the volume $V$ enclosed by $S$.
  - $\iint_S \vec{F} \cdot d\vec{S} = \iiint_V (\nabla \cdot \vec{F}) dV$
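
As a sanity check on Green's theorem and the polar area element $dA = r dr d\theta$, both sides can be approximated numerically on the unit disk. This is only a sketch under stated assumptions: the field $P = -x^2y$, $Q = xy^2$ is a hypothetical example, the integrand $\frac{\partial Q}{\partial x} - \frac{\partial P}{\partial y} = x^2 + y^2$ is worked out by hand, and the function names and step counts are illustrative. Both integrals use a plain midpoint rule.

```python
import math


def line_integral_unit_circle(P, Q, steps=2000):
    """Approximate the counterclockwise integral of P dx + Q dy over x = cos t, y = sin t."""
    dt = 2 * math.pi / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * dt  # midpoint of the k-th parameter interval
        x, y = math.cos(t), math.sin(t)
        dx, dy = -math.sin(t) * dt, math.cos(t) * dt
        total += P(x, y) * dx + Q(x, y) * dy
    return total


def double_integral_unit_disk(g, r_steps=400, theta_steps=400):
    """Approximate the integral of g over the unit disk using dA = r dr dtheta."""
    dr = 1.0 / r_steps
    dtheta = 2 * math.pi / theta_steps
    total = 0.0
    for i in range(r_steps):
        r = (i + 0.5) * dr
        for j in range(theta_steps):
            theta = (j + 0.5) * dtheta
            x, y = r * math.cos(theta), r * math.sin(theta)
            total += g(x, y) * r * dr * dtheta
    return total


# Hypothetical example field: P = -x^2 y, Q = x y^2.
def P(x, y):
    return -x**2 * y


def Q(x, y):
    return x * y**2


# dQ/dx - dP/dy = y^2 + x^2, computed by hand for this particular field.
def curl_integrand(x, y):
    return x**2 + y**2


lhs = line_integral_unit_circle(P, Q)
rhs = double_integral_unit_disk(curl_integrand)
print(lhs, rhs)  # both should be close to pi/2
```

For this field the exact value of both sides is $\int_0^{2\pi}\int_0^1 r^2 \cdot r \, dr \, d\theta = \pi/2$, so the two printed numbers should agree with each other and with $\pi/2$ up to discretization error.
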