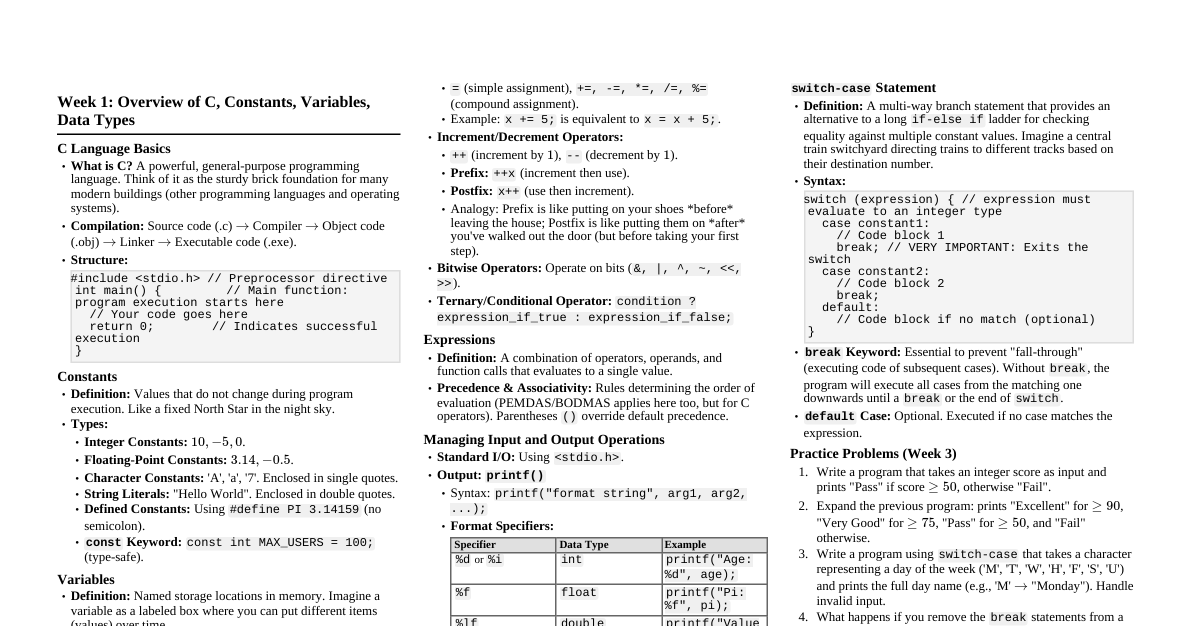

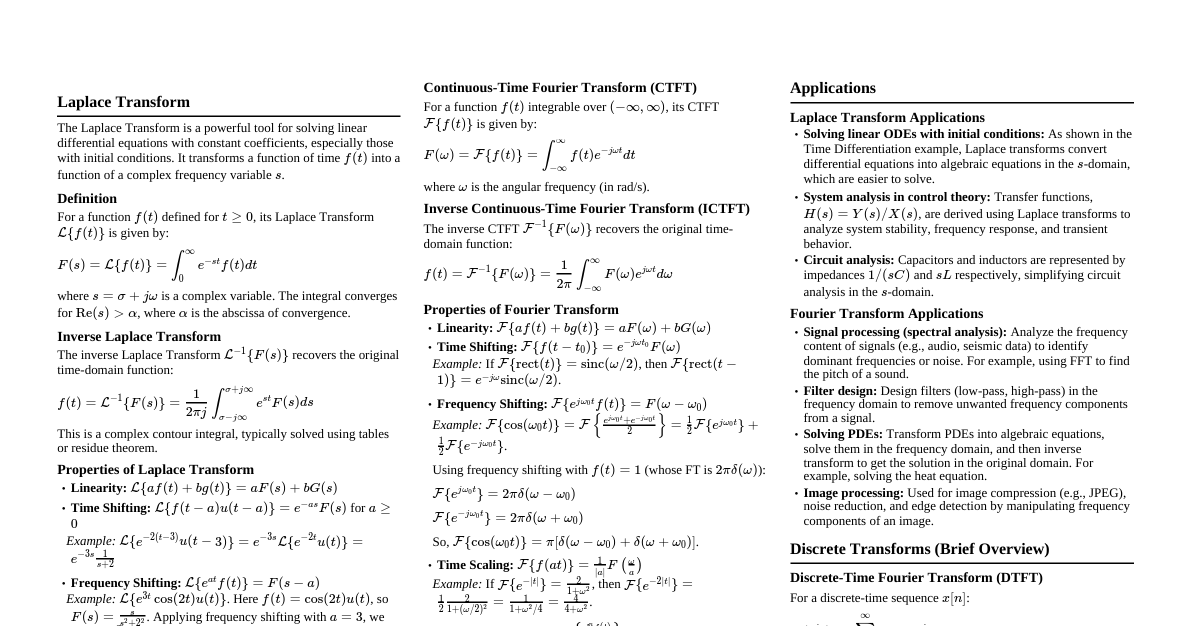

## 1. Introduction to Random Variables (RVs)

A random variable (RV) maps each outcome of a random experiment to a numerical value.

- Purpose: Quantify random phenomena by assigning probabilities to numerical outcomes.
- Example: When rolling two dice, let the RV $X$ be the sum of the two faces (e.g., $X=7$).

## 2. Types of Random Variables

### 2.1. Discrete Random Variables

The possible values are countable (finite or countably infinite).

- Characteristics: Values are distinct points on the real number line; typically associated with **counting**.
- Examples: Number of bedrooms, floor number, number of people, number of test attempts.

### 2.2. Continuous Random Variables

The possible values are uncountable: the RV can take any value within an interval.

- Characteristics: Values fill an interval rather than sitting at distinct points, and are infinitely divisible; typically associated with **measurements**.
- Examples: Apartment size, distance, temperature, height, speed, exam time.

## 3. Probability Mass Function (PMF) - For Discrete RVs

The PMF describes the probability distribution of a **discrete random variable**.

### 3.1. Definition

A PMF, denoted $P(X = x_i)$ or $p(x_i)$, gives the probability that a discrete RV $X$ takes the specific value $x_i$.

### 3.2. Properties of PMF ($p(x_i)$)

- Non-negativity: $P(X = x_i) \ge 0$ for all possible values $x_i$. (For any value $x$ that $X$ cannot take, $P(X = x) = 0$.)
- Summation to one: $\sum_{\text{all } x_i} P(X = x_i) = 1$.

Example of validating a PMF: Given $P(X=0)=1/4$, $P(X=1)=1/2$, $P(X=2)=1/4$:

1. All probabilities are $\ge 0$.
2. Sum: $1/4 + 1/2 + 1/4 = 1$.

This is a valid PMF.

Example of finding a constant in a PMF: Suppose $P(X=i) = C \cdot \lambda^i / i!$ for $i=0,1,2,\dots$, with $\lambda > 0$.

1. Non-negativity requires $C \ge 0$, since $\lambda^i / i! > 0$.
2. Normalization, using the series $\sum_{i=0}^\infty \lambda^i / i! = e^\lambda$:

$$\sum_{i=0}^\infty P(X=i) = C \sum_{i=0}^\infty \frac{\lambda^i}{i!} = C e^\lambda = 1$$

So $C = e^{-\lambda}$.

### 3.3. Graphing a PMF

A PMF is typically drawn as a bar graph: the x-axis shows the values and the y-axis the probabilities. Each bar is a "mass" of probability sitting at one value.
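The two PMF checks in Section 3.2 can be sketched in Python. This is a minimal illustration rather than part of the original notes: `is_valid_pmf` and `poisson_normalizer_check` are hypothetical helper names, a PMF is assumed to be stored as a dict mapping each value $x_i$ to $P(X = x_i)$, and the infinite series is truncated after a finite number of terms.

```python
import math
from fractions import Fraction


def is_valid_pmf(pmf, tol=1e-12):
    """Check both PMF properties for a dict {x_i: P(X = x_i)}.

    Hypothetical helper; `tol` absorbs floating-point rounding in the sum.
    """
    probs = list(pmf.values())
    if any(p < 0 for p in probs):        # non-negativity
        return False
    return abs(sum(probs) - 1) <= tol    # summation to one


def poisson_normalizer_check(lam, terms=100):
    """Return (C, total), where C = e^{-lam} and total approximates
    sum_{i >= 0} C * lam^i / i!, truncated after `terms` terms."""
    c = math.exp(-lam)
    total = sum(c * lam**i / math.factorial(i) for i in range(terms))
    return c, total


# The example from Section 3.2: P(X=0)=1/4, P(X=1)=1/2, P(X=2)=1/4
example = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
print(is_valid_pmf(example))            # True
print(is_valid_pmf({0: 0.5, 1: 0.6}))   # False (sums to 1.1)

# With C = e^{-lambda}, the truncated sum is 1 up to rounding
c, total = poisson_normalizer_check(2.0)
print(round(total, 12))                 # 1.0
```

Using `Fraction` for the hand-worked example keeps the sum exactly 1; with floating-point probabilities, the tolerance is what makes the check usable.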
Types of PMF graphs:

- Uniform distribution: All values have equal probability (e.g., a fair die roll).
  [Figure: Uniform PMF (Fair Die); x-axis: Values, y-axis: Probability]
- Symmetric distribution: Probabilities are symmetric around a central value (e.g., the sum of two dice).
  [Figure: Symmetric PMF; x-axis: Values, y-axis: Probability]
- Positively skewed: The tail extends to the right; probabilities are higher for smaller values.
  [Figure: Positively Skewed PMF; x-axis: Values, y-axis: Probability]
- Negatively skewed: The tail extends to the left; probabilities are higher for larger values.
  [Figure: Negatively Skewed PMF; x-axis: Values, y-axis: Probability]
- No pattern: No discernible symmetry or skewness.

## 4. Probability Density Function (PDF) - For Continuous RVs

The PDF describes the probability distribution of a **continuous random variable**.

### 4.1. Definition

A PDF, denoted $f(x)$, is a function such that the probability of $X$ falling in an interval $[a, b]$ is the integral of $f(x)$ over that interval:

$$P(a \le X \le b) = \int_a^b f(x)\,dx$$

Unlike a PMF value, $f(x)$ is not itself a probability (it can exceed 1); for a continuous distribution, $P(X = x) = 0$ for every single value $x$.

### 4.2. Properties of PDF ($f(x)$)

- Non-negativity: $f(x) \ge 0$ for all $x$.
- Total area is one: $\int_{-\infty}^\infty f(x)\,dx = 1$.

Example: Uniform distribution (continuous). If $X \sim U(a, b)$, then $f(x) = \frac{1}{b-a}$ for $a \le x \le b$, and $0$ otherwise. For $U(0, 1)$, $f(x) = 1$ for $0 \le x \le 1$, and $\int_0^1 1\,dx = 1$, so this is a valid PDF.

[Figure: Uniform PDF, constant height $1/(b-a)$ between $a$ and $b$; x-axis: x, y-axis: f(x)]

## 5. Cumulative Distribution Function (CDF) - For Both Discrete and Continuous RVs

The CDF gives the probability that a random variable takes a value less than or equal to a given point.

### 5.1. Definition

- For discrete RVs: $F(a) = P(X \le a) = \sum_{x_i \le a} P(X = x_i)$.
- For continuous RVs: $F(a) = P(X \le a) = \int_{-\infty}^a f(x)\,dx$.

### 5.2. Purpose

The CDF makes interval probabilities easy to compute:

- $P(X > a) = 1 - F(a)$.
- $P(X \ge a) = 1 - F(a)$ (for continuous RVs, since $P(X = a) = 0$).
- $P(a < X \le b) = F(b) - F(a)$.

### 5.3. Properties of CDF ($F(x)$)

- Non-decreasing: As $x$ increases, $F(x)$ either stays the same or increases.
- Range: $0 \le F(x) \le 1$.
- Limits: $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$.
- Right-continuous: For all $x$, $\lim_{y \to x^+} F(y) = F(x)$.

### 5.4. Relationship between PDF and CDF (for Continuous RVs)

$f(x) = \frac{d}{dx} F(x)$ wherever $F(x)$ is differentiable.

### 5.5. Graphing a CDF

- For discrete RVs: A step function that jumps by $P(X = x_i)$ at each value $x_i$.
  [Figure: Discrete CDF example, a step function over x = 0, 1, 2 with F(x) levels 0, 0.25, 0.75, 1; axes: x, F(x)]
- For continuous RVs: A continuous, non-decreasing curve rising from 0 to 1.
  [Figure: Continuous CDF example, rising from 0 to 1; axes: x, F(x)]

## 6. Bernoulli Trial

A random experiment with exactly two possible outcomes:

- Success (S), with probability $p$.
- Failure (F), with probability $1-p$.

Example: Tossing a coin (Head = Success, Tail = Failure).

## 7. Bernoulli Random Variable

The random variable $X$ for a Bernoulli trial is $X=1$ if a success occurs and $X=0$ if a failure occurs.

- PMF: $P(X=x) = \begin{cases} p & \text{if } x=1 \\ 1-p & \text{if } x=0 \end{cases}$
- Mean: $E(X) = p$
- Variance: $Var(X) = p(1-p)$

## 8. Binomial Distribution

Arises from $n$ independent Bernoulli trials (the same experiment repeated, with the same success probability each time).

- Parameters: $n$ = number of trials; $p$ = probability of success in each trial; $1-p$ = probability of failure in each trial.
- Random variable: $X$ = number of successes in $n$ trials.
- Mean: $E(X) = np$
- Variance: $Var(X) = np(1-p)$

PMF: the probability of getting exactly $k$ successes is

$$P(X=k) = \binom{n}{k} p^k (1-p)^{n-k}, \quad k = 0,1,2,\dots,n$$

Example: Toss a fair coin 10 times. The probability of exactly 4 heads is

$$P(X=4) = \binom{10}{4} (0.5)^4 (0.5)^6 = \frac{210}{1024} \approx 0.205$$

## 9. Geometric Distribution

A discrete probability distribution modeling the number of independent Bernoulli trials needed to get the first success.

PMF: the probability that the first success occurs on the $k$-th trial is

$$P(X=k) = (1-p)^{k-1}p$$

where:

- $p$ = probability of success,
- $1-p$ = probability of failure,
- $k$ = trial number ($k=1,2,3,\dots$).
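A short Python sketch of the binomial and geometric PMFs from Sections 8 and 9, used as a numerical check of the formulas. The function names are hypothetical, and the infinite sums over the geometric support are truncated at $k = 500$, where the remaining tail $(1-p)^{500}$ is negligible for the assumed $p = 0.2$.

```python
import math


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p): C(n, k) p^k (1-p)^(n-k)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def geometric_pmf(k, p):
    """P(X = k) = (1-p)^(k-1) p: first success on trial k, for k = 1, 2, ..."""
    return (1 - p) ** (k - 1) * p


# Section 8 example: exactly 4 heads in 10 tosses of a fair coin (= 210/1024)
print(binomial_pmf(4, 10, 0.5))  # 0.205078125

# The binomial mean computed from the PMF matches np = 3
n, p = 10, 0.3
binom_mean = sum(k * binomial_pmf(k, n, p) for k in range(n + 1))
print(round(binom_mean, 10))  # 3.0

# Geometric with p = 0.2: probabilities sum to ~1 and the mean is ~1/p = 5
q = 0.2
ks = range(1, 501)
print(round(sum(geometric_pmf(k, q) for k in ks), 10))      # 1.0
print(round(sum(k * geometric_pmf(k, q) for k in ks), 10))  # 5.0
```

`math.comb` (Python 3.8+) computes $\binom{n}{k}$ exactly as an integer, so only the powers of $p$ and $1-p$ introduce rounding.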
CDF: the probability that the first success happens on or before the $k$-th trial is

$$P(X \le k) = 1 - (1-p)^k$$

- Expectation (mean): $E(X) = \frac{1}{p}$
- Variance: $Var(X) = \frac{1-p}{p^2}$

The memoryless property: given that the first $m$ trials were all failures, the probability of needing more than $n$ additional trials is the same as the probability of needing more than $n$ trials from the start. Past failures carry no information about the future.

$$P(X > m + n \mid X > m) = P(X > n)$$

For example, if you have already rolled a die 10 times without getting a 4, the probability of getting a 4 on the 11th roll is still $1/6$.

Notation summary for the geometric distribution ($X$ = trial of the first success):

| Notation | Meaning | Formula | In other words... |
|---|---|---|---|
| $P(X \ge k)$ | First success on or after trial $k$ | $(1-p)^{k-1}$ | You fail the first $k-1$ times. |
| $P(X > k)$ | First success strictly after trial $k$ | $(1-p)^k$ | You fail the first $k$ times. |
| $P(X \le k)$ | First success on or before trial $k$ | $1-(1-p)^k$ | You don't fail all of the first $k$ times. |
| $P(X < k)$ | First success strictly before trial $k$ | $1-(1-p)^{k-1}$ | You don't fail all of the first $k-1$ times. |

## 10. Negative Binomial Distribution

Denoted $X \sim \text{Negative Binomial}(r, p)$.

- Definition: $X$ counts the number of trials needed to get $r$ successes.
- Parameters: $r$, a positive integer (the number of successes we want); $p$, the probability of success in each independent Bernoulli trial.
- It generalizes the geometric distribution (the geometric is the special case $r=1$).
- Range: $\{r, r+1, r+2, \dots\}$ (at least $r$ trials are needed).

PMF:

$$P(X=k) = \binom{k-1}{r-1} p^r (1-p)^{k-r}$$

where:

- $k$ = the trial on which the $r$-th success occurs,
- $p^r$ = probability of the $r$ successes,
- $(1-p)^{k-r}$ = probability of the $k-r$ failures,
- $\binom{k-1}{r-1}$ = number of ways to place the first $r-1$ successes among the first $k-1$ trials (the last trial must be a success).

## 11. Hypergeometric Distribution

Used when drawing samples **without replacement**.

PMF:

$$P(X=k) = \frac{\binom{r}{k} \binom{N-r}{m-k}}{\binom{N}{m}}$$

where $k = \max(0, m - (N-r)), \dots, \min(r, m)$.

Parameters:

- $N$: total size of the population.
- $r$: total number of items in the population classified as successes.
- $m$: size of the sample drawn from the population.
- $k$: number of successes in the sample.
- $\binom{a}{b} = \frac{a!}{b!(a-b)!}$ is the binomial coefficient.

Mean and variance:

- Mean: $E(X) = m \frac{r}{N}$
- Variance: $Var(X) = m \frac{r}{N} \frac{N-r}{N} \frac{N-m}{N-1}$

The term $\frac{N-m}{N-1}$ is the **finite population correction factor**. It approaches 1 when $N$ is much larger than $m$, so the variance approaches the binomial variance $m p (1-p)$ with $p = r/N$.

## 12. Poisson Random Variable

Counts the number of times an event happens in a **fixed interval of time or space**, given that events:

- occur independently of one another,
- occur at a constant average rate ($\lambda$), and
- cannot occur at exactly the same instant.

Definition: A random variable $X$ is a Poisson random variable with parameter $\lambda > 0$ if

$$P(X=k) = \frac{e^{-\lambda}\lambda^k}{k!}, \quad k = 0,1,2,\dots$$

where $\lambda$ = average number of occurrences in the interval (mean rate) and $k$ = actual number of occurrences observed.

Properties:

- Mean: $E[X] = \lambda$
- Variance: $Var(X) = \lambda$ (mean = variance).
- Support: $X \in \{0,1,2,\dots\}$.

Examples: Phone calls per minute, accidents per day, defects per page, earthquakes per year.

Connection to the binomial: if $n$ is large and $p$ is small, $\text{Binomial}(n,p)$ can be approximated by a Poisson distribution with parameter $\lambda = np$.

In simple words: a Poisson random variable counts "rare events" happening in a fixed interval.

## 13. Expectation, Deviation and Variance

### Introduction to Random Variables

A random variable is a numerical description of the outcome of a statistical experiment.

- Discrete random variables take on a finite or countably infinite number of values.
- Continuous random variables can take any value within a given range.

For discrete random variables, the distribution is described by:

- Probability Mass Function (PMF): specifies the probability of each possible value; each probability is non-negative, and the probabilities sum to 1.
- Cumulative Distribution Function (CDF): gives the probability that the random variable takes a value less than or equal to a specific value.

### Expectation of a Random Variable

The expectation (expected value) of a random variable measures its central tendency, or long-run average value.

Definition and interpretation: The expected value of a discrete random variable $X$, denoted $E[X]$ or $\mu$, is the probability-weighted average of its possible values:

$$E[X] = \sum_i x_i P(X=x_i)$$

For a continuous random variable $X$:

$$E[X] = \int_{-\infty}^\infty x f(x)\,dx$$

If the experiment is repeated many times, the average of the observed outcomes approaches the expected value.

Properties of expectation: Let $X$ and $Y$ be random variables, and $A$, $B$, $c$ be constants.

- Expectation of a function of $X$: if $g(X)$ is a function of $X$, then $E[g(X)] = \sum_i g(x_i) P(X=x_i)$ (discrete) or $E[g(X)] = \int_{-\infty}^\infty g(x) f(x)\,dx$ (continuous).
- Constant multiple: $E[cX] = cE[X]$.
- Constant addition: $E[X+c] = E[X]+c$.
- Linearity: $E[AX+B] = AE[X]+B$.
- Sum of random variables: $E[X+Y] = E[X]+E[Y]$, whether or not $X$ and $Y$ are independent. For $k$ random variables: $E[\sum_{i=1}^k X_i] = \sum_{i=1}^k E[X_i]$.

Important note: $E[X^2]$ is generally not equal to $(E[X])^2$.

Examples of expectation:

- Rolling a fair die once: $X \in \{1,2,3,4,5,6\}$, $P(X=x)=1/6$, so $E[X] = (1+2+\dots+6)/6 = 21/6 = 3.5$.
- Rolling two dice (sum): $X = X_1+X_2$, so $E[X] = E[X_1]+E[X_2] = 3.5+3.5 = 7$.
- Tossing a fair coin three times (number of heads): $X = X_1+X_2+X_3$, where $X_i = 1$ if toss $i$ is a head, so $E[X] = 0.5+0.5+0.5 = 1.5$.
- Bernoulli RV: $X=1$ with probability $p$, $X=0$ with probability $1-p$; $E[X]=p$.
- Discrete uniform RV: $X \in \{1,\dots,n\}$, $P(X=x)=1/n$; $E[X]=(n+1)/2$.
- Hypergeometric RV (sample of size $m$, as in Section 11): $E[X] = m(r/N)$.

### Variance of a Random Variable

Variance measures the spread (dispersion) of a random variable's values around its expected value.

Motivation: Expectation alone does not fully describe a distribution. For example, let $X=0$ with probability 1, let $Y$ take the values $-2$ and $2$ with probability 0.5 each, and let $Z$ take the values $-20$ and $20$ with probability 0.5 each. All three have expectation 0, but their spreads are vastly different.
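The expectation examples above can be checked exactly with a small Python sketch. It assumes a PMF is stored as a dict `{x_i: P(X = x_i)}` with `Fraction` probabilities; `expectation` and `hypergeometric_pmf` are hypothetical helper names, and the hypergeometric parameters $N=20$, $r=7$, $m=5$ are arbitrary illustration values.

```python
from fractions import Fraction
from math import comb


def expectation(pmf, g=lambda x: x):
    """E[g(X)] = sum_i g(x_i) P(X = x_i) for a PMF given as {x_i: P(X = x_i)}."""
    return sum(g(x) * p for x, p in pmf.items())


def hypergeometric_pmf(N, r, m):
    """PMF of the number of successes in a sample of size m drawn without
    replacement from N items, r of which are successes (Section 11)."""
    total = comb(N, m)
    lo, hi = max(0, m - (N - r)), min(r, m)
    return {k: Fraction(comb(r, k) * comb(N - r, m - k), total)
            for k in range(lo, hi + 1)}


die = {x: Fraction(1, 6) for x in range(1, 7)}
print(expectation(die))                    # 7/2
print(expectation(die, lambda x: x * x))   # 91/6, not (7/2)^2 = 49/4

# Sum of two independent dice, built from all 36 equally likely outcomes
two_dice = {}
for a in range(1, 7):
    for b in range(1, 7):
        two_dice[a + b] = two_dice.get(a + b, 0) + Fraction(1, 36)
print(expectation(two_dice))               # 7, i.e. 3.5 + 3.5

# Hypergeometric mean agrees with m * r / N
N, r, m = 20, 7, 5
print(expectation(hypergeometric_pmf(N, r, m)), Fraction(m * r, N))  # 7/4 7/4

# Variance motivation: Y and Z share the mean 0 but differ in spread
Y = {-2: Fraction(1, 2), 2: Fraction(1, 2)}
Z = {-20: Fraction(1, 2), 20: Fraction(1, 2)}
print(expectation(Y), expectation(Z))      # 0 0
```

Passing a function `g` reuses the same weighted sum for $E[g(X)]$, which is how the $E[X^2]$ versus $(E[X])^2$ contrast is shown.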
Definition and computational formula: Variance is denoted $Var(X)$ or $\sigma^2$:

$$Var(X) = E[(X-\mu)^2]$$

- For discrete RVs: $Var(X) = \sum_i (x_i-\mu)^2 P(X=x_i)$.
- For continuous RVs: $Var(X) = \int_{-\infty}^\infty (x-\mu)^2 f(x)\,dx$.

A computationally convenient formula (valid for both):

$$Var(X) = E[X^2] - (E[X])^2$$

Properties of variance: Let $X$ and $Y$ be random variables, and $A$, $B$, $c$ be constants.

- Constant multiple: $Var(cX) = c^2 Var(X)$.
- Constant addition: $Var(X+c) = Var(X)$.
- Linear transformation: $Var(AX+B) = A^2 Var(X)$.
- Sum of random variables (general case): In general, $Var(X+Y) \ne Var(X)+Var(Y)$.
- Sum of independent random variables: If $X$ and $Y$ are independent, then $Var(X+Y) = Var(X)+Var(Y)$. This extends to any number of independent RVs: $Var(\sum_{i=1}^k X_i) = \sum_{i=1}^k Var(X_i)$.

Examples of variance:

- Rolling a fair die once: $E[X]=3.5$ and $E[X^2]=91/6 \approx 15.167$, so $Var(X) = 91/6 - (3.5)^2 = 35/12 \approx 2.917$.
- Rolling two dice (sum): $Var(X_1+X_2) = Var(X_1)+Var(X_2) = 35/12 + 35/12 = 35/6 \approx 5.833$.
- Tossing a fair coin three times (number of heads): $Var(X_1+X_2+X_3) = 0.25+0.25+0.25 = 0.75$.
- Bernoulli RV: $Var(X) = p(1-p)$.
- Discrete uniform RV on $\{1,\dots,n\}$: $Var(X) = (n^2-1)/12$.

### Standard Deviation of a Random Variable

Another measure of dispersion, expressed in the same units as the random variable itself.

Definition: Denoted $SD(X)$ or $\sigma$, the standard deviation is the non-negative square root of the variance:

$$SD(X) = \sqrt{Var(X)}$$

Properties of standard deviation: Let $X$ be a random variable and $c$ a constant.

- Constant multiple: $SD(cX) = |c| \cdot SD(X)$.
- Constant addition: $SD(X+c) = SD(X)$.

Applications and interpretation:

Measuring risk: A higher standard deviation indicates greater variability and hence higher risk.

Example: Lawyer's fee options.

- Option 1 (fixed fee): $X=25{,}000$. $E[X]=25{,}000$, $SD(X)=0$ (no risk).
- Option 2 (gambling fee): $Y=50{,}000$ (probability 0.5) or $0$ (probability 0.5). $E[Y]=25{,}000$, $SD(Y)=25{,}000$ (high risk).

Both options have the same expected value, but Option 2 is riskier because of its higher $SD$.
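A Python sketch of the computational formula $Var(X) = E[X^2] - (E[X])^2$, reproducing the die, two-dice, and lawyer's-fee numbers above and checking the Poisson property mean = variance from Section 12. The helper names are hypothetical, PMFs are dicts `{x_i: P(X = x_i)}`, and the Poisson PMF uses an arbitrarily chosen $\lambda = 3$, truncated at $k = 100$.

```python
import math
from fractions import Fraction


def mean(pmf):
    """E[X] for a PMF given as {x_i: P(X = x_i)}."""
    return sum(x * p for x, p in pmf.items())


def variance(pmf):
    """Var(X) via the computational formula E[X^2] - (E[X])^2."""
    mu = mean(pmf)
    return sum(x * x * p for x, p in pmf.items()) - mu * mu


die = {x: Fraction(1, 6) for x in range(1, 7)}
print(variance(die))  # 35/12 (about 2.917)

# Sum of two independent dice: the variances add
two_dice = {}
for a in range(1, 7):
    for b in range(1, 7):
        two_dice[a + b] = two_dice.get(a + b, 0) + Fraction(1, 36)
print(variance(two_dice))  # 35/6 (about 5.833)

# Lawyer's fee options: same mean, different standard deviation
fixed = {25_000: Fraction(1)}
gamble = {50_000: Fraction(1, 2), 0: Fraction(1, 2)}
print(mean(fixed), mean(gamble))                                # 25000 25000
print(math.sqrt(variance(fixed)), math.sqrt(variance(gamble)))  # 0.0 25000.0

# Poisson(lambda = 3), truncated at k = 100: mean and variance are both ~3
lam = 3.0
poisson = {k: math.exp(-lam) * lam**k / math.factorial(k) for k in range(101)}
print(round(mean(poisson), 10), round(variance(poisson), 10))  # 3.0 3.0
```

Exact fractions make the two-dice result come out as exactly twice the single-die variance, matching the independence rule rather than only approximately.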
Family bonus example: Anita's bonus $X$ has $E[X]=15{,}000$ and $SD(X)=3{,}000$; Sanjay's bonus $Y$ has $E[Y]=20{,}000$ and $SD(Y)=4{,}000$. Assume $X$ and $Y$ are independent. For the total family bonus $X+Y$:

- $E[X+Y]=E[X]+E[Y]=35{,}000$.
- $Var(X)=(3{,}000)^2=9{,}000{,}000$ and $Var(Y)=(4{,}000)^2=16{,}000{,}000$.
- $Var(X+Y)=Var(X)+Var(Y)=25{,}000{,}000$ (by independence).
- $SD(X+Y)=\sqrt{25{,}000{,}000}=5{,}000$, which is less than $SD(X)+SD(Y)=7{,}000$.

### Summary of Key Properties

| Property | Expectation ($E[X]$) | Variance ($Var(X)$) | Standard Deviation ($SD(X)$) |
|---|---|---|---|
| Definition (discrete) | $\sum x_i P(X=x_i)$ | $\sum (x_i-\mu)^2 P(X=x_i)$ | $\sqrt{Var(X)}$ |
| Definition (continuous) | $\int x f(x)\,dx$ | $\int (x-\mu)^2 f(x)\,dx$ | $\sqrt{Var(X)}$ |
| Computational form | | $E[X^2]-(E[X])^2$ | |
| Units | Same as $X$ | Units of $X$ squared | Same as $X$ |
| $cX$ | $cE[X]$ | $c^2 Var(X)$ | $\lvert c\rvert SD(X)$ |
| $X+c$ | $E[X]+c$ | $Var(X)$ | $SD(X)$ |
| $AX+B$ | $AE[X]+B$ | $A^2 Var(X)$ | $\lvert A\rvert SD(X)$ |
| $X+Y$ | $E[X]+E[Y]$ | $Var(X)+Var(Y)$ (if $X,Y$ independent) | $\sqrt{Var(X)+Var(Y)}$ (if $X,Y$ independent) |
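As a final sanity check on the summary table, the sketch below applies an affine transformation $aX + b$ to a fair die and confirms the $AX+B$ row exactly, then redoes the family-bonus arithmetic. The helpers and the choice $a=-3$, $b=10$ are illustrative assumptions; $a \ne 0$ is required so that distinct values of $X$ map to distinct values of $aX+b$.

```python
import math
from fractions import Fraction


def mean(pmf):
    """E[X] for a PMF given as {x_i: P(X = x_i)}."""
    return sum(x * p for x, p in pmf.items())


def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    mu = mean(pmf)
    return sum(x * x * p for x, p in pmf.items()) - mu * mu


def affine(pmf, a, b):
    """PMF of aX + b; assumes a != 0 so that no two values collide."""
    return {a * x + b: p for x, p in pmf.items()}


die = {x: Fraction(1, 6) for x in range(1, 7)}
a, b = -3, 10
y = affine(die, a, b)

# Row "AX+B" of the summary table, checked exactly with fractions
print(mean(y) == a * mean(die) + b)         # True
print(variance(y) == a**2 * variance(die))  # True
print(math.isclose(math.sqrt(variance(y)), abs(a) * math.sqrt(variance(die))))  # True

# Family bonus (independent X, Y): SDs combine through the variances
sd_x, sd_y = 3_000, 4_000
sd_total = math.sqrt(sd_x**2 + sd_y**2)
print(sd_total, sd_x + sd_y)  # 5000.0 7000
```

A negative $a$ is used deliberately: it shows why the standard-deviation column needs $|A|$, while the variance column squares the sign away.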