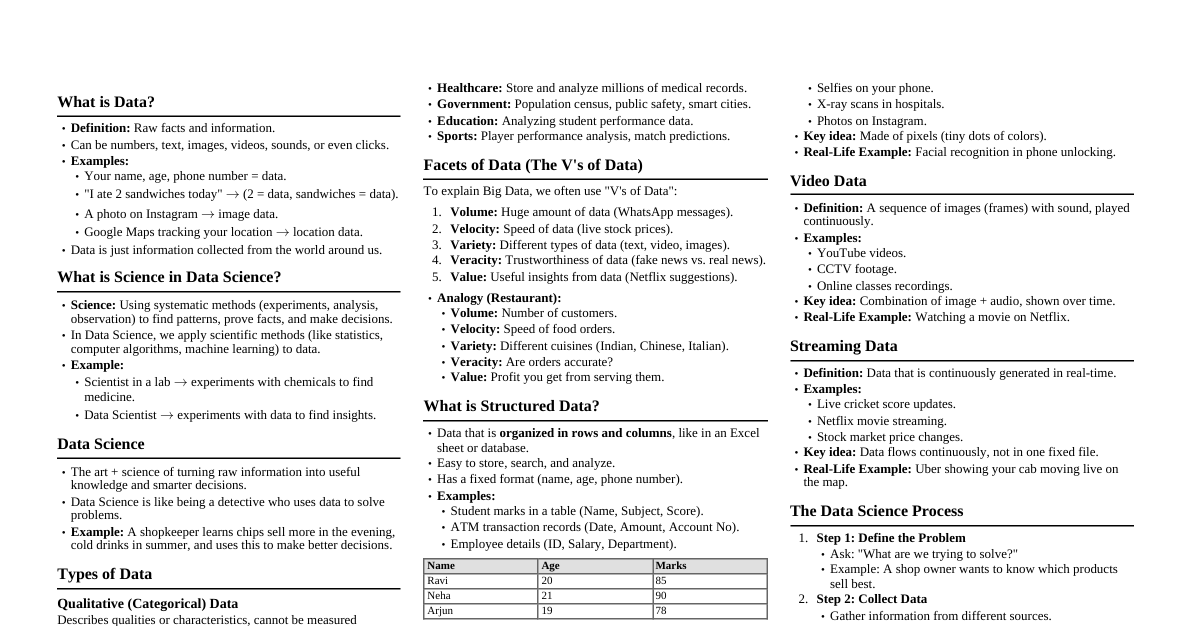

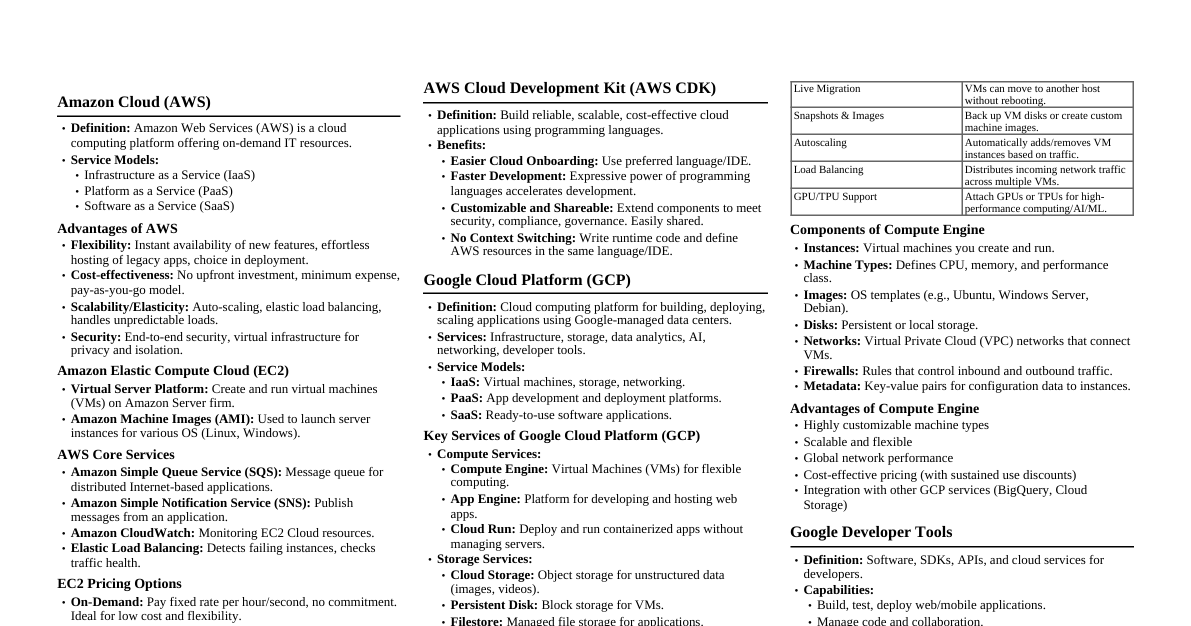

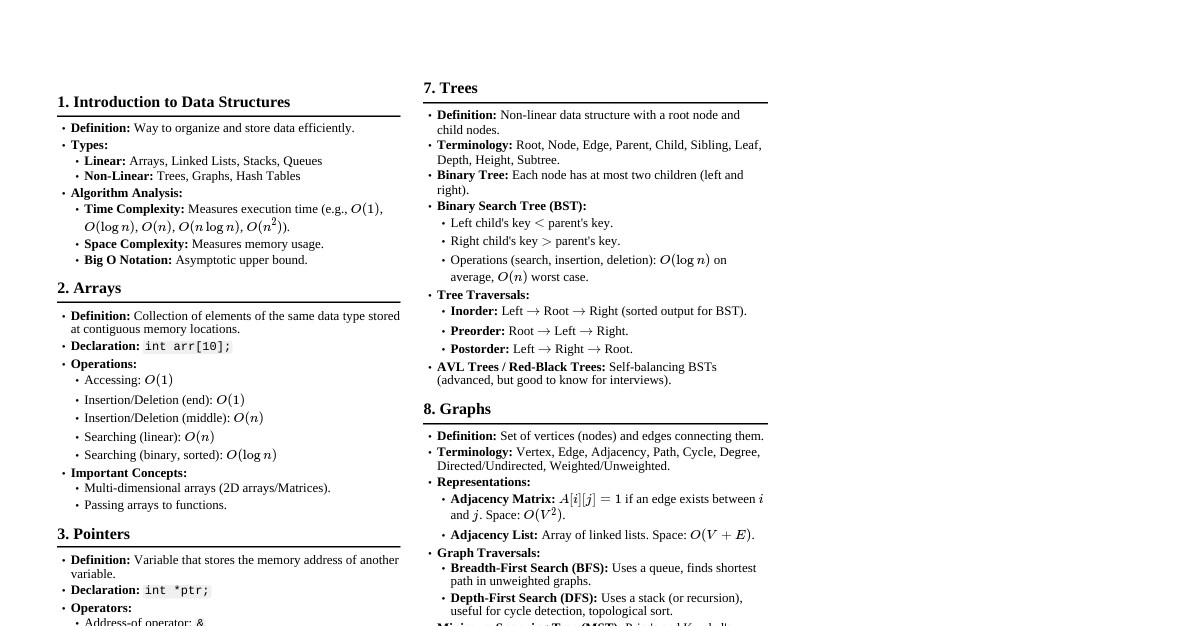

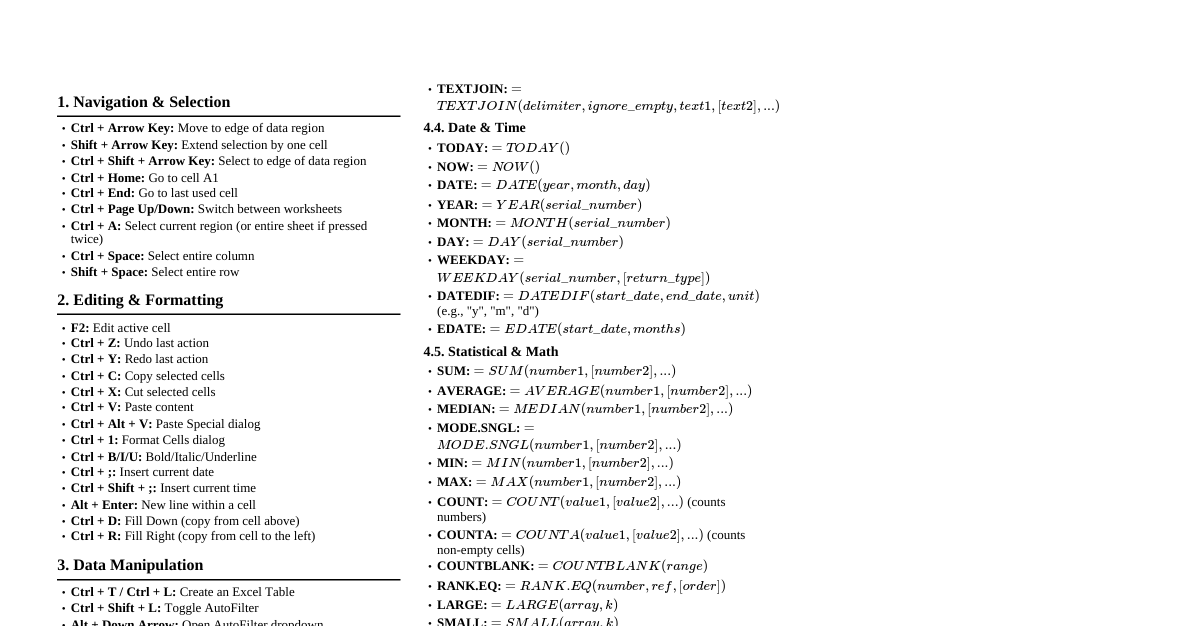

MCQ Based on Unit-I (Big Data Concepts)

What is Big Data primarily characterized by?
A) High frequency and low volume
B) High volume, high variety, and high velocity
C) Low complexity and high velocity
D) High veracity and low variety

Who is credited with introducing the “3 Vs” model to define Big Data?
A) McKinsey Global Institute
B) The National Institute of Standards and Technology (NIST)
C) Doug Laney (Gartner).
D) IBM

Which of the following is NOT mentioned as a benefit of Big Data analysis?
A) Predicting future trends
B) Uncovering hidden patterns
C) Improving manual data entry efficiency
D) Making data-driven decisions

During which period did the term “big data” start gaining prominence?
A) 1960s
B) 1970s-1980s
C) 1990s
D) 2000s-Present

Which framework was developed to handle the distributed processing of massive datasets?
A) SQL
B) NoSQL
C) Hadoop
D) Python

Which characteristic of Big Data refers to the speed at which data is generated, processed, and analyzed?
A) Volume
B) Velocity
C) Variety
D) Veracity

What type of data lacks a predefined data model or organization?
A) Structured Data
B) Semi-Structured Data
C) Unstructured Data
D) Variable Data

Which “V” of Big Data deals with the trustworthiness and reliability of data sources?
A) Volume
B) Veracity
C) Variety
D) Value

Which characteristic of Big Data describes the temporal relevance and lifespan of datasets?
A) Validity
B) Volatility.
C) Variability
D) Visualization

Which characteristic of Big Data emphasizes the diversity of data types and sources?
A) Volume
B) Velocity
C) Variety
D) Value

What does “Veracity” in Big Data focus on ensuring?
A) Speed of data processing
B) Accuracy and reliability of data
C) Diversity of data types
D) Value extraction from data

Which industry relies on Big Data for real-time decision-making based on rapid data processing?
A) Agriculture
B) Finance.
C) Education
D) Manufacturing

What does “Variability” in Big Data describe?
A) The speed at which data is generated
B) The diversity of data sources
C) The inconsistency or fluctuation in data points.
D) The reliability and accuracy of data

Which characteristic of Big Data involves ensuring that data accurately reflects real-world phenomena?
A) Validity.
B) Volatility.
C) Veracity
D) Value

What does “Velocity” in Big Data emphasize?
A) The size and scale of data
B) The speed at which data is generated and processed
C) The diversity of data types
D) The trustworthiness of data sources

Which characteristic of Big Data focuses on the potential risks associated with data collection and analysis?
A) Volatility
B) Vulnerability
C) Veracity
D) Visualization

What role does “Visualization” play in Big Data analytics?
A) Ensuring data accuracy.
B) Enhancing data storage
C) Communicating complex data patterns.
D) Managing data variability

Which industry example involves analyzing structured, semi-structured, and unstructured data types?
A) Retail.
B) Agriculture
C) Construction
D) Mining

What is the main challenge posed by “Volume” in Big Data?
A) Ensuring data accuracy
B) Managing large datasets.
C) Analyzing diverse data sources
D) Visualizing data trends.

Which characteristic of Big Data involves ensuring data is consistent and dependable?
A) Veracity.
B) Velocity
C) Validity
D) Variety

What does “Value” represent in the context of Big Data?
A) The speed of data analysis
B) The ultimate objective of data analytics.
C) The diversity of data sources
D) The reliability of data sources

Which characteristic of Big Data focuses on the ability to make sense of large datasets through visual representations?
A) Veracity
B) Visualization
C) Volatility
D) Variety

What does “Variety” in Big Data refer to?
A) The speed at which data is generated
B) The diversity of data sources and types
C) The accuracy of data sources
D) The trustworthiness of data sources

What does “Validity” in Big Data emphasize?
A) The accuracy and relevance of data.
B) The speed of data processing
C) The diversity of data sources
D) The visualization of data patterns

Which characteristic of Big Data involves managing the temporal relevance and usage lifespan of data?
A) Volatility.
B) Vulnerability
C) Veracity
D) Value

Which characteristic of Big Data emphasizes the ability to derive meaningful insights and tangible benefits from data analytics efforts?
A) Velocity
B) Variety
C) Value
D) Validity

Which aspect of Big Data refers to the potential risks associated with unauthorized access to sensitive information?
A) Veracity
B) Variability
C) Vulnerability
D) Velocity

Which role does visualization play in Big Data analytics?
A) Ensuring data reliability
B) Protecting data privacy
C) Transforming data into graphical representations
D) Analyzing data velocity

MCQ on DATASET

What is a dataset?
A) A collection of related data points.
B) A random assortment of information
C) A type of software application.
D) An operating system.

What are data elements in a dataset?
A) The individual units of information
B) The entire collection of data
C) The software used to analyze data
D) The charts and graphs used to present data

What could be an example of a variable in a dataset about customer transactions?
A) Purchase amount.
B) A customer’s email address
C) The date of data collection
D) The software used for transactions

What is metadata?
A) Data about data
B) The raw data itself
C) The software used to process data
D) The visualization of data

Which type of dataset is organized into a predefined format like tables in a relational database?
A) Structured
B) Unstructured
C) Semi-structured
D) Metadata

Which of the following is an example of an unstructured dataset?
A) Text documents.
B) Tables in a database
C) CSV files
D) Excel spreadsheets

For what purpose are datasets used in machine learning?
A) To train models to recognize patterns and make predictions
B) To display data visually
C) To create operating systems
D) To manage databases

How do researchers use datasets?
A) To test hypotheses and conduct experiments
B) To create computer programs
C) To build websites
D) To manage social media accounts

What type of dataset has some organization but does not fit neatly into traditional databases?
A) Semi-structured
B) Structured
C) Unstructured
D) Metadata

What is a key characteristic of structured data?
A) It lacks a predefined format.
B) It is organized into predefined fields with fixed data types.
C) It primarily includes images and videos.
D) It does not fit into traditional databases.

Which storage system is commonly used for structured data?
A) MongoDB
B) Cassandra
C) MySQL
D) Elasticsearch

What type of data would be considered unstructured?
A) Customer transaction records
B) XML files
C) Text documents and social media posts
D) CSV files with headers

Which characteristic best describes semi-structured data?
A) It has a rigid schema.
B) It is completely unorganized.
C) It incorporates tags and markers for organization.
D) It can only be stored in relational databases.

Which database system is well-suited for managing semi-structured data?
A) Oracle.
B) PostgreSQL
C) Couchbase.
D) SQL Server

What type of data storage is typically used for unstructured data?
A) Relational databases
B) File systems or NoSQL databases
C) Spreadsheets
D) Metadata catalogs

What is a common use of metadata in document management systems?
A) Storing raw text data
B) Providing additional information like document type, creation date, and author
C) Analyzing data trends
D) Storing multimedia files

Which of the following is an example of metadata in a digital photo?
A) The resolution of the photo
B) The photo’s pixel values
C) The camera settings and GPS coordinates
D) The photo’s file size

In what kind of applications is semi-structured data commonly used?
A) Financial transactions
B) Content management systems and IoT applications
C) Relational databases
D) Document management systems

Why is metadata important in data management?
A) It helps to increase the size of datasets.
B) It provides context and structure, enhancing data usability and accessibility.
C) It replaces the need for data storage.
D) It is only used for security purposes.

MCQ on Data Analytics

What is the primary goal of data analytics?
A) To generate data from multiple sources
B) To uncover hidden patterns and insights for informed decision-making
C) To clean and prepare data
D) To visualize data in charts and graphs

Which step in the data analytics process involves removing errors and inconsistencies from the data?
A) Data Collection
B) Data Analysis
C) Data Cleaning and Preparation
D) Interpretation and Visualization

Descriptive analytics aims to answer which question?
A) What happened?
B) Why did it happen?
C) What will happen?
D) What should we do?

What type of analytics is used to investigate the reasons behind certain events or outcomes?
A) Descriptive Analytics
B) Diagnostic Analytics
C) Predictive Analytics
D) Prescriptive Analytics

Which of the following techniques are typically used in predictive analytics?
A) Statistical models and machine learning algorithms.
B) Root cause analysis
C) Data visualization
D) Data cleaning

Prescriptive analytics provides recommendations on actions to take based on which other type of analytics?
A) Descriptive Analytics
B) Diagnostic Analytics
C) Predictive Analytics.
D) All of the above

Which application of data analytics is used for predicting diseases and optimizing patient care?
A) Business and Finance
B) Healthcare
C) Retail and E-commerce
D) Manufacturing and Operations

In which sector is data analytics used to analyze traffic patterns and optimize public transportation routes?
A) Business and Finance
B) Healthcare
C) Government and Public Sector.
D) Retail and E-commerce

What is one of the key benefits of data analytics in decision-making?
A) Reducing data collection costs
B) Enabling data-driven, informed decisions
C) Simplifying data cleaning processes
D) Generating data reports

Which of the following best describes the application of data analytics in retail and e-commerce?
A) Managing inventory and optimizing pricing strategies
B) Monitoring patient vitals
C) Predicting equipment failures
D) Analyzing traffic patterns

What does data cleaning and preparation ensure?
A) Data is collected from various sources
B) Data is accurate and reliable for analysis.
C) Data is visualized effectively
D) Data is interpreted correctly

Which type of data can be described as raw and unorganized?
A) Structured Data
B) Unstructured Data
C) Semi-Structured Data
D) Cleaned Data.

How do visualization tools help in data analytics?
A) By collecting data from various sources
B) By cleaning and preparing data
C) By presenting findings in a clear and accessible manner.
D) By applying machine learning algorithms

Which of the following is an example of using predictive analytics in e-commerce?
A) Reviewing sales data from the past year
B) Investigating high patient readmission rates
C) Forecasting customer demand during holiday seasons.
D) Optimizing production schedules.

What competitive advantage can organizations gain through data analytics?
A) Increased reliance on intuition
B) Improved performance and proactive market response
C) Reduced data collection efforts
D) Decreased need for data visualization

What is the main goal of data analysis?
A) To generate new data
B) To draw conclusions from existing data.
C) To collect data from various sources
D) To visualize data in charts

Which of the following is the first step in the data analysis process?
A) Data Cleaning and Pre-processing
B) Data Collection
C) Data Visualization
D) Statistical Analysis

During which step of data analysis are errors removed and formats standardized?
A) Data Collection
B) Data Cleaning and Pre-processing
C) Exploratory Data Analysis (EDA)
D) Machine Learning and Predictive Analytics

What does Exploratory Data Analysis (EDA) involve?
A) Cleaning data to remove errors
B) Collecting data from various sources
C) Visualizing data to understand its characteristics
D) Building predictive models

Which of the following techniques is used in statistical analysis to determine relationships within the data?
A) Data Collection
B) Regression Analysis
C) Data Visualization
D) Data Cleaning

How does machine learning contribute to data analysis?
A) By collecting data
B) By cleaning and standardizing data
C) By building models that learn from data and make predictions
D) By visualizing data in charts and graphs

Which step in data analysis involves the use of charts, graphs, and dashboards?
A) Data Collection
B) Data Cleaning and Pre-processing
C) Data Visualization
D) Statistical Analysis

In the context of data analysis, what is the purpose of interpretation and decision-making?
A) To clean and standardize data
B) To visualize data for better understanding
C) To extract actionable insights and support informed decision-making.
D) To collect data from various sources

What is one of the key benefits of data analysis for businesses?
A) Reducing the need for data collection
B) Increasing reliance on intuition
C) Improving operational efficiency and performance.
D) Simplifying data cleaning processes

What distinguishes data analysis from data analytics?
A) Data analysis is about examining and interpreting data, while data analytics encompasses a broader process including data management and advanced tools.
B) Data analysis uses only statistical methods, while data analytics uses machine learning.
C) Data analysis focuses on data visualization, while data analytics focuses on data collection.
D) Data analysis is used only in finance, while data analytics is used in various industries

MCQ on UNIT-2 (Big Data Initiatives & Operations)

What drives Big Data initiatives in organizations?
A) Technological trends
B) Business needs
C) Random experiments
D) Employee preferences

Which of the following is a key consideration for Big Data adoption?
A) Ignoring security measures
B) Disregarding cloud services
C) Establishing governance processes
D) Limiting data collection to small volumes

What is crucial for ensuring data quality in Big Data initiatives?
A) Collecting as much data as possible
B) Ensuring data is old and well-defined
C) Ensuring data is accurate, complete, and reliable
D) Ignoring data sources

Which of the following describes the role of encryption in Big Data security?
A) Converting data into a coded format only authorized users can understand
B) Setting up rules and permissions
C) Installing firewalls
D) Conducting regular security audits

Why is provenance important in Big Data?
A) To increase data volume
B) To track the origin, history, and lineage of data
C) To ignore data quality
D) To limit data processing

What is a key feature of provenance in Big Data?
A) Ignoring data collection
B) Origin tracking
C) Reducing data volume
D) Random data processing

Which of the following is NOT an operational prerequisite for Big Data?
A) Data Management Framework
B) Governance Policies
C) Ignoring security measures
D) Skilled Workforce

What should organizations consider when moving data to the cloud?
A) Ignoring scalability
B) Cloud Adoption for scalability and flexibility
C) Reducing data collection
D) Avoiding data integration

What does data procurement involve?
A) Collecting irrelevant data
B) Ensuring data quality and legality
C) Ignoring data security
D) Limiting data collection methods

Which is a key point in ensuring privacy in Big Data?
A) Ignoring privacy risks
B) Collecting unnecessary data
C) Using strong methods like encryption
D) Avoiding legal frameworks

What is a critical aspect of managing Big Data initiatives effectively?
A) Complete lack of oversight
B) Balancing innovation with management
C) Ignoring business needs
D) Reducing data collection

Which of the following is part of the Big Data Analytics Lifecycle?
A) Ignoring data cleansing
B) Establishing a business case
C) Avoiding data aggregation
D) Skipping data procurement

What should organizations ensure when collecting personal information in Big Data?
A) Avoiding user consent
B) Following data privacy policies and legal frameworks
C) Ignoring ethical considerations
D) Collecting as much personal information as possible

What is necessary for a scalable Big Data infrastructure?
A) Limiting hardware and software capabilities
B) Ensuring the infrastructure can handle and grow with data needs
C) Reducing integration capabilities
D) Ignoring long-term planning

Why are continuous monitoring and optimization important in Big Data systems?
A) To ignore system performance
B) To ensure systems run smoothly and efficiently
C) To reduce collaboration
D) To avoid long-term planning

MCQ on Real-time Support & Governance

What is a significant challenge of real-time support in big data systems?
A) Lack of data
B) The volume, velocity, and variety of data
C) Too much control over data
D) Ignoring data privacy

Which tool is used for stream processing to analyze data as it comes in?
A) Apache Kafka
B) Redis
C) Prometheus
D) Apache Hadoop

Why is in-memory processing used in real-time big data environments?
A) To store data in databases
B) For faster access and analysis
C) To slow down data processing
D) To reduce data volume

What is a challenge associated with data velocity in real-time data streams?
A) Slow data arrival
B) The need to process data quickly
C) Lack of data variety
D) Data quality issues

Which architecture design allows systems to react instantly to data events?
A) Batch processing
B) Event-driven architecture
C) Data warehousing
D) Monolithic architecture

What is a crucial aspect of maintaining optimal performance in big data ecosystems?
A) Reducing data volume
B) Efficient resource management
C) Ignoring data variety
D) Limiting data quality checks

Which aspect is vital for ensuring data integrity and compliance in big data initiatives?
A) Ignoring data governance
B) Robust data governance frameworks
C) Limiting data security measures
D) Reducing data variety

What is necessary for processing and analyzing real-time data streams effectively?
A) Ignoring data velocity
B) Handling the dynamic and continuous nature of data flow
C) Avoiding data variety
D) Limiting resource management

Which challenge involves ensuring data is accurate and complete in big data analytics?
A) Latency
B) Data quality
C) Data velocity
D) Data variety

What is the purpose of a Big Data governance framework?
A) Ignoring data privacy
B) Regulating, standardizing, and evolving data management
C) Reducing data volume
D) Limiting resource management

Why is continuous improvement important in real-time support setups?
A) To ignore system performance
B) To keep pace with changing needs and technology
C) To reduce data variety
D) To limit data volume

Which approach helps in minimizing the delay between data generation and analysis?
A) Increasing data variety
B) Reducing data volume
C) Minimizing latency
D) Limiting data governance

What is the benefit of using cloud services for Big Data projects?
A) Inadequate data privacy
B) Limited capital investment for hardware procurement
C) Ignoring data velocity
D) Reducing data volume

What does a distinct methodology for controlling data flows in Big Data solutions involve?
A) Ignoring feedback mechanisms
B) Iterative refinement based on feedback
C) Reducing data quality checks
D) Limiting resource management

Which tool is mentioned for real-time monitoring to catch and fix issues quickly?
A) Apache Kafka
B) Redis
C) Prometheus
D) Apache Hadoop

MCQ on Big Data Analytics Lifecycle

What is the primary purpose of the Big Data analytics lifecycle?
A) To store data
B) To delete unnecessary data
C) To derive actionable insights through advanced analytics techniques
D) To make data inaccessible

Which stage involves evaluating the goals and objectives of a Big Data analysis project?
A) Data Visualization
B) Data Acquisition
C) Business Case Evaluation
D) Data Extraction

What is the goal of the Data Identification stage?
A) To visualize data
B) To identify the data needed for the project and its sources
C) To delete data
D) To store data

During which stage is data acquired from various sources and filtered?
A) Data Analysis
B) Data Aggregation
C) Data Acquisition & Filtering
D) Data Validation

What is the purpose of Data Validation & Cleansing?
A) To visualize data
B) To acquire data
C) To ensure data is accurate and clean
D) To store data

Which process is NOT part of the Data Aggregation & Representation stage?
A) Data Integration
B) Data Summarization
C) Data Visualization
D) Data Cleaning

What is the main goal of Data Analysis in the Big Data analytics lifecycle?
A) To clean data
B) To store data
C) To extract valuable insights from data
D) To delete data

Which approach in data analysis is focused on testing specific hypotheses or theories?
A) Exploratory Analysis
B) Confirmatory Analysis
C) Data Cleaning
D) Data Acquisition

What is the purpose of Data Visualization in the Big Data analytics lifecycle?
A) To store data
B) To clean data
C) To transform insights into visual representations
D) To delete data

Which stage involves using analysis results to improve business operations?
A) Data Acquisition
B) Data Cleaning
C) Utilization of Analysis Results
D) Data Aggregation

In the Business Case Evaluation stage, what do Key Performance Indicators (KPIs) help measure?
A) Data accuracy
B) Project progress
C) Data storage capacity
D) Data deletion rate

What does Metadata provide information about?
A) Data storage locations
B) Data accuracy
C) Additional information about the data, like size and source
D) Data deletion processes

What is the benefit of keeping an exact copy of the original dataset?
A) To delete unnecessary data
B) To visualize data better
C) To have a backup and ensure data is available for future use
D) To reduce storage costs

What does the Data Extraction stage focus on?
A) Extracting and transforming data into a compatible format
B) Visualizing data
C) Storing data
D) Deleting data

How does Exploratory Analysis help in data analysis?
A) By testing hypotheses
B) By exploring data patterns and generating new hypotheses
C) By deleting irrelevant data
D) By storing data efficiently

What is the purpose of creating visualizations in data analysis?
A) To clean data
B) To store data
C) To make analysis results easier to understand and interpret
D) To delete data

What is the key process in the Utilization of Analysis Results stage that helps improve business processes?
A) Data deletion
B) Data visualization
C) Business process optimization
D) Data acquisition

Which data analysis approach is more flexible and iterative?
A) Confirmatory Analysis
B) Exploratory Analysis
C) Data Cleaning
D) Data Acquisition

What type of computing is used in Big Data analysis to handle large volumes of data quickly?
A) Single-core computing
B) Distributed computing
C) Local computing
D) Cloud computing

Which of the following is NOT a benefit of Data Visualization?
A) Clarity and understanding
B) Enhanced data deletion
C) Spotting trends and anomalies
D) Improving decision making

MCQ on UNIT-3 (OLTP, OLAP, Data Warehousing, NoSQL)

What does OLTP stand for?
a) Offline transaction processing
b) Online transaction processing
c) Outline traffic processing
d) Online aggregate processing

What can OLTP systems be used for?
a) Banking
b) Point-of-sale
c) Ticket reservation systems
d) All of the above

The data is stored, retrieved, and updated in _________.
a) SMTP
b) FTP
c) OLAP
d) OLTP

OLTP systems are used for:
a) Access to operational and historical data
b) Providing various add-ons for quick decision-making
c) Supporting day-to-day business processes
d) Providing complicated computations

OLAP stands for:
a) Online analytical processing
b) Online analysis processing
c) Online transaction processing
d) Online aggregate processing

What are the applications of OLAP?
a) Business reporting for sales, marketing
b) Budgeting
c) Forecasting
d) All of the above

OLAP systems can be implemented as client-server systems.
a) True
b) False

The output of an OLAP query is displayed as a ______.
a) Matrix
b) Pivot
c) Both a and b
d) Excel

What is known as the heart of the warehouse?
a) Data mart database servers
b) Data mining database server
c) Data warehouse database servers
d) Relational database servers

Where is data warehousing used?
a) Transaction system
b) Logical system
c) Decision support system
d) None

Data in a data warehouse must be in normalized form.
a) True
b) False

Identify the options below that a data warehouse can include.
a) Database table
b) Online data
c) Flat files
d) All of the above

In data warehousing, a ______ typically focuses on a single subject area or line of business.
a) Lega Mart
b) Meta Mart
c) Data Mart
d) D Mart

The multidimensional model of a data warehouse is known as a:
a) Table
b) Tree
c) Data cube
d) Data structure

What do data warehouses support?
a) OLAP
b) OLAP and OLTP
c) OLTP
d) Operational database

Choose the incorrect property of the data warehouse.
a) Collection from heterogeneous sources
b) Subject oriented
c) Time variant
d) Volatile

DSS in data warehouse stands for _________.
a) Decision single system
b) Decision support system
c) Data support system
d) Data storable system

The process of removing deficiencies and loopholes in the data is called ____.
a) Data aggregation
b) Extraction of data
c) Compression of data
d) Cleaning of data

Most NoSQL databases support automatic _____, meaning that you get high availability and disaster recovery.
a) Processing
b) Scalability
c) Replication
d) All of the options

What is a multidimensional database also known as?
a) DBMS
b) Extended DBMS
c) RDBMS
d) Extended RDBMS

ETL stands for ___________.
a) Effect, transfer, and load
b) Explain, transfer, and load
c) Extract, transfer, and load
d) Extract, transform, and load

Identify the correct option that defines a data mart.
a) A subgroup of data warehouse
b) Another type of data warehouse
c) Not related to the data warehouse
d) None

An independent data mart derives data from:
a) Transactional Systems
b) Enterprise Data Warehouse
c) Both a and b
d) None of the above

What is traditional BI?
a) Business Investment
b) Business Improvement
c) Business Intelligence
d) None of the above

Ad-hoc reports are:
a) Generated at regular intervals
b) Customized and created as needed
c) Standardized and follow a preset format
d) Automatically generated without human intervention

Dashboards are:
a) Static reports with fixed data
b) Interactive visualizations that provide real-time data
c) Text-based documents for presenting information
d) Automatic notifications sent to stakeholders

Dashboards typically display:
a) Only financial data and metrics
b) Real-time updates on market trends
c) Key performance indicators (KPIs)
d) Historical data for long-term analysis

MCQ on Clusters, File Systems, Sharding & Replication

What is the primary purpose of a cluster in computing?
a) To store and manage data
b) To provide higher availability, scalability, and performance
c) To act as a backup server for critical data
d) To serve as a standalone computing unit

How are nodes within a cluster connected?
a) Via USB cables
b) Through wireless connections
c) Using Ethernet or fiber optic networking
d) Connected directly through hardware links

What advantage does a cluster provide for tasks?
a) It can execute tasks faster than individual computers
b) It reduces the need for network connectivity
c) It eliminates the need for specialized hardware on each node
d) It relies on cloud-based processing power

What is the purpose of a file system in computing?
a) To manage files on storage devices
b) To create directories and sub-directories only
c) To organize data in a hierarchical manner
d) To support different operating systems

What is a file in the context of a file system?
a) A collection of related information stored on secondary storage
b) A basic unit of storage that holds user data
c) A structure that contains other directories and files
d) A method used by an operating system to manage data

Which statement regarding different operating systems and file systems is true?
a) Windows OS supports ext (Extended File System)
b) Unix and Linux OS support NTFS (New Technology File System)
c) Mac OS supports APFS (Apple File System)
d) All operating systems support the same standard file system

What is a distributed file system?
a) A file system that allows files to be stored on multiple servers
b) A file system that only allows files to be stored on a single server
c) A file system that can only be accessed by one user at a time
d) A file system that does not allow for remote access

What is the advantage of using a distributed file system?
a) Increased security risks
b) Reduced storage capabilities
c) Improved accessibility and availability of files.
d) Limited scalability options

Which technology is commonly used in implementing a distributed file system?
a) Blockchain
b) Cloud computing
c) Centralized storage systems
d) Peer-to-peer networks

What is sharding in the context of distributed systems?
a) Storing data on multiple servers for redundancy
b) Dividing data into smaller subsets and distributing them across multiple servers
c) Replicating data across multiple servers for faster access
d) Encrypting data to ensure security in distributed systems

Which of the following is an advantage of sharding?
a) Increased storage capacity
b) Reduced network traffic
c) Improved scalability and performance
d) Simplified data recovery process

What is replication in the context of distributed systems?
a) Storing data on a single server for easy management
b) Dividing data into smaller subsets and distributing them across multiple servers
c) Duplicating data across multiple servers to ensure availability and fault tolerance
d) Encrypting copies of data to achieve higher security levels

Which technology is commonly used for replication in distributed systems?
a) Blockchain
b) Cloud computing
c) Peer-to-peer networks
d) Database synchronization techniques

What are the benefits of replication in distributed systems?
a) Improved fault tolerance, high availability, and better read performance
b) Decreased storage costs due to reduced redundancy
c) Simplified management processes with centralized control over all replicas
d) Reduced network latency through efficient routing algorithms

What technique ensures consistency among shards or replicas?
a) Voting-based consensus algorithms like Paxos or Raft
b) Versions and timestamps using vector clocks.
c) Lazy propagation where updates are eventually propagated consistently
d) Multiple copies of data

What does the CAP theorem in distributed systems state?
a) Consistency, Availability, and Partition tolerance cannot all be guaranteed together; only two can be guaranteed at a time
b) Consistency, Availability, and Performance are the primary concerns in distributed systems
c) The CAP theorem defines the maximum capacity of a distributed system
d) It is a framework for evaluating distributed databases

According to the CAP theorem, what does “consistency” refer to?
a) All nodes in a distributed system have access to updated data at all times
b) The ability of a system to remain operational despite network failures
c) Ensuring that all operations on the database follow predefined rules for validity
d) The speed at which data can be accessed and retrieved from a distributed database

Which aspect of the CAP theorem refers to a system's ability to continue operating even when some network messages are lost or delayed?
a) Consistency
b) Availability
c) Partition tolerance
d) Redundancy

In cases where partition tolerance is essential, what tradeoff must be made according to the CAP theorem?
a) Consistency versus performance
b) Consistency versus high availability
c) High availability versus performance
d) Consistency versus availability

What does the "A" in ACID stand for in the context of database transactions?
a) Atomicity
b) Availability
c) Auditability
d) Asymmetry

Which principle of ACID ensures that all operations within a transaction are completed successfully or none are completed at all?
a) Isolation
b) Consistency
c) Durability
d) Atomicity

What does the "C" in ACID refer to in the context of database transactions?
a) Consistency
b) Concurrency
c) Complexity
d) Compliance

Which ACID property ensures that concurrently executed transactions leave the system in the same state as if they had been executed serially?
a) Consistency
b) Independence
c) Isolation
d) Atomicity

Which of the following is a core principle of the BASE (Basically Available, Soft state, Eventual consistency) model for distributed systems?
a) Balanced
b) Availability
c) Atomicity
d) Auditable

What does the "S" in BASE stand for in the context of distributed systems?
a) Stateful
b) Scalable
c) Soft state
d) Stable

What does "Eventual consistency" refer to in the BASE model?
a) Strictly consistent data at all times
b) Guaranteed immediate consistency after every update
c) Data will eventually become consistent once all replicas have been updated
d) Consistency is not a priority

MCQ on UNIT-4 (Big Data Processing)

What distinguishes Big Data processing from traditional relational databases?
A. Centralized approach
B. Serial processing
C. Parallel and distributed processing
D. Small dataset handling

Which storage architecture is leveraged to extract real-time insights in Big Data analytics?
A. On-disk storage
B. Cloud storage
C. In-memory storage
D. Distributed storage

What is the advantage of scalability in parallel data processing?
A. Slower processing
B. Reduced fault tolerance
C. Increased cost
D. Efficient handling of large datasets

How does parallel processing contribute to faster data processing?
A. By increasing complexity
B. By reducing processing time
C. By introducing more overhead
D. By limiting computational resources

Which processing technique enables a computer to divide up work and execute many tasks simultaneously?
A. Serial processing
B. Distributed processing
C. Sequential processing
D. Parallel processing

Distributed data processing involves multiple machines connected through a network, forming a ______________.
A. Hadoop
B. culture
C. cluster
D. big data

In distributed data processing, each machine performs its own tasks while being part of a larger group, thus ______________ the workload and increasing efficiency.
A. sharing
B. dividing
C. collecting
D. accessing

Comparing parallel and distributed data processing, which one requires system components spread across multiple linked locations?
A. Parallel data processing
B. Distributed data processing
C. Both
D. Neither

Which component of Hadoop manages resources in a cluster?
A. Hadoop Common
B. Hadoop Distributed File System (HDFS)
C. Hadoop YARN
D. Hadoop MapReduce

_____________ is a distributed file system designed to store and manage large datasets across clusters of commodity hardware.
A. Hadoop
B. Big Data Distributed File System
C. Hadoop Distributed File System (HDFS)
D. Apache Hadoop HDFS

Big Data isn't worth anything until it's turned into something that can help make money. Hadoop is like a big toolbox that can handle and work with huge amounts of ______________.
A. Hadoop
B. Big Data
C. Distributed File System (HDFS)
D. Apache

Getting into big data is really hard and complicated, and it's not easy to get to it quickly. Compared to other options, Hadoop helps process and get to ______________ faster.
A. Data
B. connection
C. network
D. sharing

Every day, Facebook generates 500 TB of data, and airlines generate 10 TB every 30 minutes. They both use ______________.
A. SQL
B. Big Data
C. Distributed File System
D. MySQL

Big Data means having a huge amount of information, which can be organized or not organized. Hadoop is like a tool that helps make sense of Big Data, so we can understand it better.
A. True
B. False

Getting into big data is really hard and complicated, and it's not easy to get to it quickly. Compared to other options, Hadoop helps process and get to data faster.
A. True
B. False

MCQ on Workloads & MapReduce

Which type of workload typically involves queries for analyzing data in business intelligence?
A. Transactional
B. Batch
C. Hybrid
D. Predictive

Which system is commonly associated with transactional processing?
A. OLAP
B. OLTP
C. BI
D. ETL

Batch processing involves __________ data together for processing.
A. grouping
B. collecting
C. clustering
D. dividing

In computing, a cluster refers to a closely connected group of servers, or nodes, operating as a unified __________.
A. field
B. attribute
C. entity
D. data

MapReduce offers flexibility by not imposing any specific data model requirements on input data, making it suitable for processing __________ datasets.
A. schema-less
B. schema-large
C. sub schema
D. database

Clusters offer linear scalability, meaning that as data volumes grow, additional nodes can be seamlessly added to meet evolving demands.
A. True
B. False

The Shuffle and Sort phase in big data processing facilitates the movement and organization of intermediate data across the cluster before it's sent to the reduce tasks for final processing.
A. True
B. False

What role do clusters play in scalable storage solutions?
A. Vertical scalability
B. Linear scalability
C. Static scalability
D. Singular scalability

Which framework is celebrated for its scalability and reliability in batch processing?
A. Hadoop
B. Spark
C. MapReduce
D. Kafka

What is the primary function of the Partition phase in big data processing?
A. Combining data
B. Shuffling data
C. Dividing data into smaller chunks
D. Sorting data

Which phase is primarily designed for batch workloads rather than real-time processing in MapReduce?
A. Map phase
B. Shuffle and Sort phase
C. Reduce phase
D. Combine phase

What is the primary advantage of utilizing low-cost commodity nodes in a cluster environment?
A. Limited scalability
B. High performance
C. Reduced redundancy
D. Cost-effective scalability

What is a primary advantage of batch processing?
A. Real-time analysis
B. Scheduled intervals for data processing
C. Instant decision-making
D. Dynamic resource allocation

MCQ on Big Data Storage

What technology emerged in response to the challenge of managing colossal volumes of data in the early 2000s?
A. Relational databases
B. Big Data Storage
C. Solid State Drives (SSDs)
D. Converged architecture

What is a key advantage of using distributed file systems for storing large datasets?
A. Efficient handling of numerous small files
B. Reduced data redundancy
C. Slower read/write capabilities
D. Improved performance with sequential data access

Which transparency feature ensures that clients don't need to know the number or locations of file servers and storage devices in a distributed file system?
A. Access Transparency
B. Naming Transparency
C. Replication Transparency
D. Structure Transparency

Which type of database operates across a group of connected computers called a cluster?
A. Relational databases
B. NoSQL databases
C. In-memory databases
D. Distributed databases

Distributed file systems ensure data redundancy and availability by duplicating data across __________ locations through replication.
A. multiple
B. single
C. centralized
D. local

Relational databases organize data into tables with __________ and columns.
A. nodes
B. rows
C. keys
D. arrays

On-disk storage is often used when we need to store data for a __________ and don't need to access it frequently.
A. short time
B. medium time
C. long time
D. random time

Distributed file systems perform better with fewer, __________ files that are accessed sequentially.
A. smaller
B. larger
C. structured
D. semi-structured

Which database system is known for its high scalability compared to RDBMS?
A. RDBMS
B. Hadoop
C. NoSQL
D. MySQL

Which feature allows NoSQL databases to handle failures without downtime?
A. Static data schema
B. Highly available
C. Eventual consistency
D. Scale out instead of up

NoSQL databases typically interact with users through __________.
A. RESTful APIs
B. SQL queries
C. XML files
D. JSON formats

NoSQL databases require payment for licensed software.
A. True
B. False

Which database type utilizes documents to store data instead of rows and columns?
A. Key-Value
B. Column-Family
C. Document
D. Graph

Which database type is known for its simplicity, scalability, and speed in storing and retrieving data?
A. Key-Value
B. Document
C. Column-Family
D. Graph

In which type of database are documents stored in collections?
A. Key-Value
B. Document
C. Column-Family
D. Graph
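The last three questions contrast key-value and document databases. The following is a toy, in-memory Python sketch of that difference in data model, not any real database's API: the class and method names (`KeyValueStore`, `DocumentStore`, `put`, `get`, `insert`, `find`) are hypothetical. A key-value store only returns an opaque value when given its exact key, while a document store keeps JSON-like documents in named collections and can match them by any field.

```python
import json
from typing import Any, Dict, List, Optional


class KeyValueStore:
    """Hypothetical key-value model: the value is an opaque blob retrieved only by key."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: Dict[str, Any]) -> None:
        # Serialize the value; the store never looks inside it.
        self._data[key] = json.dumps(value)

    def get(self, key: str) -> Optional[Dict[str, Any]]:
        # Lookup is by exact key only; a missing key returns None.
        raw = self._data.get(key)
        return json.loads(raw) if raw is not None else None


class DocumentStore:
    """Hypothetical document model: JSON-like documents grouped into named collections."""

    def __init__(self) -> None:
        self._collections: Dict[str, List[Dict[str, Any]]] = {}

    def insert(self, collection: str, doc: Dict[str, Any]) -> None:
        # A collection is created the first time a document is inserted into it.
        self._collections.setdefault(collection, []).append(doc)

    def find(self, collection: str, **criteria: Any) -> List[Dict[str, Any]]:
        # Unlike the key-value store, documents can be matched on any field.
        # With no criteria, every document in the collection is returned.
        return [
            d for d in self._collections.get(collection, [])
            if all(d.get(field) == value for field, value in criteria.items())
        ]


if __name__ == "__main__":
    kv = KeyValueStore()
    kv.put("user:1", {"name": "Asha", "city": "Pune"})
    print(kv.get("user:1"))  # retrieved by key only

    docs = DocumentStore()
    docs.insert("users", {"_id": 1, "name": "Asha", "city": "Pune"})
    docs.insert("users", {"_id": 2, "name": "Ravi", "city": "Delhi"})
    print(docs.find("users", city="Pune"))  # queried by a field value
```

Real key-value and document databases add persistence, indexing, and distribution across a cluster; this sketch only shows how the two data models differ in what can be looked up.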