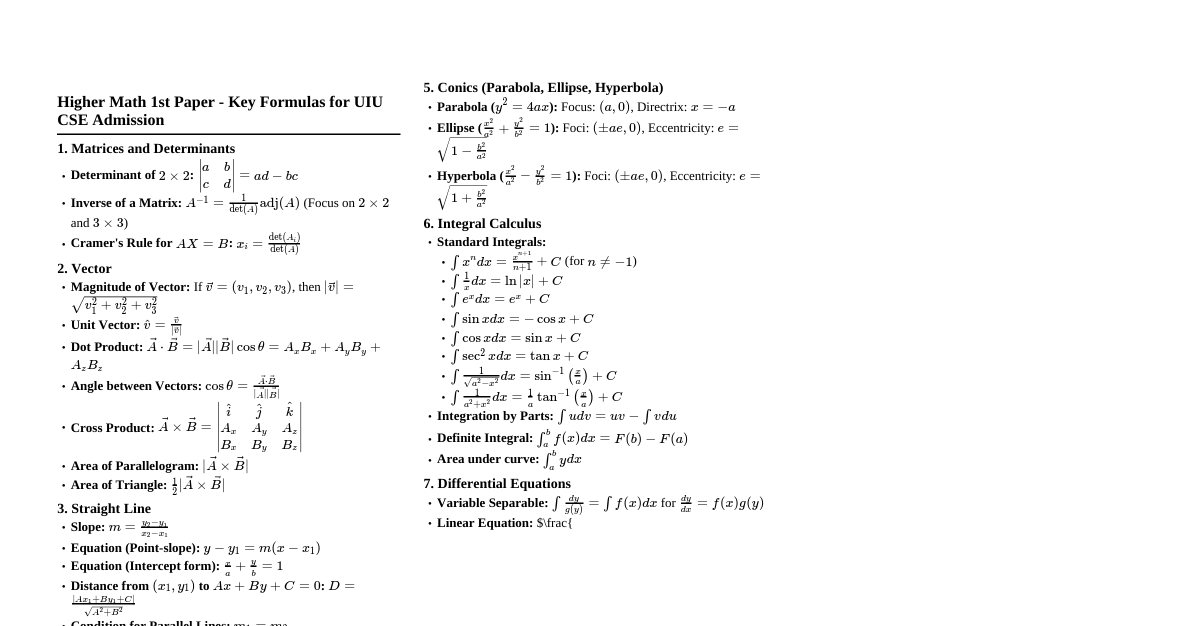

### Linear Algebra: Introduction

Linear Algebra is crucial for analyzing systems, signals, and control. In ESE, focus on matrix operations, determinants, eigenvalues, and eigenvectors. It is a high-weight area, often combined with other topics.

#### Why Study Linear Algebra?

- **Systems of Equations:** Solving multiple simultaneous equations, which is fundamental in circuit analysis and signal processing.
- **Transformations:** Understanding how systems modify inputs (e.g., filters, amplifiers).
- **Data Analysis:** Principal Component Analysis (PCA) is built on eigenvectors.

#### Key Concepts Intuition

- **Vectors:** Directed magnitudes, representing states or signals.
- **Matrices:** Transformations acting on vectors, representing system behavior.
- **Determinant:** The scaling factor of the transformation; it indicates invertibility.
- **Eigenvalues/Eigenvectors:** Special vectors that are only scaled by a transformation, representing the natural modes of a system.

### Matrix Operations

Understanding these operations is fundamental. ESE questions often involve basic matrix arithmetic.

#### 1. Addition and Subtraction

- **Condition:** Matrices must be of the same order ($m \times n$).
- **Formula:** $(A \pm B)_{ij} = A_{ij} \pm B_{ij}$
- **Intuition:** Element-wise combination.

#### 2. Scalar Multiplication

- **Formula:** $(kA)_{ij} = k \cdot A_{ij}$
- **Intuition:** Scaling all elements uniformly.

#### 3. Matrix Multiplication

- **Condition:** The number of columns in the first matrix must equal the number of rows in the second matrix ($A_{m \times n} \cdot B_{n \times p} = C_{m \times p}$).
- **Formula:** $(AB)_{ij} = \sum_{k=1}^{n} A_{ik} B_{kj}$
- **Intuition:** Each column of $AB$ is a linear combination of the columns of $A$, weighted by the entries of the corresponding column of $B$; equivalently, each row of $AB$ is a linear combination of the rows of $B$, weighted by the entries of the corresponding row of $A$. The product represents applying transformations in sequence ($B$ first, then $A$).
- **Common Trap:** Matrix multiplication is generally NOT commutative ($AB \neq BA$).
- **Shortcut:** For $2 \times 2$ matrices, multiply row-by-column directly:
$$ \begin{pmatrix} a & b \\ c & d \end{pmatrix} \begin{pmatrix} e & f \\ g & h \end{pmatrix} = \begin{pmatrix} ae+bg & af+bh \\ ce+dg & cf+dh \end{pmatrix} $$

#### 4. Transpose ($A^T$)

- **Formula:** $(A^T)_{ij} = A_{ji}$
- **Properties:**
  - $(A^T)^T = A$
  - $(A+B)^T = A^T + B^T$
  - $(kA)^T = kA^T$
  - $(AB)^T = B^T A^T$ (Order reverses!)
- **Intuition:** Swapping rows and columns. Useful for inner products and symmetry.

#### 5. Conjugate Transpose ($A^* = \overline{A^T}$)

- **Formula:** $(A^*)_{ij} = \overline{A_{ji}}$
- **Intuition:** Transpose, then take the complex conjugate of each element. Important for complex matrices.

#### ESE Example (2018, 2 Marks)

If $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$ and $B = \begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix}$, find $AB$.

**Solution:**

$$ AB = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} \begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix} = \begin{pmatrix} (1)(5)+(2)(7) & (1)(6)+(2)(8) \\ (3)(5)+(4)(7) & (3)(6)+(4)(8) \end{pmatrix} $$

$$ = \begin{pmatrix} 5+14 & 6+16 \\ 15+28 & 18+32 \end{pmatrix} = \begin{pmatrix} 19 & 22 \\ 43 & 50 \end{pmatrix} $$

**Self-Practice Problems:**

1. Given $A = \begin{pmatrix} 2 & -1 \\ 0 & 3 \end{pmatrix}$ and $B = \begin{pmatrix} 1 & 4 \\ -2 & 0 \end{pmatrix}$, calculate $A+B$ and $2A-B$.
2. If $P = \begin{pmatrix} 1 & 0 & 2 \end{pmatrix}$ and $Q = \begin{pmatrix} 3 \\ 1 \\ 4 \end{pmatrix}$, calculate $PQ$ and $QP$. (Hint: Order matters!)
3. For $M = \begin{pmatrix} 1 & i \\ -i & 0 \end{pmatrix}$, find $M^T$ and $M^*$.
4. Let $X = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$. Find $X^2$. What does this imply about the transformation?
5. If $A = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}$, find $A^T A$. What kind of matrix is $A$?

**Answers:**

1. $A+B = \begin{pmatrix} 3 & 3 \\ -2 & 3 \end{pmatrix}$, $2A-B = \begin{pmatrix} 3 & -6 \\ 2 & 6 \end{pmatrix}$
2. $PQ = (11)$, $QP = \begin{pmatrix} 3 & 0 & 6 \\ 1 & 0 & 2 \\ 4 & 0 & 8 \end{pmatrix}$
3. $M^T = \begin{pmatrix} 1 & -i \\ i & 0 \end{pmatrix}$, $M^* = \begin{pmatrix} 1 & i \\ -i & 0 \end{pmatrix}$ ($M$ is Hermitian, since $M^* = M$)
4. $X^2 = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} = I$. $X$ is an involution: it reflects vectors across the line $y=x$, so applying it twice returns the original vector.
5. $A^T A = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} = I$. $A$ is an orthogonal matrix (a rotation by $\theta$).

### Determinants

The determinant of a square matrix is a scalar value that provides information about the matrix's properties, particularly its invertibility and the volume scaling of the linear transformation it represents. This is a high-weight area for ESE.

#### 1. Determinant of $2 \times 2$ Matrix

- **Formula:** For $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $\det(A) = ad - bc$.
- **Intuition:** $|\det(A)|$ is the area of the parallelogram formed by the column (or row) vectors; the sign indicates orientation.

#### 2. Determinant of $3 \times 3$ Matrix

- **Formula (cofactor expansion along the first row):** For $A = \begin{pmatrix} a & b & c \\ d & e & f \\ g & h & i \end{pmatrix}$, $\det(A) = a(ei - fh) - b(di - fg) + c(dh - eg)$.
- **Sarrus' Rule (only for $3 \times 3$):** Add the products along the three "downward" diagonals and subtract the products along the three "upward" diagonals: $\det(A) = aei + bfg + cdh - ceg - afh - bdi$. This expands to exactly the same result as the cofactor formula above.
- **General Formula (Cofactor Expansion):** $\det(A) = \sum_{j=1}^{n} (-1)^{i+j} A_{ij} M_{ij}$ (expansion along row $i$) or $\det(A) = \sum_{i=1}^{n} (-1)^{i+j} A_{ij} M_{ij}$ (expansion along column $j$), where $M_{ij}$ is the determinant of the submatrix obtained by deleting row $i$ and column $j$.
- **Shortcut:** For triangular matrices (upper, lower, or diagonal), $\det(A)$ is the product of the diagonal elements.

#### Properties of Determinants

1. $\det(A^T) = \det(A)$
2. If two rows/columns are identical or proportional, $\det(A) = 0$.
3. If a row/column is all zeros, $\det(A) = 0$.
4. Swapping two rows/columns changes the sign of the determinant.
5. Multiplying a single row/column by a scalar $k$ multiplies $\det(A)$ by $k$.
6. $\det(kA) = k^n \det(A)$ for an $n \times n$ matrix. (Common trap: $k^n$, not just $k$.)
7. $\det(AB) = \det(A) \det(B)$
8. $\det(A^{-1}) = 1/\det(A)$ (for invertible $A$)
9. Adding a multiple of one row/column to another ($R_i \to R_i + kR_j$, $i \neq j$) does NOT change the determinant. (Crucial for simplifying calculations!)

#### ESE Example (2019, 2 Marks)

Find the determinant of the matrix $A = \begin{pmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{pmatrix}$.

**Solution:** Using cofactor expansion along the first row:

$$ \begin{aligned} \det(A) &= 1(5 \times 9 - 6 \times 8) - 2(4 \times 9 - 6 \times 7) + 3(4 \times 8 - 5 \times 7) \\ &= 1(45 - 48) - 2(36 - 42) + 3(32 - 35) \\ &= 1(-3) - 2(-6) + 3(-3) \\ &= -3 + 12 - 9 = 0 \end{aligned} $$

**Shortcut/Observation:** Notice that $R_2 - R_1 = (3,3,3)$ and $R_3 - R_2 = (3,3,3)$. Hence $R_3 - R_2 = R_2 - R_1$, i.e., $R_1 - 2R_2 + R_3 = 0$, so the rows are linearly dependent. Whenever the rows (or columns) are linearly dependent, the determinant is 0.

#### ESE Example (2020, 2 Marks)

If $A$ is a $3 \times 3$ matrix such that $\det(A) = 5$, what is $\det(2A^T)$?

**Solution:** Using the properties:

$$ \begin{aligned} \det(2A^T) &= 2^3 \det(A^T) && \text{(Property 6: } \det(kA) = k^n \det(A)) \\ &= 8 \det(A) && \text{(Property 1: } \det(A^T) = \det(A)) \\ &= 8 \times 5 = 40 \end{aligned} $$

**Self-Practice Problems:**

1. Calculate $\det \begin{pmatrix} 1 & -1 & 0 \\ 2 & 3 & 4 \\ 0 & 1 & 5 \end{pmatrix}$.
2. If $A$ is a $4 \times 4$ matrix with $\det(A) = -2$, find $\det(A^2)$ and $\det(A^{-1})$.
3. For what value of $k$ will the matrix $\begin{pmatrix} 1 & 2 & 3 \\ 4 & k & 6 \\ 7 & 8 & 9 \end{pmatrix}$ have a determinant of 0?
4. Without expanding, explain why $\det \begin{pmatrix} 10 & 20 & 30 \\ 1 & 2 & 3 \\ 5 & 6 & 7 \end{pmatrix} = 0$.
5. If $A = \begin{pmatrix} 2 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & 4 \end{pmatrix}$, compute $\det(A)$.

**Answers:**

1. Expanding along the first row: $\det = 1(15-4) - (-1)(10-0) + 0 = 11 + 10 = 21$.
2. $\det(A^2) = \det(A)\det(A) = (-2)(-2) = 4$. $\det(A^{-1}) = 1/\det(A) = -1/2$.
3. $\det = 1(9k-48) - 2(36-42) + 3(32-7k) = 9k - 48 + 12 + 96 - 21k = -12k + 60$. For a zero determinant, $-12k + 60 = 0 \implies k = 5$.
4. Row 1 is 10 times Row 2 ($R_1 = 10R_2$). Since two rows are proportional, the determinant is 0.
5. $A$ is a diagonal matrix, so $\det(A)$ = product of diagonal elements = $2 \times 3 \times 4 = 24$.

### Inverse of a Matrix & Rank of a Matrix

The inverse allows us to "undo" a matrix transformation. Rank describes the "dimensionality" of the transformation (the dimension of the space spanned by its outputs). Both are high-weight for ESE.

#### 1. Inverse of a Matrix ($A^{-1}$)

- **Condition:** $A$ must be a square matrix with $\det(A) \neq 0$ (non-singular).
- **Formula:** $A^{-1} = \frac{1}{\det(A)} \text{adj}(A)$, where $\text{adj}(A)$ is the adjoint (adjugate) of $A$, i.e., the transpose of the cofactor matrix.
  - **Cofactor Matrix $C$:** $C_{ij} = (-1)^{i+j} M_{ij}$ (where $M_{ij}$ is the minor).
  - **Adjoint Matrix:** $\text{adj}(A) = C^T$.
- **Properties:**
  - $AA^{-1} = A^{-1}A = I$ (Identity Matrix)
  - $(A^{-1})^{-1} = A$
  - $(AB)^{-1} = B^{-1}A^{-1}$ (Order reverses!)
  - $(A^T)^{-1} = (A^{-1})^T$
- **Shortcut for $2 \times 2$ Inverse:** For $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $A^{-1} = \frac{1}{ad-bc} \begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$. (Swap the diagonal entries, negate the off-diagonal entries, divide by the determinant.)

#### 2. Rank of a Matrix ($\rho(A)$)

- **Definition:** The maximum number of linearly independent row vectors (or, equivalently, column vectors) in the matrix.
- **Alternative Definition:** The order of the largest non-zero minor (sub-determinant) of the matrix.
- **Properties:**
  - For an $m \times n$ matrix $A$, $\rho(A) \le \min(m, n)$.
  - $\rho(A) = \rho(A^T)$.
  - If $A$ is an $n \times n$ matrix, $\rho(A) = n \iff \det(A) \neq 0$.
  - Elementary row/column operations do not change the rank. (Use Echelon form for calculation.)
- **Echelon Form:** A matrix is in Echelon form if:
  1. All non-zero rows are above any rows of all zeros.
  2. The leading entry (pivot) of each non-zero row is in a column to the right of the leading entry of the row above it.
  3. All entries in a column below a leading entry are zero.

  The rank is the number of non-zero rows in its Echelon form.
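The Echelon-form procedure above is mechanical enough to sketch in code. The following is a minimal Python sketch, not an ESE-prescribed method: the function name `rank` is our own, and it assumes exact `fractions.Fraction` arithmetic so that hand-worked integer examples are not disturbed by floating-point round-off. It performs forward elimination ($R_r \to R_r - mR_{\text{pivot}}$, plus row swaps) and counts the pivots, which equals the number of non-zero rows in the Echelon form.

```python
from fractions import Fraction


def rank(matrix):
    """Return the rank of a matrix given as a list of rows.

    Forward elimination to row Echelon form with exact Fraction arithmetic;
    the rank is the number of pivots (= non-zero rows in Echelon form).
    """
    rows = [[Fraction(x) for x in row] for row in matrix]
    if not rows or not rows[0]:
        return 0
    n_rows, n_cols = len(rows), len(rows[0])
    pivot_row = 0
    for col in range(n_cols):
        # Look for a non-zero entry in this column at or below pivot_row.
        found = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if found is None:
            continue  # no pivot in this column; move one column to the right
        # Row swap (an elementary operation, so the rank is unchanged).
        rows[pivot_row], rows[found] = rows[found], rows[pivot_row]
        # R_r -> R_r - m * R_pivot clears every entry below the pivot.
        for r in range(pivot_row + 1, n_rows):
            m = rows[r][col] / rows[pivot_row][col]
            rows[r] = [a - m * b for a, b in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
        if pivot_row == n_rows:
            break  # every row already holds a pivot
    return pivot_row


if __name__ == "__main__":
    examples = {
        "A": [[1, 2, 3], [2, 4, 6], [3, 6, 9]],
        "B": [[1, 1, 1], [1, 2, 3], [1, 4, 9]],
        "C": [[1, 2, 3, 4], [0, 1, 2, 3], [0, 0, 0, 0]],
    }
    for name, m in examples.items():
        print(name, rank(m))
```

The three sample matrices are the rank examples and exercises that follow in this section (expected ranks 1, 3 and 2). Calling `rank` on both the coefficient matrix and the augmented matrix of a linear system is one way to apply the $\rho(A)$ vs. $\rho([A|B])$ consistency test used in the worked system example.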
#### ESE Example (2017, 2 Marks)

Find the rank of the matrix $A = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 4 & 6 \\ 3 & 6 & 9 \end{pmatrix}$.

**Solution:** We use row operations to reduce the matrix to Echelon form.

$A = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 4 & 6 \\ 3 & 6 & 9 \end{pmatrix}$

$R_2 \to R_2 - 2R_1$: $\begin{pmatrix} 1 & 2 & 3 \\ 0 & 0 & 0 \\ 3 & 6 & 9 \end{pmatrix}$

$R_3 \to R_3 - 3R_1$: $\begin{pmatrix} 1 & 2 & 3 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{pmatrix}$

The matrix has only one non-zero row. Therefore, $\rho(A) = 1$.

**Observation:** All rows are multiples of the first row ($R_2 = 2R_1$, $R_3 = 3R_1$). This implies there is only one linearly independent row, hence the rank is 1.

#### ESE Example (2018, 2 Marks)

If $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$, find $A^{-1}$.

**Solution:** First, calculate $\det(A) = (1)(4) - (2)(3) = 4 - 6 = -2$. Using the $2 \times 2$ shortcut formula:

$$ A^{-1} = \frac{1}{-2} \begin{pmatrix} 4 & -2 \\ -3 & 1 \end{pmatrix} = \begin{pmatrix} -2 & 1 \\ 3/2 & -1/2 \end{pmatrix} $$

#### ESE Example (2016, 2 Marks)

Consider the system of linear equations:

$$ \begin{aligned} x + y + z &= 6 \\ x + 2y + 3z &= 10 \\ x + 2y + \lambda z &= \mu \end{aligned} $$

For what values of $\lambda$ and $\mu$ does the system have:

(i) No solution
(ii) A unique solution
(iii) Infinitely many solutions

**Solution:** Form the augmented matrix $[A|B]$:

$[A|B] = \begin{pmatrix} 1 & 1 & 1 & | & 6 \\ 1 & 2 & 3 & | & 10 \\ 1 & 2 & \lambda & | & \mu \end{pmatrix}$

Apply row operations to get Echelon form:

$R_2 \to R_2 - R_1$: $\begin{pmatrix} 1 & 1 & 1 & | & 6 \\ 0 & 1 & 2 & | & 4 \\ 1 & 2 & \lambda & | & \mu \end{pmatrix}$

$R_3 \to R_3 - R_1$: $\begin{pmatrix} 1 & 1 & 1 & | & 6 \\ 0 & 1 & 2 & | & 4 \\ 0 & 1 & \lambda-1 & | & \mu-6 \end{pmatrix}$

$R_3 \to R_3 - R_2$: $\begin{pmatrix} 1 & 1 & 1 & | & 6 \\ 0 & 1 & 2 & | & 4 \\ 0 & 0 & \lambda-3 & | & \mu-10 \end{pmatrix}$

Now analyze the conditions for solutions based on $\rho(A)$ and $\rho([A|B])$. The number of variables is $n = 3$.
(i) **No solution:** $\rho(A) \neq \rho([A|B])$. This happens if $\lambda - 3 = 0$ AND $\mu - 10 \neq 0$, i.e., $\lambda = 3$ and $\mu \neq 10$. In this case, $\rho(A) = 2$ (2 non-zero rows in the coefficient part), but $\rho([A|B]) = 3$ (3 non-zero rows in the augmented matrix).

(ii) **Unique solution:** $\rho(A) = \rho([A|B]) = n$. This happens if $\lambda - 3 \neq 0$, i.e., $\lambda \neq 3$ ($\mu$ can take any value). In this case, $\rho(A) = 3$ and $\rho([A|B]) = 3$.

(iii) **Infinitely many solutions:** $\rho(A) = \rho([A|B]) < n$. This happens if $\lambda - 3 = 0$ AND $\mu - 10 = 0$, i.e., $\lambda = 3$ and $\mu = 10$. In this case, $\rho(A) = 2$ and $\rho([A|B]) = 2$, which is less than $n = 3$.

**Common Trap:** Confusing the conditions for solutions. Remember the Rouché-Capelli theorem: the system is consistent if and only if $\rho(A) = \rho([A|B])$, and the solution is unique exactly when this common rank equals $n$.

**Self-Practice Problems:**

1. Find the inverse of $A = \begin{pmatrix} 2 & 1 \\ 5 & 3 \end{pmatrix}$.
2. Find the rank of the matrix $B = \begin{pmatrix} 1 & 1 & 1 \\ 1 & 2 & 3 \\ 1 & 4 & 9 \end{pmatrix}$.
3. For what value of $a$ does the matrix $\begin{pmatrix} 1 & 2 & 3 \\ 2 & a & 0 \\ 3 & 0 & 1 \end{pmatrix}$ become singular (non-invertible)?
4. Determine the rank of $C = \begin{pmatrix} 1 & 2 & 3 & 4 \\ 0 & 1 & 2 & 3 \\ 0 & 0 & 0 & 0 \end{pmatrix}$.
5. Consider the system $x + y = 3$, $x + 2y = 5$, $x + 3y = 7$. Does this system have a solution? If yes, is it unique?

**Answers:**

1. $\det(A) = 6 - 5 = 1$. $A^{-1} = \frac{1}{1} \begin{pmatrix} 3 & -1 \\ -5 & 2 \end{pmatrix} = \begin{pmatrix} 3 & -1 \\ -5 & 2 \end{pmatrix}$.
2. $B \xrightarrow{R_2-R_1,\ R_3-R_1} \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 2 \\ 0 & 3 & 8 \end{pmatrix} \xrightarrow{R_3-3R_2} \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 2 \\ 0 & 0 & 2 \end{pmatrix}$. There are 3 non-zero rows, so the rank is 3.
3. The matrix is singular when its determinant is 0: $\det = 1(a - 0) - 2(2 - 0) + 3(0 - 3a) = a - 4 - 9a = -8a - 4$. Setting this to 0: $-8a - 4 = 0 \implies a = -1/2$.
4. The matrix is already in Echelon form with 2 non-zero rows, so $\rho(C) = 2$.
5. Augmented matrix: $\begin{pmatrix} 1 & 1 & | & 3 \\ 1 & 2 & | & 5 \\ 1 & 3 & | & 7 \end{pmatrix} \xrightarrow{R_2-R_1,\ R_3-R_1} \begin{pmatrix} 1 & 1 & | & 3 \\ 0 & 1 & | & 2 \\ 0 & 2 & | & 4 \end{pmatrix} \xrightarrow{R_3-2R_2} \begin{pmatrix} 1 & 1 & | & 3 \\ 0 & 1 & | & 2 \\ 0 & 0 & | & 0 \end{pmatrix}$. $\rho(A) = 2$, $\rho([A|B]) = 2$, $n = 2$. Since $\rho(A) = \rho([A|B]) = n$, the system has a unique solution. From $R_2$: $y = 2$. From $R_1$: $x + y = 3 \implies x + 2 = 3 \implies x = 1$. Unique solution: $(x, y) = (1, 2)$.

### Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are fundamental for understanding system dynamics, stability, and transformations. This is a very high-weight area for ESE, often appearing in 5-10 mark questions.

#### 1. Definition

- **Eigenvector:** A non-zero vector $\vec{v}$ such that applying the linear transformation (matrix $A$) to it yields a scalar multiple of $\vec{v}$.
- **Eigenvalue:** The scalar $\lambda$ by which the eigenvector $\vec{v}$ is scaled.
- **Equation:** $A\vec{v} = \lambda\vec{v}$. This can be rewritten as $(A - \lambda I)\vec{v} = \vec{0}$, where $I$ is the identity matrix.

#### 2. Finding Eigenvalues

- **Characteristic Equation:** For a non-trivial solution ($\vec{v} \neq \vec{0}$), the matrix $(A - \lambda I)$ must be singular. Thus, its determinant must be zero: $\det(A - \lambda I) = 0$.
- Solving this polynomial equation for $\lambda$ gives the eigenvalues. An $n \times n$ matrix has exactly $n$ eigenvalues over the complex numbers (counting multiplicity).

#### 3. Finding Eigenvectors

- For each eigenvalue $\lambda_k$, substitute it back into $(A - \lambda_k I)\vec{v} = \vec{0}$.
- Solve this system of linear equations for $\vec{v}$. The solution space is the eigenspace corresponding to $\lambda_k$; pick any non-zero vector from it.
- Eigenvectors are not unique (any non-zero scalar multiple is also an eigenvector for the same $\lambda$).

#### 4. Properties of Eigenvalues

1. **Sum of Eigenvalues:** $\sum \lambda_i = \text{trace}(A)$ (sum of diagonal elements).
2. **Product of Eigenvalues:** $\prod \lambda_i = \det(A)$.
3. If $\lambda$ is an eigenvalue of $A$, then:
   - $k\lambda$ is an eigenvalue of $kA$.
   - $\lambda^m$ is an eigenvalue of $A^m$.
   - $1/\lambda$ is an eigenvalue of $A^{-1}$ (if $A$ is invertible).
   - $\lambda \pm k$ is an eigenvalue of $A \pm kI$.
4. **Symmetric Matrix:** Eigenvalues of a real symmetric matrix are always real. Eigenvectors corresponding to distinct eigenvalues are orthogonal.
5. **Hermitian Matrix:** Eigenvalues of a Hermitian matrix ($A = A^*$) are real.
6. **Skew-Symmetric Matrix:** Eigenvalues of a real skew-symmetric matrix are purely imaginary or zero.
7. **Orthogonal Matrix:** Eigenvalues have unit modulus ($|\lambda| = 1$).

#### 5. Cayley-Hamilton Theorem

- **Statement:** Every square matrix satisfies its own characteristic equation.
- If the characteristic equation is $\lambda^n + c_{n-1}\lambda^{n-1} + \dots + c_1\lambda + c_0 = 0$, then $A^n + c_{n-1}A^{n-1} + \dots + c_1A + c_0I = 0$.
- **Usefulness:** Calculating higher powers of $A$ or $A^{-1}$ efficiently. From the theorem, $A^{-1} = -\frac{1}{c_0} (A^{n-1} + c_{n-1}A^{n-2} + \dots + c_1I)$, valid when $c_0 \neq 0$. For this monic form, $c_0 = (-1)^n \det(A)$, so the condition is exactly $\det(A) \neq 0$.

#### ESE Example (2021, 5 Marks)

Find the eigenvalues and eigenvectors of the matrix $A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$.

**Solution:**

**Step 1: Find Eigenvalues**

Characteristic equation: $\det(A - \lambda I) = 0$

$$ \det \begin{pmatrix} 2-\lambda & 1 \\ 1 & 2-\lambda \end{pmatrix} = 0 $$

$$ \begin{aligned} (2-\lambda)(2-\lambda) - (1)(1) &= 0 \\ (2-\lambda)^2 - 1 &= 0 \\ 4 - 4\lambda + \lambda^2 - 1 &= 0 \\ \lambda^2 - 4\lambda + 3 &= 0 \\ (\lambda - 1)(\lambda - 3) &= 0 \end{aligned} $$

Eigenvalues are $\lambda_1 = 1$ and $\lambda_2 = 3$.
**Step 2: Find Eigenvector for $\lambda_1 = 1$**

$(A - 1I)\vec{v_1} = \vec{0}$

$$ \begin{pmatrix} 2-1 & 1 \\ 1 & 2-1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} $$

$$ \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} $$

This gives $x + y = 0$, so $y = -x$. Let $x = k$ (any non-zero scalar); then $y = -k$. Eigenvector $\vec{v_1} = k \begin{pmatrix} 1 \\ -1 \end{pmatrix}$. Choosing $k = 1$, $\vec{v_1} = \begin{pmatrix} 1 \\ -1 \end{pmatrix}$.

**Step 3: Find Eigenvector for $\lambda_2 = 3$**

$(A - 3I)\vec{v_2} = \vec{0}$

$$ \begin{pmatrix} 2-3 & 1 \\ 1 & 2-3 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} $$

$$ \begin{pmatrix} -1 & 1 \\ 1 & -1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} $$

This gives $-x + y = 0$ (or $x - y = 0$), so $y = x$. Let $x = k$; then $y = k$. Eigenvector $\vec{v_2} = k \begin{pmatrix} 1 \\ 1 \end{pmatrix}$. Choosing $k = 1$, $\vec{v_2} = \begin{pmatrix} 1 \\ 1 \end{pmatrix}$.

**Summary:**

- Eigenvalues: $\lambda_1 = 1, \lambda_2 = 3$
- Eigenvectors: $\vec{v_1} = \begin{pmatrix} 1 \\ -1 \end{pmatrix}, \vec{v_2} = \begin{pmatrix} 1 \\ 1 \end{pmatrix}$

**Check (Optional but recommended):**

- Trace of $A = 2 + 2 = 4$. Sum of eigenvalues $= 1 + 3 = 4$. (Matches!)
- Determinant of $A = (2)(2) - (1)(1) = 3$. Product of eigenvalues $= (1)(3) = 3$. (Matches!)

**Common Trap:** Algebraic multiplicity vs. geometric multiplicity. For real symmetric matrices, they are always equal. For non-symmetric matrices, if the algebraic multiplicity of some eigenvalue exceeds its geometric multiplicity, the matrix is not diagonalizable. ESE usually gives diagonalizable matrices.

**Self-Practice Problems:**

1. Find the eigenvalues of $A = \begin{pmatrix} 5 & 4 \\ 1 & 2 \end{pmatrix}$.
2. If the eigenvalues of $A$ are $1, 2, 3$, what are the eigenvalues of $A^2 + 2A + 3I$?
3. For the matrix $M = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$, find its eigenvalues and corresponding eigenvectors.
4. If $A$ is a $3 \times 3$ matrix with eigenvalues $1, -1, 2$, find $\det(A)$ and $\text{trace}(A)$.
5. Given that the characteristic equation of a $2 \times 2$ matrix $A$ is $\lambda^2 - 5\lambda + 6 = 0$, use the Cayley-Hamilton theorem to express $A^{-1}$ in terms of $A$.

**Answers:**

1. $\det(A-\lambda I) = (5-\lambda)(2-\lambda) - 4 = 10 - 7\lambda + \lambda^2 - 4 = \lambda^2 - 7\lambda + 6 = 0$, i.e., $(\lambda-1)(\lambda-6) = 0$. Eigenvalues are $\lambda_1 = 1, \lambda_2 = 6$.
2. If $\lambda$ is an eigenvalue of $A$, then $f(\lambda)$ is an eigenvalue of $f(A)$ for any polynomial $f$. So the eigenvalues are $1^2+2(1)+3 = 6$, $2^2+2(2)+3 = 11$, and $3^2+2(3)+3 = 18$.
3. $\det(M-\lambda I) = (-\lambda)(-\lambda) - 1 = \lambda^2 - 1 = 0 \implies \lambda = \pm 1$.
   - For $\lambda = 1$: $\begin{pmatrix} -1 & 1 \\ 1 & -1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} \implies -x + y = 0 \implies y = x$. $\vec{v_1} = \begin{pmatrix} 1 \\ 1 \end{pmatrix}$.
   - For $\lambda = -1$: $\begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} \implies x + y = 0 \implies y = -x$. $\vec{v_2} = \begin{pmatrix} 1 \\ -1 \end{pmatrix}$.
4. $\det(A) = \text{product of eigenvalues} = (1)(-1)(2) = -2$. $\text{trace}(A) = \text{sum of eigenvalues} = 1 + (-1) + 2 = 2$.
5. By the Cayley-Hamilton theorem, $A^2 - 5A + 6I = 0$. Then $6I = 5A - A^2 = A(5I - A)$, so $I = \frac{1}{6} A(5I - A)$ and $A^{-1} = \frac{1}{6}(5I - A)$.

### Diagonalization and Quadratic Forms

These concepts are important for simplifying complex systems and understanding multivariable functions. ESE questions might involve diagonalizing a matrix or expressing a quadratic form in canonical form.

#### 1. Diagonalization

- **Definition:** A square matrix $A$ is diagonalizable if it is similar to a diagonal matrix $D$, i.e., there exists an invertible matrix $P$ such that $A = PDP^{-1}$.
- **Condition for Diagonalization:** An $n \times n$ matrix $A$ is diagonalizable if and only if it has $n$ linearly independent eigenvectors. This holds exactly when every eigenvalue's algebraic multiplicity equals its geometric multiplicity; in particular, it is guaranteed when all $n$ eigenvalues are distinct.
- **Construction of $P$ and $D$:**
  - The diagonal entries of $D$ are the eigenvalues of $A$.
  - The columns of $P$ are the corresponding eigenvectors of $A$, in the same order.
  - $P$ is called the modal matrix.
- **Why it's useful:**
  - Calculating high powers of $A$: $A^k = PD^kP^{-1}$. Since $D$ is diagonal, $D^k$ is easy to compute (just raise the diagonal elements to the power $k$).
  - Solving systems of differential equations.

#### 2. Quadratic Forms

- **Definition:** A quadratic form is a homogeneous polynomial of degree two in $n$ variables. For $n=2$: $Q(x,y) = ax^2 + by^2 + cxy$. For $n=3$: $Q(x,y,z) = ax^2 + by^2 + cz^2 + dxy + eyz + fzx$.
- **Matrix Representation:** Any quadratic form can be written as $X^T AX$, where $X$ is the column vector of variables and $A$ is a symmetric matrix. For $Q(x,y) = ax^2 + by^2 + cxy$: $A = \begin{pmatrix} a & c/2 \\ c/2 & b \end{pmatrix}$, $X = \begin{pmatrix} x \\ y \end{pmatrix}$.
- **Canonical Form:** By an orthogonal transformation $X = PY$ (where $P$ is an orthogonal matrix whose columns are orthonormal eigenvectors of $A$), the quadratic form can be transformed into the canonical form $Q(Y) = Y^T D Y = \lambda_1 y_1^2 + \lambda_2 y_2^2 + \dots + \lambda_n y_n^2$, where the $\lambda_i$ are the eigenvalues of $A$.
- **Nature of Quadratic Forms:** Determined by the signs of the eigenvalues.
  - **Positive Definite:** All $\lambda_i > 0$.
  - **Negative Definite:** All $\lambda_i < 0$.
  - **Positive Semi-definite:** All $\lambda_i \ge 0$ and at least one $\lambda_i = 0$.
  - **Negative Semi-definite:** All $\lambda_i \le 0$ and at least one $\lambda_i = 0$.
  - **Indefinite:** Some $\lambda_i > 0$ and some $\lambda_i < 0$.

### Calculus: Introduction

Calculus forms the backbone of engineering analysis, modeling continuous change. ESE primarily focuses on limits, continuity, differentiability, mean value theorems, and applications of derivatives and integrals. Integration techniques are very important.

#### Why Study Calculus?

- **Optimization:** Finding maxima/minima (e.g., maximizing efficiency, minimizing error).
- **Rates of Change:** Velocity, acceleration, rate of change of current or voltage.
- **Accumulation:** Total charge, work done, volume, area.
- **Modeling:** Differential equations describe dynamic systems.

#### Key Concepts Intuition

- **Limit:** The value a function approaches as its input approaches some value.
- **Derivative:** Instantaneous rate of change; slope of the tangent.
- **Integral:** Accumulation; area under the curve.

### Limits, Continuity, and Differentiability

These are foundational concepts. ESE questions often test definition-based problems and L'Hopital's rule.

#### 1. Limits

- **Definition:** $\lim_{x \to a} f(x) = L$ if for every $\epsilon > 0$ there exists a $\delta > 0$ such that $0 < |x - a| < \delta$ implies $|f(x) - L| < \epsilon$.

…

4. … $f(x) = \begin{cases} ax + 1, & x \le 1 \\ x^2 + 2, & x > 1 \end{cases}$ continuous at $x=1$?
5. Evaluate $\lim_{x \to 0} (1+\sin x)^{1/x}$.

**Answers:**

1. This is a $\frac{0}{0}$ form, so apply L'Hopital's rule: $\lim_{x \to 0} \frac{e^x - 1}{2x} = \lim_{x \to 0} \frac{e^x}{2} = \frac{1}{2}$.
2. $f'(0) = \lim_{h \to 0} \frac{h^2 \sin(1/h) - 0}{h} = \lim_{h \to 0} h \sin(1/h)$. Since $|\sin(1/h)| \le 1$, we have $0 \le |h \sin(1/h)| \le |h|$. By the Squeeze Theorem, $\lim_{h \to 0} h \sin(1/h) = 0$. So $f$ is differentiable at $x=0$ with $f'(0) = 0$.
3. Let $y = 1/x$. As $x \to \infty$, $y \to 0$, and the limit becomes $\lim_{y \to 0} \frac{\sin y}{y} = 1$.
4. For continuity, $f(1^-) = f(1^+) = f(1)$: $a(1) + 1 = 1^2 + 2 = 3$, so $a + 1 = 3 \implies a = 2$.
5. This is a $1^\infty$ form. Let $L = \lim_{x \to 0} (1+\sin x)^{1/x}$. Then $\ln L = \lim_{x \to 0} \frac{1}{x} \ln(1+\sin x) = \lim_{x \to 0} \frac{\ln(1+\sin x)}{x}$, a $\frac{0}{0}$ form. Applying L'Hopital's rule: $\ln L = \lim_{x \to 0} \frac{\cos x / (1+\sin x)}{1} = \frac{\cos 0}{1+\sin 0} = 1$. So $\ln L = 1 \implies L = e^1 = e$.
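Limits like those in Answers 1 and 5 can be sanity-checked numerically by evaluating the expression at points ever closer to the limit point. The Python sketch below does this. The helper names (`approach`, `one_pow_inf`, `half_ratio`) are our own, and it checks only the right-hand approach $x \to 0^+$. A numerical table like this only suggests a limit; it does not prove one.

```python
import math


def approach(f, a, steps=6):
    """Evaluate f at a + h for h = 10**-1, ..., 10**-steps (right-hand approach)."""
    return [(10.0 ** -k, f(a + 10.0 ** -k)) for k in range(1, steps + 1)]


def one_pow_inf(x):
    # (1 + sin x)^(1/x), rewritten as exp(ln(1 + sin x) / x).
    # math.log1p computes ln(1 + u) accurately when u is tiny.
    return math.exp(math.log1p(math.sin(x)) / x)


def half_ratio(x):
    # (e^x - 1) / (2x); math.expm1 computes e^x - 1 accurately for tiny x.
    return math.expm1(x) / (2 * x)


if __name__ == "__main__":
    for h, value in approach(one_pow_inf, 0.0):
        print(f"h = {h:.0e}: (1 + sin h)^(1/h) = {value:.8f}")
    print(f"e = {math.e:.8f}")
    for h, value in approach(half_ratio, 0.0):
        print(f"h = {h:.0e}: (e^h - 1)/(2h) = {value:.8f}")
```

The sketch uses `math.log1p` and `math.expm1` because forming `1 + math.sin(x)` or `math.exp(x) - 1` directly can lose accuracy for very small `x`. The printed values should drift toward $e \approx 2.71828$ and $0.5$, matching the analytic answers.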
### Mean Value Theorems

These theorems provide crucial links between the values of a function and its derivatives over an interval. ESE questions might directly test application or verification.

#### 1. Rolle's Theorem

- **Conditions:** Let $f(x)$ be a function such that:
  1. It is continuous on the closed interval $[a, b]$.
  2. It is differentiable on the open interval $(a, b)$.
  3. $f(a) = f(b)$.
- **Conclusion:** Then there exists at least one point $c \in (a, b)$ such that $f'(c) = 0$.
- **Intuition:** If a differentiable function starts and ends at the same height, its tangent must be horizontal at some point in between.

#### 2. Lagrange's Mean Value Theorem (LMVT)

- **Conditions:** Let $f(x)$ be a function such that:
  1. It is continuous on the closed interval $[a, b]$.
  2. It is differentiable on the open interval $(a, b)$.
- **Conclusion:** Then there exists at least one point $c \in (a, b)$ such that $f'(c) = \frac{f(b) - f(a)}{b - a}$.
- **Intuition:** The instantaneous rate of change $f'(c)$ at some point $c$ equals the average rate of change over the entire interval. Geometrically, the tangent at $c$ is parallel to the secant line joining $(a, f(a))$ and $(b, f(b))$.
- **Note:** Rolle's Theorem is the special case of LMVT with $f(a) = f(b)$, which makes $f'(c) = 0$.

#### 3. Cauchy's Mean Value Theorem (CMVT)

- **Conditions:** Let $f(x)$ and $g(x)$ be functions such that:
  1. Both are continuous on the closed interval $[a, b]$.
  2. Both are differentiable on the open interval $(a, b)$.
  3. $g'(x) \neq 0$ for any $x \in (a, b)$.
- **Conclusion:** Then there exists at least one point $c \in (a, b)$ such that $\frac{f'(c)}{g'(c)} = \frac{f(b) - f(a)}{g(b) - g(a)}$.
- **Intuition:** A generalization of LMVT to two functions (taking $g(x) = x$ recovers LMVT). It is used in the proof of L'Hopital's Rule.

#### ESE Example (2019, 2 Marks)

Verify Rolle's Theorem for the function $f(x) = x^2 - 4x + 3$ on the interval $[1, 3]$. Find the value of $c$.

**Solution:**

**Step 1: Check Conditions**

1. **Continuity:** $f(x) = x^2 - 4x + 3$ is a polynomial, so it is continuous everywhere, hence continuous on $[1, 3]$.
2. **Differentiability:** $f'(x) = 2x - 4$. Since $f'(x)$ exists for all $x$, $f(x)$ is differentiable on $(1, 3)$.
3. **$f(a) = f(b)$:** $f(1) = 1^2 - 4(1) + 3 = 1 - 4 + 3 = 0$ and $f(3) = 3^2 - 4(3) + 3 = 9 - 12 + 3 = 0$.

Since $f(1) = f(3) = 0$, all conditions are met.

**Step 2: Find $c$**

By Rolle's Theorem, there exists $c \in (1, 3)$ such that $f'(c) = 0$: $f'(c) = 2c - 4 = 0 \implies 2c = 4 \implies c = 2$. Since $c = 2$ lies in the interval $(1, 3)$, Rolle's Theorem is verified.

#### ESE Example (2020, 2 Marks)

Apply Lagrange's Mean Value Theorem to $f(x) = x^3$ on $[0, 2]$. Find the value of $c$.

**Solution:**

**Step 1: Check Conditions**

1. **Continuity:** $f(x) = x^3$ is a polynomial, so it is continuous everywhere, hence continuous on $[0, 2]$.
2. **Differentiability:** $f'(x) = 3x^2$. Since $f'(x)$ exists for all $x$, $f(x)$ is differentiable on $(0, 2)$.

All conditions are met.

**Step 2: Find $c$**

By LMVT, there exists $c \in (0, 2)$ such that $f'(c) = \frac{f(b) - f(a)}{b - a}$. Here $f(a) = f(0) = 0^3 = 0$ and $f(b) = f(2) = 2^3 = 8$, so

$$ \frac{f(b) - f(a)}{b - a} = \frac{8 - 0}{2 - 0} = \frac{8}{2} = 4. $$

Now set $f'(c) = 4$: $3c^2 = 4 \implies c^2 = 4/3 \implies c = \pm \sqrt{4/3} = \pm 2/\sqrt{3}$. Since $c$ must lie in $(0, 2)$, take the positive value: $c = 2/\sqrt{3} \approx 2/1.732 \approx 1.155$, which is indeed in $(0, 2)$.

**Common Trap:** Not checking all conditions of the theorem before applying it. For example, if $f(a) \neq f(b)$, Rolle's Theorem does not apply.

**Self-Practice Problems:**

1. Verify Rolle's Theorem for $f(x) = \sin x$ on $[0, \pi]$. Find $c$.
2. Apply LMVT to $f(x) = \ln x$ on $[1, e]$. Find $c$.
3. Can Rolle's Theorem be applied to $f(x) = |x-2|$ on $[1, 3]$? Explain why or why not.
4. Using LMVT, show that $|\sin b - \sin a| \le |b-a|$ for any $a, b$.
5. Verify Cauchy's Mean Value Theorem for $f(x) = x^2$ and $g(x) = x^3$ on $[1, 2]$. Find $c$.

**Answers:**

1. $f(x) = \sin x$ is continuous on $[0, \pi]$ and differentiable on $(0, \pi)$, and $f(0) = 0 = f(\pi)$, so the conditions are met. $f'(x) = \cos x$, and $f'(c) = \cos c = 0 \implies c = \pi/2$, which lies in $(0, \pi)$.
2. $f(x) = \ln x$ is continuous on $[1, e]$ and differentiable on $(1, e)$, with $f'(x) = 1/x$. $\frac{f(e)-f(1)}{e-1} = \frac{\ln e - \ln 1}{e-1} = \frac{1-0}{e-1} = \frac{1}{e-1}$. Then $f'(c) = 1/c = \frac{1}{e-1} \implies c = e - 1 \approx 2.718 - 1 = 1.718$, which lies in $(1, e)$.
3. No. $f(x) = |x-2|$ is continuous on $[1, 3]$, but it is not differentiable at $x = 2$ (a sharp corner). Rolle's Theorem requires differentiability on the open interval $(a, b)$.
4. Let $f(x) = \sin x$. For $a \neq b$ (the case $a = b$ is trivial), $f$ is continuous and differentiable on the interval between them. By LMVT, $\frac{\sin b - \sin a}{b-a} = \cos c$ for some $c$ between $a$ and $b$, so $\sin b - \sin a = (b-a)\cos c$. Taking absolute values: $|\sin b - \sin a| = |b-a|\,|\cos c|$. Since $|\cos c| \le 1$, we get $|\sin b - \sin a| \le |b-a|$.
5. $f(x) = x^2$ and $g(x) = x^3$ are continuous on $[1, 2]$ and differentiable on $(1, 2)$, with $f'(x) = 2x$ and $g'(x) = 3x^2 \neq 0$ on $(1, 2)$. Conditions met. $\frac{f(2)-f(1)}{g(2)-g(1)} = \frac{2^2-1^2}{2^3-1^3} = \frac{4-1}{8-1} = \frac{3}{7}$, and $\frac{f'(c)}{g'(c)} = \frac{2c}{3c^2} = \frac{2}{3c}$. Setting $\frac{2}{3c} = \frac{3}{7} \implies 9c = 14 \implies c = 14/9 \approx 1.56$, which lies in $(1, 2)$.

### Maxima and Minima of Functions of Single Variable

Finding extreme values is a core application of differentiation, crucial for optimization problems in engineering. ESE often features problems on finding local/global extrema.

#### 1. Local Maxima and Minima

- **First Derivative Test:**
  - Find critical points where $f'(x) = 0$ or $f'(x)$ is undefined.
  - If $f'(x)$ changes sign from positive to negative as $x$ increases through a critical point $c$, then $f(c)$ is a local maximum.
- If $f'(x)$ changes sign from negative to positive as $x$ increases through a critical point $c$, then $f(c)$ is a local minimum. - If $f'(x)$ does not change sign, it's neither. - **Second Derivative Test:** - Find critical points by setting $f'(x) = 0$. - Calculate $f''(x)$. - If $f''(c) 0$, then $f(c)$ is a local minimum. - If $f''(c) = 0$, the test is inconclusive (use First Derivative Test or higher derivatives). #### 2. Global (Absolute) Maxima and Minima - **On a closed interval $[a, b]$:** 1. Find all critical points in $(a, b)$. 2. Evaluate $f(x)$ at all critical points. 3. Evaluate $f(x)$ at the endpoints $a$ and $b$. 4. The largest of these values is the absolute maximum, and the smallest is the absolute minimum. #### ESE Example (2018, 5 Marks) Find the local maximum and minimum values of the function $f(x) = x^3 - 6x^2 + 9x + 15$. **Solution:** **Step 1: Find Critical Points** Calculate the first derivative: $f'(x) = 3x^2 - 12x + 9$. Set $f'(x) = 0$ to find critical points: $3x^2 - 12x + 9 = 0$ $x^2 - 4x + 3 = 0$ $(x-1)(x-3) = 0$ Critical points are $x=1$ and $x=3$. **Step 2: Apply Second Derivative Test** Calculate the second derivative: $f''(x) = 6x - 12$. Evaluate $f''(x)$ at critical points: - At $x=1$: $f''(1) = 6(1) - 12 = -6$. Since $f''(1) 0$, $x=3$ corresponds to a local minimum. Local minimum value: $f(3) = 3^3 - 6(3)^2 + 9(3) + 15 = 27 - 54 + 27 + 15 = 15$. **Summary:** Local maximum value is 19 at $x=1$. Local minimum value is 15 at $x=3$. **Common Trap:** Forgetting to evaluate the function $f(x)$ at the critical points to find the actual maximum/minimum *values*. **Self-Practice Problems:** 1. Find the critical points of $f(x) = x^4 - 8x^2$. 2. Find the local extrema of $f(x) = x e^{-x}$. 3. Find the absolute maximum and minimum of $f(x) = x^3 - 3x + 2$ on the interval $[0, 2]$. 4. A rectangular box with a square base and open top is to be constructed from 108 square meters of material. 
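Find the dimensions that will maximize the volume of the box.
5. If $f(x) = x + \frac{1}{x}$, find its local extrema.

**Answers:**

1. $f'(x) = 4x^3 - 16x = 4x(x^2 - 4) = 4x(x-2)(x+2)$. Critical points at $x=0, 2, -2$.
2. $f'(x) = e^{-x} - x e^{-x} = e^{-x}(1-x)$. Critical point at $x=1$. $f''(x) = -e^{-x}(1-x) - e^{-x} = e^{-x}(x-2)$. $f''(1) = e^{-1}(1-2) = -e^{-1} < 0$, so $x=1$ gives a local maximum, with value $f(1) = e^{-1} = 1/e$.
3. $f'(x) = 3x^2 - 3 = 0 \implies x = \pm 1$; only $x = 1$ lies in $(0, 2)$. Evaluating: $f(0) = 2$, $f(1) = 1 - 3 + 2 = 0$, $f(2) = 8 - 6 + 2 = 4$. The absolute maximum is $4$ (at $x=2$) and the absolute minimum is $0$ (at $x=1$).
4. Let the side of the square base be $x$ and the height be $h$. The material used is $x^2 + 4xh = 108 \implies h = \frac{108 - x^2}{4x}$, so the volume is $V(x) = x^2 h = \frac{1}{4}(108x - x^3)$. Then $V'(x) = \frac{1}{4}(108 - 3x^2) = 0 \implies x^2 = 36 \implies x = 6$ (since $x > 0$). $V''(x) = \frac{1}{4}(-6x)$. $V''(6) = \frac{1}{4}(-36) = -9 < 0$, so $x = 6$ maximizes the volume, with $h = \frac{108 - 36}{24} = 3$. Dimensions: a $6\,\text{m} \times 6\,\text{m}$ base and a height of $3\,\text{m}$ (volume $108\,\text{m}^3$).
5. $f'(x) = 1 - \frac{1}{x^2} = 0 \implies x = \pm 1$. $f''(x) = \frac{2}{x^3}$. $f''(1) = 2 > 0 \implies$ local minimum at $x=1$, value $f(1)=2$. $f''(-1) = -2 < 0 \implies$ local maximum at $x=-1$, value $f(-1)=-2$.

### Integrals (Definite and Improper)

Integration is about accumulation and finding areas/volumes. ESE emphasizes standard integration techniques, definite integrals, and understanding convergence of improper integrals.

#### 1. Indefinite Integrals (Antiderivatives)

- **Basic Formulas:**
  - $\int x^n dx = \frac{x^{n+1}}{n+1} + C$ (for $n \neq -1$)
  - $\int \frac{1}{x} dx = \ln|x| + C$
  - $\int e^x dx = e^x + C$
  - $\int \sin x dx = -\cos x + C$
  - $\int \cos x dx = \sin x + C$
  - $\int \sec^2 x dx = \tan x + C$
  - $\int \frac{1}{\sqrt{a^2-x^2}} dx = \sin^{-1}(\frac{x}{a}) + C$
  - $\int \frac{1}{a^2+x^2} dx = \frac{1}{a} \tan^{-1}(\frac{x}{a}) + C$
- **Techniques of Integration:**
  - **Substitution:** $\int f(g(x))g'(x) dx = \int f(u) du$ where $u=g(x)$.
  - **Integration by Parts:** $\int u dv = uv - \int v du$ (LIATE rule for choosing $u$).
  - **Partial Fractions:** For rational functions $\frac{P(x)}{Q(x)}$.

#### 2. Definite Integrals

- **Definition:** $\int_a^b f(x) dx = F(b) - F(a)$, where $F'(x) = f(x)$.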
(Fundamental Theorem of Calculus)
- **Properties:**
  - $\int_a^b f(x) dx = -\int_b^a f(x) dx$
  - $\int_a^b f(x) dx = \int_a^c f(x) dx + \int_c^b f(x) dx$
  - $\int_0^a f(x) dx = \int_0^a f(a-x) dx$ (useful for symmetry)
  - $\int_0^{2a} f(x) dx = 2\int_0^a f(x) dx$ if $f(2a-x)=f(x)$ (even w.r.t. $x=a$)
  - $\int_0^{2a} f(x) dx = 0$ if $f(2a-x)=-f(x)$ (odd w.r.t. $x=a$)
  - $\int_{-a}^a f(x) dx = 2\int_0^a f(x) dx$ if $f(x)$ is even ($f(-x)=f(x)$)
  - $\int_{-a}^a f(x) dx = 0$ if $f(x)$ is odd ($f(-x)=-f(x)$)

#### 3. Improper Integrals

- **Definition:** Integrals where either the interval of integration is infinite, or the integrand has an infinite discontinuity within the interval.
- **Type 1 (Infinite Interval):**
  - $\int_a^\infty f(x) dx = \lim_{b \to \infty} \int_a^b f(x) dx$
  - $\int_{-\infty}^b f(x) dx = \lim_{a \to -\infty} \int_a^b f(x) dx$
  - $\int_{-\infty}^\infty f(x) dx = \int_{-\infty}^c f(x) dx + \int_c^\infty f(x) dx$ (both integrals on the right must converge)
- **Type 2 (Infinite Discontinuity):**
  - If $f(x)$ has a discontinuity at $b$: $\int_a^b f(x) dx = \lim_{t \to b^-} \int_a^t f(x) dx$
  - If $f(x)$ has a discontinuity at $a$: $\int_a^b f(x) dx = \lim_{t \to a^+} \int_t^b f(x) dx$
  - If the discontinuity is at $c \in (a, b)$: $\int_a^b f(x) dx = \int_a^c f(x) dx + \int_c^b f(x) dx$ (both integrals on the right must converge)
- **Convergence:** An improper integral converges if the limit exists and is finite; otherwise, it diverges.
- **P-test for $\int_1^\infty \frac{1}{x^p} dx$:** Converges if $p > 1$, diverges if $p \le 1$.
- **P-test for $\int_0^1 \frac{1}{x^p} dx$:** Converges if $p < 1$, diverges if $p \ge 1$.

… $p > 1$, so it converges.

**Common Trap:** Forgetting to convert limits of integration when using substitution for definite integrals.

**Self-Practice Problems:**

1. Evaluate $\int_0^1 x e^x dx$.
2. Evaluate $\int_0^{\pi/2} \frac{\cos x}{1+\sin^2 x} dx$.
3. Determine if $\int_0^1 \frac{1}{\sqrt{x}} dx$ converges or diverges. If it converges, find its value.
4. Evaluate $\int_0^{\pi} x \sin x dx$.
5. Evaluate $\int_0^\infty e^{-2x} dx$.

**Answers:**

1. Use Integration by Parts: $u=x, dv=e^x dx \implies du=dx, v=e^x$. $[xe^x]_0^1 - \int_0^1 e^x dx = (1 \cdot e^1 - 0 \cdot e^0) - [e^x]_0^1 = e - (e^1 - e^0) = e - e + 1 = 1$.
2. Let $u = \sin x$, $du = \cos x dx$. When $x=0, u=0$. When $x=\pi/2, u=1$. $\int_0^1 \frac{1}{1+u^2} du = [\tan^{-1} u]_0^1 = \tan^{-1}(1) - \tan^{-1}(0) = \pi/4 - 0 = \pi/4$.
3. Discontinuity at $x=0$. $\lim_{t \to 0^+} \int_t^1 x^{-1/2} dx = \lim_{t \to 0^+} [2x^{1/2}]_t^1 = \lim_{t \to 0^+} (2\sqrt{1} - 2\sqrt{t}) = 2 - 0 = 2$. It **converges** to 2. (P-test: $p=1/2 < 1$, consistent with convergence.)
4. Use Integration by Parts: $u=x, dv=\sin x\, dx \implies du=dx, v=-\cos x$. $[-x\cos x]_0^{\pi} + \int_0^{\pi} \cos x\, dx = (-\pi \cos \pi + 0) + [\sin x]_0^{\pi} = \pi + 0 = \pi$.
5. $\int_0^\infty e^{-2x} dx = \lim_{b \to \infty} \left[ -\frac{1}{2}e^{-2x} \right]_0^b = \lim_{b \to \infty} \left( \frac{1}{2} - \frac{1}{2}e^{-2b} \right) = \frac{1}{2}$. It converges.

### Multiple Integrals (Double and Triple Integrals)

Multiple integrals are used for calculating areas, volumes, mass, and moments of inertia in higher dimensions. ESE often features problems on setting up and evaluating double/triple integrals, especially changing order of integration.

#### 1. Double Integrals

- **Area:** $\iint_R dA$
- **Volume:** $\iint_R f(x,y) dA$ (the volume under the surface $z = f(x,y) \ge 0$ over $R$)
- **Iterated Integrals:** $\iint_R f(x,y) dA = \int_a^b \int_{g_1(x)}^{g_2(x)} f(x,y) dy dx$ or $\int_c^d \int_{h_1(y)}^{h_2(y)} f(x,y) dx dy$.
- **Change of Order of Integration:** Crucial for simplifying integrals. Sketch the region of integration to determine new limits.
- **Change of Variables (Polar Coordinates):** For circular or radially symmetric regions. $x = r \cos \theta$, $y = r \sin \theta$, $dA = dx dy = r dr d\theta$. $\iint_R f(x,y) dx dy = \iint_{R'} f(r \cos \theta, r \sin \theta) r dr d\theta$.

#### 2. Triple Integrals

- **Volume:** $\iiint_R dV$
- **Mass (with density $\rho(x,y,z)$):** $\iiint_R \rho(x,y,z) dV$
- **Iterated Integrals:** $\int \int \int f(x,y,z) dz dy dx$.
- **Change of Variables (Cylindrical and Spherical Coordinates):**
  - **Cylindrical:** For regions symmetric about an axis (e.g., cylinders, cones). $x = r \cos \theta$, $y = r \sin \theta$, $z = z$. $dV = dx dy dz = r dz dr d\theta$.
  - **Spherical:** For spherical or conical regions. $x = \rho \sin \phi \cos \theta$, $y = \rho \sin \phi \sin \theta$, $z = \rho \cos \phi$.
    $dV = dx dy dz = \rho^2 \sin \phi d\rho d\phi d\theta$.

#### ESE Example (2020, 5 Marks)

Evaluate $\int_0^1 \int_y^1 e^{-x^2} dx dy$.

**Solution:**

The integral $\int e^{-x^2} dx$ cannot be evaluated in terms of elementary functions. This implies we need to change the order of integration.

**Step 1: Sketch the Region of Integration**

The given limits are $y \le x \le 1$ and $0 \le y \le 1$. This describes a triangular region with vertices:
- $y=0, x=1 \implies (1,0)$
- $y=0, x=0 \implies (0,0)$
- $x=1, y=1 \implies (1,1)$

The region is bounded by $x=y$, $x=1$, and $y=0$.

**Step 2: Change the Order of Integration**

To integrate with respect to $y$ first, we need to define the region in terms of $x$ limits first. The region is bounded by $y=0$ and $y=x$. The $x$ limits are from $0$ to $1$. So, the new integral is $\int_0^1 \int_0^x e^{-x^2} dy dx$.

**Step 3: Evaluate the Integral**

$$\int_0^1 \left[ y e^{-x^2} \right]_0^x dx = \int_0^1 (x e^{-x^2} - 0 \cdot e^{-x^2}) dx = \int_0^1 x e^{-x^2} dx$$

Now, use substitution: let $u = -x^2$. Then $du = -2x dx \implies x dx = -\frac{1}{2} du$. When $x=0, u=0$. When $x=1, u=-1$.

$$
\begin{aligned}
\int_0^1 x e^{-x^2} dx &= \int_0^{-1} e^u \left(-\frac{1}{2} du\right) = -\frac{1}{2} \int_0^{-1} e^u du \\
&= \frac{1}{2} \int_{-1}^0 e^u du \quad \text{(swapping limits changes sign)} \\
&= \frac{1}{2} \left[ e^u \right]_{-1}^0 = \frac{1}{2} (e^0 - e^{-1}) = \frac{1}{2} \left(1 - \frac{1}{e}\right).
\end{aligned}
$$

**Common Trap:** Incorrectly swapping limits when changing order of integration. Always sketch the region.

**Self-Practice Problems:**

1. Evaluate $\iint_R xy^2 dA$ where $R$ is the rectangle $[0, 1] \times [1, 2]$.
2. Change the order of integration for $\int_0^2 \int_{x}^{2} (x+y) dy dx$. Then evaluate it.
3. Evaluate $\iint_D (x^2+y^2) dA$ where $D$ is the disk $x^2+y^2 \le 4$. (Use polar coordinates.)
4. Find the volume of the region bounded by $z = x^2+y^2$ and $z=4$. (Use cylindrical coordinates.)
5. Evaluate $\int_0^1 \int_0^x \int_0^{x+y} dz dy dx$.

**Answers:**

1. $\int_0^1 \int_1^2 xy^2 dy dx = \int_0^1 x \left[ \frac{y^3}{3} \right]_1^2 dx = \int_0^1 x (\frac{8}{3} - \frac{1}{3}) dx = \int_0^1 x \frac{7}{3} dx = \frac{7}{3} \left[ \frac{x^2}{2} \right]_0^1 = \frac{7}{3} \cdot \frac{1}{2} = \frac{7}{6}$.
2. Region: $x \le y \le 2$, $0 \le x \le 2$. This is a triangle with vertices $(0,0), (2,2), (0,2)$. Changing order: $0 \le x \le y$, $0 \le y \le 2$. $\int_0^2 \int_0^y (x+y) dx dy = \int_0^2 \left[ \frac{x^2}{2} + xy \right]_0^y dy = \int_0^2 (\frac{y^2}{2} + y^2) dy = \int_0^2 \frac{3}{2} y^2 dy = \frac{3}{2} \left[ \frac{y^3}{3} \right]_0^2 = \frac{3}{2} \cdot \frac{8}{3} = 4$.
3. $x^2+y^2 = r^2$. Limits: $0 \le r \le 2$, $0 \le \theta \le 2\pi$. $dA = r dr d\theta$. $\int_0^{2\pi} \int_0^2 r^2 \cdot r dr d\theta = \int_0^{2\pi} \int_0^2 r^3 dr d\theta = \int_0^{2\pi} \left[ \frac{r^4}{4} \right]_0^2 d\theta = \int_0^{2\pi} 4 d\theta = [4\theta]_0^{2\pi} = 8\pi$.
4. Volume is $\iiint dV$. The region is bounded below by $z=x^2+y^2$ and above by $z=4$. In cylindrical coordinates: $z=r^2$ and $z=4$. The projection onto the $xy$-plane is $r^2 \le 4 \implies r \le 2$. Limits: $r^2 \le z \le 4$, $0 \le r \le 2$, $0 \le \theta \le 2\pi$. $\int_0^{2\pi} \int_0^2 \int_{r^2}^4 r dz dr d\theta = \int_0^{2\pi} \int_0^2 r[z]_{r^2}^4 dr d\theta = \int_0^{2\pi} \int_0^2 r(4-r^2) dr d\theta = \int_0^{2\pi} \left[ 2r^2 - \frac{r^4}{4} \right]_0^2 d\theta = \int_0^{2\pi} (2(4) - \frac{16}{4}) d\theta = \int_0^{2\pi} (8-4) d\theta = \int_0^{2\pi} 4 d\theta = 8\pi$.
5. $\int_0^1 \int_0^x [z]_0^{x+y} dy dx = \int_0^1 \int_0^x (x+y) dy dx = \int_0^1 \left[ xy + \frac{y^2}{2} \right]_0^x dx = \int_0^1 (x^2 + \frac{x^2}{2}) dx = \int_0^1 \frac{3}{2} x^2 dx = \frac{3}{2} \left[ \frac{x^3}{3} \right]_0^1 = \frac{3}{2} \cdot \frac{1}{3} = \frac{1}{2}$.

### Differential Equations: Introduction

Differential equations (DEs) are the language of engineering, describing how systems change over time or space.
ESE focuses on first-order DEs and second-order linear DEs, and occasionally on higher-order linear DEs.

#### Why Study Differential Equations?

- **Circuit Analysis:** RLC circuits, transients.
- **Control Systems:** System response, stability.
- **Signal Processing:** Filter design.
- **Mechanical Systems:** Vibrations, damping.

#### Key Concepts Intuition

- **Order:** The order of the highest derivative in the equation.
- **Degree:** The power of the highest-order derivative, once the equation is free of radicals and fractions in its derivatives.
- **Linearity:** The dependent variable and its derivatives appear only to the first power, with no products among them and no non-linear functions of them (e.g., $\sin y$).
- **Homogeneous (linear DEs):** Every term involves the dependent variable or its derivatives (i.e., there is no forcing function or constant term). This is a different use of the word from the homogeneous first-order form $\frac{dy}{dx} = F(\frac{y}{x})$ below.
- **Solution:** A function that satisfies the DE.

### First Order Differential Equations

These describe systems whose rate of change $\frac{dy}{dx}$ is determined by the current values of $x$ and $y$. High-weight area for ESE.

#### 1. Variables Separable

- **Form:** $\frac{dy}{dx} = f(x)g(y)$
- **Solution:** $\int \frac{dy}{g(y)} = \int f(x) dx + C$.

#### 2. Homogeneous Equations

- **Form:** $\frac{dy}{dx} = F(\frac{y}{x})$
- **Solution Method:** Substitute $y = vx \implies \frac{dy}{dx} = v + x \frac{dv}{dx}$. The equation transforms into a variables separable form in $v$ and $x$.

#### 3. Exact Equations

- **Form:** $M(x,y) dx + N(x,y) dy = 0$
- **Condition for Exactness:** $\frac{\partial M}{\partial y} = \frac{\partial N}{\partial x}$.
- **Solution Method:** If exact, the solution is $u(x,y) = C$, where:
  - $u(x,y) = \int M dx + k(y)$ (integrate $M$ w.r.t. $x$, treating $y$ as constant), and $\frac{\partial u}{\partial y} = N \implies \frac{\partial}{\partial y} (\int M dx) + k'(y) = N$. Solve for $k'(y)$ and integrate to find $k(y)$.
  - Alternatively, $u(x,y) = \int N dy + l(x)$ (integrate $N$ w.r.t. $y$, treating $x$ as constant), and $\frac{\partial u}{\partial x} = M \implies \frac{\partial}{\partial x} (\int N dy) + l'(x) = M$. Solve for $l'(x)$ and integrate to find $l(x)$.

#### 4. Linear First Order Equations

- **Form:** $\frac{dy}{dx} + P(x)y = Q(x)$
- **Integrating Factor (IF):** $IF = e^{\int P(x) dx}$.
- **Solution:** $y \cdot (IF) = \int Q(x) \cdot (IF) dx + C$.

#### 5. Bernoulli's Equation

- **Form:** $\frac{dy}{dx} + P(x)y = Q(x)y^n$ (where $n \neq 0, 1$).
- **Solution Method:** Divide by $y^n$: $y^{-n}\frac{dy}{dx} + P(x)y^{1-n} = Q(x)$. Substitute $v = y^{1-n} \implies \frac{dv}{dx} = (1-n)y^{-n}\frac{dy}{dx}$. The equation transforms into a linear first-order equation in $v$ and $x$.

#### ESE Example (2020, 5 Marks)

Solve the differential equation $(x^2+y^2)dx - 2xy dy = 0$.

**Solution:**

This is a first-order DE. Let's check its type: $(x^2+y^2)dx - 2xy dy = 0 \implies \frac{dy}{dx} = \frac{x^2+y^2}{2xy}$. This is of the form $\frac{dy}{dx} = F(\frac{y}{x})$, so it is a **homogeneous equation**.

**Step 1: Substitute $y = vx$**

$\frac{dy}{dx} = v + x \frac{dv}{dx}$. Substituting into the DE:
$$v + x \frac{dv}{dx} = \frac{x^2+(vx)^2}{2x(vx)} = \frac{x^2(1+v^2)}{2x^2v} = \frac{1+v^2}{2v}.$$

**Step 2: Separate Variables**

$$x \frac{dv}{dx} = \frac{1+v^2}{2v} - v = \frac{1+v^2 - 2v^2}{2v} = \frac{1-v^2}{2v} \implies \frac{2v}{1-v^2} dv = \frac{1}{x} dx.$$

**Step 3: Integrate Both Sides**

$\int \frac{2v}{1-v^2} dv = \int \frac{1}{x} dx$.

For the left side, let $u = 1-v^2 \implies du = -2v dv$, so $2v dv = -du$ and $\int \frac{-du}{u} = -\ln|u| = -\ln|1-v^2|$. For the right side, $\int \frac{1}{x} dx = \ln|x|$.

So, $-\ln|1-v^2| = \ln|x| + \ln C_1$ (using $\ln C_1$ for the constant of integration). Then $\ln|1-v^2|^{-1} = \ln|C_1 x|$, so $\frac{1}{|1-v^2|} = |C_1 x|$. Combining constants and dropping the absolute values: $\frac{1}{1-v^2} = Cx$.

**Step 4: Substitute back $v = y/x$**

$$\frac{1}{1-(y/x)^2} = Cx \implies \frac{1}{1 - y^2/x^2} = Cx \implies \frac{x^2}{x^2 - y^2} = Cx.$$

This is the general solution; equivalently, $x^2 - y^2 = kx$ with $k = 1/C$.

**Common Trap:** Algebraic errors during substitution or integration. Ensure careful handling of constants.

**Self-Practice Problems:**

1. Solve $\frac{dy}{dx} = e^{x+y}$.
2. Solve $(x^2-y^2)dx + 2xy dy = 0$. (Hint: Homogeneous)
3. Solve $y dx + (x+2y^2) dy = 0$. (Hint: It is exact, since $\frac{\partial M}{\partial y} = 1 = \frac{\partial N}{\partial x}$; it can also be rearranged into a linear equation in $x$.)
4. Solve $\frac{dy}{dx} + \frac{y}{x} = x^2$.
5. Solve $\frac{dy}{dx} + y = xy^3$.

**Answers:**

1. $e^{-y} dy = e^x dx \implies \int e^{-y} dy = \int e^x dx \implies -e^{-y} = e^x + C \implies e^x + e^{-y} = K$.
2. Homogeneous, let $y=vx$. $\frac{dy}{dx} = \frac{y^2-x^2}{2xy} = \frac{v^2x^2-x^2}{2vx^2} = \frac{v^2-1}{2v}$. Then $v+x\frac{dv}{dx} = \frac{v^2-1}{2v} \implies x\frac{dv}{dx} = \frac{v^2-1}{2v} - v = \frac{v^2-1-2v^2}{2v} = \frac{-1-v^2}{2v}$. So $\frac{2v}{1+v^2} dv = -\frac{1}{x} dx \implies \int \frac{2v}{1+v^2} dv = -\int \frac{1}{x} dx$, giving $\ln(1+v^2) = -\ln|x| + \ln C = \ln(C/|x|)$. Hence $1+v^2 = C/x \implies 1 + (y/x)^2 = C/x \implies \frac{x^2+y^2}{x^2} = C/x \implies x^2+y^2 = Cx$.
3. Rearrange: $\frac{dx}{dy} + \frac{1}{y}x = -2y$. This is linear in $x$, with $P(y) = 1/y$, $Q(y) = -2y$. $IF = e^{\int (1/y)dy} = e^{\ln y} = y$. $x \cdot y = \int (-2y) \cdot y dy + C = \int -2y^2 dy + C = -\frac{2}{3}y^3 + C$, so $xy = -\frac{2}{3}y^3 + C$. (Treating the equation as exact gives the same result, $xy + \frac{2}{3}y^3 = C$.)
4. Linear first order with $P(x) = 1/x$, $Q(x) = x^2$. $IF = e^{\int (1/x)dx} = e^{\ln x} = x$. $y \cdot x = \int x^2 \cdot x dx + C = \int x^3 dx + C = \frac{x^4}{4} + C$, so $xy = \frac{x^4}{4} + C$.
5. Bernoulli's equation with $n=3$. Divide by $y^3$: $y^{-3}\frac{dy}{dx} + y^{-2} = x$. Let $v = y^{-2} \implies \frac{dv}{dx} = -2y^{-3}\frac{dy}{dx} \implies y^{-3}\frac{dy}{dx} = -\frac{1}{2}\frac{dv}{dx}$. Then $-\frac{1}{2}\frac{dv}{dx} + v = x \implies \frac{dv}{dx} - 2v = -2x$ (linear in $v$), with $P(x)=-2$, $Q(x)=-2x$ and $IF = e^{\int -2 dx} = e^{-2x}$. So $v \cdot e^{-2x} = \int (-2x)e^{-2x} dx + C$.
   For $\int -2x e^{-2x} dx$, integrate by parts with $p=-2x$, $dq=e^{-2x}dx \implies dp=-2dx$, $q=-\frac{1}{2}e^{-2x}$ (letters chosen so as not to clash with $v$):
   $= (-2x)(-\frac{1}{2}e^{-2x}) - \int (-\frac{1}{2}e^{-2x})(-2dx) = xe^{-2x} - \int e^{-2x}dx = xe^{-2x} + \frac{1}{2}e^{-2x}$.
   So, $v e^{-2x} = xe^{-2x} + \frac{1}{2}e^{-2x} + C$.
   $v = x + \frac{1}{2} + Ce^{2x}$. Substitute back $v=y^{-2}$: $y^{-2} = x + \frac{1}{2} + Ce^{2x}$.

### Second Order Linear Differential Equations

These describe systems with inertia or feedback. Very high-weight for ESE, often involving initial conditions.

#### 1. Homogeneous Equations with Constant Coefficients

- **Form:** $ay'' + by' + cy = 0$
- **Auxiliary Equation:** $am^2 + bm + c = 0$. Solve for $m$.
- **General Solution based on Roots ($m_1, m_2$):**
  - **Case 1: Real and Distinct Roots ($m_1 \neq m_2$):** $y(x) = C_1 e^{m_1 x} + C_2 e^{m_2 x}$
  - **Case 2: Real and Equal Roots ($m_1 = m_2 = m$):** $y(x) = (C_1 + C_2 x) e^{mx}$
  - **Case 3: Complex Conjugate Roots ($m = \alpha \pm i\beta$):** $y(x) = e^{\alpha x} (C_1 \cos(\beta x) + C_2 \sin(\beta x))$

#### 2. Non-Homogeneous Equations with Constant Coefficients

- **Form:** $ay'' + by' + cy = F(x)$
- **General Solution:** $y(x) = y_c(x) + y_p(x)$
  - $y_c(x)$ is the complementary solution (solution to the homogeneous equation $ay'' + by' + cy = 0$).
  - $y_p(x)$ is the particular solution (any solution that satisfies the non-homogeneous equation).
- **Method of Undetermined Coefficients (for specific $F(x)$ forms):**
  - **If $F(x) = P_n(x)$ (polynomial of degree $n$):** Assume $y_p = A_n x^n + ... + A_0$. (If $0$ is a root of the auxiliary equation with multiplicity $s$, multiply by $x^s$.)
  - **If $F(x) = Ce^{ax}$:** Assume $y_p = Ae^{ax}$. (If $a$ is a root of the auxiliary equation with multiplicity $s$, multiply by $x^s$.)
  - **If $F(x) = C_1 \cos(kx) + C_2 \sin(kx)$:** Assume $y_p = A \cos(kx) + B \sin(kx)$. (If $ik$ (or $-ik$) is a root of the auxiliary equation with multiplicity $s$, multiply by $x^s$.)
  - **Combinations:** If $F(x)$ is a sum or product of these forms, use corresponding products/sums for $y_p$.
- **Method of Variation of Parameters (general method):**
  - If $y_c = C_1 y_1(x) + C_2 y_2(x)$, then $y_p = -y_1 \int \frac{y_2 F(x)}{a W(y_1, y_2)} dx + y_2 \int \frac{y_1 F(x)}{a W(y_1, y_2)} dx$, where $W(y_1, y_2) = y_1 y_2' - y_2 y_1'$ is the Wronskian.

#### ESE Example (2021, 5 Marks)

Solve the differential equation $y'' + 4y = \sin(2x)$.

**Solution:**

This is a second-order linear non-homogeneous DE with constant coefficients.

**Step 1: Find the Complementary Solution ($y_c$)**

Homogeneous equation: $y'' + 4y = 0$. Auxiliary equation: $m^2 + 4 = 0 \implies m^2 = -4 \implies m = \pm 2i$. This is Case 3: complex conjugate roots with $\alpha=0, \beta=2$.
$$y_c(x) = e^{0x} (C_1 \cos(2x) + C_2 \sin(2x)) = C_1 \cos(2x) + C_2 \sin(2x).$$

**Step 2: Find the Particular Solution ($y_p$)**

The forcing function is $F(x) = \sin(2x)$. The natural choice for $y_p$ would be $A \cos(2x) + B \sin(2x)$. However, notice that $\sin(2x)$ is already part of the complementary solution ($y_c$). This means $ik=2i$ is a root of the auxiliary equation. So, we must multiply the standard form by $x$:
$$y_p = x(A \cos(2x) + B \sin(2x)) = Ax \cos(2x) + Bx \sin(2x).$$

Now, find the derivatives:
$$
\begin{aligned}
y_p' &= A \cos(2x) - 2Ax \sin(2x) + B \sin(2x) + 2Bx \cos(2x) = (A+2Bx) \cos(2x) + (B-2Ax) \sin(2x), \\
y_p'' &= 2B \cos(2x) - 2(A+2Bx)\sin(2x) - 2A \sin(2x) + 2(B-2Ax)\cos(2x) \\
&= (2B + 2(B-2Ax)) \cos(2x) + (-2(A+2Bx) - 2A) \sin(2x) \\
&= (4B - 4Ax) \cos(2x) + (-4A - 4Bx) \sin(2x).
\end{aligned}
$$

Substitute $y_p$ and $y_p''$ into the non-homogeneous equation $y_p'' + 4y_p = \sin(2x)$:
$$(4B - 4Ax) \cos(2x) + (-4A - 4Bx) \sin(2x) + 4(Ax \cos(2x) + Bx \sin(2x)) = \sin(2x)$$
Combine terms:
$$4B \cos(2x) - 4Ax \cos(2x) + 4Ax \cos(2x) - 4A \sin(2x) - 4Bx \sin(2x) + 4Bx \sin(2x) = \sin(2x)$$
$$4B \cos(2x) - 4A \sin(2x) = \sin(2x)$$
Equating coefficients of $\cos(2x)$ and $\sin(2x)$: for $\cos(2x)$, $4B = 0 \implies B = 0$; for $\sin(2x)$, $-4A = 1 \implies A = -1/4$.
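
As a quick sanity check on Step 2, the sketch below plugs $y_p = x(A\cos 2x + B\sin 2x)$ with $A = -1/4$, $B = 0$ back into $y'' + 4y = \sin(2x)$. It is an illustration and not part of the original solution. The residual is computed twice, once with the closed-form $y_p''$ derived above and once with a central finite difference. The function names and the step size `h = 1e-4` are illustrative assumptions. A near-zero residual at a handful of points supports the result but does not prove it.

```python
import math

# Coefficients found in Step 2 (method of undetermined coefficients).
A, B = -0.25, 0.0

def y_p(x: float) -> float:
    """Trial particular solution y_p = x (A cos 2x + B sin 2x)."""
    return x * (A * math.cos(2 * x) + B * math.sin(2 * x))

def y_p_dd_formula(x: float) -> float:
    """Closed-form y_p'' from the derivation: (4B - 4Ax) cos 2x + (-4A - 4Bx) sin 2x."""
    return (4 * B - 4 * A * x) * math.cos(2 * x) + (-4 * A - 4 * B * x) * math.sin(2 * x)

def y_p_dd_numeric(x: float, h: float = 1e-4) -> float:
    """Central-difference estimate of y_p''(x); truncation error is O(h^2)."""
    return (y_p(x + h) - 2 * y_p(x) + y_p(x - h)) / (h * h)

def residual(x: float, second_derivative) -> float:
    """y_p'' + 4 y_p - sin 2x, which should be ~0 if y_p solves the ODE."""
    return second_derivative(x) + 4 * y_p(x) - math.sin(2 * x)

if __name__ == "__main__":
    for x in (0.0, 0.7, 1.5, 3.0):
        print(f"x={x}: formula residual={residual(x, y_p_dd_formula):.1e}, "
              f"finite-difference residual={residual(x, y_p_dd_numeric):.1e}")
```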
So, $y_p(x) = x(-\frac{1}{4} \cos(2x) + 0 \cdot \sin(2x)) = -\frac{x}{4} \cos(2x)$.

**Step 3: General Solution**

$$y(x) = y_c(x) + y_p(x) = C_1 \cos(2x) + C_2 \sin(2x) - \frac{x}{4} \cos(2x).$$

**Common Trap:** Forgetting to multiply by $x$ (or $x^s$) if the chosen form of $y_p$ is part of the complementary solution. This is known as the "resonance" case.

**Self-Practice Problems:**

1. Solve $y'' - y' - 2y = 0$.
2. Solve $y'' + 6y' + 9y = 0$.
3. Solve $y'' + 2y' + 5y = 0$.
4. Solve $y'' - 3y' + 2y = e^{3x}$.
5. Solve $y'' + y = x^2$.

**Answers:**

1. Auxiliary equation: $m^2 - m - 2 = 0 \implies (m-2)(m+1)=0 \implies m_1=2, m_2=-1$. $y(x) = C_1 e^{2x} + C_2 e^{-x}$.
2. Auxiliary equation: $m^2 + 6m + 9 = 0 \implies (m+3)^2=0 \implies m_1=m_2=-3$. $y(x) = (C_1 + C_2 x) e^{-3x}$.
3. Auxiliary equation: $m^2 + 2m + 5 = 0$. $m = \frac{-2 \pm \sqrt{4 - 4(1)(5)}}{2} = \frac{-2 \pm \sqrt{-16}}{2} = \frac{-2 \pm 4i}{2} = -1 \pm 2i$, so $\alpha=-1, \beta=2$. $y(x) = e^{-x} (C_1 \cos(2x) + C_2 \sin(2x))$.
4. **Complementary Solution:** $m^2 - 3m + 2 = 0 \implies (m-1)(m-2)=0 \implies m_1=1, m_2=2$, so $y_c = C_1 e^x + C_2 e^{2x}$.
   **Particular Solution:** $F(x) = e^{3x}$, and $a=3$ is not a root of the auxiliary equation. Assume $y_p = Ae^{3x}$; then $y_p' = 3Ae^{3x}$, $y_p'' = 9Ae^{3x}$. Substitute: $9Ae^{3x} - 3(3Ae^{3x}) + 2Ae^{3x} = e^{3x} \implies 9Ae^{3x} - 9Ae^{3x} + 2Ae^{3x} = e^{3x} \implies 2Ae^{3x} = e^{3x} \implies A=1/2$, so $y_p = \frac{1}{2} e^{3x}$.
   **General Solution:** $y(x) = C_1 e^x + C_2 e^{2x} + \frac{1}{2} e^{3x}$.
5. **Complementary Solution:** $m^2+1=0 \implies m=\pm i$, so $\alpha=0, \beta=1$ and $y_c = C_1 \cos x + C_2 \sin x$.
   **Particular Solution:** $F(x) = x^2$, and $0$ is not a root of the auxiliary equation. Assume $y_p = Ax^2 + Bx + C$; then $y_p' = 2Ax + B$, $y_p'' = 2A$. Substitute: $2A + (Ax^2 + Bx + C) = x^2$, i.e. $Ax^2 + Bx + (2A+C) = x^2$. Equating coefficients: $A=1$, $B=0$, $2A+C=0 \implies 2(1)+C=0 \implies C=-2$. $y_p = x^2 - 2$.
   **General Solution:** $y(x) = C_1 \cos x + C_2 \sin x + x^2 - 2$.

### Higher Order Linear Differential Equations

UPSC ESE occasionally includes higher-order DEs, usually homogeneous with constant coefficients. The approach is a direct extension of second-order DEs.

#### 1. Homogeneous Equations with Constant Coefficients

- **Form:** $a_n y^{(n)} + a_{n-1} y^{(n-1)} + ... + a_1 y' + a_0 y = 0$.
- **Auxiliary Equation:** $a_n m^n + a_{n-1} m^{n-1} + ... + a_1 m + a_0 = 0$.
- **General Solution based on Roots ($m_1, m_2, ..., m_n$):**
  - **Real and Distinct Roots:** For each distinct real root $m_k$, a term $C_k e^{m_k x}$.
  - **Real and Repeated Roots:** If $m$ is a real root with multiplicity $k$, the terms are $(C_1 + C_2 x + ... + C_k x^{k-1}) e^{mx}$.
  - **Complex Conjugate Roots:** If $\alpha \pm i\beta$ are complex conjugate roots with multiplicity $k$, the terms are $e^{\alpha x} [(C_1 + C_2 x + ... + C_k x^{k-1}) \cos(\beta x) + (D_1 + D_2 x + ... + D_k x^{k-1}) \sin(\beta x)]$. (For $k=1$, this simplifies to $e^{\alpha x} (C_1 \cos(\beta x) + C_2 \sin(\beta x))$.)

#### ESE Example (2017, 5 Marks)

Solve the differential equation $y''' - 3y'' + 3y' - y = 0$.

**Solution:**

This is a third-order homogeneous linear DE with constant coefficients.

**Step 1: Form the Auxiliary Equation**

The auxiliary equation is $m^3 - 3m^2 + 3m - 1 = 0$.

**Step 2: Solve the Auxiliary Equation for Roots**

Recognize this as a binomial expansion: $(m-1)^3 = 0$. So, the root is $m=1$ with multiplicity 3.

**Step 3: Write the General Solution**

Since $m=1$ is a real root with multiplicity $k=3$, the general solution is:
$$y(x) = (C_1 + C_2 x + C_3 x^2) e^{1x} = (C_1 + C_2 x + C_3 x^2) e^x.$$

**Common Trap:** Incorrectly applying the rule for repeated roots. Each repetition adds a factor of $x$.

**Self-Practice Problems:**

1. Solve $y^{(4)} - 5y'' + 4y = 0$.
2. Solve $y^{(4)} + 2y'' + y = 0$.
3. Solve $y''' - y' = 0$.
4. Find the roots of the auxiliary equation for $y^{(4)} - 16y = 0$.
5. Solve $y''' + 8y = 0$.

**Answers:**

1. Auxiliary equation: $m^4 - 5m^2 + 4 = 0$. Let $p=m^2$: $p^2 - 5p + 4 = 0 \implies (p-1)(p-4)=0$. $m^2=1 \implies m=\pm 1$; $m^2=4 \implies m=\pm 2$. Roots are $1, -1, 2, -2$ (all real and distinct). $y(x) = C_1 e^x + C_2 e^{-x} + C_3 e^{2x} + C_4 e^{-2x}$.
2. Auxiliary equation: $m^4 + 2m^2 + 1 = 0 \implies (m^2+1)^2 = 0$. $m^2+1=0 \implies m^2=-1 \implies m=\pm i$. So, the roots are $i, i, -i, -i$ (complex conjugate roots, each with multiplicity 2), with $\alpha=0, \beta=1$ and multiplicity $k=2$. $y(x) = e^{0x} [(C_1 + C_2 x) \cos(1x) + (D_1 + D_2 x) \sin(1x)] = (C_1 + C_2 x) \cos x + (D_1 + D_2 x) \sin x$.
3. Auxiliary equation: $m^3 - m = 0 \implies m(m^2-1)=0 \implies m(m-1)(m+1)=0$. Roots are $0, 1, -1$. $y(x) = C_1 e^{0x} + C_2 e^{1x} + C_3 e^{-1x} = C_1 + C_2 e^x + C_3 e^{-x}$.
4. Auxiliary equation: $m^4 - 16 = 0 \implies (m^2-4)(m^2+4)=0$. $m^2-4=0 \implies m=\pm 2$; $m^2+4=0 \implies m=\pm 2i$. Roots are $2, -2, 2i, -2i$.
5. Auxiliary equation: $m^3 + 8 = 0 \implies (m+2)(m^2-2m+4) = 0$. One real root: $m_1 = -2$. Quadratic formula for $m^2-2m+4=0$: $m = \frac{2 \pm \sqrt{4 - 4(1)(4)}}{2} = \frac{2 \pm \sqrt{-12}}{2} = \frac{2 \pm 2i\sqrt{3}}{2} = 1 \pm i\sqrt{3}$. Complex roots: $m_{2,3} = 1 \pm i\sqrt{3}$ ($\alpha=1, \beta=\sqrt{3}$). $y(x) = C_1 e^{-2x} + e^x (C_2 \cos(\sqrt{3}x) + C_3 \sin(\sqrt{3}x))$.

### Complex Analysis: Introduction

Complex analysis extends calculus to complex numbers, providing powerful tools for solving real-world problems in electrical engineering, signal processing, and fluid dynamics. ESE focuses on analytic functions, Cauchy-Riemann equations, and contour integration.

#### Why Study Complex Analysis?

- **Circuit Analysis:** Phasors, impedance, frequency response.
- **Control Systems:** Stability analysis (Nyquist criterion).
- **Signal Processing:** Fourier and Laplace transforms properties.
- **Solving Integrals:** Evaluating complicated real integrals.
#### Key Concepts Intuition

- **Complex Number:** $z = x + iy$, where $i = \sqrt{-1}$. Represents a point in the complex plane.
- **Analytic Function:** A function that is differentiable at every point in an open set. Behaves "nicely."
- **Contour Integration:** Integration along a path in the complex plane, often simplifying calculations.

### Analytic Functions and Cauchy-Riemann Equations

The concept of analyticity is central to complex analysis. ESE often tests the application of Cauchy-Riemann (CR) equations to determine if a function is analytic or to find its harmonic conjugate.

#### 1. Complex Functions

- A complex function $f(z)$ maps a complex number $z = x+iy$ to another complex number $w = u+iv$.
- So, $f(z) = u(x,y) + iv(x,y)$, where $u(x,y)$ is the real part and $v(x,y)$ is the imaginary part.

#### 2. Limit and Continuity

- Similar to real functions, but in the complex plane.
- $\lim_{z \to z_0} f(z) = L$ if for every $\epsilon > 0$, there exists a $\delta > 0$ such that if $0 < |z - z_0| < \delta$, then $|f(z) - L| < \epsilon$.

### Complex Integration

Complex integration (contour integration) is a powerful tool, especially for evaluating real integrals and for problems in control theory (e.g., stability). ESE focuses on Cauchy's Integral Theorem and Formula.

#### 1. Line Integrals in the Complex Plane

- **Definition:** $\int_C f(z) dz = \int_C (u+iv)(dx+idy) = \int_C (u dx - v dy) + i \int_C (v dx + u dy)$.
- **Parametric Form:** If $C$ is given by $z(t) = x(t) + iy(t)$ for $a \le t \le b$, then $\int_C f(z) dz = \int_a^b f(z(t)) z'(t) dt$.

#### 2. Cauchy's Integral Theorem

- **Statement:** If $f(z)$ is analytic within and on a simple closed contour $C$, then $\oint_C f(z) dz = 0$.
- **Intuition:** For a function analytic within and on a closed loop, the integral around the loop is zero. Consequently, in a simply connected domain where $f$ is analytic, the integral between two points is independent of the path.

#### 3. Cauchy's Integral Formula

- **Statement:** If $f(z)$ is analytic within and on a simple closed contour $C$ (traversed counter-clockwise), and $z_0$ is any point *inside* $C$, then:
  $$f(z_0) = \frac{1}{2\pi i} \oint_C \frac{f(z)}{z - z_0} dz$$
- **Rearranged Form for Integration:**
  $$\oint_C \frac{f(z)}{z - z_0} dz = 2\pi i f(z_0)$$
- **For Derivatives:**
  $$\oint_C \frac{f(z)}{(z - z_0)^{n+1}} dz = \frac{2\pi i}{n!} f^{(n)}(z_0)$$
  where $f^{(n)}(z_0)$ is the $n$-th derivative of $f(z)$ evaluated at $z_0$.

#### 4. Singularities and Residues (Briefly)

- **Singular Point:** A point where a function is not analytic.
- **Isolated Singularity:** A singular point $z_0$ is isolated if $f(z)$ is analytic at every other point of some neighborhood of $z_0$, i.e., in $0 < |z - z_0| < r$ for some $r > 0$.
- **Pole of Order $m$:** If $\lim_{z \to z_0} (z-z_0)^m f(z)$ is finite and non-zero.
- **Residue Theorem:** If $f(z)$ is analytic inside and on a simple closed contour $C$, except for a finite number of isolated singular points $z_1, z_2, ..., z_n$ inside $C$, then $\oint_C f(z) dz = 2\pi i \sum_{k=1}^n \text{Res}(f, z_k)$. (Residues are rarely asked for direct calculation in ESE, but understanding the theorem's implication is useful.)

#### ESE Example (2020, 5 Marks)

Evaluate $\oint_C \frac{e^z}{(z-2)(z-3)} dz$ where $C$ is the circle $|z|=2.5$ traversed counter-clockwise.

**Solution:**

**Step 1: Identify Singularities and their Location relative to $C$**

The integrand is $f(z) = \frac{e^z}{(z-2)(z-3)}$. The singular points are $z=2$ and $z=3$. The contour $C$ is a circle centered at the origin with radius $2.5$.
- For $z=2$: $|2|=2 < 2.5$. So $z=2$ is **inside** $C$.
- For $z=3$: $|3|=3 > 2.5$. So $z=3$ is **outside** $C$.

Since only $z=2$ is inside the contour, we can rewrite the integrand to apply Cauchy's Integral Formula.

**Step 2: Apply Cauchy's Integral Formula**

We want the form $\oint_C \frac{g(z)}{z - z_0} dz$. Here $z_0 = 2$, so let $g(z) = \frac{e^z}{z-3}$. Is $g(z)$ analytic within and on $C$? Yes, because its only singularity is $z=3$, which is outside $C$.
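
The formula can be cross-checked numerically before finishing the example. This is a minimal sketch. It assumes the contour is parametrized as $z(t) = R e^{it}$, $0 \le t \le 2\pi$, which is the parametric form above with $z'(t) = iRe^{it}$, and it approximates the integral with the trapezoidal rule. `contour_integral_circle` and the sample count `n` are hypothetical names and choices, not a library API. For this integrand the result should match $2\pi i\, g(2) = 2\pi i \cdot \frac{e^2}{2-3} = -2\pi i e^2$. For $\frac{\cos z}{(z-\pi/2)^2}$ on $|z| = 2$, the derivative form with $n = 1$ predicts $2\pi i f'(\pi/2) = 2\pi i(-\sin\frac{\pi}{2}) = -2\pi i$.

```python
import cmath
import math

def contour_integral_circle(f, radius: float, n: int = 2000) -> complex:
    """Approximate the counter-clockwise integral of f over the circle |z| = radius.

    Parametrize z(t) = radius * e^{it}, so dz = i z dt, and apply the trapezoidal
    rule in t; for a smooth periodic integrand this converges very quickly.
    """
    dt = 2 * math.pi / n
    total = 0j
    for k in range(n):
        z = radius * cmath.exp(1j * k * dt)
        total += f(z) * 1j * z  # f(z(t)) * z'(t)
    return total * dt

def example_2020(z: complex) -> complex:
    """Integrand e^z / ((z - 2)(z - 3)); only the pole z = 2 lies inside |z| = 2.5."""
    return cmath.exp(z) / ((z - 2) * (z - 3))

def derivative_form(z: complex) -> complex:
    """Integrand cos z / (z - pi/2)^2; the pole z = pi/2 lies inside |z| = 2."""
    return cmath.cos(z) / (z - math.pi / 2) ** 2

if __name__ == "__main__":
    print(contour_integral_circle(example_2020, 2.5), -2j * math.pi * math.e ** 2)
    print(contour_integral_circle(derivative_form, 2.0), -2j * math.pi)
```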
Therefore, by Cauchy's Integral Formula: $\oint_C \frac{g(z)}{z - 2} dz = 2\pi i g(2)$.

**Step 3: Calculate $g(2)$**

$g(2) = \frac{e^2}{2-3} = \frac{e^2}{-1} = -e^2$.

**Step 4: Final Result**

The value of the integral is $2\pi i (-e^2) = -2\pi i e^2$.

**Common Trap:** Incorrectly identifying which singularities are inside the contour, or algebraic errors in evaluating $g(z_0)$.

#### ESE Example (2018, 5 Marks)

Evaluate $\oint_C \frac{\cos z}{(z-\pi/2)^2} dz$ where $C$ is the circle $|z|=2$ traversed counter-clockwise.

**Solution:**

**Step 1: Identify Singularities and their Location relative to $C$**

The singularity is $z_0 = \pi/2$. The contour $C$ is a circle centered at the origin with radius $2$. $|\pi/2| \approx 3.14159/2 \approx 1.57$. Since $1.57 < 2$, $z_0 = \pi/2$ is **inside** $C$.

**Step 2: Apply Cauchy's Integral Formula for Derivatives**

The integrand has the form $\frac{f(z)}{(z - z_0)^{n+1}}$ with $f(z) = \cos z$ (analytic everywhere), $z_0 = \pi/2$, and $n = 1$:
$$\oint_C \frac{\cos z}{(z-\pi/2)^2} dz = \frac{2\pi i}{1!} f'(\pi/2).$$

**Step 3: Final Result**

$f'(z) = -\sin z$, so $f'(\pi/2) = -\sin(\pi/2) = -1$. The value of the integral is $2\pi i (-1) = -2\pi i$.

### Probability & Statistics: Introduction

Probability and Statistics are essential for dealing with uncertainty, analyzing data, and making informed decisions in engineering. ESE questions cover basic probability, distributions, and hypothesis testing.

#### Why Study Probability & Statistics?

- **Signal Processing:** Noise analysis, filter design.
- **Communications:** Error rates, channel capacity.
- **Quality Control:** Process monitoring, reliability.
- **Machine Learning:** Fundamentally based on statistical models.

#### Key Concepts Intuition

- **Probability:** Likelihood of an event occurring.
- **Random Variable:** A variable whose value is a numerical outcome of a random phenomenon.
- **Distribution:** Describes the probabilities of different values of a random variable.
- **Hypothesis Testing:** Using data to make inferences about a population.

### Basic Probability

This section covers fundamental concepts and rules of probability, which are building blocks for understanding distributions and statistical inference. High-weight area for ESE.

#### 1. Definitions

- **Experiment:** Any process that generates well-defined outcomes.
- **Sample Space ($S$):** The set of all possible outcomes of an experiment.
- **Event ($E$):** A subset of the sample space.
- **Probability of an Event ($P(E)$):**
  - For equally likely outcomes: $P(E) = \frac{\text{Number of favorable outcomes}}{\text{Total number of possible outcomes}}$.
  - Axioms of Probability:
    1. $0 \le P(E) \le 1$
    2. $P(S) = 1$
    3. For mutually exclusive events $E_1, E_2, ...$, $P(E_1 \cup E_2 \cup ...) = P(E_1) + P(E_2) + ...$.

#### 2. Rules of Probability

- **Complement Rule:** $P(E') = 1 - P(E)$, where $E'$ is the complement of $E$.
- **Addition Rule (for any two events $A, B$):** $P(A \cup B) = P(A) + P(B) - P(A \cap B)$.
  - For mutually exclusive events ($A \cap B = \emptyset$): $P(A \cup B) = P(A) + P(B)$.
- **Conditional Probability:** $P(A|B) = \frac{P(A \cap B)}{P(B)}$, provided $P(B) > 0$. (Probability of $A$ given $B$ has occurred.)
- **Multiplication Rule:** $P(A \cap B) = P(A|B) P(B) = P(B|A) P(A)$.
  - For independent events ($A, B$ are independent if $P(A|B) = P(A)$ or $P(B|A) = P(B)$): $P(A \cap B) = P(A) P(B)$.

#### 3. Bayes' Theorem

- **Formula:** $P(A|B) = \frac{P(B|A) P(A)}{P(B)}$
- **Total Probability Rule:** $P(B) = P(B|A_1)P(A_1) + P(B|A_2)P(A_2) + ... + P(B|A_n)P(A_n)$, where the $A_i$ form a partition of the sample space.
- **Intuition:** Updates the probability of an event based on new evidence.

#### ESE Example (2020, 2 Marks)

A bag contains 5 red and 3 black balls. If two balls are drawn at random without replacement, what is the probability that both are red?

**Solution:**

Let $R_1$ be the event that the first ball drawn is red. Let $R_2$ be the event that the second ball drawn is red. We want to find $P(R_1 \cap R_2)$.

**Method 1: Using Conditional Probability**

$P(R_1) = \frac{\text{Number of red balls}}{\text{Total number of balls}} = \frac{5}{8}$.

After drawing one red ball, there are 4 red balls left and a total of 7 balls. $P(R_2|R_1) = \frac{\text{Number of remaining red balls}}{\text{Total remaining balls}} = \frac{4}{7}$.
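
Both probabilities above can be cross-checked by brute force. The sketch below is illustrative only, and its function names are hypothetical. It labels the 8 balls, lists all $8 \times 7 = 56$ ordered pairs of distinct balls (drawing without replacement), and counts them exactly with `fractions.Fraction`. It also reproduces the product $\frac{5}{8} \times \frac{4}{7}$ and the combinations ratio $\binom{5}{2}/\binom{8}{2}$. Full enumeration is only practical for small sample spaces like this one.

```python
from fractions import Fraction
from itertools import permutations
from math import comb

# 5 red ("R") and 3 black ("B") balls; list indices make each ball distinguishable.
BALLS = ["R"] * 5 + ["B"] * 3

def ordered_draws():
    """All ordered pairs (first, second) of distinct balls: drawing without replacement."""
    return list(permutations(range(len(BALLS)), 2))

def p_both_red_enumeration() -> Fraction:
    draws = ordered_draws()
    both_red = sum(1 for i, j in draws if BALLS[i] == "R" and BALLS[j] == "R")
    return Fraction(both_red, len(draws))

def p_second_red_given_first_red() -> Fraction:
    """P(R2 | R1) = (# draws with both red) / (# draws whose first ball is red)."""
    first_red = [(i, j) for i, j in ordered_draws() if BALLS[i] == "R"]
    both_red = [(i, j) for i, j in first_red if BALLS[j] == "R"]
    return Fraction(len(both_red), len(first_red))

def p_both_red_multiplication() -> Fraction:
    """Method 1: P(R1) * P(R2 | R1)."""
    return Fraction(5, 8) * Fraction(4, 7)

def p_both_red_combinations() -> Fraction:
    """Method 2: C(5, 2) / C(8, 2)."""
    return Fraction(comb(5, 2), comb(8, 2))

if __name__ == "__main__":
    print(p_both_red_enumeration())        # 5/14
    print(p_second_red_given_first_red())  # 4/7
    print(p_both_red_multiplication(), p_both_red_combinations())  # 5/14 5/14
```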
Using the multiplication rule for dependent events:
$P(R_1 \cap R_2) = P(R_1) P(R_2|R_1) = \frac{5}{8} \times \frac{4}{7} = \frac{20}{56} = \frac{5}{14}$.

**Method 2: Using Combinations**
Total ways to draw 2 balls from 8: $\binom{8}{2} = \frac{8 \times 7}{2 \times 1} = 28$.
Ways to draw 2 red balls from 5: $\binom{5}{2} = \frac{5 \times 4}{2 \times 1} = 10$.
Probability $= \frac{\text{Favorable outcomes}}{\text{Total outcomes}} = \frac{10}{28} = \frac{5}{14}$.

#### ESE Example (2019, 5 Marks)
In a factory, machine A produces 60% of the total output and machine B produces 40%. Machine A produces 2% defective items, and machine B produces 3% defective items. If a randomly selected item is found to be defective, what is the probability that it was produced by machine B?

**Solution:**
Let $A$ be the event that the item is produced by machine A, $B$ the event that it is produced by machine B, and $D$ the event that it is defective.

Given probabilities:
- $P(A) = 0.60$
- $P(B) = 0.40$
- $P(D|A) = 0.02$ (probability that an item is defective given it was produced by A)
- $P(D|B) = 0.03$ (probability that an item is defective given it was produced by B)

We need $P(B|D)$, the probability that the item was produced by B given that it is defective.

Use Bayes' Theorem: $P(B|D) = \frac{P(D|B) P(B)}{P(D)}$.

First, find $P(D)$ using the Total Probability Rule:
$P(D) = P(D|A)P(A) + P(D|B)P(B) = (0.02)(0.60) + (0.03)(0.40) = 0.012 + 0.012 = 0.024$.

Now substitute into Bayes' Theorem:
$P(B|D) = \frac{(0.03)(0.40)}{0.024} = \frac{0.012}{0.024} = \frac{1}{2} = 0.5$.

The probability that the defective item was produced by machine B is 0.5 (50%).

**Common Trap:** Confusing $P(A|B)$ with $P(B|A)$. Define the events clearly and identify exactly which conditional probability is being asked for.

**Self-Practice Problems:**
1. A fair coin is tossed three times. What is the probability of getting exactly two heads?
2. Two dice are rolled. What is the probability that the sum of the numbers is 7?
3. In a class, 40% of students study Math, 30% study Physics, and 10% study both. What is the probability that a randomly chosen student studies Math or Physics?
4. A card is drawn from a well-shuffled deck of 52 cards. What is the probability that it is a King or a Spade?
5. A box contains 10 items, 3 of which are defective. If 2 items are selected at random without replacement, what is the probability that exactly one is defective?

**Answers:**
1. Sample space: HHH, HHT, HTH, THH, HTT, THT, TTH, TTT (8 outcomes). Exactly two heads: HHT, HTH, THH (3 outcomes). Probability $= 3/8$.
2. Total outcomes: $6 \times 6 = 36$. Sums of 7: (1,6), (2,5), (3,4), (4,3), (5,2), (6,1) (6 outcomes). Probability $= 6/36 = 1/6$.
3. $P(M) = 0.4$, $P(P) = 0.3$, $P(M \cap P) = 0.1$. $P(M \cup P) = P(M) + P(P) - P(M \cap P) = 0.4 + 0.3 - 0.1 = 0.6$.
4. $P(K) = 4/52 = 1/13$, $P(S) = 13/52 = 1/4$, $P(K \cap S) = P(\text{King of Spades}) = 1/52$. $P(K \cup S) = P(K) + P(S) - P(K \cap S) = 4/52 + 13/52 - 1/52 = 16/52 = 4/13$.
5. Total ways to select 2 items from 10: $\binom{10}{2} = 45$. Ways to select 1 defective (from 3) and 1 non-defective (from 7): $\binom{3}{1} \times \binom{7}{1} = 3 \times 7 = 21$. Probability $= 21/45 = 7/15$.

### Random Variables and Distributions
Random variables provide a mathematical framework for describing the outcomes of random experiments, and probability distributions quantify how likely those outcomes are. This is a very high-weight area for ESE, especially the Binomial, Poisson, and Normal distributions.

#### 1. Random Variables
- **Definition:** A function that maps outcomes of a random experiment to real numbers.
- **Types:**
  - **Discrete Random Variable:** Takes a finite or countably infinite number of values (e.g., number of heads, number of defects).
  - **Continuous Random Variable:** Takes any value within a given range (e.g., height, temperature, current).

#### 2. Discrete Probability Distributions
- **Probability Mass Function (PMF):** $P(X=x)$ for a discrete RV, with $\sum_x P(X=x) = 1$.
- **Cumulative Distribution Function (CDF):** $F(x) = P(X \le x) = \sum_{t \le x} P(X=t)$.
- **Expected Value (Mean):** $E[X] = \sum_x x P(X=x)$.
- **Variance:** $Var(X) = E[X^2] - (E[X])^2 = \sum_x (x - E[X])^2 P(X=x)$.

##### Common Discrete Distributions
- **Bernoulli Distribution:** $X \sim Ber(p)$. The outcome is 0 or 1 (failure/success). $P(X=1)=p$, $P(X=0)=1-p$. $E[X]=p$, $Var(X)=p(1-p)$.
- **Binomial Distribution:** $X \sim B(n,p)$. Number of successes in $n$ independent Bernoulli trials. $P(X=k) = \binom{n}{k} p^k (1-p)^{n-k}$ for $k = 0, 1, \dots, n$. $E[X]=np$, $Var(X)=np(1-p)$.
- **Poisson Distribution:** $X \sim Poi(\lambda)$. Number of events in a fixed interval of time or space, when events occur independently at a known average rate $\lambda$. $P(X=k) = \frac{e^{-\lambda} \lambda^k}{k!}$ for $k = 0, 1, 2, \dots$. $E[X]=\lambda$, $Var(X)=\lambda$. (Often used to approximate the Binomial when $n$ is large and $p$ is small, with $\lambda=np$.)

#### 3. Continuous Probability Distributions
- **Probability Density Function (PDF):** $f(x)$ for a continuous RV, with $\int_{-\infty}^\infty f(x) dx = 1$ and $P(a \le X \le b) = \int_a^b f(x) dx$.
- **Cumulative Distribution Function (CDF):** $F(x) = P(X \le x) = \int_{-\infty}^x f(t) dt$, and $f(x) = F'(x)$.
- **Expected Value (Mean):** $E[X] = \int_{-\infty}^\infty x f(x) dx$.
- **Variance:** $Var(X) = E[X^2] - (E[X])^2 = \int_{-\infty}^\infty (x - E[X])^2 f(x) dx$.

##### Common Continuous Distributions
- **Uniform Distribution:** $X \sim U(a,b)$. All values in $[a,b]$ are equally likely. $f(x) = \frac{1}{b-a}$ for $a \le x \le b$, $0$ otherwise. $E[X]=\frac{a+b}{2}$, $Var(X)=\frac{(b-a)^2}{12}$.
- **Exponential Distribution:** $X \sim Exp(\lambda)$. Models the waiting time until the next event in a Poisson process, and has the memoryless property. $f(x) = \lambda e^{-\lambda x}$ for $x \ge 0$, $0$ otherwise. $E[X]=1/\lambda$, $Var(X)=1/\lambda^2$.
- **Normal (Gaussian) Distribution:** $X \sim N(\mu, \sigma^2)$.
The most widely used distribution in engineering and statistics; its density is the bell-shaped curve. $f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-(x-\mu)^2/(2\sigma^2)}$. $E[X]=\mu$, $Var(X)=\sigma^2$.
- **Standard Normal Distribution:** $Z \sim N(0,1)$. Any $X \sim N(\mu, \sigma^2)$ is standardized by $Z = \frac{X-\mu}{\sigma}$, after which probabilities are read from Z-tables.

#### ESE Example (2021, 5 Marks)
A fair coin is tossed 10 times.
(i) What is the probability of getting exactly 7 heads?
(ii) What is the probability of getting at least 8 heads?

**Solution:**
This is a Binomial distribution problem with $n = 10$ (number of trials) and $p = 0.5$ (probability of a head for a fair coin), so $X \sim B(10, 0.5)$.
PMF: $P(X=k) = \binom{n}{k} p^k (1-p)^{n-k} = \binom{10}{k} (0.5)^k (0.5)^{10-k} = \binom{10}{k} (0.5)^{10}$.

(i) **Probability of exactly 7 heads ($P(X=7)$):**
$P(X=7) = \binom{10}{7} (0.5)^{10}$, where $\binom{10}{7} = \binom{10}{10-7} = \binom{10}{3} = \frac{10 \times 9 \times 8}{3 \times 2 \times 1} = 10 \times 3 \times 4 = 120$ and $(0.5)^{10} = \frac{1}{2^{10}} = \frac{1}{1024}$.
$P(X=7) = 120 \times \frac{1}{1024} = \frac{120}{1024} = \frac{15}{128} = 0.1171875$.

(ii) **Probability of at least 8 heads ($P(X \ge 8)$):**
$P(X \ge 8) = P(X=8) + P(X=9) + P(X=10)$.
$P(X=8) = \binom{10}{8} (0.5)^{10} = \binom{10}{2} (0.5)^{10} = \frac{10 \times 9}{2} \times \frac{1}{1024} = \frac{45}{1024}$.
$P(X=9) = \binom{10}{9} (0.5)^{10} = \binom{10}{1} (0.5)^{10} = \frac{10}{1024}$.
$P(X=10) = \binom{10}{10} (0.5)^{10} = \frac{1}{1024}$.
$P(X \ge 8) = \frac{45 + 10 + 1}{1024} = \frac{56}{1024} = \frac{7}{128} = 0.0546875$.

**Common Trap:** Calculation errors with combinations or powers of 0.5.

#### ESE Example (2018, 5 Marks)
The average number of calls received by a call center is 3 per minute. Assuming a Poisson distribution, what is the probability that in a randomly selected minute:
(i) Exactly 2 calls are received.
(ii) More than 2 calls are received.

**Solution:**
This is a Poisson distribution problem with average rate $\lambda = 3$ calls per minute.
PMF: $P(X=k) = \frac{e^{-\lambda} \lambda^k}{k!} = \frac{e^{-3} 3^k}{k!}$.
(Note: ESE questions usually provide the value of $e^{-3}$ or accept answers left in terms of $e^{-3}$.)

(i) **Probability of exactly 2 calls ($P(X=2)$):**
$P(X=2) = \frac{e^{-3} 3^2}{2!} = \frac{9 e^{-3}}{2} = 4.5 e^{-3}$.
Using $e^{-3} \approx 0.0498$: $P(X=2) \approx 4.5 \times 0.0498 = 0.2241$.

(ii) **Probability of more than 2 calls ($P(X > 2)$):**
$P(X > 2) = 1 - P(X \le 2)$, where $P(X \le 2) = P(X=0) + P(X=1) + P(X=2)$.
$P(X=0) = \frac{e^{-3} 3^0}{0!} = e^{-3}$.
$P(X=1) = \frac{e^{-3} 3^1}{1!} = 3 e^{-3}$.
$P(X=2) = 4.5 e^{-3}$ (from part i).
$P(X \le 2) = (1 + 3 + 4.5)e^{-3} = 8.5e^{-3}$, so $P(X > 2) = 1 - 8.5e^{-3}$.
Using $e^{-3} \approx 0.0498$: $P(X > 2) \approx 1 - 8.5 \times 0.0498 = 1 - 0.4233 = 0.5767$.

**Common Trap:** Misinterpreting "more than 2" as $P(X \ge 2)$ instead of $P(X > 2)$, and arithmetic errors with factorials.

**Self-Practice Problems:**
1. A company manufactures light bulbs, 5% of which are defective. If a random sample of 10 bulbs is taken, what is the probability that none are defective?
2. The number of accidents on a highway follows a Poisson distribution with an average of 4 accidents per week. What is the probability of exactly 3 accidents in a given week?
3. The height of students in a college is normally distributed with a mean of 160 cm and a standard deviation of 5 cm. What is the probability that a randomly selected student has a height between 150 cm and 165 cm? (Given: $P(0 \le Z \le 2) \approx 0.4772$, $P(0 \le Z \le 1) \approx 0.3413$.)
4. A continuous random variable $X$ has PDF $f(x) = kx$ for $0 \le x \le 2$, and $0$ otherwise. Find the value of $k$.
5. For the random variable in problem 4, find $P(X > 1)$.
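The Binomial (coin-toss) and Poisson (call-center) calculations in the two worked examples above can be cross-checked numerically. Below is a minimal Python sketch that uses only the standard library (`math.comb` needs Python 3.8 or later). The helper names `binomial_pmf` and `poisson_pmf` are illustrative choices, not part of any library.

```python
from math import comb, exp, factorial


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ B(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poi(lam)."""
    return exp(-lam) * lam**k / factorial(k)


# ESE 2021 example: fair coin tossed 10 times, X ~ B(10, 0.5)
p_exactly_7 = binomial_pmf(7, 10, 0.5)                              # 15/128
p_at_least_8 = sum(binomial_pmf(k, 10, 0.5) for k in range(8, 11))  # 7/128

# ESE 2018 example: calls per minute, X ~ Poi(3)
p_two_calls = poisson_pmf(2, 3.0)                                   # 4.5 e^{-3}
p_more_than_two = 1 - sum(poisson_pmf(k, 3.0) for k in range(3))    # 1 - 8.5 e^{-3}

print(f"{p_exactly_7:.7f} {p_at_least_8:.7f}")     # 0.1171875 0.0546875
print(f"{p_two_calls:.4f} {p_more_than_two:.4f}")  # 0.2240 0.5768
```

The Poisson results differ from the hand calculation in the fourth decimal place. This is because the hand calculation rounds $e^{-3}$ to $0.0498$, while the script uses the full-precision value.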
**Answers:**
1. Binomial distribution with $n=10$, $p=0.05$: $P(X=0) = \binom{10}{0} (0.05)^0 (0.95)^{10} = (0.95)^{10} \approx 0.5987$.
2. Poisson distribution with $\lambda=4$: $P(X=3) = \frac{e^{-4} 4^3}{3!} = \frac{64 e^{-4}}{6} = \frac{32}{3} e^{-4} \approx 10.667 \times 0.01832 \approx 0.1954$.
3. $X \sim N(160, 5^2)$; we want $P(150 \le X \le 165)$. Standardize: for $X=150$, $Z_1 = (150-160)/5 = -2$; for $X=165$, $Z_2 = (165-160)/5 = 1$.
   $P(-2 \le Z \le 1) = P(-2 \le Z \le 0) + P(0 \le Z \le 1) = P(0 \le Z \le 2) + P(0 \le Z \le 1)$ (by symmetry of the normal distribution) $= 0.4772 + 0.3413 = 0.8185$.
4. $\int_0^2 kx\, dx = 1 \implies k \left[\frac{x^2}{2}\right]_0^2 = 1 \implies k \left(\frac{4}{2} - 0\right) = 1 \implies 2k = 1 \implies k = 1/2$.
5. $P(X > 1) = \int_1^2 \frac{1}{2}x\, dx = \frac{1}{2} \left[\frac{x^2}{2}\right]_1^2 = \frac{1}{2} \left(\frac{4}{2} - \frac{1}{2}\right) = \frac{1}{2} \cdot \frac{3}{2} = \frac{3}{4}$.

### Numerical Methods: Introduction
Numerical methods are essential when analytical solutions are difficult or impossible to obtain; they provide approximate solutions to mathematical problems. ESE focuses on solving linear systems, finding roots, and numerical integration.

#### Why Study Numerical Methods?
- **Solving Complex Equations:** Non-linear and transcendental equations.
- **Large Systems:** Solving systems with many variables (e.g., FEM, CFD).
- **Approximation:** When analytical solutions are not feasible.

#### Key Concepts Intuition
- **Iteration:** Repeating a process to get closer to a solution.
- **Approximation:** Finding a value close enough to the true solution, within an acceptable error.
- **Convergence:** Whether an iterative method approaches the true solution.

### Solving Systems of Linear Equations (Numerical)
For large systems, direct methods become computationally expensive, so iterative methods are often preferred. ESE often tests the Gauss-Seidel and Jacobi methods.

#### 1. Direct Methods (Briefly)
- **Gauss Elimination:** Reduces the coefficient matrix to upper triangular form by row operations, then solves by back-substitution.
- **LU Decomposition:** Factors $A$ into $L$ (lower triangular) and $U$ (upper triangular) so that $A = LU$. It then solves $Ly=b$ by forward substitution and $Ux=y$ by back-substitution.

#### 2. Iterative Methods
- **General Idea:** Start with an initial guess $x^{(0)}$ and generate a sequence of approximations $x^{(1)}, x^{(2)}, \dots$ until convergence.
- **Condition for Convergence:** The Jacobi and Gauss-Seidel methods converge for any initial guess if the matrix $A$ is strictly diagonally dominant, i.e., $|a_{ii}| > \sum_{j \neq i} |a_{ij}|$ for all $i$. This condition is sufficient but not necessary.

##### a. Jacobi Iteration
- **System:** $Ax=b$, i.e.
  $$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n &= b_1 \\ &\;\vdots \\ a_{n1}x_1 + a_{n2}x_2 + \dots + a_{nn}x_n &= b_n \end{aligned}$$
- **Iterative Formula:** Solve each equation for its diagonal variable:
  $$\begin{aligned} x_1^{(k+1)} &= \frac{1}{a_{11}} \big(b_1 - a_{12}x_2^{(k)} - \dots - a_{1n}x_n^{(k)}\big) \\ &\;\;\vdots \\ x_i^{(k+1)} &= \frac{1}{a_{ii}} \Big(b_i - \sum_{j \neq i} a_{ij}x_j^{(k)}\Big) \end{aligned}$$
- **Key Feature:** Uses the *old* values of all other variables ($x_j^{(k)}$) to compute each *new* value $x_i^{(k+1)}$.

##### b. Gauss-Seidel Iteration
- **Iterative Formula:**
  $$\begin{aligned} x_1^{(k+1)} &= \frac{1}{a_{11}} \big(b_1 - a_{12}x_2^{(k)} - \dots - a_{1n}x_n^{(k)}\big) \\ x_2^{(k+1)} &= \frac{1}{a_{22}} \big(b_2 - a_{21}x_1^{(k+1)} - a_{23}x_3^{(k)} - \dots - a_{2n}x_n^{(k)}\big) \\ &\;\;\vdots \\ x_i^{(k+1)} &= \frac{1}{a_{ii}} \Big(b_i - \sum_{j < i} a_{ij}x_j^{(k+1)} - \sum_{j > i} a_{ij}x_j^{(k)}\Big) \end{aligned}$$
- **Key Feature:** Uses the *most recently computed* values ($x_j^{(k+1)}$ for $j < i$) together with the old values ($x_j^{(k)}$ for $j > i$). This usually converges faster than Jacobi.
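The Jacobi and Gauss-Seidel update formulas above can be sketched in plain Python using lists only, with no external libraries. The function names are illustrative. This is a teaching sketch without a stopping tolerance, not a production solver. The only difference between the two sweeps is where each update is written. Jacobi builds a fresh vector from the previous iterate. Gauss-Seidel overwrites the current vector in place, so later rows immediately see the new values.

```python
def jacobi_step(A, b, x):
    """One Jacobi sweep: every component is computed from the previous iterate x only."""
    n = len(b)
    return [
        (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
        for i in range(n)
    ]


def gauss_seidel_step(A, b, x):
    """One Gauss-Seidel sweep: components j < i already hold their new values."""
    n = len(b)
    x = list(x)  # work on a copy so the caller's vector is unchanged
    for i in range(n):
        s = sum(A[i][j] * x[j] for j in range(n) if j != i)
        x[i] = (b[i] - s) / A[i][i]  # overwrite immediately (most recent value)
    return x


def is_strictly_diagonally_dominant(A):
    """|a_ii| > sum of |a_ij| (j != i) in every row: sufficient for convergence."""
    n = len(A)
    return all(
        abs(A[i][i]) > sum(abs(A[i][j]) for j in range(n) if j != i)
        for i in range(n)
    )


# System from the ESE 2020 example: 20x + y - 2z = 17, 3x + 20y - z = -18, 2x - 3y + 20z = 25
A = [[20, 1, -2],
     [3, 20, -1],
     [2, -3, 20]]
b = [17, -18, 25]

x = [0.0, 0.0, 0.0]
for _ in range(2):  # two Gauss-Seidel iterations, as in the worked example
    x = gauss_seidel_step(A, b, x)

print(is_strictly_diagonally_dominant(A), [round(v, 4) for v in x])
# True [1.0025, -0.9998, 0.9998]
```

You can replace `gauss_seidel_step` with `jacobi_step` in the loop to get the Jacobi iterates for comparison. For this system, those iterates approach $(1, -1, 1)$ more slowly.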
#### ESE Example (2020, 5 Marks)
Solve the following system of equations using the Gauss-Seidel method for two iterations, starting with $x=0, y=0, z=0$:
$$\begin{aligned} 20x + y - 2z &= 17 \\ 3x + 20y - z &= -18 \\ 2x - 3y + 20z &= 25 \end{aligned}$$

**Solution:**

**Step 1: Check for Diagonal Dominance (optional but good practice)**
- Row 1: $|20| > |1| + |-2| \implies 20 > 3$ (True)
- Row 2: $|20| > |3| + |-1| \implies 20 > 4$ (True)
- Row 3: $|20| > |2| + |-3| \implies 20 > 5$ (True)

The matrix is strictly diagonally dominant, so the Gauss-Seidel method is guaranteed to converge.

**Step 2: Rewrite the Equations for Iteration**
$$x = \frac{1}{20} (17 - y + 2z), \quad y = \frac{1}{20} (-18 - 3x + z), \quad z = \frac{1}{20} (25 - 2x + 3y)$$

**Step 3: First Iteration ($k=0 \to k=1$)**
Start with $x^{(0)}=0, y^{(0)}=0, z^{(0)}=0$.
- $x^{(1)} = \frac{1}{20} (17 - y^{(0)} + 2z^{(0)}) = \frac{1}{20} (17 - 0 + 0) = \frac{17}{20} = 0.85$.
- $y^{(1)} = \frac{1}{20} (-18 - 3x^{(1)} + z^{(0)}) = \frac{1}{20} (-18 - 3(0.85) + 0) = \frac{1}{20} (-18 - 2.55) = \frac{-20.55}{20} = -1.0275$.
- $z^{(1)} = \frac{1}{20} (25 - 2x^{(1)} + 3y^{(1)}) = \frac{1}{20} (25 - 2(0.85) + 3(-1.0275)) = \frac{1}{20} (25 - 1.7 - 3.0825) = \frac{20.2175}{20} = 1.010875$.

Results after the 1st iteration: $x^{(1)}=0.85$, $y^{(1)}=-1.0275$, $z^{(1)}=1.010875$.

**Step 4: Second Iteration ($k=1 \to k=2$)**
Start from $y^{(1)}, z^{(1)}$ and use each new value as soon as it is computed:
- $x^{(2)} = \frac{1}{20} (17 - y^{(1)} + 2z^{(1)}) = \frac{1}{20} (17 - (-1.0275) + 2(1.010875)) = \frac{1}{20} (17 + 1.0275 + 2.02175) = \frac{20.04925}{20} = 1.0024625$.
- $y^{(2)} = \frac{1}{20} (-18 - 3x^{(2)} + z^{(1)}) = \frac{1}{20} (-18 - 3(1.0024625) + 1.010875) = \frac{1}{20} (-18 - 3.0073875 + 1.010875) = \frac{-19.9965125}{20} \approx -0.9998256$.
- $z^{(2)} = \frac{1}{20} (25 - 2x^{(2)} + 3y^{(2)}) = \frac{1}{20} (25 - 2(1.0024625) + 3(-0.9998256)) = \frac{1}{20} (25 - 2.004925 - 2.9994768) = \frac{19.9955982}{20} \approx 0.9997799$.
Results after the 2nd iteration:
$x^{(2)} \approx 1.0025$, $y^{(2)} \approx -0.9998$, $z^{(2)} \approx 0.9998$.
(The exact solution is $x=1, y=-1, z=1$, so the method converges quickly here.)

**Common Trap:** Using old values for variables that have already been updated in the current iteration (i.e., performing a Jacobi step instead of a Gauss-Seidel step).

**Self-Practice Problems:**
1. Solve $x+y=3$, $2x+3y=8$ using Jacobi iteration for one iteration, starting with $x=0, y=0$.
2. Solve $5x - 2y = 11$, $x + 4y = 11$ using Gauss-Seidel iteration for one iteration, starting with $x=0, y=0$.
3. For the system $x-2y = 1$, $-2x+y = -1$, will Jacobi or Gauss-Seidel converge? Explain why.
4. Rewrite the system $3x+y=7$, $x+2y=4$ for Jacobi iteration.
5. If the exact solution to a system is $(1,2)$ and a numerical method gives $(1.05, 1.98)$, calculate the absolute error in $x$ and in $y$.

**Answers:**
1. Rewrite: $x = 3-y$, $y = \frac{1}{3}(8-2x)$. Then $x^{(1)} = 3-0 = 3$ and $y^{(1)} = \frac{1}{3}(8-2(0)) = 8/3 \approx 2.6667$. Result: $x^{(1)}=3$, $y^{(1)} \approx 2.6667$. (Note: this system is not strictly diagonally dominant, since $|1| \ngtr |1|$ in the first equation, so convergence is not guaranteed; one step can still be computed.)
2. Rewrite: $x = \frac{1}{5}(11+2y)$, $y = \frac{1}{4}(11-x)$. Then $x^{(1)} = \frac{1}{5}(11+2(0)) = 11/5 = 2.2$ and $y^{(1)} = \frac{1}{4}(11-x^{(1)}) = \frac{1}{4}(11-2.2) = \frac{8.8}{4} = 2.2$. Result: $x^{(1)}=2.2$, $y^{(1)}=2.2$. (The exact solution is $x=3, y=2$.)
3. Check diagonal dominance: in the 1st equation $|1| \ngtr |-2|$, and in the 2nd equation $|1| \ngtr |-2|$, so the system is not diagonally dominant. Neither Jacobi nor Gauss-Seidel is guaranteed to converge for this system in its current form. (Interchanging the two equations gives $-2x + y = -1$, $x - 2y = 1$, which is strictly diagonally dominant.)
4. $x = \frac{1}{3}(7-y)$, $y = \frac{1}{2}(4-x)$.
5. Absolute error in $x = |1.05 - 1| = 0.05$; absolute error in $y = |1.98 - 2| = 0.02$.

### Roots of Equations (Numerical)
Finding roots (zeros) of functions is a common task. Numerical methods are used when algebraic solutions are not possible.
ESE focuses on the Bisection, Newton-Raphson, and Regula-Falsi methods.

#### 1. Bisection Method
- **Principle:** If $f(x)$ is continuous on $[a,b]$ and $f(a)$ and $f(b)$ have opposite signs ($f(a)f(b) < 0$), then $f$ has at least one root in $(a,b)$ (Intermediate Value Theorem).

### Numerical Integration
Numerical integration (quadrature) approximates definite integrals when analytical integration is difficult or impossible. ESE often tests the Trapezoidal Rule and Simpson's Rule.

#### 1. Trapezoidal Rule
- **Principle:** Divides the interval into $n$ equal subintervals and approximates the area over each subinterval by a trapezoid.
- **Formula:** For $\int_a^b f(x) dx$, with $n$ subintervals and $h = (b-a)/n$:
  $$\int_a^b f(x) dx \approx \frac{h}{2} [f(x_0) + 2f(x_1) + 2f(x_2) + \dots + 2f(x_{n-1}) + f(x_n)]$$
  where $x_i = a + ih$.
- **Error:** The global error is proportional to $h^2$.

#### 2. Simpson's 1/3 Rule
- **Principle:** Approximates the area by fitting a parabola through each set of three consecutive points (i.e., over each pair of adjacent subintervals).
- **Formula:** For $\int_a^b f(x) dx$, with an *even* number $n$ of subintervals and $h = (b-a)/n$:
  $$\int_a^b f(x) dx \approx \frac{h}{3} [f(x_0) + 4f(x_1) + 2f(x_2) + 4f(x_3) + \dots + 2f(x_{n-2}) + 4f(x_{n-1}) + f(x_n)]$$
  where $x_i = a + ih$.
- **Conditions:** $n$ must be even.
- **Error:** The global error is proportional to $h^4$, so the rule is more accurate than the Trapezoidal Rule for the same $h$ (for sufficiently smooth $f$).

#### ESE Example (2021, 5 Marks)
Evaluate $\int_0^1 e^{-x^2} dx$ using the Trapezoidal Rule with $n=4$ subintervals.

**Solution:**
Interval $[a,b] = [0,1]$, number of subintervals $n=4$, step size $h = (b-a)/n = (1-0)/4 = 0.25$.
The points are $x_0=0, x_1=0.25, x_2=0.5, x_3=0.75, x_4=1$, and the function is $f(x) = e^{-x^2}$.
Evaluate $f(x)$ at these points:
- $f(x_0) = f(0) = e^{0} = 1$.
- $f(x_1) = f(0.25) = e^{-(0.25)^2} = e^{-0.0625} \approx 0.9394$.
- $f(x_2) = f(0.5) = e^{-(0.5)^2} = e^{-0.25} \approx 0.7788$.
- $f(x_3) = f(0.75) = e^{-(0.75)^2} = e^{-0.5625} \approx 0.5698$.
- $f(x_4) = f(1) = e^{-1} \approx 0.3679$.
Apply the Trapezoidal Rule formula:
$$\int_0^1 e^{-x^2} dx \approx \frac{h}{2} [f(x_0) + 2f(x_1) + 2f(x_2) + 2f(x_3) + f(x_4)]$$
$$= \frac{0.25}{2} [1 + 2(0.9394) + 2(0.7788) + 2(0.5698) + 0.3679] = 0.125 [1 + 1.8788 + 1.5576 + 1.1396 + 0.3679] = 0.125 \times 5.9439 \approx 0.7430.$$

**Common Trap:** Arithmetic errors, especially when summing the terms or applying the weights (2 in the Trapezoidal Rule; 4 and 2 in Simpson's Rule).

#### ESE Example (2018, 5 Marks)
Use Simpson's 1/3 Rule to evaluate $\int_0^2 \frac{1}{1+x^2} dx$ with $n=4$ subintervals.

**Solution:**
Interval $[a,b] = [0,2]$, number of subintervals $n=4$ (even, so Simpson's 1/3 Rule is applicable), step size $h = (b-a)/n = (2-0)/4 = 0.5$.
The points are $x_0=0, x_1=0.5, x_2=1, x_3=1.5, x_4=2$, and the function is $f(x) = \frac{1}{1+x^2}$.
Evaluate $f(x)$ at these points:
- $f(x_0) = f(0) = \frac{1}{1+0^2} = 1$.
- $f(x_1) = f(0.5) = \frac{1}{1+0.25} = \frac{1}{1.25} = 0.8$.
- $f(x_2) = f(1) = \frac{1}{1+1^2} = \frac{1}{2} = 0.5$.
- $f(x_3) = f(1.5) = \frac{1}{1+2.25} = \frac{1}{3.25} \approx 0.30769$.
- $f(x_4) = f(2) = \frac{1}{1+2^2} = \frac{1}{5} = 0.2$.

Apply the Simpson's 1/3 Rule formula:
$$\int_0^2 \frac{1}{1+x^2} dx \approx \frac{h}{3} [f(x_0) + 4f(x_1) + 2f(x_2) + 4f(x_3) + f(x_4)]$$
$$= \frac{0.5}{3} [1 + 4(0.8) + 2(0.5) + 4(0.30769) + 0.2] = \frac{0.5}{3} [1 + 3.2 + 1 + 1.23076 + 0.2] = \frac{0.5}{3} \times 6.63076 \approx 1.10513.$$
(Analytical solution: $\int_0^2 \frac{1}{1+x^2} dx = [\tan^{-1} x]_0^2 = \tan^{-1}(2) - \tan^{-1}(0) \approx 1.10715$. The approximation is accurate to about $0.002$.)

**Common Trap:** Using Simpson's 1/3 Rule with an odd number of subintervals $n$. Always check that $n$ is even.

**Self-Practice Problems:**
1. Evaluate $\int_0^1 x^2 dx$ using the Trapezoidal Rule with $n=2$. Compare with the exact value.
2. Evaluate $\int_0^2 e^x dx$ using Simpson's 1/3 Rule with $n=2$. Compare with the exact value.
3. What is the condition on the number of subintervals $n$ for Simpson's 1/3 Rule?
4. Calculate $h$ for the Trapezoidal Rule to approximate $\int_1^3 \ln x\, dx$ with 5 subintervals.
5. If the integral of $f(x)$ from $a$ to $b$ is approximated by the Trapezoidal Rule with $n$ subintervals and the exact value is known, how is the absolute error of the approximation computed?

**Answers:**
1. $h=(1-0)/2 = 0.5$; $x_0=0, x_1=0.5, x_2=1$; $f(0)=0, f(0.5)=0.25, f(1)=1$.
   $\int_0^1 x^2 dx \approx \frac{0.5}{2} [f(0) + 2f(0.5) + f(1)] = 0.25 [0 + 2(0.25) + 1] = 0.25 \times 1.5 = 0.375$.
   Exact value: $\int_0^1 x^2 dx = [\frac{x^3}{3}]_0^1 = \frac{1}{3} \approx 0.3333$.
2. $h=(2-0)/2 = 1$; $x_0=0, x_1=1, x_2=2$; $f(0)=e^0=1, f(1)=e \approx 2.7183, f(2)=e^2 \approx 7.3891$.
   $\int_0^2 e^x dx \approx \frac{1}{3} [f(0) + 4f(1) + f(2)] = \frac{1}{3} [1 + 10.8732 + 7.3891] = \frac{1}{3} \times 19.2623 \approx 6.4207$.
   Exact value: $\int_0^2 e^x dx = [e^x]_0^2 = e^2 - 1 \approx 6.3891$.
3. The number of subintervals $n$ must be even.
4. $h = (3-1)/5 = 2/5 = 0.4$.
5. Absolute error $= |\text{Exact Value} - \text{Approximate Value}|$.
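Both composite rules can be sketched in a few lines of Python using only the standard library. The function names `trapezoidal` and `simpson_13` are illustrative. The sketch reproduces the two worked ESE examples, and the `n % 2` check encodes the even-$n$ requirement of Simpson's 1/3 Rule.

```python
import math


def trapezoidal(f, a, b, n):
    """Composite Trapezoidal Rule with n equal subintervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))  # x_1 .. x_{n-1}, weight 2
    return h / 2 * (f(a) + 2 * interior + f(b))


def simpson_13(f, a, b, n):
    """Composite Simpson's 1/3 Rule; n must be even."""
    if n % 2 != 0:
        raise ValueError("Simpson's 1/3 Rule needs an even number of subintervals")
    h = (b - a) / n
    odd = sum(f(a + i * h) for i in range(1, n, 2))   # x_1, x_3, ... weight 4
    even = sum(f(a + i * h) for i in range(2, n, 2))  # x_2, x_4, ... weight 2
    return h / 3 * (f(a) + 4 * odd + 2 * even + f(b))


# ESE 2021 example: Trapezoidal Rule on e^{-x^2} over [0, 1], n = 4
trap = trapezoidal(lambda x: math.exp(-x * x), 0.0, 1.0, 4)
# ESE 2018 example: Simpson's 1/3 Rule on 1/(1+x^2) over [0, 2], n = 4
simp = simpson_13(lambda x: 1 / (1 + x * x), 0.0, 2.0, 4)

print(f"{trap:.4f} {simp:.5f} {math.atan(2):.5f}")  # 0.7430 1.10513 1.10715
```

The same functions can check the self-practice answers. For example, `trapezoidal(lambda x: x * x, 0.0, 1.0, 2)` gives 0.375, and `simpson_13(math.exp, 0.0, 2.0, 2)` gives about 6.4207.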