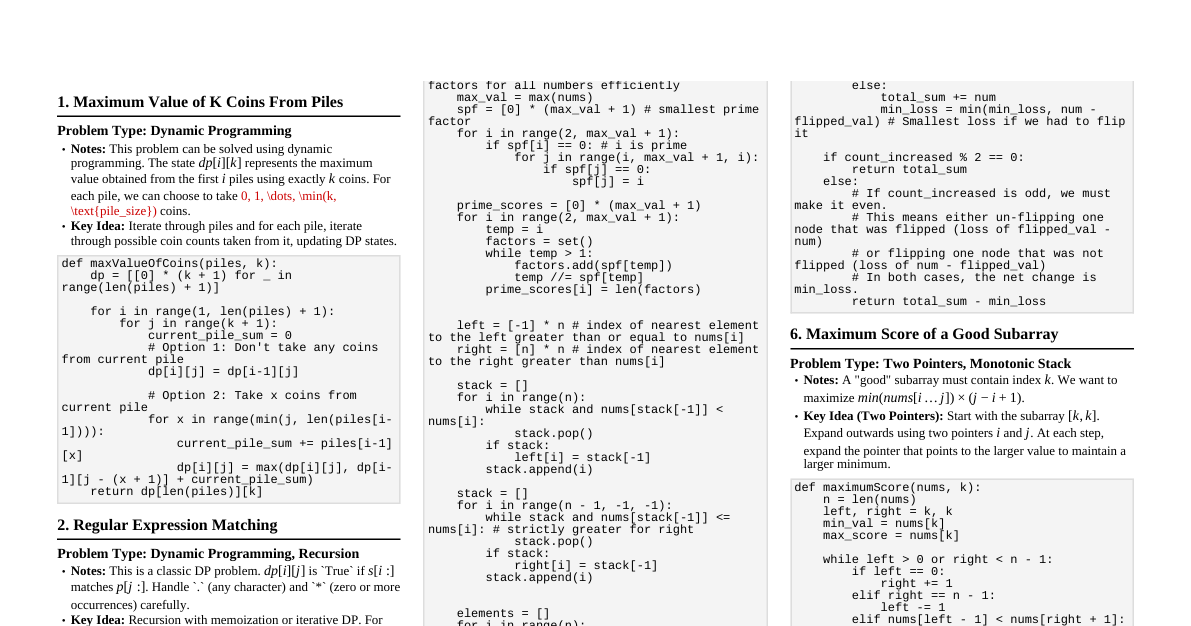

1. Minimize Deviation in Array Concept: Use a max-heap to keep track of the largest element. Repeatedly reduce the largest element (divide even by 2, multiply odd by 2 once to make it even) and update the minimum possible value in the array. The goal is to minimize the difference between the max and min elements. Effective Solution: Start by making all odd numbers even (multiply by 2). Then, use a max-heap. In each step, extract the maximum element, update the minimum seen so far, and if the extracted element is even, divide it by 2 and push it back. import heapq class Solution: def minimumDeviation(self, nums: list[int]) -> int: pq = [] min_val = float('inf') for x in nums: if x % 2 == 1: x *= 2 heapq.heappush(pq, -x) # Max-heap by storing negative values min_val = min(min_val, x) min_deviation = float('inf') while True: max_val = -heapq.heappop(pq) min_deviation = min(min_deviation, max_val - min_val) if max_val % 2 == 1: # Cannot divide further break max_val //= 2 min_val = min(min_val, max_val) heapq.heappush(pq, -max_val) return min_deviation 2. Recover a Tree From Preorder Traversal Concept: Reconstruct a binary tree given its preorder traversal string, where depth is indicated by dashes. Effective Solution: Use recursion or a stack. The number of leading dashes indicates the depth. When parsing, if the current node's depth is greater than the parent's, it's a child. If it's equal, it's a sibling. If less, we backtrack. # Definition for a binary tree node. class TreeNode: def __init__(self, val=0, left=None, right=None): self.val = val self.left = left self.right = right class Solution: def recoverFromPreorder(self, S: str) -> TreeNode: path = [] # Stack to keep track of current path i = 0 while i depth: path.pop() # Backtrack to correct depth if path: if not path[-1].left: path[-1].left = node else: path[-1].right = node path.append(node) return path[0] # The root is the first node added 3. Word Break II Concept: Given a string $s$ and a dictionary of words, add spaces in $s$ to construct a sentence where each word is a valid dictionary word. Return all such possible sentences. Effective Solution: Dynamic programming with backtracking or memoized recursion. First, determine if $s$ can be segmented at all (using standard Word Break I DP). Then, use DFS to find all valid segmentations, building sentences backward or forward. Memoization is crucial to avoid recomputing results for substrings. class Solution: def wordBreak(self, s: str, wordDict: list[str]) -> list[str]: word_set = set(wordDict) memo = {} def backtrack(index): if index in memo: return memo[index] if index == len(s): return [""] # Base case: empty string means a valid segmentation res = [] for i in range(index, len(s)): word = s[index : i+1] if word in word_set: for sentence in backtrack(i + 1): if sentence == "": res.append(word) else: res.append(word + " " + sentence) memo[index] = res return res return backtrack(0) 4. Maximum Score Words Formed by Letters Concept: Given a list of words, a list of available letters, and a score for each letter, find the maximum score that can be obtained by forming words using the available letters. Each letter can be used at most once. Effective Solution: This is a classic backtracking/DFS problem. Iterate through all possible subsets of words. For each subset, check if the required letters are available. If yes, calculate the score. Keep track of the maximum score. Letter counts can be managed efficiently using frequency arrays/dictionaries. from collections import Counter class Solution: def maxScoreWords(self, words: list[str], letters: list[str], score: list[int]) -> int: letter_counts = Counter(letters) max_score = 0 def calculate_word_score(word): s = 0 for char in word: s += score[ord(char) - ord('a')] return s def backtrack(index, current_letter_counts, current_score): nonlocal max_score max_score = max(max_score, current_score) for i in range(index, len(words)): word = words[i] word_freq = Counter(word) can_form = True for char, count in word_freq.items(): if current_letter_counts[char] 5. N-Queens II Concept: The N-Queens puzzle is the problem of placing $N$ non-attacking queens on an $N \times N$ chessboard. This variant asks for the total number of distinct solutions. Effective Solution: Backtracking. Place queens row by row. For each cell $(r, c)$, check if it's safe (no other queen in the same column, or on either diagonal). Use boolean arrays/sets to keep track of occupied columns and diagonals for $O(1)$ lookup. class Solution: def totalNQueens(self, n: int) -> int: self.count = 0 cols = [False] * n diag1 = [False] * (2 * n - 1) # r + c diag2 = [False] * (2 * n - 1) # r - c + n - 1 def backtrack(row): if row == n: self.count += 1 return for col in range(n): if not cols[col] and not diag1[row + col] and not diag2[row - col + n - 1]: cols[col] = True diag1[row + col] = True diag2[row - col + n - 1] = True backtrack(row + 1) cols[col] = False diag1[row + col] = False diag2[row - col + n - 1] = False backtrack(0) return self.count 6. Find Building Where Alice and Bob Can Meet Concept: Given heights of buildings, find the rightmost building where Alice (at $i$) and Bob (at $j$) can meet, such that all buildings between $i$ and $j$ (inclusive) are strictly shorter than the meeting building. This is a complex query problem. Effective Solution: This problem requires a segment tree or similar data structure to efficiently query maximum height in a range, combined with a monotonic stack or binary search for finding the meeting building. For each query $(i, j)$, one approach is to iterate between $i$ and $j$, finding the max height. A more efficient way involves processing queries offline or using a segment tree with custom merging logic. The linked problem is very specific and usually needs advanced techniques like a segment tree with coordinate compression or a sparse table. The "can meet" condition usually means $max(buildings[i], buildings[j]) buildings[i]$ and $buildings[k] > buildings[j]$. The specific problem needs further analysis of its constraints. A general approach might involve a segment tree storing max height, then binary searching for the first building from $max(i, j)$ that satisfies the height condition. # This problem is complex and usually involves advanced data structures. # A simplified approach for a common variant (where k is just an index > max(i,j)) # might use a monotonic stack or segment tree. # For the exact problem, an offline approach with a segment tree or Fenwick tree # combined with a stack to find the next greater element is often used. # Example for a simplified variant (finding next greater element to the right): # This snippet is NOT a full solution to the LeetCode problem, # but illustrates a common building block (monotonic stack). def find_next_greater_elements(heights: list[int]) -> list[int]: n = len(heights) next_greater = [-1] * n stack = [] # stores indices for i in range(n - 1, -1, -1): while stack and heights[stack[-1]] 7. Constrained Subsequence Sum Concept: Given an integer array $nums$ and an integer $k$, return the maximum sum of a non-empty subsequence such that for every two consecutive integers in the subsequence $nums[i]$ and $nums[j]$ (with $i Effective Solution: Dynamic programming with a sliding window maximum (deque). $dp[i]$ is the maximum subsequence sum ending at index $i$. $dp[i] = nums[i] + \max(0, \max_{j=max(0, i-k)}^{i-1} dp[j])$. A deque can find this maximum in $O(1)$ on average. from collections import deque class Solution: def constrainedSubsetSum(self, nums: list[int], k: int) -> int: n = len(nums) dp = [0] * n dq = deque() # Stores indices, in decreasing order of dp[index] for i in range(n): # Remove elements outside the window [i-k, i-1] if dq and dq[0] 8. Number of Flowers in Full Bloom Concept: Given a list of flower blooming periods $[start, end]$ and a list of people's arrival times, determine for each person how many flowers are in full bloom at their arrival time. Effective Solution: Use a sweep-line algorithm or difference array. Sort all start and end times. Iterate through sorted events, incrementing a counter for bloom starts and decrementing for bloom ends. For each person's arrival time, find the corresponding bloom count. A more efficient way for many queries is to use coordinate compression or sort all events (start, end, person arrival) and process them. A common efficient approach is to sort `start` times and `end` times separately. For each person's arrival `t`, count `starts import bisect class Solution: def fullBloomFlowers(self, flowers: list[list[int]], people: list[int]) -> list[int]: starts = sorted([f[0] for f in flowers]) ends = sorted([f[1] for f in flowers]) # Note: For end, consider flower stops blooming AFTER its end day. # So we count ends strictly less than person's time. ans = [] for p_time in people: # Number of flowers that have started blooming by or at p_time started_count = bisect.bisect_right(starts, p_time) # Number of flowers that have finished blooming strictly before p_time ended_count = bisect.bisect_left(ends, p_time) ans.append(started_count - ended_count) return ans 9. Maximum Performance of a Team Concept: Given engineers with speed and efficiency, choose $k$ engineers to form a team such that the team's performance (sum of speeds $\times$ minimum efficiency) is maximized. Effective Solution: Sort engineers by efficiency in descending order. Iterate through the sorted engineers. For each engineer, consider them as the one with minimum efficiency for the current team. Maintain a min-heap of $k-1$ speeds from previously processed engineers (those with higher efficiency) to maximize speed sum. If the heap size exceeds $k-1$, remove the smallest speed. import heapq class Solution: def maxPerformance(self, n: int, speed: list[int], efficiency: list[int], k: int) -> int: engineers = [] for i in range(n): engineers.append((efficiency[i], speed[i])) engineers.sort(key=lambda x: x[0], reverse=True) # Sort by efficiency descending max_performance = 0 current_speed_sum = 0 min_heap = [] # Stores speeds of k-1 engineers for eff, spd in engineers: # Add current engineer's speed current_speed_sum += spd heapq.heappush(min_heap, spd) # If we have more than k engineers, remove the one with smallest speed if len(min_heap) > k: current_speed_sum -= heapq.heappop(min_heap) # Calculate performance with current engineer as min efficiency max_performance = max(max_performance, current_speed_sum * eff) return max_performance % (10**9 + 7) 10. Count Prefix and Suffix Pairs II Concept: Given a 0-indexed string array `words`, return the number of pairs $(i, j)$ such that $i Effective Solution: Use an Aho-Corasick automaton or a Trie. Insert all words into a Trie. For each word `w`, traverse the Trie with `w` to find all words that are prefixes. Simultaneously, traverse the Trie with `w` reversed to find all words that are suffixes. A more direct Trie approach: for each word, add it to a Trie. Then, for each word, traverse the Trie. When traversing, if a node marks the end of a word, it's a prefix. If it also marks the end of the reversed word (or a suffix match), count it. This problem is efficiently solved using a Trie where each node stores a count of words ending at that node. When inserting a word, for every prefix of the word, if it's also a suffix, increment a counter. This often involves a generalized suffix tree or a Trie where each node has a link to its suffix. A simpler approach is to use a Trie for prefixes. For each word `w`, iterate through all its prefixes. For each prefix `p`, check if `p` is also a suffix of `w`. If yes, increment a counter associated with `p` in the Trie. The total count is the sum of these counters. For efficient suffix checking, one can use string hashing. # This problem is challenging. A common approach uses a Trie for prefixes # and then for each word, checks its prefixes for suffix matches. # A more optimized solution involves a Trie where each node stores a list of indices # of words that have this node as a prefix. Then, for each word, iterate through its # suffixes and query the Trie. # A common simpler approach for this type of problem is to use a Trie. # Each node in the Trie can store a dictionary mapping (count of prefix words, count of suffix words). # Or, more directly, combine Trie with string hashing for efficient suffix checks. # Let's consider a Trie approach where we store words and then iterate. class TrieNode: def __init__(self): self.children = {} self.is_end = False self.count = 0 # Number of words ending here class Solution: def countPrefixSuffixPairs(self, words: list[str]) -> int: root = TrieNode() total_pairs = 0 def insert(word): node = root for char in word: if char not in node.children: node.children[char] = TrieNode() node = node.children[char] node.is_end = True node.count += 1 def search_and_count(word): nonlocal total_pairs node = root for i, char in enumerate(word): if char not in node.children: return # No more prefixes node = node.children[char] # If current prefix (word[:i+1]) is a stored word, # check if it's also a suffix of `word`. if node.is_end and word.endswith(word[:i+1]): total_pairs += node.count # Insert all words into the Trie for word in words: insert(word) # For each word, search for prefix-suffix pairs # We need to iterate over words and check against already processed words # The problem specifies i 11. Sum of Prefix Scores of Strings Concept: Given an array of strings `words`, return an array `scores` of the same length, where `scores[i]` is the total score of `words[i]`. The score of a string `word` is the sum of the lengths of all its non-empty prefixes that are also prefixes of other strings in `words`. Effective Solution: Use a Trie. Insert all words into the Trie. While inserting, increment a counter at each node visited. After building the Trie, for each word, traverse it again. The score for a word is the sum of the counts at all nodes corresponding to its prefixes. class TrieNode: def __init__(self): self.children = {} self.count = 0 # Number of words that have this node as a prefix class Solution: def sumPrefixScores(self, words: list[str]) -> list[int]: root = TrieNode() def insert(word): node = root for char in word: if char not in node.children: node.children[char] = TrieNode() node = node.children[char] node.count += 1 # Increment count for this prefix # Build the Trie for word in words: insert(word) # Calculate scores scores = [] for word in words: current_score = 0 node = root for char in word: node = node.children[char] current_score += node.count # Add count of words that share this prefix scores.append(current_score) return scores 12. Find Minimum Diameter After Merging Two Trees Concept: Given two trees, merge them by adding an edge between any node in tree 1 and any node in tree 2. Find the minimum possible diameter of the resulting merged tree. Effective Solution: The diameter of a tree is the longest path between any two nodes. When merging two trees, the new diameter will either be: 1. The diameter of tree 1. 2. The diameter of tree 2. 3. A path that goes through the newly added edge. This path would be $max\_depth_1 + 1 + max\_depth_2$, where $max\_depth_1$ is the maximum depth from the chosen merge node in tree 1 to any other node in tree 1, and similarly for tree 2. To minimize the third case, we want to choose the merge nodes such that their maximum depths in their respective trees are minimized. This means picking nodes closest to the "center" of each tree. However, the problem statement often means $1 + \text{radius}_1 + \text{radius}_2$ (where radius is min max_depth to any node). The overall strategy is to calculate the diameter of each individual tree. Then, for every node in tree 1, calculate its maximum distance to any other node in tree 1. Do the same for tree 2. The minimum diameter of the merged tree is $\min(\text{diameter}_1, \text{diameter}_2, \min_{u \in T_1, v \in T_2} (\text{max_dist}(u, T_1) + 1 + \text{max_dist}(v, T_2)))$. A key observation is that $\min_{u \in T_1} \text{max_dist}(u, T_1)$ is the radius of $T_1$. So, the formula simplifies to $\min(\text{diameter}_1, \text{diameter}_2, 1 + \text{radius}_1 + \text{radius}_2)$. Calculating radius usually involves finding the diameter first, then finding the center(s). # This problem requires finding diameter and radius of a tree. # Diameter: longest path between any two nodes. # Radius: minimum among all max_distances from a node to any other node (center). # Helper function to find diameter and max_depth from each node (for radius) # This is typically done with two BFS/DFS traversals. # 1. Start BFS/DFS from an arbitrary node (say, 0) to find the farthest node (u). # 2. Start BFS/DFS from u to find the farthest node (v) and the path length (diameter). # 3. For radius, from every node, find its maximum distance to any other node. # This can be done by combining distances from 'u' and 'v' (endpoints of diameter). from collections import deque def get_farthest_node_and_dist(n, adj, start_node): distances = [-1] * n distances[start_node] = 0 q = deque([(start_node, 0)]) farthest_node = start_node max_dist = 0 while q: u, d = q.popleft() if d > max_dist: max_dist = d farthest_node = u for v in adj[u]: if distances[v] == -1: distances[v] = d + 1 q.append((v, d + 1)) return farthest_node, max_dist, distances def get_tree_info(n, edges): if n == 0: return 0, 0 # diameter, radius if n == 1: return 0, 0 adj = [[] for _ in range(n)] for u, v in edges: adj[u].append(v) adj[v].append(u) # 1. Find an endpoint of a diameter (node_u) node_u, _, _ = get_farthest_node_and_dist(n, adj, 0) # 2. Find the other endpoint (node_v) and the diameter node_v, diameter, dist_from_u = get_farthest_node_and_dist(n, adj, node_u) # 3. Get distances from node_v as well _, _, dist_from_v = get_farthest_node_and_dist(n, adj, node_v) # Calculate radius: min(max_distance from node to any other node) # For each node 'i', max_distance = max(dist_from_u[i], dist_from_v[i]) radius = float('inf') for i in range(n): radius = min(radius, max(dist_from_u[i], dist_from_v[i])) return diameter, radius class Solution: def findMinimumDiameterAfterMerge(self, n1: int, edges1: list[list[int]], n2: int, edges2: list[list[int]]) -> int: diam1, rad1 = get_tree_info(n1, edges1) diam2, rad2 = get_tree_info(n2, edges2) # The new diameter will be the max of: # 1. Diameter of tree1 # 2. Diameter of tree2 # 3. Path connecting tree1 and tree2: radius1 + radius2 + 1 (for the new edge) return max(diam1, diam2, rad1 + rad2 + 1) 13. Second Minimum Time to Reach Destination Concept: Given a city represented as a graph, find the second minimum time to travel from node 1 to node $n$. Traffic lights change color every `change` minutes (green to red, red to green). Travel time between nodes is `time`. Effective Solution: This is a variation of shortest path. Since we need the second shortest path, Dijkstra's algorithm needs modification. Instead of storing just the minimum distance, store the two smallest distances to each node. When relaxing an edge, update these two distances. The traffic light logic: if current time `t` is in a red light cycle, wait until it turns green. A red light cycle starts at `k * change` and ends at `k * change + change`. If `t // change` is odd, it's red. The wait time would be `change - (t % change)` if `t % change != 0`. Otherwise, no wait. import heapq class Solution: def secondMinimum(self, n: int, edges: list[list[int]], time: int, change: int) -> int: adj = [[] for _ in range(n + 1)] for u, v in edges: adj[u].append(v) adj[v].append(u) # dists[i][0] = shortest time to reach i # dists[i][1] = second shortest time to reach i dists = [[float('inf')] * 2 for _ in range(n + 1)] dists[1][0] = 0 # (current_time, node) pq = [(0, 1)] while pq: curr_time, u = heapq.heappop(pq) # Calculate actual departure time from u considering traffic lights if (curr_time // change) % 2 == 1: # If current time is during a red light wait_time = change - (curr_time % change) actual_departure_time = curr_time + wait_time else: actual_departure_time = curr_time # Time to arrive at neighbor v arrival_at_v_time = actual_departure_time + time for v in adj[u]: if arrival_at_v_time 14. Minimum Number of Days to Disconnect Island Concept: Given a 2D binary grid representing an island (1s are land, 0s are water), find the minimum number of days (0, 1, or 2) to disconnect the island. An island is disconnected if it has 0 or more than 1 connected components of land cells. Effective Solution: 1. Count initial connected components. If 0 or >1, it's already disconnected (0 days). 2. Try removing 1 cell: Iterate through all land cells $(r, c)$. Temporarily change `grid[r][c]` to 0. Count connected components. If >1 or 0, then 1 day is sufficient. Revert `grid[r][c]`. 3. If neither 0 nor 1 day works, it must be 2 days. (It's always possible to disconnect an island in 2 days by removing two adjacent land cells, or two cells that are part of a 'bridge'). Counting connected components: Use BFS/DFS. class Solution: def minDays(self, grid: list[list[int]]) -> int: m, n = len(grid), len(grid[0]) def count_connected_components(current_grid): rows, cols = len(current_grid), len(current_grid[0]) visited = [[False] * cols for _ in range(rows)] components = 0 def dfs(r, c): if not (0 1 components, it's already disconnected return 0 # Check for 1 day for r in range(m): for c in range(n): if grid[r][c] == 1: grid[r][c] = 0 # Temporarily remove land cell if count_connected_components(grid) != 1: return 1 grid[r][c] = 1 # Revert # If neither 0 nor 1 day works, it must be 2 days return 2 15. Remove Max Number of Edges to Keep Graph Fully Traversable Concept: Given a graph with 3 types of edges (Alice-only, Bob-only, both), remove the maximum number of edges such that both Alice and Bob can still traverse the entire graph. Effective Solution: Use Disjoint Set Union (DSU). The key is to process type 3 (both Alice and Bob) edges first. For each type 3 edge, if it connects two previously disconnected components for both Alice AND Bob, add it and merge. This edge benefits both. If it connects components for only one, or neither, it's redundant. After processing type 3, process type 1 (Alice) and type 2 (Bob) edges separately. For Alice, use her DSU structure. For Bob, use his DSU. Count edges added. The maximum removable edges are `total_edges - (edges_added_type3 + edges_added_type1 + edges_added_type2)`. Ensure both Alice and Bob can traverse the *entire* graph, meaning their DSU structures should each have only one component remaining (number of connected components = 1, or `num_nodes - num_edges_used = 1`). class DSU: def __init__(self, n): self.parent = list(range(n + 1)) self.components = n # Number of disjoint components def find(self, i): if self.parent[i] == i: return i self.parent[i] = self.find(self.parent[i]) return self.parent[i] def union(self, i, j): root_i = self.find(i) root_j = self.find(j) if root_i != root_j: self.parent[root_i] = root_j self.components -= 1 return True # Edge was useful return False # Edge was redundant class Solution: def maxNumEdgesToRemove(self, n: int, edges: list[list[int]]) -> int: dsu_alice = DSU(n) dsu_bob = DSU(n) edges_used = 0 # Process type 3 edges first (beneficial for both) for type, u, v in edges: if type == 3: # If this edge connects components for Alice OR Bob, it's useful for at least one. # If it connects for BOTH, it's a shared useful edge. # If it connects for neither, it's redundant. # We count it as used if it connects for Alice OR Bob. # The problem phrasing "keep graph fully traversable" implies # we need to ensure connectivity for both. # Check if edge (u,v) connects new components for Alice AND/OR Bob # If it connects for Alice: union_alice = dsu_alice.union(u, v) # If it connects for Bob: union_bob = dsu_bob.union(u, v) if union_alice or union_bob: # If it helped either Alice or Bob connect edges_used += 1 # Process type 1 edges (Alice only) for type, u, v in edges: if type == 1: if dsu_alice.union(u, v): edges_used += 1 # Process type 2 edges (Bob only) for type, u, v in edges: if type == 2: if dsu_bob.union(u, v): edges_used += 1 # After processing all necessary edges, check if both graphs are fully connected if dsu_alice.components != 1 or dsu_bob.components != 1: return -1 # Cannot make fully traversable return len(edges) - edges_used 16. Maximum Employees to Be Invited to a Meeting Concept: Given an array `favorite` where `favorite[i]` is the person employee `i` loves, find the maximum number of employees that can be invited to a meeting. A meeting can be held if every employee `i` invited loves exactly one other employee `j` who is also invited, and `j` loves `i`. This forms cycles. Effective Solution: The problem describes a functional graph where each node has exactly one outgoing edge. Such a graph is a collection of components, where each component contains exactly one cycle, with trees rooted at cycle nodes pointing towards the cycle. We need to find the maximum number of employees. This is either: 1. The sum of lengths of all cycles of length 2. 2. The maximum length of any cycle of length > 2. The "trick" for length 2 cycles: if `i` loves `j` and `j` loves `i`, they form a cycle. For such pairs, we can also include all employees that can reach `i` or `j` without going through `i` or `j` (i.e., paths leading into `i` or `j`). For each such pair $(i, j)$, find the longest path (tree branch) leading into `i` (excluding $j$) and `j` (excluding $i$). For cycles of length > 2: we can only invite employees from one such cycle. We pick the longest one. Algorithm: a. Identify all cycles using DFS. b. For each cycle of length 2 (i.e., $i \to j \to i$): Calculate the maximum path length that can attach to $i$ (excluding $j$) and $j$ (excluding $i$). Sum these up. c. For each cycle of length > 2: The invited employees are just the cycle members. Find the maximum length among these. The final answer is $\max(\text{sum_of_lengths_for_length_2_cycles_with_attachments}, \text{max_length_of_other_cycles})$. To find max path length attaching to a node: use BFS/DFS on the reversed graph (indegrees). from collections import deque class Solution: def maximumInvitations(self, favorite: list[int]) -> int: n = len(favorite) # Build adjacency list and in-degrees adj = [[] for _ in range(n)] rev_adj = [[] for _ in range(n)] # Reversed graph for paths leading into nodes in_degree = [0] * n for i in range(n): adj[i].append(favorite[i]) rev_adj[favorite[i]].append(i) in_degree[favorite[i]] += 1 # Find longest paths into nodes that are not part of a cycle (trees) # Use Kahn's algorithm (topological sort) to remove non-cycle nodes q = deque() for i in range(n): if in_degree[i] == 0: q.append(i) max_path_len = [1] * n # Length of path ending at this node (including itself) while q: u = q.popleft() for v in rev_adj[u]: # For nodes pointing to u # If v points to u, and u is removed (part of a non-cycle path) # This logic is for finding longest path ending at a specific node # which is part of a cycle. # The correct way is to find longest path into any node that ISN'T on a cycle. # If we remove nodes with in_degree 0, these are leaves of trees pointing to cycles. # We want to know how long the "branches" are that feed into cycle nodes. # A more direct way to calculate max path length into a node: # `max_branch_len[i]` is the length of the longest path ending at `i` # that does not pass through any cycle node (except `i` itself if `i` is a cycle node). # Initialize `max_branch_len` to 1 for all nodes. # Use BFS starting from nodes with in_degree 0 (leaves of trees) # and propagate max path lengths. pass # This part is tricky. Let's refine. # Refined: Calculate the longest path for each node that eventually leads to a cycle node # This is `max_depth[u]` = longest path ending at `u` from a non-cycle node # (or 1 if `u` is a cycle node and no non-cycle nodes lead to it). q_leaves = deque() for i in range(n): if in_degree[i] == 0: q_leaves.append(i) depths = [1] * n # Max path length ending at this node, from a path of non-cycle nodes while q_leaves: u = q_leaves.popleft() for v_neighbor in adj[u]: # v_neighbor is favorite[u] depths[v_neighbor] = max(depths[v_neighbor], depths[u] + 1) in_degree[v_neighbor] -= 1 if in_degree[v_neighbor] == 0: q_leaves.append(v_neighbor) # Now, `in_degree` will only have non-zero values for nodes that are part of cycles. # `depths[i]` now holds the longest path ending at `i` from a node that is *not* part of a cycle. max_cycle_len = 0 # Max length of cycles > 2 sum_two_cycle_branches = 0 # Sum of (max_branch_len_into_i + max_branch_len_into_j) for 2-cycles visited_cycle_nodes = [False] * n for i in range(n): if in_degree[i] > 0 and not visited_cycle_nodes[i]: # If i is part of a cycle cycle_len = 0 curr = i path = [] while not visited_cycle_nodes[curr]: visited_cycle_nodes[curr] = True path.append(curr) curr = favorite[curr] # Check if we landed back in the current path (i.e., found a cycle) if curr in path: start_of_cycle_idx = path.index(curr) cycle_len = len(path) - start_of_cycle_idx if cycle_len == 2: node1, node2 = path[start_of_cycle_idx], path[start_of_cycle_idx + 1] # For a 2-cycle (node1 node2), we can take all branches leading to them. # `depths[node]` calculated above already gives the max branch length into `node`. sum_two_cycle_branches += depths[node1] + depths[node2] else: # Cycle length > 2 max_cycle_len = max(max_cycle_len, cycle_len) return max(max_cycle_len, sum_two_cycle_branches) 17. Number of Good Paths Concept: Given a tree with node values, find the number of "good paths". A path is good if: 1) start and end nodes have the same value, 2) all intermediate nodes have values less than or equal to the start/end value. Effective Solution: This is a DSU problem with a twist. Sort nodes by their values. Process nodes in increasing order of values. When processing a node `u` with value `val[u]`, add it to the DSU. For each neighbor `v` of `u`: if `val[v] class DSU: def __init__(self, n): self.parent = list(range(n)) self.size = [1] * n # Size of each component self.val_counts = {} # Stores {root: {value: count}} def find(self, i): if self.parent[i] == i: return i self.parent[i] = self.find(self.parent[i]) return self.parent[i] def union(self, i, j): root_i = self.find(i) root_j = self.find(j) if root_i != root_j: # Merge smaller tree into larger tree if self.size[root_i] int: n = len(vals) adj = [[] for _ in range(n)] for u, v in edges: adj[u].append(v) adj[v].append(u) # Group nodes by value nodes_by_value = {} for i in range(n): if vals[i] not in nodes_by_value: nodes_by_value[vals[i]] = [] nodes_by_value[vals[i]].append(i) dsu = DSU(n) ans = n # Each single node is a good path # Initialize val_counts for each node as its own root for i in range(n): dsu.val_counts[i] = {vals[i]: 1} # Iterate through values in increasing order sorted_values = sorted(nodes_by_value.keys()) for val in sorted_values: for u in nodes_by_value[val]: # For each neighbor v of u for v in adj[u]: if vals[v] 18. Minimum Cost Walk in Weighted Graph Concept: Given a weighted undirected graph, find the minimum cost to travel between two nodes $start$ and $end$. The cost of a path is the bitwise AND of all edge weights along the path. Effective Solution: This looks like a variation of shortest path. The "cost" being bitwise AND is tricky. If we can reach node $B$ from $A$ with a path cost $X$, and $C$ from $B$ with path cost $Y$, then $C$ from $A$ via $B$ has path cost $X \text{ & } Y$. Notice that the AND operation can only decrease or keep the value the same. It never increases. This suggests that if two nodes are in the same connected component, they can always achieve a certain AND sum. The minimum cost between $u$ and $v$ is the bitwise AND of all edge weights in the connected component containing $u$ and $v$. If $u$ and $v$ are connected, you can always form a path. The path with the minimum AND sum will involve taking all edges in the cycle that contains $u$ and $v$, and then taking the AND of all edges in that cycle. A more robust approach: Use DSU. For each component, maintain the bitwise AND of all edge weights that have been used to form that component. When merging two components, their new AND value is the AND of their individual AND values. For each query $(u, v)$: 1. If $u$ and $v$ are the same node, the cost is `vals[u]`. (No, problem says edge weights). 2. If $u$ and $v$ are in the same DSU component, the minimum cost is the AND of all edges used to form that component. 3. If $u$ and $v$ are not in the same DSU component, they are unreachable, cost is -1. The DSU approach is powerful. Initialize each node's component AND value to 0xFFFFFFFF (all bits set). When an edge $(u, v, w)$ is processed: - If $u$ and $v$ are in different components, merge them. The new component's AND value is `component_and[root_u] & component_and[root_v] & w`. - If $u$ and $v$ are already in the same component, update the component's AND value with `w`. This is not quite right. The AND of edges on a path. If two nodes are in the same component, any path between them exists. We want the minimal AND sum. The key property of bitwise AND is that it's monotonic non-increasing. If $A \to B$ has cost $X$, and $B \to C$ has cost $Y$, $A \to C$ has cost $X \text{ & } Y$. The minimum AND cost between any two nodes $u, v$ in the same connected component is the bitwise AND of all edge weights $w$ such that $(u,v,w)$ is an edge that is part of some path between $u$ and $v$. This is simply the bitwise AND of ALL edge weights in the entire connected component. So, use DSU. For each component, store the bitwise AND of all edges that have been "processed" to connect nodes in that component. Initialize each component with `0xFFFFFFFF`. When an edge `(u, v, w)` is processed, if `u` and `v` are merged, the new component's AND sum is `(current_AND_u | current_AND_v) & w`? No. It's just `current_AND_u & current_AND_v & w`. Correct approach: Build DSU. When merging components $R_u$ and $R_v$ via edge $(u, v, w)$, the new component's AND value is `component_AND[R_u] & component_AND[R_v] & w`. Store this. For queries: if $find(u) == find(v)$, return `component_AND[find(u)]`. Else return -1. Since $u$ and $v$ can be the same node, if $u==v$, the cost is the AND of all edge weights incident to $u$. If no edges, it's 0. If $u==v$ and $u$ has no incident edges, the cost is 0. If $u==v$ and $u$ has edges, then the cost is the AND of all edges incident to $u$. The problem implies "walk". A walk can revisit edges. The minimum cost is the AND of all edges in the connected component. Final correct DSU approach: 1. Initialize DSU. Each node $i$ is its own component. Store for each root $r$: `component_val[r] = -1` (or a special value meaning 'no edges yet'). 2. Iterate through all edges $(u, v, w)$: Let $R_u = find(u)$, $R_v = find(v)$. If $R_u \ne R_v$: Merge $R_u, R_v$. Let $R_{new}$ be the new root. `component_val[R_{new}] = (component_val[R_u] if component_val[R_u] != -1 else 0xFFFFFFFF) & (component_val[R_v] if component_val[R_v] != -1 else 0xFFFFFFFF) & w`. (Handle -1 for initial state) Else ($R_u = R_v$): `component_val[R_u] = (component_val[R_u] if component_val[R_u] != -1 else 0xFFFFFFFF) & w`. 3. For each query $(s, t)$: If $find(s) == find(t)$: return `component_val[find(s)]`. Else: return -1. Special case: if $s==t$, the cost is the AND of all edges incident to $s$. If $s$ is isolated, it's 0. The wording "minimum cost walk" implies that if a path exists, we can always find one where the AND sum is the AND of all edges in the component. E.g., if we have edges $e_1, e_2, e_3$ in a component, and a path uses $e_1, e_2$, its cost is $e_1 \& e_2$. But if $e_3$ is also in the component, we could potentially use it to make the AND sum even smaller $e_1 \& e_2 \& e_3$. So, for each connected component, find the AND of all weights of edges *within* that component. Revised DSU: 1. `parent` array for DSU. 2. `component_and_sum[i]` stores the bitwise AND of all edge weights *encountered so far* that connect nodes in the component rooted at `i`. Initialize to `0xFFFFFFFF` (all bits set). 3. For each edge `(u, v, w)`: `root_u = find(u)`, `root_v = find(v)`. `new_and_sum = component_and_sum[root_u] & component_and_sum[root_v] & w`. If `root_u != root_v`: `union(root_u, root_v)` `component_and_sum[new_root] = new_and_sum`. Else: (already in same component) `component_and_sum[root_u] = new_and_sum`. This is a classic DSU problem where we maintain aggregate information in roots. The special case $s=t$: If $s$ is isolated, cost is 0. If $s$ is connected, the cost is the AND of all edges in its component. The problem implies if there's any path, the cost is the bitwise AND of all edges in the component. A simpler way to handle $s=t$: If $s$ has no incident edges, cost is 0. Else, it's same as $s \ne t$. If $s=t$ and there are no edges incident to $s$, the cost is $0$. Otherwise, it's the AND of all edge weights in the component containing $s$. So, if $s=t$ and degree of $s$ is 0, return 0. Otherwise, use the DSU logic. The provided solution for this problem on LeetCode usually involves: 1. Building a DSU where each root stores the bitwise AND of all edge weights that have connected nodes in its component. 2. Initialize the AND sum of each node's component to `0xFFFFFFFF`. 3. For each edge `(u, v, w)`: `root_u = dsu.find(u)`, `root_v = dsu.find(v)`. `dsu.component_and[root_u] &= w` (update for `u`'s component) `dsu.component_and[root_v] &= w` (update for `v`'s component) If `root_u != root_v`: `dsu.union(u, v)` (merges components). `new_root = dsu.find(u)` `dsu.component_and[new_root] = dsu.component_and[root_u] & dsu.component_and[root_v]` (no, this isn't right either) Let's rethink: The actual minimum cost between any two nodes $u, v$ in the same connected component is the AND of ALL edge weights in that component. This means we need to find all edges belonging to each component. 1. Build adjacency list. 2. Use DSU to find connected components. While doing this, for each component's root, maintain a set of all edges in that component. 3. For each query $(s, t)$: If $s=t$: if $s$ has any incident edges, calculate AND of all edge weights in its component. Else 0. If $find(s) \ne find(t)$: return -1. If $find(s) == find(t)$: calculate AND of all edge weights in the component. This is too slow if we re-calculate AND for each query. Correct DSU for this problem: Each component root `r` stores `component_min_and_val[r]`, which is the bitwise AND of all edge weights that have been used to *form or expand* this component. Initialize `component_min_and_val[i] = -1` (or `0xFFFFFFFF`) for all `i`. Iterate through edges `(u, v, w)`: `root_u = find(u)`, `root_v = find(v)`. `val_u = component_min_and_val[root_u]` `val_v = component_min_and_val[root_v]` If `root_u != root_v`: `union(u, v)` (say, `root_v` becomes child of `root_u`) `component_min_and_val[root_u] = val_u & val_v & w` Else: (already connected) `component_min_and_val[root_u] &= w` For queries $(s, t)$: If $find(s) != find(t)$: return -1. Else: return `component_min_and_val[find(s)]`. Special case: $s=t$. If $s$ is an isolated node (no edges), cost is 0. Otherwise it's the component AND value. To handle isolated nodes: keep track of `is_isolated[i]`. Initialize `component_min_and_val[i] = 0xFFFFFFFF` for all `i`. Initialize `has_edges[i] = False` for all `i`. For each edge $(u, v, w)$: `has_edges[u] = True`, `has_edges[v] = True`. ... DSU logic ... For query $(s, t)$: If $s == t$ and not `has_edges[s]`: return 0. If $find(s) != find(t)$: return -1. Else: return `component_min_and_val[find(s)]`. class DSU: def __init__(self, n): self.parent = list(range(n)) # Stores the bitwise AND of all edge weights connecting nodes within this component. # Initialized to all bits set (0xFFFFFFFF or (1 list[int]: dsu = DSU(n) has_incident_edges = [False] * n # Track if a node has any edges for u, v, w in edges: has_incident_edges[u] = True has_incident_edges[v] = True dsu.union(u, v, w) # The union method handles updating component_and_val results = [] for s, t in queries: if s == t: # If s is isolated, cost is 0. Otherwise, it's the component's AND value. if not has_incident_edges[s]: results.append(0) else: results.append(dsu.component_and_val[dsu.find(s)]) elif dsu.find(s) != dsu.find(t): results.append(-1) else: results.append(dsu.component_and_val[dsu.find(s)]) return results 19. Minimum Time to Visit a Cell In a Grid Concept: Given a grid where each cell has a value representing the minimum time to enter that cell, find the minimum time to travel from $(0,0)$ to $(m-1, n-1)$. You can only move to adjacent cells (up, down, left, right). You can only enter a cell $(r, c)$ at time $t$ if $t \ge grid[r][c]$. Effective Solution: This is a shortest path problem on a grid with a time constraint. Dijkstra's algorithm is suitable. The "edge weight" is 1 (time to move to an adjacent cell), but there's a waiting time constraint. When moving from $(r, c)$ at `current_time` to an adjacent cell $(nr, nc)$: 1. The earliest we can arrive at $(nr, nc)$ is `current_time + 1`. 2. We can only enter $(nr, nc)$ if `current_time + 1 >= grid[nr][nc]`. 3. If `current_time + 1 1` and `grid[1][0] > 1` (i.e., we cannot move to any adjacent cell from (0,0) at time 1), then it's impossible to move. This needs careful handling. The problem statement usually implies we start at time 0 at (0,0). So `grid[0][0]` is not a constraint. A crucial edge case: if `grid[0][1] > 1` and `grid[1][0] > 1`, we can't make the first move from $(0,0)$ at time 1. If we can't move to any adjacent cell from $(0,0)$ by time 1, then it's impossible. This can be simplified: if `grid[0][1] > 1` AND `grid[1][0] > 1` AND $m,n > 1$, then return -1. The initial time at $(0,0)$ is 0. The first step takes 1 unit of time. So we arrive at an adjacent cell at time 1. If `grid[next_cell_r][next_cell_c]` is larger than 1, we cannot enter. This makes the starting condition `grid[0][1] > 1` and `grid[1][0] > 1` (for a $2 \times 2$ or larger grid) an impossible scenario. For $grid[0][0]$ to be 0 or 1, we can depart immediately. But the problem states `grid[r][c]` is the earliest time you can enter. So we depart $(0,0)$ at time 0, arrive at $(0,1)$ at time 1. If $grid[0][1] > 1$, we can't enter. A simple check: if `grid[0][1] > 1` and `grid[1][0] > 1`, it's impossible. This is because to move from $(0,0)$ to $(0,1)$ takes 1 unit of time, arriving at time 1. If $grid[0][1]$ is, say, 2, we can't enter. Same for $(1,0)$. So, if `grid[0][1] > 1` and `grid[1][0] > 1` (and if $m, n > 1$ to ensure these cells exist), then return -1. Otherwise, Dijkstra. Initial state: `(0, 0, 0)` -> `(time_taken, row, col)`. `dist[r][c]` is min time to reach $(r, c)$. When exploring from `(r, c)` with `d = dist[r][c]`: For neighbor `(nr, nc)`: `next_time = max(d + 1, grid[nr][nc])`. If `next_time import heapq class Solution: def minimumTime(self, grid: list[list[int]]) -> int: m, n = len(grid), len(grid[0]) if m == 1 and n == 1: return 0 # Already at destination # Edge case: Cannot move from (0,0) to any adjacent cell at time 1. # We start at (0,0) at time 0. First move arrives at adjacent cell at time 1. # If both (0,1) and (1,0) have entry times > 1, we are stuck. # This is true if m > 1 and n > 1. # Check if it's possible to move from (0,0) to (0,1) or (1,0) can_move_right = (n > 1 and grid[0][1] 1 and grid[1][0] 1 and n > 1: # Only if both paths are blocked and grid is large enough return -1 elif m == 1 and n > 1 and not can_move_right: # Only row, and right blocked return -1 elif n == 1 and m > 1 and not can_move_down: # Only col, and down blocked return -1 # Initialize distances dist = [[float('inf')] * n for _ in range(m)] dist[0][0] = 0 # Priority queue: (current_time, r, c) pq = [(0, 0, 0)] dr = [-1, 1, 0, 0] dc = [0, 0, -1, 1] while pq: current_time, r, c = heapq.heappop(pq) if r == m - 1 and c == n - 1: return current_time if current_time > dist[r][c]: continue for i in range(4): nr, nc = r + dr[i], c + dc[i] if 0 = grid[nr][nc]`. # If not, we wait until `grid[nr][nc]`. # The time we finally get to move into (nr, nc) # is `max(current_time + 1, grid[nr][nc])`. # If `(r,c)` is (0,0), `current_time` is 0. # `arrival_time_at_neighbor = 0 + 1 = 1`. # `actual_entry_time = max(1, grid[nr][nc])`. actual_entry_time = max(current_time + 1, grid[nr][nc]) if actual_entry_time 20. Minimum Cost to Make at Least One Valid Path in a Grid Concept: Given a grid where each cell has a direction (1:right, 2:left, 3:down, 4:up), find the minimum cost to change directions such that there is at least one valid path from $(0,0)$ to $(m-1, n-1)$. Changing a direction costs 1, using an existing direction costs 0. Effective Solution: This is a 0-1 BFS problem (Dijkstra variant). Since edge weights are either 0 or 1, a deque can be used instead of a priority queue for $O(V+E)$ complexity. - If an edge has weight 0 (following `grid[r][c]` direction), push to front of deque. - If an edge has weight 1 (changing direction), push to back of deque. This ensures that cells reachable with 0 cost are explored first, then cells reachable with 1 cost, and so on. from collections import deque class Solution: def minCost(self, grid: list[list[int]]) -> int: m, n = len(grid), len(grid[0]) # dist[r][c] stores min cost to reach (r,c) dist = [[float('inf')] * n for _ in range(m)] dist[0][0] = 0 # Deque for 0-1 BFS: (cost, r, c) dq = deque([(0, 0, 0)]) # Directions: 1:right, 2:left, 3:down, 4:up # (dr, dc) for each direction dirs = { 1: (0, 1), # Right 2: (0, -1), # Left 3: (1, 0), # Down 4: (-1, 0) # Up } while dq: cost, r, c = dq.popleft() if r == m - 1 and c == n - 1: return cost # If we found a shorter path previously, skip if cost > dist[r][c]: continue # Explore all 4 possible moves from (r, c) for d_val, (dr, dc) in dirs.items(): nr, nc = r + dr, c + dc if 0