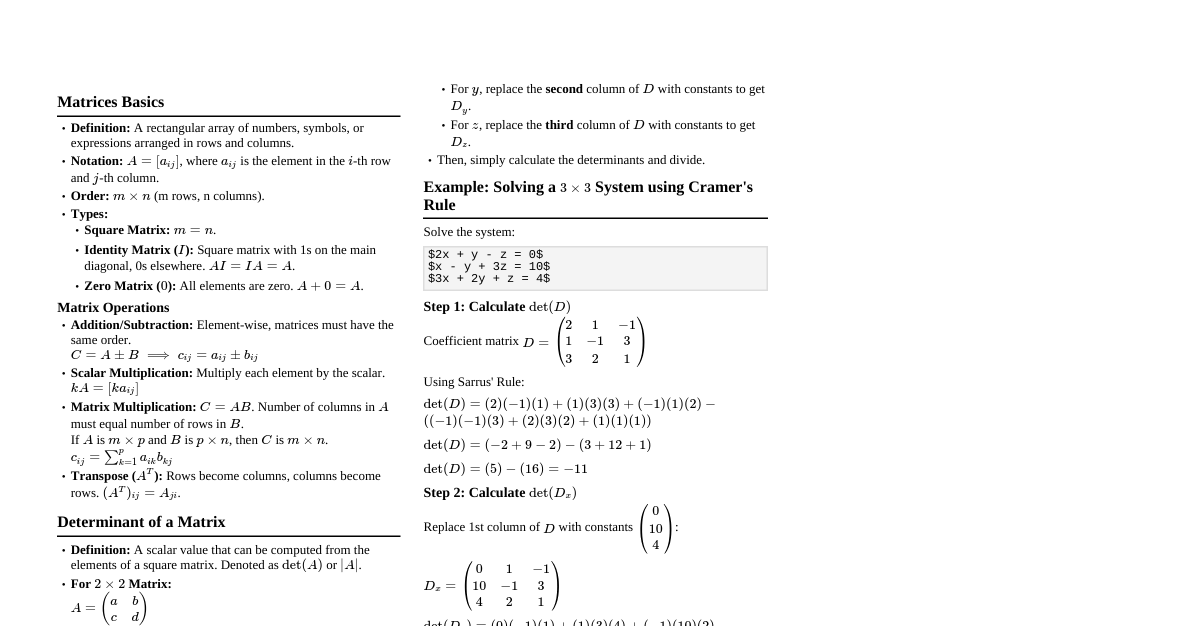

## 1. Basic Definitions

- **Matrix:** A rectangular array of numbers, symbols, or expressions. An $m \times n$ matrix has $m$ rows and $n$ columns.

$$
A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{pmatrix}
$$

- **Element:** $a_{ij}$ is the entry in the $i$-th row and $j$-th column.
- **Row vector:** A matrix with one row ($1 \times n$).
- **Column vector:** A matrix with one column ($m \times 1$).
- **Square matrix:** A matrix with $m = n$.
- **Diagonal matrix:** A square matrix whose off-diagonal entries are all zero ($a_{ij} = 0$ for $i \neq j$).
- **Identity matrix ($I_n$):** The $n \times n$ diagonal matrix whose diagonal entries are all 1.

$$
I_3 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}
$$

- **Zero matrix ($0$):** A matrix whose entries are all zero.

## 2. Matrix Operations

### 2.1. Addition and Subtraction

Both matrices must have the same dimensions, and the operation is element-wise: $(A \pm B)_{ij} = a_{ij} \pm b_{ij}$.

Properties: $A + B = B + A$, $(A + B) + C = A + (B + C)$, $A + 0 = A$.

### 2.2. Scalar Multiplication

Multiply each element by the scalar $c$: $(cA)_{ij} = c \cdot a_{ij}$.

Properties: $c(A + B) = cA + cB$, $(c + d)A = cA + dA$, $c(dA) = (cd)A$.

### 2.3. Matrix Multiplication

For $A_{m \times p}$ and $B_{p \times n}$, the product $C = AB$ is an $m \times n$ matrix. The number of columns of $A$ must equal the number of rows of $B$, and each entry is

$$
(AB)_{ij} = \sum_{k=1}^{p} a_{ik} b_{kj}.
$$

For $2 \times 2$ matrices:

$$
\begin{pmatrix} a & b \\ c & d \end{pmatrix} \begin{pmatrix} e & f \\ g & h \end{pmatrix} = \begin{pmatrix} ae+bg & af+bh \\ ce+dg & cf+dh \end{pmatrix}
$$

Properties:

- Not commutative: $AB \neq BA$ in general.
- Associative: $(AB)C = A(BC)$.
- Distributive: $A(B + C) = AB + AC$ and $(A + B)C = AC + BC$.
- Identity: $I_m A = A I_n = A$ for an $m \times n$ matrix $A$ (so $AI = IA = A$ when $A$ is square).
- Scalar: $k(AB) = (kA)B = A(kB)$.

### 2.4. Transpose ($A^T$)

Rows become columns and columns become rows: $(A^T)_{ij} = a_{ji}$.
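The product and transpose formulas can be checked directly on small matrices. Below is a minimal pure-Python sketch, assuming matrices are stored as lists of rows; the helper names `matmul` and `transpose` are illustrative only, not taken from any library.

```python
def matmul(A, B):
    """Return AB using (AB)_ij = sum_k a_ik * b_kj."""
    p = len(B)  # rows of B
    if any(len(row) != p for row in A):
        raise ValueError("number of columns of A must equal number of rows of B")
    n = len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(p)) for j in range(n)]
            for i in range(len(A))]

def transpose(A):
    """Return A^T, where (A^T)_ij = a_ji."""
    return [list(col) for col in zip(*A)]

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]

print(matmul(A, B))                 # [[2, 1], [4, 3]]
print(matmul(B, A))                 # [[3, 4], [1, 2]]
print(transpose(matmul(A, B)))      # [[2, 4], [1, 3]]
print(matmul(transpose(B), transpose(A)))  # [[2, 4], [1, 3]]
```

The first two products differ, illustrating that $AB \neq BA$ in general, while the last two lines agree, as $(AB)^T = B^T A^T$ predicts.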
Properties:

- $(A^T)^T = A$
- $(A + B)^T = A^T + B^T$
- $(cA)^T = cA^T$
- $(AB)^T = B^T A^T$ (note the reversed order)

A square matrix is **symmetric** if $A = A^T$ and **skew-symmetric** if $A = -A^T$.

## 3. Determinant of a Matrix ($\det(A)$ or $|A|$)

Defined only for square matrices.

**$2 \times 2$ matrix:**

$$
\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc
$$

**$3 \times 3$ matrix** (cofactor expansion along the first row):

$$
\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = a(ei - fh) - b(di - fg) + c(dh - eg)
$$

Multiplying out gives the same value as Sarrus' rule: $aei + bfg + cdh - ceg - bdi - afh$.

**Cofactor expansion:** For an $n \times n$ matrix $A$, expanding along row $i$ gives $\det(A) = \sum_{j=1}^{n} a_{ij} C_{ij}$, where $C_{ij} = (-1)^{i+j} M_{ij}$ is the cofactor and the minor $M_{ij}$ is the determinant of the submatrix obtained by deleting row $i$ and column $j$.

Properties:

- $\det(A^T) = \det(A)$.
- $\det(AB) = \det(A)\det(B)$.
- $\det(kA) = k^n \det(A)$ for an $n \times n$ matrix $A$.
- If a row or column is all zeros, $\det(A) = 0$.
- If two rows or columns are identical, $\det(A) = 0$.
- If a row or column is a scalar multiple of another, $\det(A) = 0$.
- Swapping two rows or columns changes the sign of the determinant.
- Adding a multiple of one row or column to another does not change the determinant.
- A matrix is invertible if and only if $\det(A) \neq 0$.

## 4. Inverse of a Matrix ($A^{-1}$)

Defined only for square matrices: $A^{-1}$ satisfies $A A^{-1} = A^{-1} A = I$. A matrix is invertible (non-singular) if and only if $\det(A) \neq 0$.

**$2 \times 2$ inverse:**

$$
A = \begin{pmatrix} a & b \\ c & d \end{pmatrix} \implies A^{-1} = \frac{1}{ad-bc} \begin{pmatrix} d & -b \\ -c & a \end{pmatrix}
$$

**General inverse (adjugate formula):** $A^{-1} = \frac{1}{\det(A)} \operatorname{adj}(A)$, where the adjugate $\operatorname{adj}(A) = C^T$ is the transpose of the cofactor matrix $C$, whose entries are $C_{ij} = (-1)^{i+j} M_{ij}$.

Properties (for invertible $A$ and $B$ of the same size):

- $(A^{-1})^{-1} = A$
- $(AB)^{-1} = B^{-1} A^{-1}$
- $(A^T)^{-1} = (A^{-1})^T$
- $\det(A^{-1}) = \dfrac{1}{\det(A)}$

## 5. Systems of Linear Equations

A system of $m$ linear equations in $n$ variables can be written in matrix form as $AX = B$, where $A$ is the $m \times n$ coefficient matrix, $X$ is the column of unknowns, and $B$ is the column of constants.
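When $A$ is square and invertible, the cofactor expansion (Section 3) and the adjugate formula (Section 4) are already enough to solve such a system as $X = A^{-1}B$. The sketch below is a minimal illustration with exact arithmetic, assuming integer or `fractions.Fraction` entries; `minor`, `det`, `inverse`, and `solve` are hypothetical helper names, not a library API.

```python
from fractions import Fraction

def minor(A, i, j):
    """Submatrix of A with row i and column j deleted (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det(A):
    """Determinant by cofactor expansion along the first row.

    Exponential time, so only suitable for small matrices.
    The empty 0x0 matrix has determinant 1, which makes the 1x1 case work.
    """
    if len(A) == 0:
        return 1
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))

def inverse(A):
    """A^{-1} = adj(A) / det(A), computed with exact Fractions."""
    d = Fraction(det(A))
    if d == 0:
        raise ValueError("matrix is singular: det(A) = 0")
    n = len(A)
    # adj(A)_ij = C_ji = (-1)^(i+j) * M_ji, hence minor(A, j, i) below.
    return [[(-1) ** (i + j) * det(minor(A, j, i)) / d for j in range(n)]
            for i in range(n)]

def solve(A, b):
    """Solve AX = B as X = A^{-1} B; b holds the column vector B as a flat list."""
    A_inv = inverse(A)
    return [sum(A_inv[i][k] * b[k] for k in range(len(b))) for i in range(len(A_inv))]

A = [[2, 1, 1],
     [1, 3, 2],
     [1, 0, 0]]
b = [7, 13, 1]

print(det(A))                          # -1
print([str(x) for x in solve(A, b)])   # ['1', '2', '3']
```

The adjugate route is shown because it mirrors the formulas term by term; for anything beyond a few rows, Gaussian elimination is far cheaper.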
$$
\begin{pmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{mn} \end{pmatrix} \begin{pmatrix} x_1 \\ \vdots \\ x_n \end{pmatrix} = \begin{pmatrix} b_1 \\ \vdots \\ b_m \end{pmatrix}
$$

- **Solving by inverse:** If $A$ is square and invertible, then $X = A^{-1}B$.
- **Gaussian elimination / row reduction:** Transform the augmented matrix $[A \mid B]$ into row echelon form (then back-substitute) or into reduced row echelon form (Gauss-Jordan elimination), using the elementary row operations:
  1. Swap two rows.
  2. Multiply a row by a non-zero scalar.
  3. Add a multiple of one row to another row.
- **Cramer's rule:** For a system $AX = B$ with $A$ of size $n \times n$ and $\det(A) \neq 0$, the solution is $x_j = \dfrac{\det(A_j)}{\det(A)}$, where $A_j$ is $A$ with its $j$-th column replaced by the column vector $B$.

## 6. Eigenvalues and Eigenvectors

For a square matrix $A$, a non-zero vector $\mathbf{v}$ is an eigenvector if $A\mathbf{v} = \lambda\mathbf{v}$ for some scalar $\lambda$; $\lambda$ is the eigenvalue corresponding to $\mathbf{v}$.

- **Finding eigenvalues:** Solve the characteristic equation $\det(A - \lambda I) = 0$.
- **Finding eigenvectors:** For each eigenvalue $\lambda$, find the non-zero solutions of $(A - \lambda I)\mathbf{v} = \mathbf{0}$.
- **Eigenspace:** The eigenvectors for an eigenvalue $\lambda$, together with the zero vector, form a subspace: the null space of $A - \lambda I$.
- **Diagonalization:** A square matrix $A$ is diagonalizable if there exists an invertible matrix $P$ such that $P^{-1}AP = D$ with $D$ diagonal. The diagonal entries of $D$ are the eigenvalues of $A$, and the columns of $P$ are corresponding eigenvectors, in the same order. An $n \times n$ matrix is diagonalizable if and only if it has $n$ linearly independent eigenvectors.

## 7. Special Types of Matrices

- **Orthogonal matrix:** A real square matrix $Q$ such that $Q^T Q = Q Q^T = I$. Hence $Q^{-1} = Q^T$, and its columns (and rows) form an orthonormal basis.
- **Unitary matrix:** A complex square matrix $U$ such that $U^* U = U U^* = I$, where $U^*$ is the conjugate transpose.
- **Hermitian (self-adjoint) matrix:** A complex square matrix $A$ such that $A = A^*$.
- **Positive definite matrix:** A symmetric matrix $A$ such that $\mathbf{x}^T A \mathbf{x} > 0$ for all non-zero vectors $\mathbf{x}$; equivalently, all eigenvalues of $A$ are positive.
- **Nilpotent matrix:** A square matrix $A$ such that $A^k = 0$ for some positive integer $k$.
- **Idempotent matrix:** A square matrix $A$ such that $A^2 = A$.
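The definitions in Section 7 translate directly into checks on small, exact matrices. The following is a minimal pure-Python sketch, assuming integer entries (floating-point matrices would need a tolerance instead of `==`); all helper names are hypothetical. Two choices go beyond the text above: nilpotency is tested only up to $k = n$, because an $n \times n$ nilpotent matrix always satisfies $A^n = 0$, and positive definiteness is tested with Sylvester's criterion (all leading principal minors positive) in place of computing eigenvalues.

```python
def matmul(A, B):
    """Product AB of matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def det(A):
    """Cofactor expansion along the first row; det of the empty matrix is 1."""
    if not A:
        return 1
    return sum((-1) ** j * A[0][j] * det([r[:j] + r[j + 1:] for r in A[1:]])
               for j in range(len(A)))

def is_symmetric(A):
    return A == transpose(A)

def is_orthogonal(Q):
    # For a square Q, Q^T Q = I already implies Q Q^T = I.
    return matmul(transpose(Q), Q) == identity(len(Q))

def is_idempotent(A):
    return matmul(A, A) == A

def is_nilpotent(A):
    # An n x n nilpotent matrix always satisfies A^n = 0, so k = n suffices.
    P = A
    for _ in range(len(A) - 1):
        P = matmul(P, A)
    return all(x == 0 for row in P for x in row)

def is_positive_definite(A):
    # Sylvester's criterion (used here instead of an eigenvalue computation):
    # a symmetric A is positive definite iff every leading principal minor is > 0.
    if not is_symmetric(A):
        return False
    return all(det([row[:k] for row in A[:k]]) > 0 for k in range(1, len(A) + 1))

Q = [[0, -1], [1, 0]]    # rotation by 90 degrees
E = [[1, 1], [0, 0]]     # idempotent but not symmetric
N = [[0, 1], [0, 0]]     # N^2 = 0
S = [[2, -1], [-1, 2]]   # symmetric, eigenvalues 1 and 3

print(is_orthogonal(Q), is_idempotent(E), is_nilpotent(N))              # True True True
print(is_positive_definite(S), is_positive_definite([[1, 2], [2, 1]]))  # True False
```

The second matrix in the last line is symmetric but has eigenvalues $3$ and $-1$, so its $2 \times 2$ leading minor is negative and the check fails, as the definition requires.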