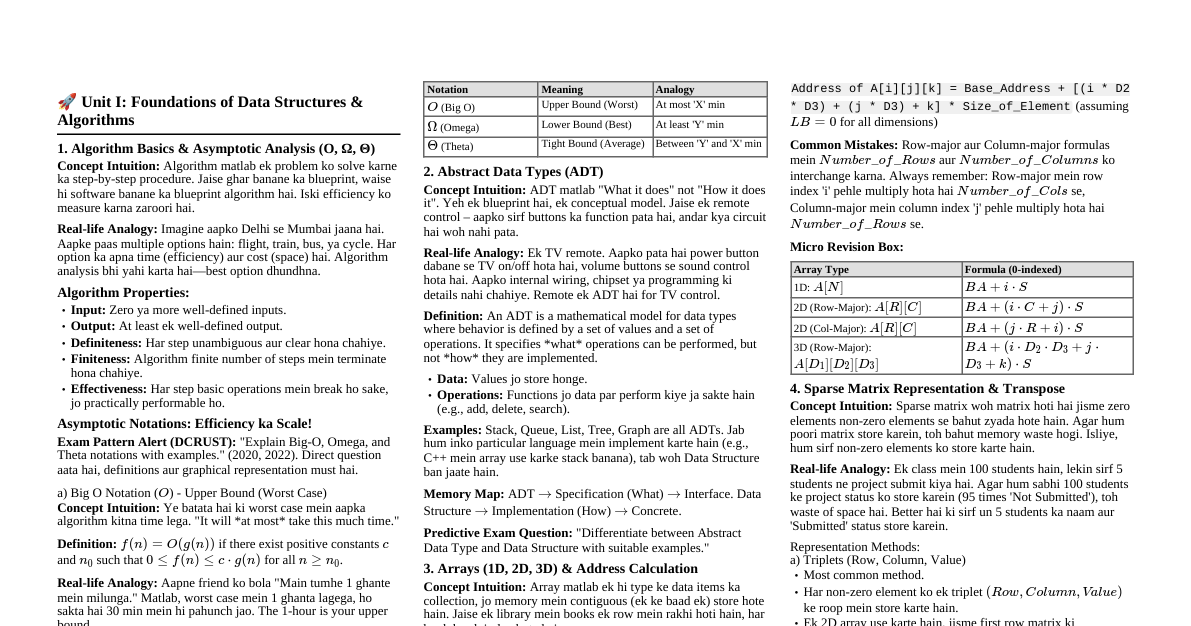

Unit I: Algorithms and Searching 1. Binary Search vs. Linear Search & C Program for Binary Search Binary Search vs. Linear Search: Linear Search: Searches elements sequentially from the beginning until the desired element is found or the end of the list is reached. Works on unsorted or sorted arrays. Time Complexity: $O(n)$ in worst and average cases. Binary Search: Requires the array to be sorted. Divides the search interval in half repeatedly. Compares the target value to the middle element of the array; if they are not equal, the half in which the target cannot lie is eliminated, and the search continues on the remaining half. Time Complexity: $O(\log n)$ in worst and average cases. More efficient for large datasets than linear search. C Program for Binary Search: #include <stdio.h> int binarySearch(int arr[], int size, int item) { int low = 0; int high = size - 1; int mid; while (low <= high) { mid = low + (high - low) / 2; // To prevent potential overflow if (arr[mid] == item) { return mid; // Item found at index mid } else if (arr[mid] < item) { low = mid + 1; // Item is in the right half } else { high = mid - 1; // Item is in the left half } } return -1; // Item not found } int main() { int arr[] = {11, 22, 30, 33, 40, 44, 55, 60, 66, 77, 80, 88, 99}; int size = sizeof(arr) / sizeof(arr[0]); int item = 40; int result = binarySearch(arr, size, item); if (result != -1) { printf("Item %d found at index %d.\n", item, result); } else { printf("Item %d not found in the array.\n", item); } return 0; } Complexity of Binary Search: In binary search, the array is repeatedly divided into two halves. This means that after each comparison, the search space is reduced by half. If the initial size of the array is $N$, after the first comparison, the size becomes $N/2$. After the second, it's $N/4$, and so on. This continues until the search space is reduced to 1 element. If $k$ is the number of comparisons, then $N/2^k = 1 \implies N = 2^k \implies k = \log_2 N$. Thus, the time complexity of binary search is $O(\log N)$. 2. Algorithm Definition, Properties, and Asymptotic Notations Algorithm Definition: An algorithm is a finite set of well-defined instructions to accomplish a particular task. It is a step-by-step procedure for solving a computational problem. For instance, a recipe is an algorithm for cooking a dish, and a mathematical formula is an algorithm for solving a problem. Properties of an Algorithm: Finiteness: An algorithm must terminate after a finite number of steps. Definiteness: Each step of an algorithm must be precisely defined; the actions to be carried out must be unambiguously specified for each case. Input: An algorithm has zero or more well-defined inputs. Output: An algorithm has one or more well-defined outputs, and should relate to the input. Effectiveness: All operations must be sufficiently basic that they can, in principle, be done exactly and in a finite amount of time by a person using pencil and paper. Asymptotic Notations for Running Time: Asymptotic notations are mathematical tools to express the time and space complexity of algorithms. They describe the behavior of the algorithm as the input size ($n$) grows. Big O Notation ($O$): Describes the upper bound of an algorithm's running time. It represents the slowest growth rate an algorithm can have. $T(n) = O(g(n))$ if there exist positive constants $c$ and $n_0$ such that $0 \le T(n) \le c \cdot g(n)$ for all $n \ge n_0$. Example: If an algorithm takes $3n^2 + 2n + 5$ operations, its complexity is $O(n^2)$. Omega Notation ($\Omega$): Describes the lower bound of an algorithm's running time. It represents the fastest growth rate an algorithm can have. $T(n) = \Omega(g(n))$ if there exist positive constants $c$ and $n_0$ such that $0 \le c \cdot g(n) \le T(n)$ for all $n \ge n_0$. Example: An algorithm with $T(n) = 3n^2 + 2n + 5$ has a complexity of $\Omega(n^2)$. Theta Notation ($\Theta$): Describes both the upper and lower bounds (tight bound) of an algorithm's running time. It represents the exact growth rate. $T(n) = \Theta(g(n))$ if there exist positive constants $c_1, c_2$ and $n_0$ such that $0 \le c_1 \cdot g(n) \le T(n) \le c_2 \cdot g(n)$ for all $n \ge n_0$. Example: An algorithm with $T(n) = 3n^2 + 2n + 5$ has a complexity of $\Theta(n^2)$. Unit II: Stacks and Expressions 3. (a) PUSH and POP Operations in Array-implemented Stack When a stack is implemented using an array, a variable (e.g., top ) keeps track of the topmost element. Initially, top = -1 for an empty stack. Algorithm for PUSH operation: Check if the stack is full ( top == MAX_SIZE - 1 , where MAX_SIZE is the maximum capacity of the array). If full, print "Stack Overflow" and exit. If not full, increment top ( top = top + 1 ). Place the new element at the new top position ( stack[top] = element ). Algorithm for POP operation: Check if the stack is empty ( top == -1 ). If empty, print "Stack Underflow" and exit. If not empty, retrieve the element at the top position ( element = stack[top] ). Decrement top ( top = top - 1 ). Return the retrieved element . 3. (b) Infix to Postfix Transformation using Stack Infix Expression: $A + (B * C –(D / E \uparrow F) * G )$ Rules for Infix to Postfix: Scan the infix expression from left to right. If an operand is encountered, append it to the postfix expression. If a '(' is encountered, push it onto the stack. If a ')' is encountered, pop all operators from the stack and append them to the postfix expression until a '(' is found. Discard both parentheses. If an operator is encountered: Pop operators from the stack and append them to the postfix expression as long as the stack is not empty, the top of the stack is not '(', and the operator at the top of the stack has higher or equal precedence than the current operator (for left-associative operators). Push the current operator onto the stack. After scanning the entire expression, pop any remaining operators from the stack and append them to the postfix expression. Precedence (Higher to Lower): $\uparrow$ (Exponentiation) $\rightarrow$ $*, /$ $\rightarrow$ $+, -$ Transformation Steps: Scanned Symbol Stack Postfix Expression A A + + A ( +( A B +( AB * +(* AB C +(* ABC - +(- ABC* ( +(-( ABC* D +(-( ABC*D / +(-(/ ABC*D E +(-(/ ABC*DE $\uparrow$ +(-(/$\uparrow$ ABC*DE F +(-(/$\uparrow$ ABC*DEF ) +(- ABC*DEF$\uparrow$/ * +(-* ABC*DEF$\uparrow$/ G +(-* ABC*DEF$\uparrow$/G ) + ABC*DEF$\uparrow$/G* - (End) ABC*DEF$\uparrow$/G*-+ Resulting Postfix Expression: $A B C * D E F \uparrow / G * - +$ 3. (c) Postfix Expression Evaluation Postfix Expression: $2, 3, 10, +, *, 8, 2, /,-$ Rules for Postfix Evaluation: Scan the postfix expression from left to right. If an operand is encountered, push it onto the stack. If an operator is encountered, pop the required number of operands (usually two) from the stack, perform the operation, and push the result back onto the stack. After scanning the entire expression, the final result will be the only element left on the stack. Evaluation Steps: Scanned Symbol Stack Operation/Comment 2 [2] Push 2 3 [2, 3] Push 3 10 [2, 3, 10] Push 10 + [2, 13] Pop 10, 3. $3+10=13$. Push 13. * [26] Pop 13, 2. $2*13=26$. Push 26. 8 [26, 8] Push 8 2 [26, 8, 2] Push 2 / [26, 4] Pop 2, 8. $8/2=4$. Push 4. - [22] Pop 4, 26. $26-4=22$. Push 22. Result: The final value of the expression is $22$. 4. (a) Stack Overflow in Linked Stack In a linked list implementation of a stack, new nodes are dynamically allocated from the heap memory. An "overflow" condition in an array-based stack occurs when the array is full and no more elements can be added. In a linked stack, there is no fixed size limit like an array. New nodes can be created as long as there is available memory in the heap. Therefore, an explicit "stack overflow" check (like `top == MAX_SIZE - 1`) is generally not implemented for a linked stack because it can theoretically grow as large as the available system memory. The only practical limit is when the system runs out of memory (heap exhaustion), which is a system-level error rather than a data structure-specific overflow condition. 4. (b) Stack Definition and Linearity Stack Definition: A stack is a linear data structure that follows the Last-In, First-Out (LIFO) principle. This means the element inserted last is the first one to be removed. It allows operations only at one end, called the "top" of the stack. The primary operations are PUSH (to add an element) and POP (to remove an element). Linear or Non-linear: A stack is a linear data structure. Even though it can be implemented using non-contiguous memory (like a linked list), the logical arrangement of elements is sequential or linear. Elements are added and removed in a specific linear order (LIFO), and each element has a unique predecessor and successor (except for the top and bottom elements). Non-linear data structures (like trees and graphs) represent hierarchical or networked relationships where elements can have multiple predecessors or successors. 4. (c) D-queues (Deque) D-queue (Double-Ended Queue or Deque): A deque is a linear data structure that allows insertion and deletion of elements from both ends (front and rear). It can be thought of as a generalized queue where elements can be added or removed from either the front or the back. The name "deque" is pronounced "deck" and is short for "double-ended queue". Operations: insert_front(element) : Adds an element to the front of the deque. insert_rear(element) : Adds an element to the rear of the deque. delete_front() : Removes an element from the front of the deque. delete_rear() : Removes an element from the rear of the deque. get_front() : Returns the front element without removing it. get_rear() : Returns the rear element without removing it. isEmpty() : Checks if the deque is empty. isFull() : Checks if the deque is full (for array-based implementation). Types of Deque: Input Restricted Deque: Allows insertion only at one end but allows deletion from both ends. Output Restricted Deque: Allows deletion only at one end but allows insertion from both ends. Example of Deque Operations: Initial Deque: Empty insert_rear(10) $\rightarrow$ Deque: $[10]$ insert_rear(20) $\rightarrow$ Deque: $[10, 20]$ insert_front(5) $\rightarrow$ Deque: $[5, 10, 20]$ delete_front() $\rightarrow$ Returns 5. Deque: $[10, 20]$ insert_front(3) $\rightarrow$ Deque: $[3, 10, 20]$ delete_rear() $\rightarrow$ Returns 20. Deque: $[3, 10]$ Unit III: Linked Lists and Trees 5. (a) Linked List vs. Array Linked List: Memory Allocation: Dynamic; nodes are allocated from heap memory as needed, not necessarily contiguous. Size: Dynamic; can grow or shrink at runtime. Access: Sequential access only; to access the $n$-th element, you must traverse from the beginning. Time complexity $O(n)$. Insertion/Deletion: Efficient; involves changing a few pointers. Time complexity $O(1)$ if the position is known, $O(n)$ if searching for the position. Memory Usage: More memory overhead due to pointers stored in each node. Utilization: Efficient memory utilization as memory is allocated only when required. Array: Memory Allocation: Static (for fixed-size arrays) or dynamic (for dynamically allocated arrays like using malloc ), but elements are always stored in contiguous memory locations. Size: Fixed size (for static arrays); cannot grow or shrink once declared. Dynamic arrays can be resized but often involve reallocating and copying. Access: Random access; any element can be accessed directly using its index. Time complexity $O(1)$. Insertion/Deletion: Inefficient; requires shifting elements. Time complexity $O(n)$. Memory Usage: Less memory overhead. Utilization: Can lead to memory wastage if not fully utilized, or overflow if capacity is exceeded. 5. (b) Adding a Node "Before" a Node and Counting Nodes in Doubly Linked List Doubly Linked List Node Structure: struct Node { int data; struct Node* prev; struct Node* next; }; Function to add a node 'before' a given node (assuming `head` is the start of the list): #include <stdio.h> #include <stdlib.h> struct Node { int data; struct Node* prev; struct Node* next; }; // Function to create a new node struct Node* createNode(int data) { struct Node* newNode = (struct Node*)malloc(sizeof(struct Node)); if (newNode == NULL) { printf("Memory allocation failed!\n"); exit(1); } newNode->data = data; newNode->prev = NULL; newNode->next = NULL; return newNode; } // Function to add a new node before a given existing node struct Node* addBefore(struct Node** head_ref, int newData, int beforeData) { struct Node* newNode = createNode(newData); if (*head_ref == NULL) { // If list is empty printf("List is empty. Adding as head.\n"); *head_ref = newNode; return *head_ref; } struct Node* current = *head_ref; while (current != NULL && current->data != beforeData) { current = current->next; } if (current == NULL) { // beforeData not found printf("Node with data %d not found. New node not added.\n", beforeData); free(newNode); return *head_ref; } // Found 'current' node, insert 'newNode' before it newNode->next = current; newNode->prev = current->prev; if (current->prev != NULL) { // If 'current' is not the head current->prev->next = newNode; } else { // If 'current' is the head, newNode becomes the new head *head_ref = newNode; } current->prev = newNode; return *head_ref; } // Function to count the number of nodes in a doubly linked list int countNodes(struct Node* head) { int count = 0; struct Node* current = head; while (current != NULL) { count++; current = current->next; } return count; } // Helper function to print the list (for verification) void displayList(struct Node* head) { struct Node* current = head; printf("Doubly Linked List: "); while (current != NULL) { printf("%d <-> ", current->data); current = current->next; } printf("NULL\n"); } /* int main() { struct Node* head = NULL; // Build a simple list: 10 <-> 20 <-> 30 head = createNode(10); head->next = createNode(20); head->next->prev = head; head->next->next = createNode(30); head->next->next->prev = head->next; displayList(head); printf("Number of nodes: %d\n", countNodes(head)); // Output: 3 // Add 15 before 20 addBefore(&head, 15, 20); displayList(head); // Output: 10 <-> 15 <-> 20 <-> 30 printf("Number of nodes: %d\n", countNodes(head)); // Output: 4 // Add 5 before 10 (new head) addBefore(&head, 5, 10); displayList(head); // Output: 5 <-> 10 <-> 15 <-> 20 <-> 30 printf("Number of nodes: %d\n", countNodes(head)); // Output: 5 // Add 35 before 40 (not found) addBefore(&head, 35, 40); displayList(head); // List remains unchanged printf("Number of nodes: %d\n", countNodes(head)); // Output: 5 // Free memory (important for linked lists) struct Node* temp; while (head != NULL) { temp = head; head = head->next; free(temp); } return 0; } */ Function to count the number of nodes: (Included in the code above as countNodes ) Initialize a counter to 0. Start from the head of the list. Traverse the list, incrementing the counter for each node encountered. Stop when the current node is NULL. Return the final count. 6. (a) Complete Binary Tree and Example Complete Binary Tree: A complete binary tree is a binary tree in which every level, except possibly the last, is completely filled, and all nodes in the last level are as far left as possible. It's a specific type of binary tree that is "almost complete", meaning it's filled level by level from left to right. All nodes must be as far left as possible. The depth of all leaves differs by at most 1. Example: 10 / \ 20 30 / \ / 40 50 60 In this example: Level 0: Node 10 (filled) Level 1: Nodes 20, 30 (filled) Level 2: Nodes 40, 50, 60 (last level, filled from left to right, no gaps). If node 60 were missing, it would still be complete. If node 40 were missing, it would not be complete because node 50 is not "as far left as possible". 6. (b) AVL Tree vs. Binary Search Tree and AVL Representation AVL Tree vs. Binary Search Tree (BST): Binary Search Tree (BST): A binary tree where for each node, all nodes in its left subtree have keys less than its own key, and all nodes in its right subtree have keys greater than its own key. Insertion, deletion, and search operations take $O(h)$ time, where $h$ is the height of the tree. In the worst case (e.g., insertion of elements in sorted order), a BST can degenerate into a skewed tree (like a linked list), leading to a height of $O(n)$ and thus $O(n)$ time complexity for operations. AVL Tree: An AVL tree is a self-balancing Binary Search Tree. It maintains the BST properties but adds a crucial balance condition: for every node in the tree, the height difference between its left and right subtrees (called the balance factor) must be at most 1 (i.e., $-1, 0,$ or $1$). If this balance condition is violated after an insertion or deletion, the tree performs rotations (single or double rotations) to rebalance itself. This self-balancing property guarantees that the height of an AVL tree is always $O(\log n)$, preventing it from degenerating into a skewed tree. Therefore, all operations (insertion, deletion, search) in an AVL tree always take $O(\log n)$ time. How AVL Trees are Represented in Memory: An AVL tree node typically requires additional storage compared to a basic BST node to maintain its balance. Each node in an AVL tree usually stores: Data/Key: The actual value stored in the node. Left Child Pointer: A pointer to its left child node. Right Child Pointer: A pointer to its right child node. Height (or Balance Factor): An integer value representing the height of the subtree rooted at this node, or its balance factor (height_left - height_right). This information is crucial for checking the balance condition and performing rotations. Example Node Structure: struct AVLNode { int data; struct AVLNode* left; struct AVLNode* right; int height; // Or int balanceFactor; }; The height field is often preferred as it can be easily calculated or updated and then used to derive the balance factor for any node. The height of a leaf node is 0 (or 1, depending on convention), and the height of an internal node is $1 + \max(\text{height of left child}, \text{height of right child})$. When an insertion or deletion causes a balance factor to become $\pm 2$, rotations are performed by adjusting these pointers and updating heights/balance factors to restore the AVL property. Unit IV: Sorting, Hashing, and Graphs 7. (a) Insertion Sort Algorithm Algorithm for Insertion Sort: Start from the second element (index 1) of the array. Consider this element as the `key`. Compare the `key` with elements to its left. If an element to the left is greater than the `key`, shift it one position to the right. Continue shifting elements to the right until an element smaller than or equal to the `key` is found, or the beginning of the array is reached. Insert the `key` into the correct position. Repeat steps 1-6 for all remaining unsorted elements. Sorting the list: $44, 33, 11, 55, 77, 90, 40, 60$ Pass Array State Comment Initial $[44, 33, 11, 55, 77, 90, 40, 60]$ Pass 1 $[33, 44, 11, 55, 77, 90, 40, 60]$ 33 is smaller than 44, insert 33 before 44. Pass 2 $[11, 33, 44, 55, 77, 90, 40, 60]$ 11 is smaller than 44, 33. Insert 11 at beginning. Pass 3 $[11, 33, 44, 55, 77, 90, 40, 60]$ 55 is greater than 44, already in place. Pass 4 $[11, 33, 44, 55, 77, 90, 40, 60]$ 77 is greater than 55, already in place. Pass 5 $[11, 33, 44, 55, 77, 90, 40, 60]$ 90 is greater than 77, already in place. Pass 6 $[11, 33, 40, 44, 55, 77, 90, 60]$ 40 is smaller than 90, 77, 55, 44. Insert 40 after 33. Pass 7 $[11, 33, 40, 44, 55, 60, 77, 90]$ 60 is smaller than 90, 77. Insert 60 after 55. Sorted List: $11, 33, 40, 44, 55, 60, 77, 90$ 7. (b) Hashing, Hash Functions, and Collision Resolution Hashing: Hashing is a technique used to map data of arbitrary size to fixed-size values. This process is often used to index and retrieve items in a database or to quickly locate stored data. It converts a given search key into an address or index in an array called a hash table. Hash Functions: A hash function is a mathematical function that takes a key as input and returns an integer value, which is the index (or hash value) in the hash table where the data associated with the key should be stored. A good hash function should: Be easy and quick to compute. Distribute keys uniformly across the hash table to minimize collisions. Two Common Hash Functions: Division Method: $h(k) = k \pmod M$, where $k$ is the key and $M$ is the size of the hash table. The remainder of the division of the key by the table size $M$ is used as the hash address. It is usually best to choose $M$ as a prime number not too close to a power of 2. Multiplication Method: $h(k) = \lfloor M \cdot (k \cdot A \pmod 1) \rfloor$, where $A$ is a constant between 0 and 1 (e.g., $A \approx 0.618$ for Knuth's method). This involves multiplying the key by a constant $A$, taking the fractional part of the result, multiplying by the table size $M$, and then taking the floor. This method works well with any value of $M$ and is often used when $M$ is a power of 2. Other methods: Mid-square, Folding, Digit analysis. Collision Resolution: A collision occurs when two different keys hash to the same index in the hash table. Since each slot can hold only one item, a strategy is needed to handle such situations. Two Main Techniques: 1. Open Addressing: When a collision occurs, the system probes for an alternative empty slot within the hash table itself. Linear Probing: Searches for the next available slot sequentially (e.g., $h(k)+1, h(k)+2, \dots \pmod M$). Suffers from primary clustering. Quadratic Probing: Searches for slots using a quadratic function (e.g., $h(k)+1^2, h(k)+2^2, \dots \pmod M$). Helps reduce primary clustering but can suffer from secondary clustering. 2. Separate Chaining: Each slot in the hash table is a pointer to the head of a linked list. When a collision occurs, the new element is simply added to the end of the linked list at the corresponding hash table index. This method avoids clustering problems but requires additional memory for pointers and can lead to performance degradation if lists become very long. 8. (a) C Program for Selection Sort Algorithm for Selection Sort: Find the minimum element in the unsorted part of the array. Swap the found minimum element with the element at the beginning of the unsorted part. Repeat steps 1 and 2 for the remaining unsorted part until the entire array is sorted. C Program: #include <stdio.h> void selectionSort(int arr[], int n) { int i, j, min_idx; // One by one move boundary of unsorted subarray for (i = 0; i < n - 1; i++) { // Find the minimum element in unsorted array min_idx = i; for (j = i + 1; j < n; j++) { if (arr[j] < arr[min_idx]) min_idx = j; } // Swap the found minimum element with the first element // of the unsorted subarray int temp = arr[min_idx]; arr[min_idx] = arr[i]; arr[i] = temp; } } void printArray(int arr[], int size) { int i; for (i = 0; i < size; i++) printf("%d ", arr[i]); printf("\n"); } int main() { int arr[] = {64, 25, 12, 22, 11}; int n = sizeof(arr) / sizeof(arr[0]); printf("Original array: "); printArray(arr, n); selectionSort(arr, n); printf("Sorted array: "); printArray(arr, n); return 0; } 8. (b) Graph Representations and DFS Algorithm Example Two Representations of a Graph: 1. Adjacency Matrix: A $V \times V$ matrix (where $V$ is the number of vertices) is used. Let the matrix be $A$. If there is an edge from vertex $i$ to vertex $j$, then $A[i][j] = 1$; otherwise, $A[i][j] = 0$. For a weighted graph, $A[i][j]$ can store the weight of the edge. Space Complexity: $O(V^2)$. Advantages: Checking if an edge exists between two vertices is $O(1)$. Adding/removing an edge is $O(1)$. Disadvantages: Inefficient for sparse graphs (many zeros). Finding all neighbors of a vertex takes $O(V)$ time. Example: For a graph with vertices A, B, C, D and edges (A,B), (A,C), (B,D), (C,D) A B C D A 0 1 1 0 B 1 0 0 1 C 1 0 0 1 D 0 1 1 0 2. Adjacency List: An array of linked lists is used. The size of the array is $V$. Each array index $i$ represents a vertex $V_i$. The linked list at $A[i]$ contains all vertices adjacent to $V_i$. For a weighted graph, each node in the linked list can store both the adjacent vertex and the edge weight. Space Complexity: $O(V+E)$, where $E$ is the number of edges. Advantages: Efficient for sparse graphs (saves space). Finding all neighbors of a vertex takes $O(k)$ where $k$ is the degree of the vertex. Disadvantages: Checking if an edge exists between two vertices takes $O(k)$ time. Example: For the same graph as above: A: -> B -> C B: -> A -> D C: -> A -> D D: -> B -> C Algorithm for Depth-First Search (DFS): DFS explores as far as possible along each branch before backtracking. It uses a stack (explicitly or implicitly via recursion). Steps: Start DFS from a given source vertex $S$. Mark $S$ as visited and push it onto a stack. While the stack is not empty: Pop a vertex $V$ from the stack. Process $V$ (e.g., print it). For each unvisited neighbor $U$ of $V$: Mark $U$ as visited. Push $U$ onto the stack. If the graph is disconnected, repeat the process for any unvisited vertices. Demonstrate DFS using an example: Consider the following undirected graph: A --- B | | C --- D Vertices: A, B, C, D Edges: (A,B), (A,C), (B,D), (C,D) Let's start DFS from vertex A. Initialization: Stack = [], Visited = {} Step-by-step trace: Push A onto stack. Mark A visited. Stack = [A], Visited = {A} Pop A. Print A. Neighbors of A: B, C. B is unvisited. Mark B visited, Push B. Stack = [B], Visited = {A, B} C is unvisited. Mark C visited, Push C. Stack = [B, C], Visited = {A, B, C} Pop C. Print C. Neighbors of C: A, D. A is visited. D is unvisited. Mark D visited, Push D. Stack = [B, D], Visited = {A, B, C, D} Pop D. Print D. Neighbors of D: B, C. B is visited. C is visited. Pop B. Print B. Neighbors of B: A, D. A is visited. D is visited. Stack is empty. DFS complete. DFS Traversal Order: A $\rightarrow$ C $\rightarrow$ D $\rightarrow$ B (This order can vary depending on the order of neighbors in adjacency list/matrix).